Facebook fails to curb coronavirus misinformation

As Mark Zuckerberg this week detailed the results of Facebook’s latest Community Standards Enforcement Report, he revealed that the platform is being inundated with coronavirus misinformation and disinformation, and that the company has been struggling to stop it.

Zuckerberg, who said he is proud of the work Facebook’s content moderation teams have done, also acknowledged that it’s not stopping enough Covid-19 misinformation.

“Our effectiveness has certainly been impacted by having less human review during Covid-19, and we do unfortunately expect to make more mistakes until we’re able to ramp everything back up,” he said.

The report, released Tuesday, says improvements in the company’s machine-learning systems helped Facebook remove 68 percent more hate speech posts in the first three months of 2020 than it did from October to December 2019. These same systems are responsible for detecting 90 percent of hate speech posts before they’re reported by Facebook users, the company claims. Between the company’s human moderators and automated systems, Facebook says it acted on 9.6 million pieces of hateful content in the first quarter of 2020, up from 5.7 million in the fourth quarter of 2019.

On Instagram, the detection rate increased from 57.6 percent in the fourth quarter of 2019 to 68.9 percent in the first quarter of 2020, with 175,000 pieces of content removed—35,200 more than the previous quarter.

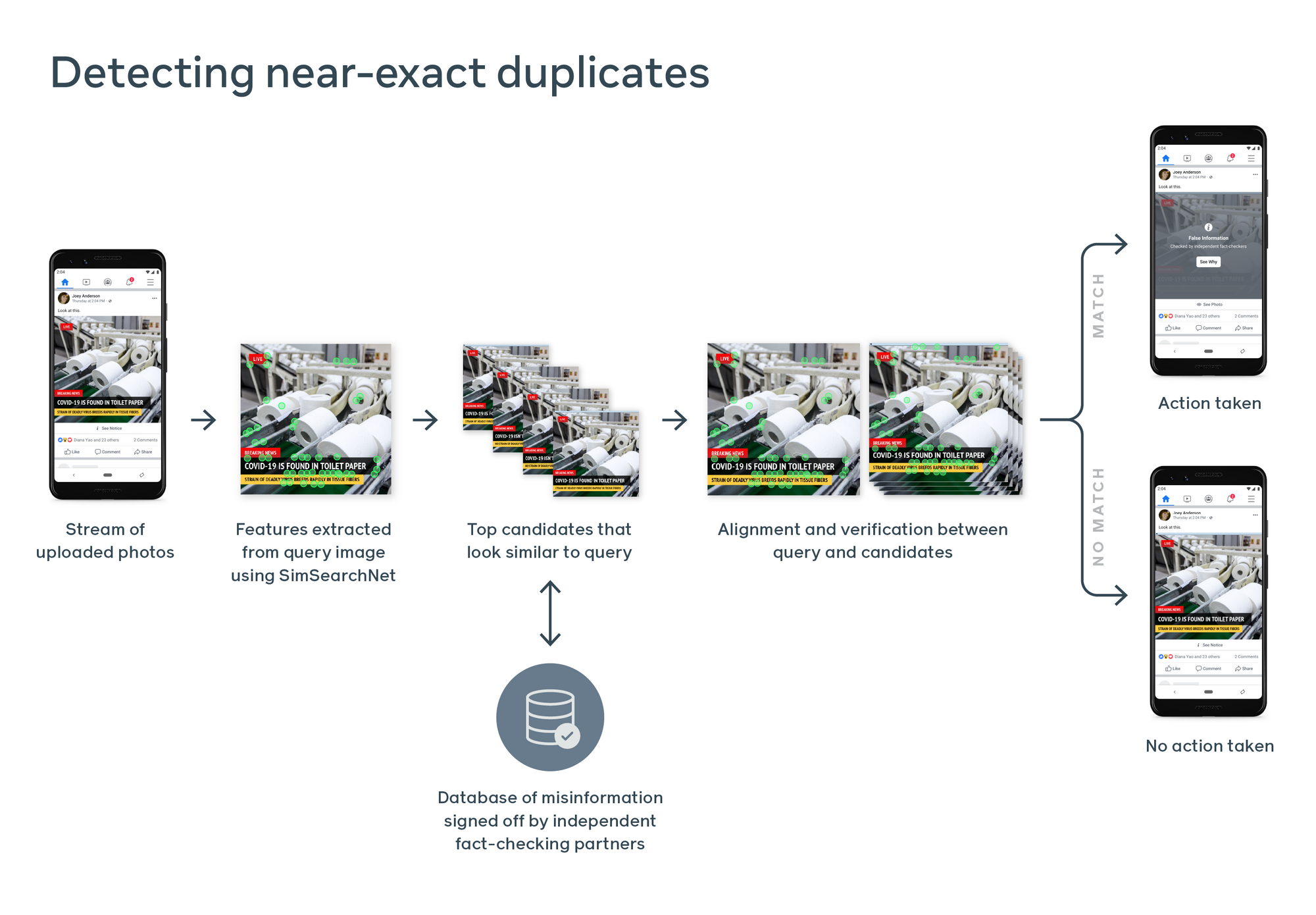

The company also published a separate blog post detailing how it has handled Covid-19 misinformation thus far: In April, it placed warning labels on 50 million pieces of content, based on 7,500 articles by 60 independent fact-checking organizations; and since March 1, Facebook has removed more than 2.5 million pieces of content for attempting to fraudulently sell face masks, hand sanitizer, disinfecting wipes, and Covid-19 test kits.

This story was originally commissioned by Dark Reading.