Multi-single-tenant architectures in cloud

Automated pet herding for fun and profit.

(source: O'Reilly)

Okay. It’s 2020. Cloud is king. Everything’s web-scale. You want to architect and build a massive platform to provide services for thousands, if not millions, of users. Maybe you already have one, and want to understand or manage it better. Where do you start?

There are a lot of ways of thinking about systems. This article is not concerned with how REST-ful your APIs are, or which consensus algorithm you choose, or whose database paradigm best fits your need. This article cares about getting the job done: about achieving your users’ needs. So let’s take a step back from the technical specifics, and step up to the next level of abstraction.

Let’s talk about birds[1].

Fine, okay, we’re doing birds now. Where do we start?

In the world of user-centric architecture philosophies, the simplest architecture of all is the single-tenant system. By focusing on one use case, you can build a system so elaborately tailored that it perfectly fits the user’s every need. They want a 10-second cache timeout? Done! Perhaps it can only schedule on machines with an odd number of disks? Why not!

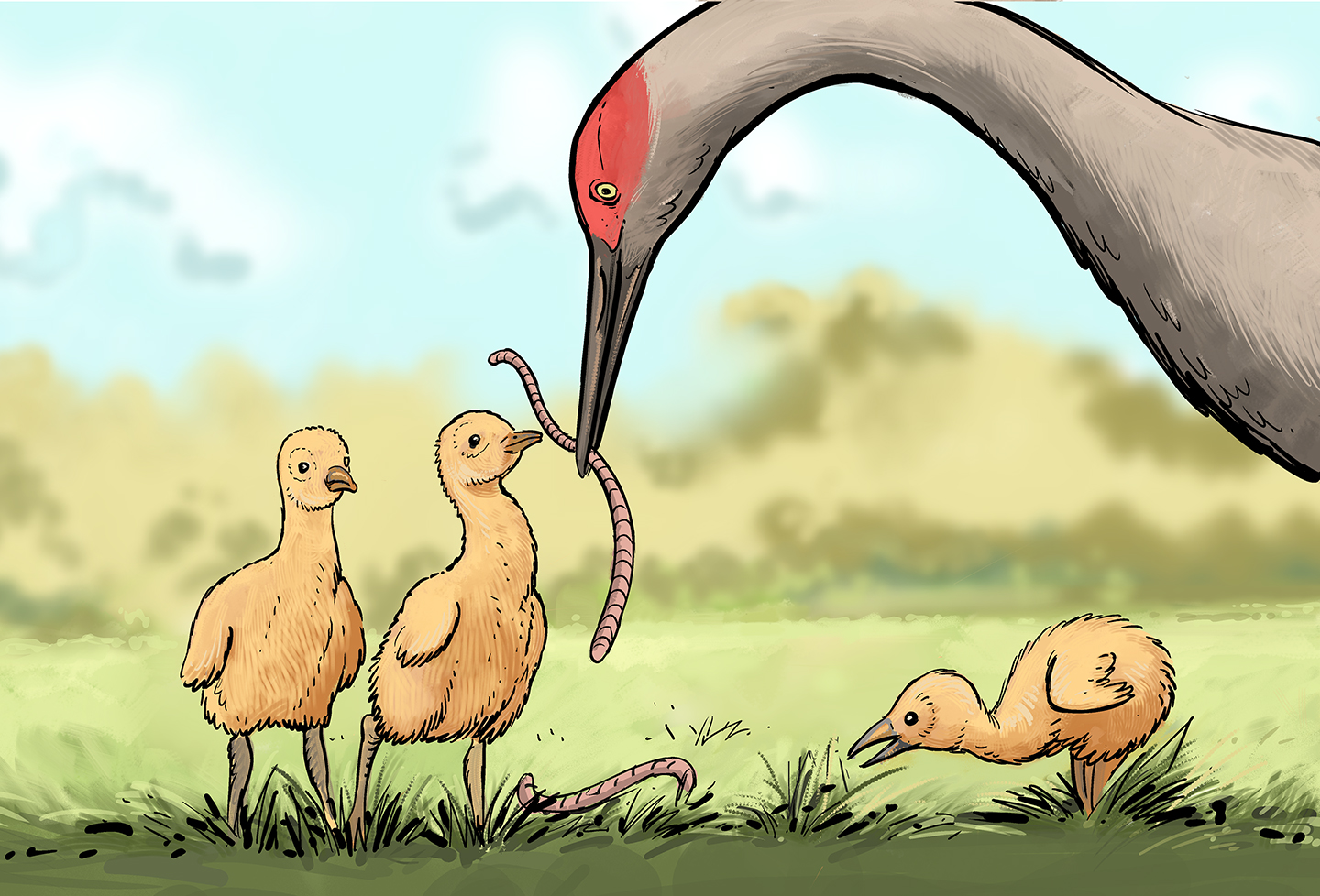

This bird might be extremely funny looking, but it’s going to do exactly what your user needs.

If your end-goal is to serve the needs of one “user”—be that by serving as back end to one system, or as one company’s mailserver, or any other specialized single use—you are extremely happy with this bird. Awesome! You can stop reading.

(Or continue, out of curiosity or the urge to laugh at your less-fortunate colleagues.)

What if I have more than one user?

Now we’re starting to get into large-scale computing territory.

Since 2016, O’Reilly and Google SRE have spoken extensively about ways to build reliable, scalable systems to serve large numbers of users. Much of the advice focuses on multitenant systems, stacks built to handle vast numbers of users quickly. These work, in general, by constraining use cases in the name of uniformity, and by building in both security and isolation. Your search query can be handled by any one of dozens if not thousands of fundamentally identical servers, each of which is handling hundreds of other users at once.

If a server fails, like one crane falling from a flock, it is quickly replaced by another. No one notices.

Along comes cloud

Of course, little is actually that simple.

These days, there are a lot of excellent open source or vendor-provided systems that can solve problems for your stack—technologies like MySQL, or Spark, or Kubernetes—and can be tweaked or customized or configured to run perfectly for your one, single need.

But what happens when you need to run more than one of these? Say, if you wanted to become a provider of this service to others?

In our extended, avian metaphor: you have built up an aviary full of exotic, hyper-specialized single-tenant bird-systems.

Now you have to work out how to keep them healthy.

MST: A better way to host

“Multi-single-tenant architecture” is a slightly tongue-in-cheek name (coined by Google Fellow Eric Brewer) for a pattern where a single system runs multiple, individually-provisioned instances to serve the needs of its users.

A great example here is hosted Kubernetes. With Google Kubernetes Engine (GKE), each individual user (or, in practice, each instance for each user) gets its own, separate Kubernetes cluster, running in its own security domain on its own virtual machines (VMs). Another example is an internal system, such as a business intelligence platform, that you wish to bring out as a product externally. For the safety of each user’s data, it’s easier to provide isolation by hosting separate copies than it is to re-architect security boundaries into the system itself.

Why?

Well, many of these systems are highly configurable, and their functionality can be customized to the point that you can’t handle all the use cases in a single deployment. Converting a system that assumes single-tenancy (as, for example, open source software almost always does) into one capable of supporting multitenant use is tricky; you need to add the concept of a tenant, and tenant identification, which requires API changes that break backward compatibility. A good multitenant solution requires strict isolation, security, redundancy, access control, and any number of system-specific concerns. And this conversion work has to be done for every one of the many open source packages and systems you might wish to host.

What can you do instead? There’s a way to work around the single-tenant nature of these systems without a full conversion: multi-single tenancy (MST). As an architecture design, hosting multiple instances of a system retains the cleaner isolation and higher configurability of single-tenancy, but the cost is that these systems can be fragile and hard to operate. Your aviary must cater to both parrots and penguins.

What to do?

A lot of the standard wisdom for running reliably at scale—establish SLIs and SLOs, make sure changes can be undone—still applies in the multi-single-tenant world. But some of it doesn’t. How can you create generic redundancy when each instance is specifically configured and populated? How can you monitor in aggregate when even one dead instance might mean a user is completely out of commission?
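To make the aggregate-monitoring problem concrete, here is a minimal sketch in Python. The `InstanceStats` type, the tenant names, and the 99% target are all invented for illustration; nothing here reflects how any particular monitoring system works. It only shows that a fleet-wide number can look fine while one user is completely down.

```python
from dataclasses import dataclass


@dataclass
class InstanceStats:
    """Hypothetical per-instance request counters for one tenant."""
    tenant: str
    ok_requests: int
    total_requests: int

    @property
    def availability(self) -> float:
        # Assumption: an instance that received no traffic counts as available.
        if self.total_requests == 0:
            return 1.0
        return self.ok_requests / self.total_requests


def aggregate_availability(fleet):
    """Fleet-wide availability: the number a multitenant dashboard would show."""
    total = sum(i.total_requests for i in fleet)
    ok = sum(i.ok_requests for i in fleet)
    return ok / total if total else 1.0


def tenants_below_slo(fleet, slo):
    """Per-instance view: every tenant whose own instance misses the target."""
    return [i.tenant for i in fleet if i.availability < slo]


# 99 healthy instances and one that fails every request.
fleet = [InstanceStats(f"tenant-{n}", 1000, 1000) for n in range(99)]
fleet.append(InstanceStats("tenant-99", 0, 1000))

print(f"aggregate availability: {aggregate_availability(fleet):.2f}")
print("tenants below a 99% target:", tenants_below_slo(fleet, slo=0.99))
```

The aggregate comes out at 99%, which would satisfy a 99% fleet-wide target, yet `tenant-99` has lost everything. In an MST world the per-instance list is the one worth alerting on.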

Here are a few approaches we’ve used at Google to wrangle our divergent aerial flocks into something a little more manageable.

Automate healing of instances

First things first: in MST, you can’t just let sick instances die as you might with a multitenant system, because each individual instance is precious to its user. But that doesn’t mean you have to heal them by hand.

Pay attention to the diagnostic flow your support personnel follow. Can any of the root causes be identified by automation? Can the “one-off” fixes be run by robots? You may need to act like veterinarians to your flock, but you can reduce the number of cases that require in-depth debugging by running problems through automation—your robotic vet—first.

And consider investing in prophylactics. If your system’s database has a habit of getting stuck every now and then, why not run a script that reboots it every 12 hours? It’s crude, but effective at scale, and cheap to implement.
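One way to picture the robotic vet is as a table of known symptoms paired with known fixes, with anything unrecognized handed to a human. The sketch below is a toy under stated assumptions: the symptom names (`db_stuck`, `disk_full`), the `Remedy` type, and the fix functions are hypothetical, and a real fix would call into your own tooling rather than append to a list.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Instance:
    """Hypothetical single-tenant instance with its detected symptoms."""
    name: str
    symptoms: set = field(default_factory=set)
    log: list = field(default_factory=list)


@dataclass
class Remedy:
    """A known root cause paired with the automated fix for it."""
    name: str
    matches: Callable[[Instance], bool]
    apply: Callable[[Instance], None]


def restart_database(inst: Instance) -> None:
    # Stand-in for a real restart; here we only record the action.
    inst.log.append("restarted database")
    inst.symptoms.discard("db_stuck")


def rotate_logs(inst: Instance) -> None:
    inst.log.append("rotated logs")
    inst.symptoms.discard("disk_full")


REMEDIES = [
    Remedy("restart-db", lambda i: "db_stuck" in i.symptoms, restart_database),
    Remedy("rotate-logs", lambda i: "disk_full" in i.symptoms, rotate_logs),
]


def triage(inst: Instance) -> str:
    """Apply every matching remedy, then escalate if symptoms remain."""
    for remedy in REMEDIES:
        if remedy.matches(inst):
            remedy.apply(inst)
    return "healthy" if not inst.symptoms else "escalate"


known = Instance("tenant-a", {"db_stuck"})
mixed = Instance("tenant-b", {"db_stuck", "unknown_crash"})
print(known.name, triage(known), known.log)
print(mixed.name, triage(mixed), mixed.log)
```

The useful property is that the `escalate` bucket shrinks over time: each one-off fix your support personnel repeat often enough becomes another entry in `REMEDIES`.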

Homogenize your fleet

You’re running large numbers of single-tenant instances, but “single-tenant” doesn’t have to mean “unique.”

With some careful thought, you can identify patterns of usage and configuration among your user base, and work to bring the majority of your instances in line with a smaller number of “blueprints.” This may mean locking down customers to a few “types” of deployment—the high-traffic webserver Kubernetes cluster looks quite different from the data-processing high-storage five-new-pods-a-minute Kubernetes cluster, for instance—while allowing enough flexibility to serve power users. Ask where your problem areas live and focus on standardizing these first. And get into the habit of asking, “does this need to be configurable?” of new options as they’re added.

Why? Well, if you have dozens or hundreds of instances with very similar configurations, you start being able to write tests for classes of users, rather than for each user alone. You can also start to perform canary analysis by upgrading a subset of instances to catch failures or regressions before they affect the majority in that class. You open the door to more widely applicable tools, given the increased commonality between instances, and reduce the number of “special cases” your operators must handle.
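As a rough illustration of canarying by class, the sketch below groups instances by blueprint and picks a small subset of each group to upgrade first. The blueprint names, the instance names, and the "one in ten" ratio are assumptions made for the example; picking the first names in sorted order is only there to keep the output predictable, and a real rollout would likely weigh risk or pick at random.

```python
from collections import defaultdict


def group_by_blueprint(instances):
    """Map each blueprint name to the instances that follow it."""
    groups = defaultdict(list)
    for name, blueprint in instances:
        groups[blueprint].append(name)
    return groups


def pick_canaries(instances, one_in=10):
    """Choose roughly one canary per `one_in` instances, at least one per blueprint."""
    canaries = {}
    for blueprint, names in group_by_blueprint(instances).items():
        count = max(1, len(names) // one_in)
        canaries[blueprint] = sorted(names)[:count]
    return canaries


# Hypothetical fleet: 20 web-serving clusters and 3 batch-processing clusters.
fleet = [(f"web-{n:02d}", "high-traffic-web") for n in range(20)]
fleet += [(f"batch-{n}", "data-processing") for n in range(3)]

for blueprint, names in pick_canaries(fleet).items():
    print(blueprint, "->", names)
```

Because every blueprint gets at least one canary, a regression that only affects the rarer class of deployment still has a chance to surface before the wider upgrade.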

Isolate pieces that you can turn into services

This is perhaps the most invasive piece of advice; as ever, please perform your own cost-benefit analysis. Having said that: many single-tenant systems, when run alongside others of their ilk, have portions of their flow that could benefit from economies of scale. For example, you will likely run a multitenant control plane to instantiate new single-tenant instances. You may perform authentication, or store data, in a centralized system (or several centralized systems; microservices are very composable in this situation) while still preserving the isolation and independence of the more single-user-critical pieces of your single-tenant system.

Although it may be too expensive to modify the entirety of your system to handle multiple users at once in true multitenancy, don’t underestimate the utility of fragmentation. A system with well-defined sub-components and interaction boundaries is easier to test, less vulnerable to small bugs spiraling into catastrophic system failure, and the first step toward reducing the number of things you need to run uniquely per user.
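The split between shared and per-user pieces might look something like the following sketch: a single multitenant control plane that handles authentication and provisioning, in front of instances that never share state. The `ControlPlane` and `TenantInstance` names are invented here, and the plain-text token lookup is a deliberate simplification that stands in for a real authentication service.

```python
from dataclasses import dataclass, field


@dataclass
class TenantInstance:
    """One isolated single-tenant instance; its data is never shared."""
    tenant: str
    config: dict
    data: dict = field(default_factory=dict)


class ControlPlane:
    """Hypothetical multitenant front door for many single-tenant instances."""

    def __init__(self):
        self._tokens = {}     # shared service: token -> tenant name
        self._instances = {}  # tenant name -> that tenant's own instance

    def provision(self, tenant, token, config):
        """Create a fresh instance for a tenant and register its token."""
        if tenant in self._instances:
            raise ValueError(f"{tenant} already has an instance")
        self._tokens[token] = tenant
        self._instances[tenant] = TenantInstance(tenant, dict(config))
        return self._instances[tenant]

    def route(self, token):
        """Authenticate once, centrally, then hand back the caller's own instance."""
        tenant = self._tokens.get(token)
        if tenant is None:
            raise PermissionError("unknown token")
        return self._instances[tenant]


plane = ControlPlane()
plane.provision("parrot", "token-p", {"cache_timeout_s": 10})
plane.provision("penguin", "token-q", {"cache_timeout_s": 60})

plane.route("token-p").data["report"] = "parrot-only"
print(plane.route("token-p").data)
print(plane.route("token-q").data)
```

Only the token table and the provisioning logic are shared; each tenant's configuration and data stay inside its own instance, which is the isolation MST is meant to preserve.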

Conclusion

Once you reach the point of running more than a handful of similar single-tenant systems, the aggregate flock takes on its own momentum with its own rules. Running a group of individual systems will always be rockier than running one multitenant system because of the delicate care and feeding involved. Use these tactics to make your life (and your users’ lives) smoother and more reliable: automate your healing, restrict individuality to only what’s needed, and build in multitenancy wherever it makes sense. Then you, too, can reduce your outages and keep your birds happily flapping along!

This post is a collaboration between O’Reilly and Google. See our statement of editorial independence.