We’re just three weeks away from Strata 2019 in New York. Here’s what you can expect at this four day event.

When and where?

Strata 2019 takes place between September 23rd and September 26th, 2019 at the Jacob K. Javits Convention Center (aka “Javits Center”) on 11th Avenue in Manhattan. It’s a huge convention center containing many halls and breakaway rooms.

SQream participates as an exhibitor at Strata for the second time this year.

Getting to the Javits Center

Your safest bet is to walk or take the subway close-by.

The closest subway station is 34th Street-Hudson Yards Subway Station (if this is your first time in New York, pay attention to the station names – there are several “34th street” stations).

If you are staying far away, you can also take a cab or ride-share, but during peak hours the amount of people showing up is immense and you may get stuck in traffic somewhat far away.

Peak hours

While Strata officially opens on September 23rd, the expo hall doesn’t open until Tuesday, the 24th in the evening.

The expo hall is open on these days:

- Tuesday, September 24 – 5:00pm–6:30pm

- Wednesday, September 25 – 10:30am–7:30pm (busiest time – 6:00pm-7:00pm)

- Thursday, September 26 – 10:30am–16:30pm (busiest time – 2:00pm-3:00pm)

The party

You don’t want to miss the Data After Dark event, which is a fun party sponsored by some of the show’s vendors. You can expect many (free!) food stalls, drinks, delicious appetizers, music, and activities like VR games, carnival-style games, and more. It’s the peak of the event, and is super fun even if you’re alone!

This year, the party is at the Slate NYC nightclub, at 54 West 21st Street.

Theme

This year’s theme for Strata is Data fuels the future. It’s all about getting ahead on modern data techniques to remain competitive in your business.

While previous years focused on specific types of data infrastructures, Strata has become a wider event. When you participate, you can expect to learn how people like yourself handle the difficult challenge of storing, navigating, and turning huge data stores into actionable insights. Companies exhibiting usually showcase how they succeed in unlocking the intelligence gems hidden in these treasure troves of data they hold, and how it positions them better-suited to compete in an increasingly cluttered and competitive market.

What about Hadoop?

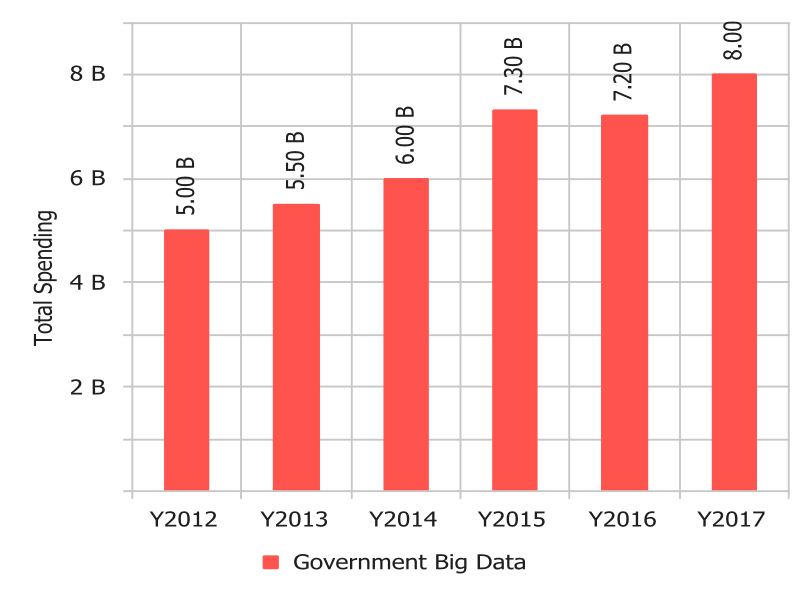

In previous years, Strata was strongly focused on Hadoop. As far back as 2017, Hadoop has been less important, primarily as many companies realized they can’t rely exclusively on Hadoop. Expect to see many complementary solutions that offer an edge over a vanilla Hadoop implementation!

SQream at Strata

Visit SQream at booth 1354 for special and exciting giveaways and live shows (!) focused around unlocking insights from Hadoop data.

SQream at Strata 2019

See you there!

Top 15 Interesting Talks

Here are some talks we think are super interesting

Architecting a data platform for enterprise use

1:30pm-5:00pm, Sep 24 / 1E 09

Building a data lake involves more than installing Hadoop or putting data into AWS. The goal in most organizations is to build a multiuse data infrastructure that isn’t subject to past constraints. Mark Madsen and Todd Walter explore design assumptions and principles and walk you through a reference architecture to use as you work to unify your analytics infrastructure.

It’s not you; it’s your database: How to unlock the full potential of your operational data (sponsored by MemSQL)

10:20am-10:25am, Sep 25 / 3E

Data is now the world’s most valuable resource — with winners and losers decided every day by how well we collect, analyze, and act on data. However, most companies are struggling to unlock the full value of their data. Why? Outdated, outmoded data infrastructure.

The future of Hadoop in an era of exponentially growing data

5:25pm–6:05pm Wednesday, Sep 25 / 1A 04/05

For a while, Hadoop was delivering on its promise to enable data scientists and BI professionals. But what started out as an asset has become a limiting and poorly-performing problem. With the exponential growth of data, Hadoop’s expensive nodes have become strained, and the complex data architecture built around Hadoop struggles to bring business insights. Often, querying such massive data stores is simply infeasible.

This session will explore:

- How to deal with the exponential growth of data in a Hadoop-based infrastructure

- Why data preparation takes so much time – and how to reduce it

- How insights can be generated in a timely manner from raw data

Building a fast, scalable, efficient operational analytics and reporting application using MemSQL, Docker, Airflow, and Prometheus (sponsored by MemSQL)

11:20am-12:00pm, Sep 25 / 1A 04/05

Learn how Akamai uses MemSQL, Docker, Airflow, Prometheus, and other technologies as an enabler to streamline and accelerate data ingestion and calculation to generate usage metrics for billing, reporting, and analytics at massive scale.

Architecting a Data Analytics Service both in the Public Cloud and in the On-Premise Private Cloud: ETL, BI, and machine learning (Sponsored by SK Holdings)

2:55pm-3:35pm, Sep 25 / 1A 04/05

SK Holdings announced a data analytics platform in the cloud in the name of Accuinsight+ with eight data analytic services in January 2019 in the CloudZ, which is one of the biggest cloud service providers in Korea.

Time travel for data pipelines: Solving the mystery of what changed

2:55pm-3:35pm, Sep 25 / 1E 07/08

Imagine a business insight showing a sudden spike.Debugging data pipelines is non-trivial and finding the root cause can take hours or even days! We’ll share how Intuit built a self-serve tool that automatically discovers data pipeline lineage and tracks every change that impacts pipeline.This helps debug pipeline issues in minutes–establishing trust in data while improving developer productivity.

Solve tomorrow’s business challenges with a modern data warehouse (sponsored by Matillion)

4:35pm-5:15pm, Sep 25 / 1E 17

According to Forrester, insight-driven companies are on pace to make $1.8 trillion annually by 2021. How fast can your team collect, process, and analyze data to help solve present — and future — business challenges? This session shares actionable tips and lessons learned from cloud data warehouse modernizations at companies like DocuSign and others that you can take back to your business.

The case for a common metadata layer for machine learning platforms

4:35pm-5:15pm, Sep 25 / 1A 23/24

Machine Learning Platforms being built are becoming more complex with different components each producing their own metadata. Currently, most components provide their own way of storing metadata. In this talk, we propose a first draft of a common Metadata API and demo a first implementation of this API in Kubeflow using ArangoDB, which is a native multi-model database.

Performant time series data management and analytics with Postgres

11:20am-12:00pm, Sep 26 / 1A 15/16

Leveraging polyglot solutions for your time-series data can lead to a variety of issues including engineering complexity, operational challenges, and even referential integrity concerns. By re-engineering Postgres to serve as a general data platform, your high-volume time-series workloads will be better streamlined, resulting in more actionable data and greater ease of use.

Where’s my lookup table? Modeling relational data in a denormalized world

11:20am-12:00pm, Sep 26 / 1E 09

Data has always been relational, and it always will be. NoSQL databases are gaining in popularity, but that does not change the fact that the data they manage is still relational, it just changes how we have to model the data. This session dives deep into how real Entity Relationship Models can be efficiently modeled in a denormalized manner using schema examples from real application services.

Scaling Apache Spark at Facebook

1:15pm-1:55pm, Sep 26 / 1A 06/07

Apache Spark is the largest compute engine at Facebook by CPU. This talk will cover the story of how we optimized, tuned and scaled Apache Spark at Facebook to run on clusters of tens of thousands of machines, processing hundreds of petabytes of data, and used by thousands of data scientists, engineers and product analysts every day.

Executive Briefing: Building a culture of self-service, from predeployment to continued engagement

3:45pm-4:25pm, Sep 26 / 1E 10/11

GE Aviation has made it a mission to implement Self-Service Data. To ensure success beyond initial implementation of tools, the Data Engineering and Analytics teams at GE Aviation created initiatives designed to foster engagement from an ongoing partnership with each part of the business to the gamification of tagging data in a data catalog to forming a Published Dataset Council.

SK Telecom’s 5G network monitoring and 3D visualization on streaming technologies

3:45pm-4:25pm, Sep 26 / 1A 15/16

Architecture and lessons learned from development of T-CORE, SK Telecom’s monitoring and service analytics platform, which collects system and application data from several thousand servers and applications and provides 3D visualized real-time status of the whole network and services for the operators and analytics platform for data scientists, engineers and developers.

Alexa, do men talk too much?

5:25pm-6:05pm, Sep 25 / 3B – Expo Hall

Mansplaining. Know it? Hate it? Want to make it go away? In this session we tackle the chronic problem of men talking over or down to women and its negative impact on career progression for women. We will also demonstrate an Alexa skill that uses deep learning techniques on incoming audio feeds. We discuss ownership of the problem for both women and men, and suggest helpful strategies.

T-Mobile’s journey to turn crowdsourced big data into actionable insights

2:05pm-2:45pm, Sep 26 / 1E 12/13

T-Mobile successfully improved the quality of voice calling by analyzing crowd sourced big data from mobile devices. In this session, you will learn how engineers from multiple backgrounds collaborated to achieve 10% improvement in voice quality and why the analysis of big data was the key to the success in bringing a better voice call service quality to millions of end users.