Highlights of 2018 in brief

Experts have different points of view on whether 2018 was rich in important achievements and events.Machine learning and data science advisor Oleksandr Khryplyvenko notes that 2018 wasn’t as full of memorable breakthroughs for the industry, unlike previous years. No recent achievements can compete with inventions of a multilayer perceptron (MLP), neural net training techniques like backpropagation and backpropagation through time (BPTT), residual networks, the introduction of Generative Adversarial Networks (GANs), and deep Q-learning networks (DQN). ”So, looking back to memorable ones I listed before, there weren't 'brand new' accomplishments in 2018,” summarizes Oleksandr.

BetConstruct's head of data science Dmytro Fedyukov thinks that the release of the new version of the PyTorch machine learning framework for deep neural networks contributes to the unification of modeling, deployment, and debugging with a huge set of third-party libraries that speed up building complex data science pipelines. However, generally not much has changed: “There aren’t advances in algorithmics, at least in the areas in which we’re working — table data, fraud detection, scoring, and computer vision. For our production tasks, we mainly use models and methods that were known at least 3-4 years ago with adding tricks for benchmark optimization, stacking or dynamic model selection. The main peculiarity is about optimized libraries allowing to do the job using minimum resources. In other words, the shift towards minimization of resource use is the most interesting for us because we deal with real-time modeling.”

Not all data scientists are skeptical about 2018 advancements as they mention developments and news in several fields.

Jérôme Louradour, a computer research scientist at Wolfram Research, notes ongoing progress in deep learning for computer vision, natural language processing (NLP), and audio signal processing. The expert’s hot topic list includes GANs, reinforcement learning, the use of invertible neural networks (INNs) for generative modeling, as well as connectivity patterns in neural networks architectures, in particular, attention-based Transformers that overtook recurrent neural networks in natural language processing. Although these developments have been going on over the last four years, the expert notes that significant advances were made in 2018. “It’s tricky to make GANs work, and 2018 brought a bag of new tricks to extend their applicability to real problems.”

“There were quite a lot of significant improvements in ML in 2018,” says data science competence leader at AltexSoft Alexander Konduforov. “First of all, after getting great results mostly in computer vision and sound recognition in recent years, this year, deep learning researchers got another breakthrough in natural language processing.”

Principal data scientist at Booking.com Lucas Bernardi admitted it’s hard for him to say which of the events was the most important because “so much happened this year.” In particular the specialist mentioned the NLP field news — the BERT language representation model, which we’ll discuss in more detail below. “I also liked One pixel attack for fooling deep neural networks and Adversarial Reprogramming of Neural Networks, they show how much work is still to be done towards robust machine learning. I also got the feeling that more and more people are thinking about Machine Learning, AI and Causality, I like this article from Judea Pearl Theoretical Impediments to Machine Learning With Seven Sparks from the Causal Revolution, I truly believe lots of breakthroughs in AI will come from Causality related fields,” shares Lucas.

Now let’s take a closer look at accomplishments, problems, and trends for DS and AI.

Data science projects: shift from pure research to production-oriented work

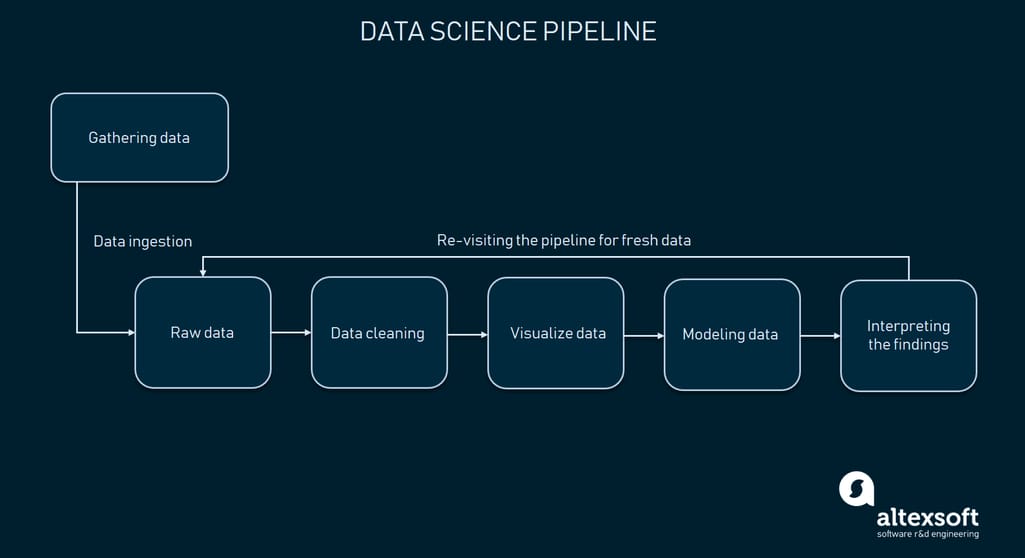

Head of data science at BetConstruct Dmytro Fedyukov notes a more precise transition of data science projects and processes from research- to production-orientation: “We start to put more effort into building data science pipelines. We build and deliver them to working production offers automatically.”A data science pipeline is a set of processes for automating data collection, preparation, visualization, modeling, and interpretation (review) to answer business questions or get informed conclusions. Data pipeline is beneficial for organizations that generate, store (also in cloud), and use high volumes of data or multiple data sources, as well as those requiring real-time or complex data analysis.

A typical data science pipeline. Picture template: Medium

“What has affected us greatly over the past year is the step towards unification when it comes to working with models, their deployment, and debugging. The reason for this change is a release of PyTorch 1.0 and a great promotion of ONNX (Open Neural Network Exchange Format) regarding that model developed in one deep learning framework can be reused in other frameworks,” the expert shares.What does that mean for businesses? As ONNX allows data scientists to move models between frameworks more easily, specialists can put their developments into production faster. “So, it’s not the state-of-the-art that motivates businesses to use data science more but the standardized approach to machine learning model building.”

Dmytro also notes that the meaning of the term research and development (R&D) has changed slightly. Development these days is about the effective delivery of models on production, including deployment and operation. “That means that data scientists should be more of programmers,” concludes the data scientist.

AutoML: automating simple machine learning tasks

While the number of “We’re hiring” posts from tech companies, other businesses, and government agencies increases, fewer specialists are available to fill the new vacancies. And machine learning tasks still must be performed, at least the routine ones. Vendors introduced tools for automating machine learning workflows (autoML) to solve this problem. While MLaaS solutions help novice data scientists and developers solve more complex and typical problems, autoML tools make it possible for users without ML expertise like analysts or software engineers to complete custom and relatively simple tasks.These may be data preparation (feature engineering, feature extraction, and feature selection) and modeling tasks, for instance, algorithm selection, hyperparameter tuning, or model assessment.

“Automated machine learning was a popular topic in 2018: Auto-Keras open source software released, and many companies are working on their own products and libraries. This work will definitely proceed further,” thinks Aleksander Konduforov.

Solving the issue of model interpretability

It’s important for heavily regulated organizations, such as healthcare providers or financial institutions, to understand the logic behind AI-based systems’ predictions. These entities must know all the facts that influenced a particular decision and provide them if needed to prove they didn’t discriminate against a customer.Understanding how a model makes decisions — model interpretation — has been on the front burner since the end of 2017. Decision support systems and models they are based on that don’t explain which features influenced their decisions were known as black boxes.

Data scientists working with neural networks are obviously the ones with the most severe headaches. “Deep learning models have been black boxes for us — we couldn’t exactly tell why they made forecasts they made. In our case, the problem is about the interpretation of numerous scoring models in fraud detection. Sometimes we have to explain why we deny financial transactions or don’t agree with some game situations using regulatory principles,” shares Dmytro Fedyukov.

Model interpretability is not only important for companies that need to fulfill legal obligations to customers. It serves a technical purpose as well. Every ML model considers input features (problem properties) to predict results (outputs). The more relevant features we create and use to train an ML model during feature engineering, the more accurate results we can get and the simpler our model is. That’s why the ability to understand how the model makes predictions is crucial for its debugging.

“The good news is that there are tools providing data scientists with more information for model debugging and validation [evaluation to achieve its best performance.] Some such tools are included in TensorFlow (i.e., TensorBoard) and PyTorch,” adds Dmytro.

Another nifty and relatively new tool is Featuretools — an open source Python library for automated feature engineering created by Feature Labs in 2017. Featuretools has a built-in LIME (Local Interpretable Model-Agnostic Explanations) module, which is a technique that helps to explain predictions of any model.

The purpose of LIME and use case examples

For the sake of simplicity, we won’t describe how the mechanism works. However, you can learn more about LIME here.“So, this technique becomes applicable in production systems, and that’s what businesses and regulators need,” summarizes Dmytro Fedyukov from BetConstruct.

The popularity of Featuretools has grown significantly in 2018 among data scientists and developers, according the vendor’s report.

Natural language processing (NLP): pre-training for deep neural networks and improvements in interactive tasks

Natural Language Processing (NLP) is a branch of AI that focuses on machine understanding, interpretation, processing, and usage of human language. NLP tasks include sentiment analysis, semantic search, summarization, dialogue state tracking, language translation, and others.BERT pre-training technique

The NLP community has been discussing how natural language understanding has to be done, shares Oleksandr Khryplyvenko. “What does it mean to ‘understand’ a sentence?”Google’s response to this question is the BERT technique for pre-training NLP applications. Pre-training entails using a huge volume of unlabeled generic text from the web to train a language representation model before fine-tuning it on a small dataset prepared specifically for a task. BERT (Bidirectional Encoder Representations from Transformers) is intended to help developers and researchers working with deep neural networks to deal with the shortage of training data (labeled/annotated public datasets).

BERT models can predict each input word in a context (based on previous words in a sentence), as well as understand the relationships between sentences. Google also optimized Cloud TPU (Cloud Tensor Processing Units used to streamline ML workloads). The company researchers note these improvements will allow for training an NLP model in 30 minutes using a single TPU or in a few hours with a single GPU (graphics processing unit.)

Principal data scientist at Booking.com Lucas Bernardi thinks that BERT will have a huge impact on the industry: “The BERT language representation model not only gives state of the art results on many NLP tasks but also makes rich word representations accessible to more researchers and engineering without the need of end to end training of large and complex neural networks.”

The introduction of BERT, as well as Universal Language Model Fine-tuning (ULMFiT), ELMo, and other proposed solutions brought the power of transfer learning to texts and were able to improve many state-of-the-art results, according to Aleksander Konduforov of AltexSoft. Transfer learning is the use of knowledge gained while solving a former problem to deal with the latter, related problem. In this case, transfer learning entails pre-training an NLP model on a generic text. “This can lead to improvements in many applications. For instance, virtual assistants (Siri, Alexa, and Google Assistant) will be able to better understand the speech and give more relative answers to a user; the capability of chatbots to define the context of requests might increase as well,” the expert adds.

Machine translation

Wolfram Research‘s Jérôme Louradour says that a big breakthrough in machine translation was made in 2018: “State-of-the-art deep learning models have reached human performance in such tasks as Chinese to English translation. Besides, tremendous progress was made in unsupervised machine translation for which specialists don't need to build a labeled training dataset with translation examples.”Specialists also introduced new methods for training translation models using two independent sets of text in the source and the target language, without any alignment. “These advances unlock the possibility for training machine translation models on rare languages, such as Urdu," the expert notes.

Question answering

Machines now can compete with humans in question answering, which is another application of NLP. OneConnect, a subsidiary of Ping An Insurance has developed a model with an accuracy of 83.435 percent, which is close to the human average performance (86.831 percent). The model was trained on the latest version of Stanford Question Answering Dataset (SQuAD).SQuAD2.0 is a reading comprehension dataset that consists of more than 100,000 question-answer pairs from its previous version and over 50,000 unanswerable questions. The dataset publishers’ goal is to force models to understand when a question can’t be answered given a context.

Ping An representatives note that the model can be applied to enhance customer service.

Oleksandr Khryplyvenko notes that NLP will continue transforming customer support through automated virtual assistants. Numerous companies deliver messaging automation solutions, for instance, Agent.ai. Intra-corporate information and contact search is another common use case for the technology that will remain in 2019. One of such services, the People.ai platform, can be used for CRM contact management to ease workflows for marketing, customer success, and sales teams.

“Personalize everything”: the critical need for a tailored customer experience in various industries

Brands are looking for ways to engage with clients in relationships that would be mutually beneficial. Businesses strive to personalize interaction with customers showing content that may be meaningful for each of them. Data on customer preferences, their individual details, online behavior, or device must be collected and processed to deliver a personalized experience.Entertainment

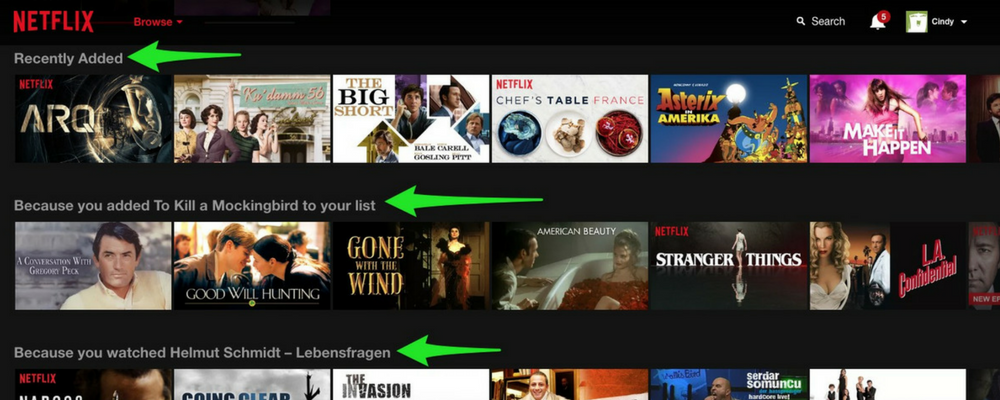

Entertainment is one of the industries leveraging DS and AI to recommend media content (think of suggestions on Spotify, Netflix, or YouTube.)

Recommendations on Netflix. Source: RE•WORK

Dmytro Fedyukov notes that personalization remains the industry trend: “Even in our case case [BetConstruct provides online gaming and sports betting solutions], we see more granular personalization aimed at targeting a particular user with their needs, as well as the attempt to create a customized, comfortable environment. We start relying less on classic recommender systems and try to communicate with a user solely via a personal channel providing tailored information and content that are relevant for a user at a given time. For example, if a user likes the 2018 FIFA World Cup, the environment must fully inform him or her about this event. But in a not very intrusive way.”Travel

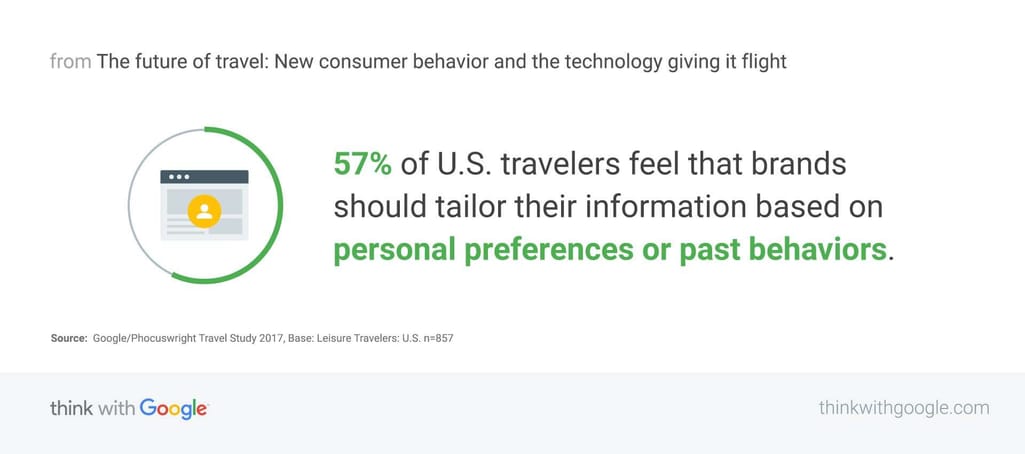

Travelers appreciate offers with a personal touch. According to Google/Phocuswright Travel Study 2017, 57 percent of US travelers feel that brands should consider personal preferences or past behaviors when providing information.

US travelers on personalization

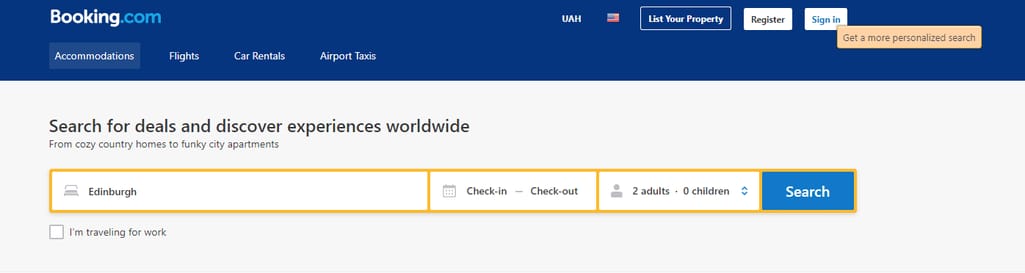

What’s more, 36 percent of customers are likely to pay more for services if companies tailor information and overall travel experience. We discussed approaches to customer experience personalization in travel and hospitality in a dedicated article, so feel free to check it if you want to know more.Global online travel brands respond to traveler preferences putting high volumes of data to work to improve customer experience. Principal data scientist at Booking.com Lucas Bernardi notes that the personalization is the main opportunity for machine learning in the travel industry: “More and more people want personalized services, these services are becoming a must, so we need to make sure we can offer that.

The travel company specialists deal with plenty of data of all types. “In particular, we’re working a lot with what we call transactional data (user searches, clicks, reservations, etc.) We’ve been also working with computer vision systems for a couple of years now. Text data is also present across the whole funnel, from searches, to destinations and accommodations descriptions and guest reviews. We try to select content that’s relevant and useful for our customers.” Bernardi also talks about major success with machine translation used for such tasks as translating hotel descriptions.

Personalization at glance: Booking.com tailors products/services based on traveler type, past searches from an endpoint device, and offers a more personalized search to registered users

However, building and developing a recommender system for the travel sector poses several challenges. One of the problems is caused by the exactitude of services/products provided via an online travel agency. Unlike media content (songs or movies), accommodation options are limited and dynamic: “You cannot really recommend one room to everyone because of a limited number of rooms that we can sell.” Moreover, recommending a wrong hotel or an apartment may cause more customer frustration than suggesting a video, for instance. So, many attributes for booking options (location, facilities, refund policies, etc.) must be taken into account to make the suggestion that meets the preferences and requirements of a particular person. Data sparsity is another problem: “The interaction of users with our platform is sparse. People don’t get to us every day, they use the website only a couple of days a year. So, it’s very tricky for us to construct a proper user profile.”All these interconnected issues are related to the general match and supply problem: Travelers are looking for the best accommodations and suppliers need to showcase their properties in ways to attract the right guests. You can read more on this topic in the Learning to Match research paper. Although many of the techniques and methods developed for the Movies Recommendations case are also relevant for the travel sector, one can’t look at this challenge as recommendations systems alone, stresses Lucas. Booking.com addresses this problem by looking at it from various angles (i.e. information retrieval, econometrics, game theory). “The real challenge is how to combine the relevant methods from each field into a system that makes sense as a whole.”

Reinforcement learning: internet advertising as one of the numerous applications

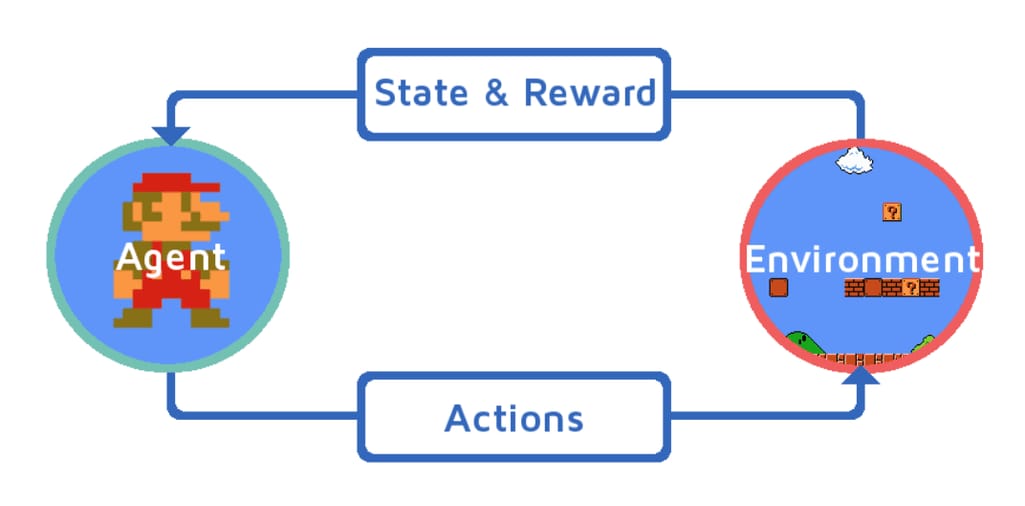

Reinforcement learning (RL) is a machine learning technique that entails training an agent (algorithm) in an interactive environment. The agent learns on its own by trial and error: It gets rewards for performing a task correctly and penalties for making mistakes. In other words, the agent uses feedback based on its experiences and actions to define the optimal way of solving a problem while getting a maximum cumulative reward.

The principle of reinforcement learning. Source: freeCodeCamp

Aleksander Konduforov notes that this year introduced major improvements in reinforcement learning: “Big technology companies are getting good results with deep reinforcement learning, which can influence robotics, drone-based delivery, gaming, and will potentially also create new types of weapons, unfortunately.”Internet advertising is another use case for reinforcement learning that will bring more and more profits to those who use it, thinks machine learning and data science expert Oleksandr Khryplyvenko: “Reinforcement learning is being welcomed by lots of companies. They were afraid of using it first, but the desire to optimize costs defeats the fear of adopting new technologies and taking risks.”

RL is used to improve real-time bidding strategy to dynamically allocate the advertisement campaign budget “across all the available impressions on the basis of both the immediate and future rewards.” During real-time bidding, an advertiser bids on an impression (ad view), and if they win an auction, their ad is displayed on a publisher’s platform.

The Alibaba Group, for instance, applies RL to optimize bidding on the eCommerce platform Taobao with a MARL (multi-agent reinforcement learning) algorithm. The linked research paper was published in September 2018. The results are solid: Data scientists achieved 340 percent ROI with 99.51 percent of spend budget with RL-based bidding. With manual bidding, ROI was 100 percent with 99.52 percent of budget spent.

AI ethics: using personal data with respect to privacy

The pervasive use of personal data by big corporations would have caused concerns about data security and privacy among individuals, governments, and organizations sooner or later. As a result, the General Data Protection Regulation (GDPR) has been in place since May 25, 2018; the California Consumer Privacy Act was approved and will take effect on January 1, 2020. These regulations put increased responsibility on companies and entities as they have to comply with both. “Since data science relies on data, specialists must take these regulations into account for their activities and solutions,” notes Aleksander Konduforov.Microsoft and Google released their AI principles as a counter to the Cambridge Analytica scandal and other Facebook problems with personal data protection.

The discussion continues. In November 2018, the International Association of Privacy Professionals (IAPP) and UN Global Pulse have published the Building Ethics into Privacy Frameworks for Big Data and AI report. The European Commission’s High-Level Expert Group on Artificial Intelligence (AI HLEG) published the AI Ethics Guidelines draft a month after, on December 18.

Obviously, the attention to data ethics will continue growing in 2019.

Computer vision and artificial image generation

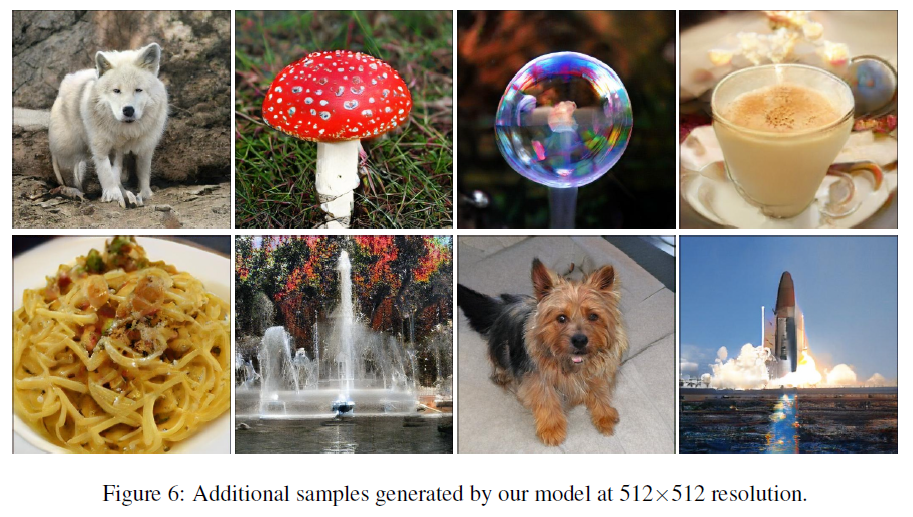

Computer vision, a branch of computer science aimed at enabling machines to see, analyze, and provide observation results, made some news in 2018.Aleksander Konduforov notes that many of the computer vision advances were about image or video generation. For instance, BigGANs developed by DeepMind and Heriot-Watt University (UK) researchers introduced a new level of quality in image generation. BigGAN (generative adversarial network) is a neural network with a larger number of nodes (artificial neurons, its elementary units) trained on more images than usual.

“We demonstrate that GANs benefit dramatically from scaling, and train models with two to four times as many parameters and eight times the batch size compared to prior art,” the researchers note in the paper.

Artificial image samples generated by BigGAN. Source: Large Scale GAN Training for High Fidelity Natural Image Synthesis

You can read more about the experiment in Large Scale GAN Training for High Fidelity Natural Image Synthesis published on arXiv.NVIDIA became another newsmaker in the area of image generation. The company created a style-based generator architecture for adversarial generation networks (GANs) which provides an easy way to control and change the appearance of a generated image. According to NVIDIA researchers, adversarial generation networks can automatically learn about particular elements of images without human supervision. These generators perceive each picture as a collection of ‘styles’ (i.e. face shape, hair color, age), and each style controls the effect at a particular scale.

Once trained, a GAN can generate new, real-looking photos of humans, animals, or any objects based on specific characteristics of provided pictures.

Generated realistic images by a style-based generator

This development can have business potential.

Deepfake videos became very popular and caused a lot of discussion in media and the public. Deepfake is a technique that allows for combining and superimposing (placing) one video or image on top of other image or video content. Simply put, it’s face-swapping. The technique may exploit generative adversarial networks (GANs).The term appeared after the Reddit user Deepfakes shared face-swapping sexually explicit videos in November 2017 (according to some sources, in December.) The Redditor claimed that he used Keras and TensorFlow frameworks for his creations. In January 2018, Deepfakes launched FakeApp, a face-swapping application. The guy was banned from Reddit later in February.

“Because of deepfakes, video footage is no longer the most reliable information source. It’s difficult to apply this technology today. However, it will definitely evolve and become easier and faster to use in the coming years. Given that we are already living in an information space with a lot of text, image, and sound fakes, having video fakes without being able to quickly fact-check their origin will worsen a situation,” says Alexander Konduforov.

What about self-driving cars? The automotive industry players will likely concentrate on smaller automation tasks with the help of deep learning and classic ML models, thinks Dmytro Fedyukov. Self-parking is an example of the developments that can be put into production.

BMW demonstrates self-driving and self-parking features in one of its 7 Series models

The expert doubts that self-driving cars will become a commodity in 2019 or 2020.DS and AI adoption across industries

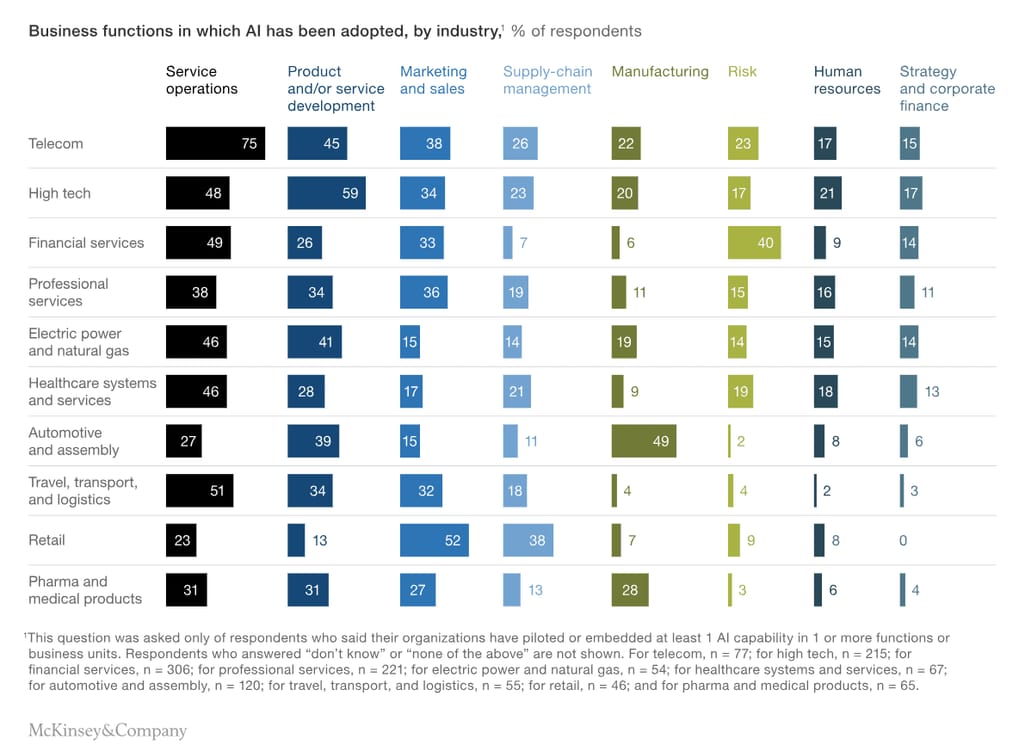

Year after year, the number of companies and organizations applying AI and DS to improve operations is on the rise. Forty-seven percent of 2135 respondents surveyed by McKinsey&Company for the 2018 Global Survey AI Adoption by Industry and Function said they have implemented at least one AI technology. Another 30 percent reported using AI on a trial basis. By comparison, 20 percent of 2017 study participants were using AI in a key part of their business and/or at scale.

AI adoption for different business purposes across industries. Source: McKinsey&Company

According to the visualization, purposes for AI utilization differ across industries: 52 percent of retailers use AI for marketing and sales while only 13 percent of them apply it for product/service development. In contrast, 59 percent of high tech companies develop services and/or products using AI.Forecasts for AI and DS application for industries. Sectors that adopted AI better than others and those experimenting with various AI-driven solutions will obviously proceed with AI utilization in 2019, in Alexander’s opinion. “Agriculture is also very interested in AI, especially in different types of automated field analysis and crop prediction,” the data scientist adds.

Dmytro Fedyukov predicts that pharma and medical industries will be the leaders in DS adoption using more precise research tools (i.e., for medical imaging) and large volumes of data on various groups of people. The active use of the Internet of things (IoT) is also beneficial for pharma.

According to Jérôme Louradour of Wolfram Research, the automotive industry, smartphone industry, and home robotics will obviously continue using developments in deep learning-based computer vision and audio processing. Reinforcement learning will have a great impact on robotics in the years to come as well. “There is also a lot of potential of deep learning for text analytics, with applications such as conversational agents (chatbots), text mining, and knowledge management,” the computer research scientist adds.

The demand for data science expertise keeps growing. AutoML tools are here to help non-experts harness insights from their data and facilitate decision-making. However, headhunting for data scientists that can handle more sophisticated tasks intensifies.

Companies across the UK are also looking for data scientists to join their workforce or consider such a move. MHR Analytics has surveyed 200 business decision-makers for its Data Surge research report and found out that 80 percent of midsize and large UK companies plan to become data-wise due to economic and political uncertainties.

However, 47 percent of UK business leaders admit their employees are limited in the technical skills required to drive and maintain the digital transformation of their companies. So that’s another challenge for businesses to overcome.

AI understanding is becoming as important as computer skills. “It is actually hard to find industries where data science does not have the potential for bringing huge impact,” says Booking.com principal data scientist Lucas Bernardi. “It reminds me of that article titled IT Doesn't matter which author makes the point that IT brought competitive advantage that was quickly lost as soon as IT commoditized. I think data science is a bit like IT, and we are in the middle of that transition from competitive advantage for a few to a technology you cannot ignore if you want to run a business.”

Dmytro Fedyukov notes that data science becomes a necessary component of numerous processes: “People are more conscious of applying data science during software and product development cycles.” Talking about his team, Dmytro notes that data scientists already realize that they must put much time and effort to gain domain knowledge.

The experts are optimistic about the opportunities for artificial intelligence and data science across industries. And constantly increasing demand for data science expertise in the global job market shows there is good reason for such optimism. DS and AI have a broad scope of application, from customer support automation to product recommendations to medical image analysis.

Nevertheless, one of the challenges that will remain for researchers and developers is to ensure that state-of-the-art technologies can be successfully applied to solving real-life problems.