As torrents of data continue to swamp organizations worldwide, the technologies that manage, store, and process them are evolving rapidly. Adopting AI to harness the power of that data is quickly becoming mainstream. Organizations that do it well can gain a competitive advantage through better customer insights, greater operational efficiency, and more consistent outcomes. Questions and opinions abound on how best to drive data science initiatives, and we have some answers.

As one of the world’s largest providers of essential IT infrastructure, Dell Technologies strives to make it easy to design and deploy the IT and data science resources that successful AI initiatives depend on. We do so by offering simplicity and choice in how you build an optimal IT infrastructure. NVIDIA, a leading innovator in server GPUs, takes a very similar approach as it brings new products and solutions to market.

Over the last few years, our two companies have built a strong relationship as we work to help organizations accelerate their AI initiatives. Our collaboration rests on a philosophy of flexibility and informed choice across extensive portfolios of products and solutions. Together, our technologies provide the foundation for successful AI solutions: they drive the development of advanced deep learning (DL) software frameworks, deliver massively parallel compute in the form of NVIDIA GPUs for parallel model training, and provide scale-out file systems that meet the concurrency, performance, and capacity requirements of unstructured image and video datasets.

The recent releases of new PowerScale and DGX Systems showcase the latest evolutions of AI architectural building blocks designed for the rigors of data science. We’ve published a new Reference Architecture, Dell EMC PowerScale and NVIDIA DGX A100 Systems for Deep Learning, and this Solution Brief, to illustrate how we’re achieving breakthrough performance.

As tested, this architecture provides storage that starts small and scales along with data growth, keeping compute and GPU resources performing optimally while providing seamless, multi-protocol access to larger datasets. This powerful combination of massively parallel compute and flexible, scalable storage is the foundation for an IT infrastructure that can support AI initiatives today and tomorrow.

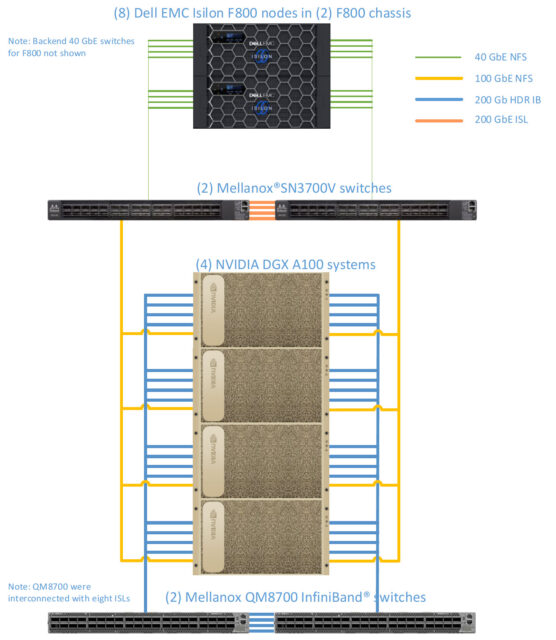

Key components of the Reference Architecture include:

- Dell EMC PowerScale: Isilon F800 All-Flash nodes deliver scale-out storage with up to 924 TB of raw capacity, 250,000 IOPS, and 15 GB/s of bandwidth per chassis.

- NVIDIA DGX A100: a universal system for all types of AI workloads. Integrating eight NVIDIA A100 Tensor Core GPUs, it offers unprecedented acceleration for data science.

- NVIDIA Mellanox SN3700V and QM8700 switches: provide high-speed connectivity between the Isilon F800 cluster nodes and the NVIDIA DGX A100 systems.

If the past is prologue, you can expect data to keep accumulating and AI architectures to evolve to keep pace. You can also expect Dell Technologies to stop at nothing in helping our customers succeed in their AI practices.

Together with NVIDIA, we’ll continue to deliver Reference Architectures along with turnkey solutions that make it easier to design and deploy optimal IT infrastructure to support AI at scale.