As we’ve shared in our annual trends report, 2021 was the year that a significant number of organizations realized they will not scale AI without enlisting diverse teams to build and benefit from the technology, and MLOps is a critical part of any robust AI strategy. This diversification is fueled by new players joining the teams that develop, deploy, and manage AI. At the same time, the success of initial AI projects has sparked interest from business stakeholders who want visibility into projects and may even want to review and sign off at key steps.

As Gartner® notes in the “Achieve DSML Value by Aligning Diverse Roles in an MLOps Framework” report, “Data and analytics leaders should create opportunities for the organization’s business and technology peers to work together by creating fusion teams, which blends technology expertise with other types of domain expertise. Build teams that showcase an array of technical and nontechnical skills and experience, manifested in a diverse mix and balance of data science and other roles.”*

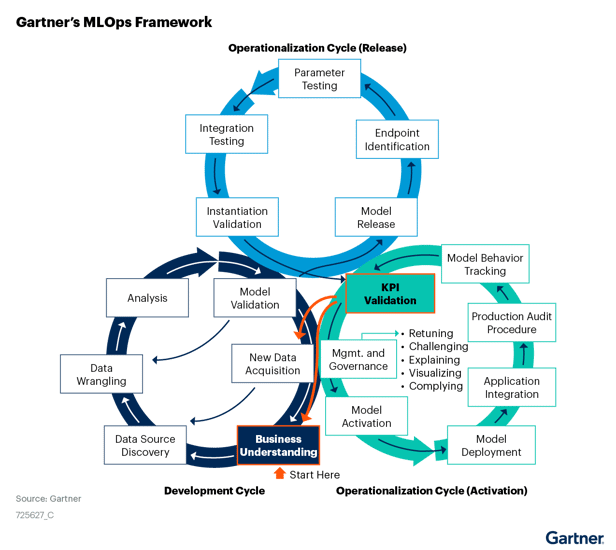

However, that’s easier said than done. Organizations must not only identify the roles and skills needed for successful machine learning (ML) operationalization, but also map them to the activities that make up any data science and ML project, each of which requires a mix of skills that a fusion team can bring to life. Gartner offers data and analytics leaders a three-stage MLOps framework to operationalize ML projects:

Stage 1: Development Cycle

According to Gartner, “The development cycle starts with identifying a business problem for a ML/data science project. Data science professionals, domain experts, and business stakeholders should work together closely to define business problems, associated key performance indicators, and expected business outcomes.”* This stage also includes data collection, modeling, data preparation, and exploration.

Stage 2: Operationalization Cycle (Release)

This stage covers model development and testing. It includes stakeholders ranging from specialized data scientists (who own feature engineering, model development, model testing, tuning, and more) to citizen data scientists (who support business applications) and IT (which creates a sandbox environment that enables users to bring in a variety of data and experiment with different tools).

Stage 3: Operationalization (Activation)

According to Gartner, “ML operationalization is a critical step in aligning analytics investments with strategic business objectives — that “last mile” toward business value.”* It requires tight collaboration across data science, business, and IT teams and includes pre-production, production itself, and post-production to measure success.

*Gartner, Achieve DSML Value by Aligning Diverse Roles in an MLOps Framework, Anirudh Ganeshan, Afraz Jaffri, Farhan Choudhary, Shubhangi Vashisth, 22 September 2021

MLOps and Dataiku

At Dataiku, we look at MLOps as the standardization and streamlining of ML lifecycle management — deploying, monitoring, and managing ML projects in production. Dataiku enables practitioners to design and code their own custom models and still take advantage of all the benefits Dataiku Visual ML has to offer, such as automatic experiment tracking and diagnostics, interpretability and performance metrics, auto-documentation, and ease of version monitoring in production.

When it comes to operationalization, Dataiku provides an architecture with dedicated environments for design, deployment, and governance tasks, scalable compute via containerized and distributed execution, and code integrations with familiar languages and CI/CD platforms.

From a monitoring perspective, Dataiku offers model evaluations and comparisons to give more context for deployment decisions. The model evaluation store automatically captures and visualizes historical performance trends in a single location, arming ML operators with the context they need to ensure models remain relevant. Automated drift analyses also allow stakeholders to investigate changes to data or prediction patterns to assess model health. Visual model comparisons enable side-by-side views of performance metrics, feature handling, and training information to make experiment tracking and model comparison less tedious and time-consuming.
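To make the idea of a drift analysis concrete, here is a minimal sketch of one widely used drift measure, the Population Stability Index (PSI), which compares how a feature is distributed in a reference sample (for example, training data) versus a current sample (for example, recent production data). This is an illustration only and not a description of how Dataiku computes drift: the function name `population_stability_index`, the equal-width binning, and the default of 10 bins are all assumptions chosen for the example.

```python
import math
from typing import Sequence


def population_stability_index(
    reference: Sequence[float],
    current: Sequence[float],
    n_bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """Return the PSI between a reference sample and a current sample.

    Illustrative sketch only: bins are equal-width and derived from the
    reference sample, which is an assumption made for this example.
    """
    if len(reference) == 0 or len(current) == 0:
        raise ValueError("both samples must be non-empty")

    lo, hi = min(reference), max(reference)
    # Fall back to a width of 1.0 if the reference feature is constant.
    width = (hi - lo) / n_bins or 1.0

    def bin_shares(values: Sequence[float]) -> list:
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            # Clamp out-of-range values into the first or last bin.
            counts[min(max(idx, 0), n_bins - 1)] += 1
        # eps keeps empty bins from causing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    ref_shares = bin_shares(reference)
    cur_shares = bin_shares(current)
    return sum(
        (c - r) * math.log(c / r) for r, c in zip(ref_shares, cur_shares)
    )


# Hypothetical data: the current sample is concentrated in the upper half
# of the reference range, so the PSI should be clearly above zero.
reference_sample = [i / 100 for i in range(100)]
shifted_sample = [0.5 + i / 200 for i in range(100)]

psi_same = population_stability_index(reference_sample, reference_sample)
psi_shifted = population_stability_index(reference_sample, shifted_sample)
```

A commonly cited rule of thumb treats a PSI below roughly 0.1 as little change and above roughly 0.25 as a significant shift, but these thresholds are conventions rather than guarantees and should be tuned per feature and use case.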

Lastly, with a robust set of AI Governance features, teams can go beyond the tactical to ensure strategic AI risk management and oversight. In addition to audit trails and model documentation to aid in regulatory compliance, Dataiku also offers a central model registry, risk and value qualifications to assess projects across multiple dimensions before allocating resources, and workflow standards that can include guardrails such as mandatory feedback and sign-off prior to production deployments.