Deployment frequency and product quality provide a competitive edge in the market. The DevOps practice is one of the ways companies achieve faster deployments and better efficiency in general. But no team is perfect: There are always some weaknesses we want to spot and fix.

That’s why we need metrics. When it comes to DevOps, the metrics should suit specific practices and processes, so the progress becomes measurable.

In this article, we’ll explore some of the most important metrics used to track DevOps processes and measure their success. Given this set of metrics, you’ll be able to track both the progress of your DevOps adoption and the efficiency of practices that are already in use. So let’s jump right into it.

What is DevOps in a nutshell?

In a nutshell, DevOps stands for development and operations. It implies breaking the silos to unite engineers and operations specialists in one team and improve overall workflow. More broadly, DevOps suggests numerous practices like infrastructure automation, continuous testing, continuous deployment, and so on.

The whole idea of DevOps is based upon five key principles explained in the acronym CALMS:

- Culture stands for a DevOps way of organizing teamwork and communicating ideas.

- Automation is the means to reduce manual work in different parts of a process, e.g., test and deployment automation, etc.

- Lean is an approach that suggests removing all the unnecessary things to keep focus on the core value of a product.

- Measurement refers to constant tracking of your success.

- Sharing connotes the principle of shared knowledge and responsibilities between the team members.

The theoretical implication of DevOps is a large topic, deserving of a separate article. If you want a deeper explanation, we suggest you run through our dedicated articles or watch a short explainer on our YouTube.

DevOps main principles and practices

Automation and CI/CD tools for DevOps

DevOps engineer vs Site reliability engineer

A short explanation of DevOps principles, roles, and technologies

While all of the principles are equally important, the measurement aspect in DevOps plays a crucial role for a few reasons. So let’s discuss it before we jump to actual metrics.

Continuous improvement and measurement in DevOps

DevOps stresses the importance of measurement. The leading DevOps teams assemble whole ecosystems for automated monitoring, feedback sharing, and reacting to it faster. But that's on the technical side. Process-wise, we also need metrics to understand whether new practices give our team an advantage, rather than burden it.

In DevOps, there is a range of specific processes we need to keep track of to see the progress. Once we receive feedback, we can apply it to improve our processes or approaches to certain tasks. This is a concept of continuous improvement, which takes advantage of constant feedback gathering to make DevOps teams more efficient.

So here we’ll run through 10 metrics any DevOps team should consider:

- Mean Time to Change,

- Mean Lead Time for Changes,

- Deployment Frequency,

- Mean Time to Failure,

- Mean Time between Failures,

- Mean Time to Detection,

- Mean Time to Recovery,

- Defect Escape Ratio,

- Server Uptime, and

- Application Performance Index.

MTTC, MLTC, and Deployment Frequency: general productivity metrics

The first group of metrics helps track overall DevOps productivity by measuring the speed of different parts of a process.

(MTTC) Mean Time to Change

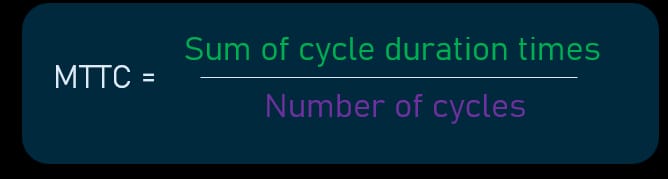

What does it show? A Mean Time to Change or MTTC is the main productivity metric for DevOps. It measures the time between the ideation phase and the final implementation of a feature or a bug fix. MTTC is calculated by summing up the time of cycles for a certain period, and dividing it by the number of cycles.

For example: During Q1 of 2021, there were 18 feature cycles (from ideation to implementation), with a total time of 356 hours, which divided by 18 gives us a 20-hour average cycle duration.
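The calculation above can be sketched in a few lines of Python. This is only an illustration: the function name `mean_time_to_change` and the per-cycle durations are hypothetical, chosen so that 18 cycles add up to the 356 hours from the example.

```python
def mean_time_to_change(cycle_hours):
    """Average duration of idea-to-production cycles, in hours."""
    if not cycle_hours:
        raise ValueError("need at least one completed cycle")
    return sum(cycle_hours) / len(cycle_hours)


# Hypothetical per-cycle durations: 18 cycles totalling 356 hours.
cycles = [20] * 16 + [18, 18]

print(round(mean_time_to_change(cycles), 1))  # 19.8, i.e. roughly 20 hours
```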

Why use it? In contrast to Agile long-term iterations, DevOps aims at shorter cycles of development and more frequent releases. Using MTTC, you’re able to calculate how long a cycle of producing a feature takes for your team from its initial stage to production. The shorter the cycle, the better.

Important to understand. As we strive for shorter iterations between each release, measuring MTTC becomes vital to see the bigger picture as it allows us to understand what parts of the process have to be optimized. But this is valid when we speak about features or fixes that require ideation. In other cases, it’s better to use other metrics that track specific parts of a process.

(MLTC) Mean Lead Time for Changes

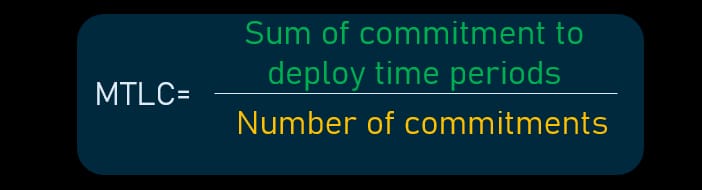

What does it show? Mean Lead Time for Changes, or MLTC, measures the time from the first commit of the code until it’s deployed. MLTC is calculated by summing up all of the time periods from commit to production and dividing the total by the number of commits.

For example: There were 11 code commits during the previous week. The total commit-to-production time was 30 hours for all of them. The MLTC score would be 2.7 hours per commit.
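In practice, this metric is usually derived from timestamps rather than pre-summed hours. Here is a minimal sketch, assuming each change is available as a pair of commit and deployment timestamps; the function name and the sample data are hypothetical.

```python
from datetime import datetime


def mean_lead_time_hours(changes):
    """changes: list of (committed_at, deployed_at) datetime pairs."""
    durations = [
        (deployed - committed).total_seconds() / 3600
        for committed, deployed in changes
    ]
    if not durations:
        raise ValueError("no deployed commits in this period")
    return sum(durations) / len(durations)


# Hypothetical sample: three commits and the moments they reached production.
changes = [
    (datetime(2021, 3, 1, 9, 0), datetime(2021, 3, 1, 11, 30)),   # 2.5 h
    (datetime(2021, 3, 2, 14, 0), datetime(2021, 3, 2, 17, 0)),   # 3.0 h
    (datetime(2021, 3, 3, 10, 0), datetime(2021, 3, 3, 12, 36)),  # 2.6 h
]

print(round(mean_lead_time_hours(changes), 1))  # 2.7
```

Keeping the raw pairs also makes it easy to inspect the slowest changes individually instead of looking only at the average.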

Why use it? This metric measures how fast our CI/CD pipeline is and how efficiently our DevOps team works with it. Between commit and deployment, the team runs manual or automated tests, gets approvals, fixes bugs, and finally deploys. Unlike MTTC, it targets a specific part of the process.

Important to understand. Since MLTC shows an average time, you can also extract granular data by measuring separate parts of the process. This will provide each member of a team with a targeted metric for improvement.

Deployment Frequency

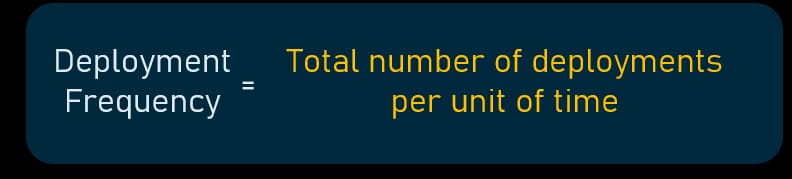

What does it show? Deployment frequency measures the total number of deployments over a certain period of time.

Why use it? The deployment frequency shows how often your team produces working software. Without understanding the release frequency, we're not able to tell whether we generate enough value to customers in time. By comparing productivity across different periods of time, the team gets valuable insight into how efficient the DevOps cycle is.

Important to understand. When measuring your deployment frequency, count only those releases that went into production without any trouble. In other words, a successful release is one that doesn’t introduce bugs into the system. As we’re aiming at the quality of a product and delivering value to the customer, counting unsuccessful releases will cloud the understanding of how often you deploy working software.
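One possible way to count only successful releases is to group them by calendar week. The sketch below assumes a deployment log of (date, succeeded) pairs; that log format, the function name, and the sample entries are assumptions made for illustration.

```python
from collections import Counter
from datetime import date


def successful_deploys_per_week(deployments):
    """Count successful deployments per ISO (year, week); failed ones are skipped."""
    counts = Counter()
    for day, succeeded in deployments:
        if succeeded:
            year, week, _ = day.isocalendar()
            counts[(year, week)] += 1
    return dict(counts)


# Hypothetical deployment log.
log = [
    (date(2021, 3, 1), True),
    (date(2021, 3, 3), True),
    (date(2021, 3, 4), False),  # rolled back, so not counted
    (date(2021, 3, 9), True),
]

print(successful_deploys_per_week(log))  # {(2021, 9): 2, (2021, 10): 1}
```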

MTTF, MTTD, MTTR, and more: quality metrics for DevOps

The quality of a final product is also a priority. When it comes to automated pipelines and fast delivery teams, it’s harder to keep track of the number of bugs and how fast we are able to fix them. The following set of metrics offers the solution to measuring quality control methods.

You can read about other quality assurance metrics in our dedicated article.

(MTBF) Mean Time Between Failures

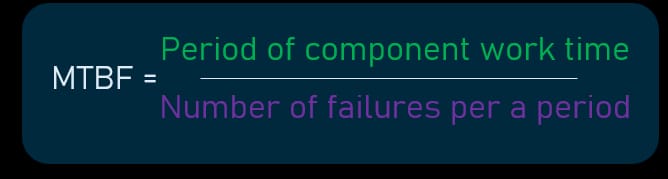

What does it show? MTBF measures the average time between failures for a single component. To calculate it, we take the total work time of the component and divide it by the number of failures.

For example: A code integration pipeline shows 94 hours of total work this week. During this period, 3 failures occurred, so our MTBF is about 31 hours.
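As a quick check of the arithmetic, 94 hours of operation divided by 3 failures comes to roughly 31.3 hours. A minimal sketch follows; the function name is hypothetical, and returning infinity for a window with no failures is an assumption rather than a standard convention.

```python
def mean_time_between_failures(operating_hours, failures):
    """Average operating time per failure, in hours."""
    if failures == 0:
        # No failures observed: MTBF is unbounded for this window (assumed convention).
        return float("inf")
    return operating_hours / failures


print(round(mean_time_between_failures(94, 3), 1))  # 31.3
```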

Why use it? Mean Time Between Failures is a general maintenance metric that shows how often a single component or feature breaks. Usually, we’re trying to get more hours in between each break, which indicates our fixes work for a longer term. The metric also shows the most problematic features to focus on. You may need to redesign some components completely because they break too often compared to the rest of the system.

Important to understand. The specifics of using this metric are the same as for MTTF. Focus on what you consider a failure, and MTBF will show your team the weaknesses in the maintenance/testing process or in component design. You have to investigate failure cases, or the data won’t give you the context for improvement.

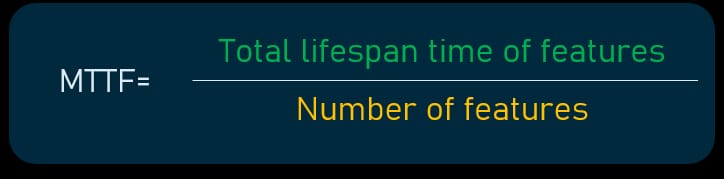

(MTTF) Mean Time to Failure

What does it show? Mean Time to Failure in software development can be used to track average time before a component or asset will require replacement. While hardware can break due to its working capacity limits, software components may require replacement because we need to completely redesign them. So MTTF can be used to measure the lifespan of both. The failure in this case is an event that makes a component obsolete.

For example: There were 4 newly deployed features that failed after 480, 160, 120, and 300 hours. To calculate MTTF, we need to sum up the lifespan time (1060) and divide it by the total number of items (4). The Mean Time to Failure will be 265 hours.
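The same calculation, written as a small Python sketch. The function name is hypothetical; the lifespans are the four values from the example.

```python
def mean_time_to_failure(lifespans_hours):
    """Average lifespan of components that have already failed, in hours."""
    if not lifespans_hours:
        raise ValueError("need at least one failed component")
    return sum(lifespans_hours) / len(lifespans_hours)


lifespans = [480, 160, 120, 300]  # hours each feature lasted before failing

print(mean_time_to_failure(lifespans))  # 265.0
```

Note that only components that have actually failed are included; components still in service would need separate handling.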

Why use it? MTTF will suit those teams that often update their software or hardware components due to the changing environment. Tracking this metric provides an approximate time for when changes must be made to the component again.

Important to understand. The important thing is what to consider a failure. Failure is any situation when a feature, component, or asset doesn’t produce expected results. While we can count only actual breaks, MTTF traditionally takes a broader definition of failure.

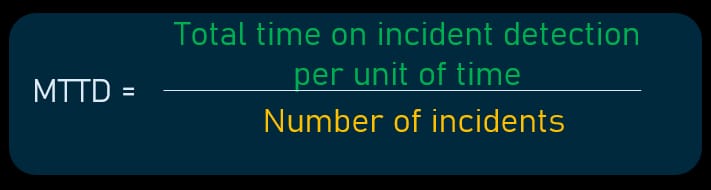

(MTTD) Mean Time to Detection

What does it show? MTTD is the average time a team spends to find a bug once it emerges. This is an indicator of both how good our monitoring system is and how fast our DevOps team can spot problems.

For example: We take the total time spent on detecting bugs, let’s say 20 hours, and divide it by the total number of incidents per time period. If there were only 9 incidents, MTTD will be 2.2 hours.

Why use it? This metric vividly shows the efficiency of your bug detection capabilities, and it complements MTTF with additional data. Mean Time to Detection provides valuable insights both for C-level management and DevOps team members.

Important to understand. Keep in mind that end-users can also detect bugs in software on production. These cases should be excluded to get a clearer picture of your quality control. However, when it comes to incidents that impact revenue, the total amount of such cases should also be measured with separate metrics.

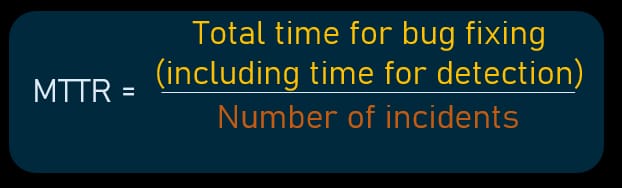

(MTTR) Mean Time to Recovery

What does it show? Once the bug is found, we have to fix it as fast as we can. So here we can use Mean Time to Recovery. This metric covers the whole span from the moment an issue occurs, through its detection, until it’s fixed. It is calculated the same way as MTTD.

For example: Total downtime of features (13 hours), divided by total number of incidents (7 incidents), gives us an MTTR of about 1.9 hours.

Why use it? Mean Time to Recovery is a direct indicator of your team’s troubleshooting speed combined with incident detection capabilities. Since bugs will always occur, it’s better to focus on quickly finding and fixing them. You may complement this metric with the Mean Time to Repair, which measures only the time period from detection to recovery. This way, you can focus on your bug-fixing processes more.
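To see how MTTD, Mean Time to Repair, and MTTR relate, all three can be derived from the same incident records. The sketch below assumes each incident stores when it started, when it was detected, and when it was resolved; the `Incident` class, its field names, and the sample data are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Incident:
    started_at: datetime
    detected_at: datetime
    resolved_at: datetime


def _mean_hours(deltas):
    """Average a list of timedeltas and express the result in hours."""
    return sum(d.total_seconds() for d in deltas) / 3600 / len(deltas)


def incident_metrics(incidents):
    """incidents: non-empty list of Incident records for one period."""
    if not incidents:
        raise ValueError("no incidents in this period")
    return {
        "mttd": _mean_hours([i.detected_at - i.started_at for i in incidents]),
        "mean_time_to_repair": _mean_hours(
            [i.resolved_at - i.detected_at for i in incidents]
        ),
        "mttr": _mean_hours([i.resolved_at - i.started_at for i in incidents]),
    }


# Hypothetical incidents on the same day.
incidents = [
    Incident(datetime(2021, 3, 1, 10), datetime(2021, 3, 1, 11), datetime(2021, 3, 1, 12)),
    Incident(datetime(2021, 3, 1, 8), datetime(2021, 3, 1, 11), datetime(2021, 3, 1, 12)),
]

print(incident_metrics(incidents))
# {'mttd': 2.0, 'mean_time_to_repair': 1.0, 'mttr': 3.0}
```

With these definitions, MTTR for a set of incidents equals MTTD plus Mean Time to Repair, which helps show whether detection or fixing is the slower part.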

Important to understand. In the traditional software business, bugs are the responsibility of those who produce them. So, it’s usually developers who fix issues after they are spotted. However, the bugs can be found in production and occur due to infrastructure conditions. In this case, it will be the responsibility of ops and devs to find the reason and eliminate it. That’s why MTTR is considered to be a general quality metric of your team, not only the QA part of it.

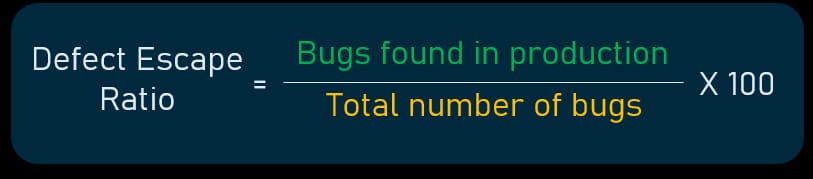

Defect Escape Ratio

What does it show? Defect Escape Ratio helps us measure the percentage of bugs that went into production as opposed to those that we managed to capture before deployment. By comparing those, we can decide whether our QC measures are any good.

For example: For a given time period, there were 11 bugs caught in production, and 71 bugs caught before production. Our defect escape ratio will be 13.4 percent.
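The ratio is the number of bugs found in production divided by all bugs found, so 11 out of 82 in this example. A minimal sketch, with a hypothetical function name:

```python
def defect_escape_ratio(found_in_production, found_before_production):
    """Share of all detected bugs that escaped into production (0.0 to 1.0)."""
    total = found_in_production + found_before_production
    if total == 0:
        return 0.0  # no bugs recorded at all in this period
    return found_in_production / total


print(f"{defect_escape_ratio(11, 71):.1%}")  # 13.4%
```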

Why use it? In terms of quality control, it is always important to spot the bugs before the production stage. Otherwise we’re risking revenue and customer loyalty losses. Knowing your Defect Escape Ratio shows the overall efficiency of your testing processes and may cause more frequent revision of your automated testing infrastructure.

Important to understand. While the number of bugs caught in production and before it are compared, we can also use them as separate metrics. Moreover, by investigating the bugs, we can also find new test cases to be covered by our QA engineers, which will help you to decrease the Defect Escape Ratio.

Server Uptime and Apdex: infrastructure reliability and software performance metrics

This section covers a couple of infrastructure and software metrics that will be suitable for DevOps needs.

Server Uptime (Availability)

What does it show? Server Uptime or service availability is a general infrastructure reliability metric and is usually included in nonfunctional requirements for the product. It tracks how long your server remains available. The calculation only requires pulling data from your monitoring system and checking the uptime.

Why use it? Some applications and services require constant availability, because end users might perform business critical operations in the system. For example, banking operations run with latency of one second. And concurrently, there might be billions of them. Besides that, for the sake of positive user experience, availability of the server should be as high as possible for any product. So the higher your availability rate, the better.

Important to understand. Server Uptime is considered good and stable when its score is 99.9 percent. But for demanding services like the banking example above, even an additional hundredth of a percent matters.

Furthermore, most applications follow a Service Level Agreement or SLA, which has Server Uptime as one of its metrics (and requirements). So basically, it’s impossible to ignore it.
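To get a feel for what these percentages mean, an availability target can be translated into a downtime budget. The sketch below assumes a 30-day period; both the function name and the period length are illustrative choices, not part of any particular SLA.

```python
def allowed_downtime_minutes(availability_percent, period_days=30):
    """Downtime budget in minutes for a given availability target."""
    total_minutes = period_days * 24 * 60
    return total_minutes * (1 - availability_percent / 100)


print(round(allowed_downtime_minutes(99.9), 1))   # 43.2 minutes per 30 days
print(round(allowed_downtime_minutes(99.99), 2))  # 4.32 minutes per 30 days
```

Under these assumptions, moving from 99.9 to 99.99 percent shrinks the monthly downtime budget from about 43 minutes to a little over 4 minutes.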

Application Performance Index (Apdex)

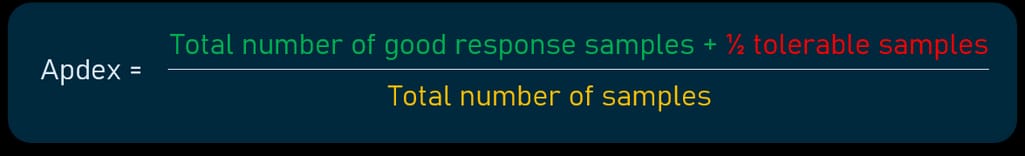

What does it show? Apdex or Application Performance Index is not a traditional software metric as it indicates the level of relative customer satisfaction with your product performance. The calculation process entails finding a service response time that is considered good.

For example: 0.9 seconds is our good response time as shown by usability tests. We collect samples of responses and place them in groups that correspond to a good time (≤ 0.9s), a tolerable time (> 0.9s but ≤ 3s), and a bad time (> 3s).

To get the Apdex score, we need to sum up a total of good scores plus half of tolerable ones, and divide them by the total number of samples. Let’s assume there were 367 total samples with 151 of them rated as good ones, 146 marked as tolerable, and 70 as bad ones.

The index ranges from 0 to 1, where 1 is the absolute best performance. So let’s use these numbers for our calculations: 151 good samples plus half of the tolerable ones, 73, gives 224. We divide 224 by the total number of samples, 367, and get an Apdex score of 0.61. This is considered bad performance. Usually, it’s better to strive for a score between 0.94 and 1.
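The Apdex calculation can be sketched as follows. The thresholds follow this article’s example (0.9s for good, 3s for tolerable) and are exposed as parameters; the function name and the synthetic response times are hypothetical, picked only to reproduce the 151/146/70 split.

```python
def apdex(response_times, good_threshold=0.9, tolerable_threshold=3.0):
    """Apdex score: (good + tolerable / 2) / total samples."""
    if not response_times:
        raise ValueError("need at least one sample")
    good = sum(1 for t in response_times if t <= good_threshold)
    tolerable = sum(
        1 for t in response_times if good_threshold < t <= tolerable_threshold
    )
    return (good + tolerable / 2) / len(response_times)


# Synthetic samples: 151 good, 146 tolerable, 70 bad response times (seconds).
samples = [0.5] * 151 + [2.0] * 146 + [4.0] * 70

print(round(apdex(samples), 2))  # 0.61
```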

Why use it? While Apdex doesn’t provide you with end-user feedback, it shows how your application performs in relative numbers. This gives your team an understanding of how the infrastructure works, how different features perform, and whether they need any improvement.

Important to understand. The Apdex is traditionally applied to high throughput applications, where performance plays a crucial role for business objectives. While it’s always good to have quick response time, some services will always show low Apdex due to the difference in sample time.

How to apply metrics in DevOps?

Metrics help keep track of what goes well and what doesn’t. They matter even more if we want to constantly improve our DevOps practices. So, here are a couple of suggestions on how to apply DevOps metrics wisely:

Continuously compare performance over periods of time. Don’t treat metrics as snapshots of your performance. Make sure your tracking is systemic and you can always refer to it to see the pace of changes in your DevOps processes. So for example, it’s good to discover that your average deployment frequency has grown from 0.3 to 4 deployments per day in a matter of a year.

Share feedback and measurement results. While some of the metrics will interest C-level managers, the ones who apply data to make change are your engineers, testers, and administrators. So, make sure there is a practice of sharing and discussing findings across DevOps team members.

Automate monitoring. As we’ve mentioned before, DevOps relies on automation tools to remove manual burden and decrease the human factor. The same goes for monitoring tools that may inspect the health of your infrastructure, gather data about the bugs or the number of successful deployments. In such a form, it will be easier to review and compare results side by side, as well as share metrics with your DevOps team members.