In this article we will learn how to design a LRU Cache, understand it’s cache replacement algorithm. We also look at description of LRU Cache with some examples. Then, we look at the implementation of this design in code with their complexity analysis.

Caching is a method of organizing data in a faster memory, usually RAM to serve future requests of same data in a efficient way. It avoids repetitive main memory access by storing frequently accessed data in cache. However, the Cache size is usually not big enough to store large data sets compared to main memory. So, there is a need for cache eviction when it becomes full. There are many algorithms to implement cache eviction. LRU caching is a commonly used cache replacement algorithm.

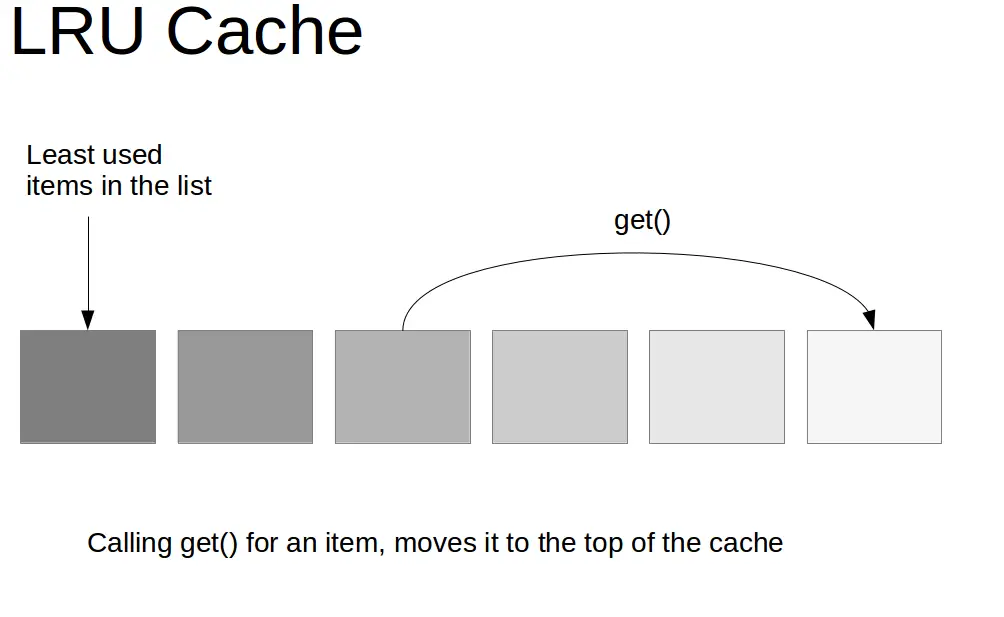

Least Recently Used (LRU) Cache organizes data according to their usage, allowing us to identify which data item hasn’t been used for the longest amount of time. The main idea is to evict the oldest data or the least recently used from the cache to accommodate more data followed with replacement of data if already present in the cache (Cache Hit) and bring it in the front or top of the cache for quick access.

Example:

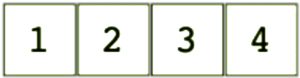

Let’s consider a cache of capacity 4 with elements already present as:

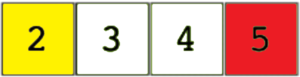

Elements are added in order 1,2,3 and 4. Suppose we need to cache or add another element 5 into our cache, so after adding 5 following LRU Caching the cache looks like this:

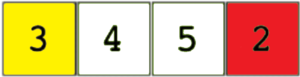

So, element 5 is at the top of the cache. Element 2 is the least recently used or the oldest data in cache. Now if we want to access element 2 again from the cache. The cache becomes:

So, element 2 comes to the top of the cache. Element 3 is the least recent used data and next in line for eviction.

LRU Cache Implementation

We follow these steps to implement a LRU Cache in our program:

- We use two Data Structures a Deque or Doubly Ended Queue, where insertion and deletion can take place from both ends and a Hash-Map. The Deque will act as our Cache.

- In Queue, we enter each element at first/front of the queue, so we use a Deque. If we need to access any element already present in our cache we search that element in queue, remove it and then add it to the front of the queue/cache. But searching an element in queue could take O(n) time in worst case and will be a costly operation.

- So, to avoid this we use a Hash-Map which provides look-up or search time for our keys in O(1) time. Then, we can directly delete the element without the need to search in cache. So if our Hash-Map contains the data then we can just bring that element to the front of queue and add data as entry to our map for future look-ups.

- If our capacity is full then we remove from the rear end of Deque which contains least recent data. Along with this, we remove the entry of the element from our Map.

- So, we mainly need to implement two methods for our cache: One to get element from cache and other to add element into our cache following the LRU algorithm and above steps.

- In get method we need to just get the value of data item if it’s present in our cache. In put method we add the data into our cache and the Map and update the order of cache elements.

Why Hash-Map not Hash-Set?

Since we only need to search whether an element is present in our cache or not we can also do it by using a Hash-Set then what is the purpose of Hash-Map. The reason is while accessing resources from the Cache a key is required to access the data. The key will be unique for each data. So with the key we can access the actual data. In real life scenario, for a product there can be many attributes we need to access with the product key. As we know, Hash-Map stores data in Key-Value Pair the Key Field holds the key of data and Value field can hold the actual data or attributes.

Now let’s look at the implementation of above in Java:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 |

//import the required collection classes import java.util.Deque; import java.util.HashMap; import java.util.LinkedList; import java.util.Map; class CacheObject { int key; // key to access actual data String value; // data to be accessed by cache CacheObject(int key, String value) { this.key = key; this.value = value; } } public class LRUCache { // queue which acts as Cache to store data. static Deque<Integer> q = new LinkedList<>(); // Map to store key value pair of data items. static Map<Integer, CacheObject> map = new HashMap<>(); int CACHE_CAPACITY = 4; public String getElementFromCache(int key) // get data from cache if key is already present. { // if item present in cache remove and add to front of cache if(map.containsKey(key)) { CacheObject current = map.get(key); q.remove(current.key); q.addFirst(current.key); return current.value; } return "No such element present in Cache"; } public void putElementInCache(int key, String value) { if(map.containsKey(key)) { CacheObject curr = map.get(key); // we check if element already present in cache through Map q.remove(curr.key); // remove if already present } else { if(q.size() == CACHE_CAPACITY) { int temp = q.removeLast(); // if cache size is full we remove from last of queue map.remove(temp); } } // then we just add already present item or new item with given key and value. CacheObject newItem = new CacheObject(key, value); q.addFirst(newItem.key); map.put(key, newItem); } // Driver Code to test above methods. public static void main(String[] args) { LRUCache cache = new LRUCache(); cache.putElementInCache(1, "Product-A-Name"); cache.putElementInCache(2, "Product-B-Name"); cache.putElementInCache(3, "Product-C-Name"); cache.putElementInCache(4, "Product-D-Name"); // We get 2 from cache System.out.println(cache.getElementFromCache(2)); System.out.println(); // We print our queue and see 2 will be at front of cache System.out.println(q); System.out.println(); //Element 5 is not present in Cache System.out.println(cache.getElementFromCache(5)); cache.putElementInCache(5,"Product-E-Name"); System.out.println(); //Now after adding 5 in cache it will be at front and 1 is deleted. System.out.println(q); System.out.println(); } } |

Output:

|

1 2 3 4 5 6 7 |

Product-B-Name [2, 4, 3, 1] No such element present in Cache [5, 2, 4, 3] |

So this was the implementation of LRU Cache in code let’s have a look at the time and space complexities of our approach.

Time Complexity: Basically we implement this cache in O(1) time, as the search for an element present in cache require constant time and with our Map the search time is also constant. In put method, we add elements to front of cache which also require constant time. So overall time is O(1).

Space Complexity: We use a Deque which store n number of keys and a Map which store n Key-Value pairs so the overall space complexity is O(n).

That’s it for the article you can post your doubts in the comment section below.

Since the code uses the Deque class from the standard Java library, and a call to Deque’s remove(someObject) method is an O(n) operation, the cost of both lookup and insertion in this implementation is O(n). Switching to a custom implementation where we have access directly to the list element’s pointers is an alternate approach that can achieve O(1).