Although most people see the value of Sprint Goals, how to create them is a huge source of confusion and frustration. Does the Sprint Goal come before the Sprint Backlog? How do you create a Sprint Goal out of the unrelated set of items at the top of your Product Backlog? And should everything on the Sprint Backlog be related to the Sprint Goal?

There are some great posts out there that present a conceptual model for thinking about Sprint Goals, like this one and this one. But no matter the model, people frequently struggle to translate it to their own work.

So instead, I decided to write the story of a real product and (some of) the Sprint Goals we used there. By working from real examples, I hope to help people better understand the kind of reasoning that informs Sprint Goals, and how that reasoning shapes both the Sprint Backlog and refinement, and vice versa.

A bit of background

This post concerns the development of a product that aimed to make a set of related HR products, both our own and those of partners, accessible through a single platform. These ranged from software for time registration to invoicing, and from recruitment to personnel planning. The new product was intended to reduce the pain of juggling dozens of individual products, each with its own login, dashboard and purchasing procedure. A dedicated Scrum Team worked on the product for over a year, and although its composition has changed, the team still maintains the product. Sprints were two weeks long (later shortened to one week). The team was cross-functional in the sense that it had the skills for requirements analysis, visual design, testing, development, and quality assurance.

The team's Definition of Done included everything necessary to release to production at least at the end of each Sprint. It included rules such as:

- Web-pages don’t have dead links or buttons that don’t work;

- Web-pages don’t have temporary texts or images;

- Web-pages work in recent versions of Firefox, Chrome, and Safari and on a selection of mobile devices;

- Automated tests are written according to the testing pyramid: unit tests for all business logic, integration tests to verify the entire stack, and UI tests to verify critical user paths;

- Every item is verified against relevant and common OWASP attacks. This was automated to some extent in later Sprints;

- The code for an item is peer-reviewed by someone else in the team, and another team member verifies that the item works as specified;

- The item is deployed to production.

Note: this post is a rough and incomplete overview of the work that actually happened. For brevity, I have taken some shortcuts here and there. I also left out some details that are business-sensitive.

Product & development strategy

Before work began, we used a story map to create a rough outline of the work needed. Every column in the story map represented a step in the flow (e.g. from the website to the webshop, from the webshop to the dashboard, and so on for twelve more columns). From there, we devised a rough strategy for the first Sprints:

- First focus on building a simple-as-possible webshop so we could at least present the product catalog and allow customers to purchase single products;

- Then focus on building a dashboard where widgets from the various products can be integrated into a single overview of what is important;

- Then focus on expanding the webshop with support for complex purchases with multiple licenses and users;

- Then focus on making branded versions of the product available to large customers;

This strategy helped inform the goals for the first dozen Sprints. The Product Backlog was constantly revised as we learned more.

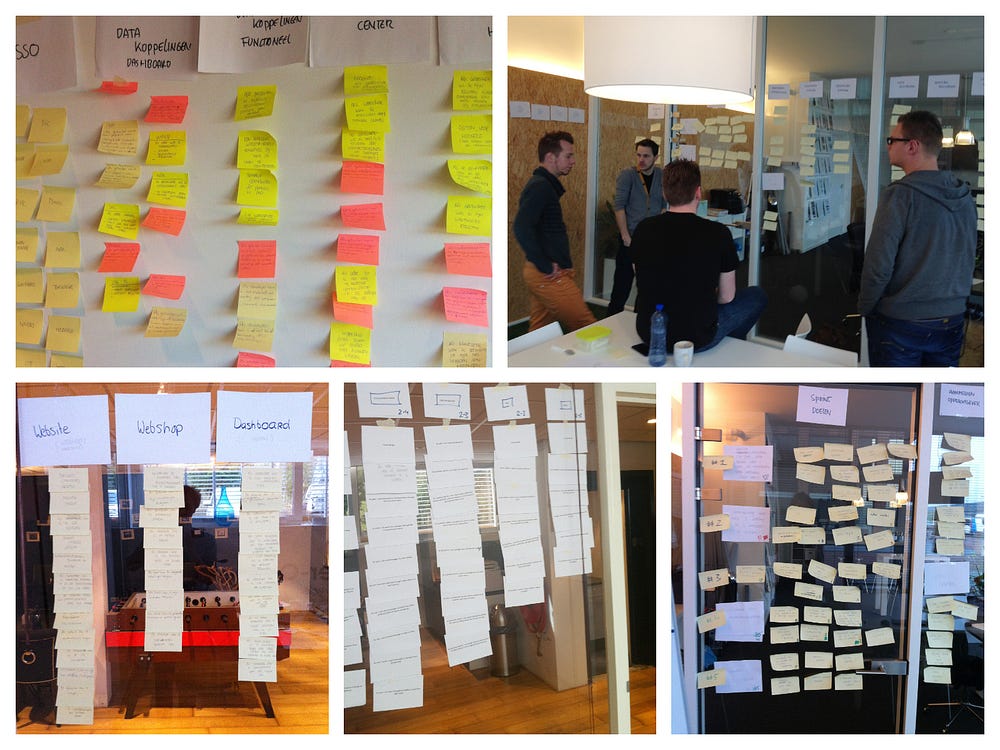

Lots and lots of stickies. The picture in the bottom-left was one of the first Product Backlogs we created (more columns to the right). The picture on the bottom-right shows the Sprint Goals for the first four Sprints (the white papers) and a potential selection of work that would fit those goals. We used that as input to re-order the Product Backlog accordingly.

The goal for Sprint 1: “Set up deployment pipeline & release an empty site to production”

From the very start, we agreed to ship to production as frequently as possible. A very practical consideration was that the Product Owner wanted to see a return on the investment as soon as possible and couldn’t afford to wait months to have something online. We also wanted to collect realistic feedback from a public-facing website. Shipping this often pushed us to keep our quality consistently high. Because we knew that a manual release process would lead us to postpone releases or to lower quality by skipping tedious checks, we decided to automate from the start.

Because this was the first Sprint, we also had to set up our development and production environments. We still wanted to deliver a tiny functional slice of work to production, if only to prove that our infrastructure and pipeline were working.

Below are some of the items that ended up on the Sprint Backlog:

- Create a sticky-based Sprint Backlog and Product Backlog on the wall in the team-room;

- Set up servers for the production environment (including database);

- Set up a build server to build commits, run unit tests and compile deployment packages;

- Set up a distributed service bus (NServiceBus);

- Before deploying to production, automatically back-up the database;

- Set up an empty API that connects to an empty database;

- Before deploying to production, automatically run integration tests on API;

- Create and deploy an empty Angular website that connects with an empty API to show the version of the deployed release;
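The pipeline items above imply an ordering in which each automated check gates the next step, so a failing test blocks the deployment. Below is a minimal Python sketch of that gating logic, assuming each step simply reports success or failure. The step names and the `run_pipeline` helper are hypothetical, the relative order of the database backup and the integration tests is an assumption, and a real setup would express this in the build server's own configuration rather than in application code.

```python
from typing import Callable, List, Tuple

# A hypothetical pipeline step: returns True on success, False on failure.
Step = Callable[[], bool]


def run_pipeline(steps: List[Tuple[str, Step]]) -> List[str]:
    """Run steps in order and stop at the first one that fails.

    Returns the names of the steps that were executed, so the caller can
    see whether the final deployment step was ever reached.
    """
    executed: List[str] = []
    for name, step in steps:
        executed.append(name)
        if not step():
            print(f"Pipeline stopped: '{name}' failed; later steps were skipped.")
            break
    return executed


if __name__ == "__main__":
    # Illustrative steps; each lambda stands in for a real build-server task.
    steps = [
        ("build_and_run_unit_tests", lambda: True),
        ("run_integration_tests_on_api", lambda: False),  # simulate a failure
        ("back_up_production_database", lambda: True),
        ("deploy_to_production", lambda: True),
    ]
    print(run_pipeline(steps))
```

Because the deployment is just the last step in the list, it can only run when every earlier check has passed, which is what makes frequent, unattended releases safe enough to attempt.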

What we discovered during the Sprint

Setting up all the infrastructure turned out to be a bit too ambitious for a single Sprint. Together with the Product Owner, we agreed to limit ourselves to two servers for now. Once a first version of the deployment pipeline was working, adding more servers later would be easier anyway.

We ended up adding a logo and contact information to the website, as well as the version of the deployment in the footer. It just looked better that way.

The Sprint Review was fairly limited from the perspective of stakeholders. In terms of working software, we could only demonstrate a basic website with a logo and a footer. We did change something during the Sprint Review itself and deployed it (automatically). Beyond the working software, we also gathered a lot of useful feedback on the visual designs created for the homepage and the product page.

What we refined during the Sprint

Knowing that the coming Sprint would be spent on the homepage, our designer had already started on the visual design. We also refined the work needed for the homepage. The focus was on trimming the upcoming work to what was minimally necessary and moving everything else further down the Product Backlog.

The goal for Sprint 2: “Show top-selling products on the homepage”

For the second Sprint, we wanted to get into the design and exploration of how to present products in the webshop. With the Product Owner, we decided to go for a homepage that would only display a selection of products. Although potential customers wouldn’t be able to order anything through the shop just yet, we could at least give them the option to let us contact them. We decided to limit the product catalog to the 10 most important products, as we didn’t want to spend time on searching and navigating a larger catalog (for now). The catalog would be uploaded directly to the database, as we first wanted to learn how best to structure product data before building a management environment for it.

Below are some of the items that ended up on the Sprint Backlog:

- Create HTML-based style guide based on visual design;

- Configure Bootstrap for basic styling elements in the homepage;

- Identify the 10 most important products for our shop and gather product details & images;

- Set up and seed table for Products in the database with selected products;

- Implement a page where visitors can see details of a selected product;

- Test homepage in modern browsers;

- Implement basic “Call Me Back”-button for each product where potential customers can leave their phone number behind;

- Deploy to production (multiple servers);

What we discovered during the Sprint

During the Sprint, we discovered that the product detail page would benefit from more than three images (our initial acceptance criterion). But adding a more extensive image gallery would involve quite a lot of work. So we consulted the stakeholders and decided to stick to three for now. The option to add more images was added to the Product Backlog.

During the Sprint Review, stakeholders gave us helpful feedback on how they wanted to see the product information presented. As it turned out, the description was too long (‘I don’t have time to read the whole thing’). We also got a better sense of how to organize the product information. Although useful, these changes didn’t end up at the very top of the Product Backlog.

Architecturally, this Sprint also allowed us to verify that we could deploy the webshop to multiple servers. Each instance had its own copy of the product catalog, using a CQRS pattern to keep them synchronized. Although the use case for scaling wasn’t relevant yet, it was something that we kept in mind from the very start.
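The CQRS setup described above can be illustrated with a small in-memory sketch: a command side accepts catalog changes and publishes an event, and each webshop instance keeps its own read model that applies those events. The class names are hypothetical, and `InMemoryBus` delivers events synchronously as a stand-in for a real service bus such as NServiceBus, where delivery would be asynchronous and each instance would persist its copy in its own database.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass(frozen=True)
class ProductUpserted:
    """Event published by the command side after a product is added or changed."""
    product_id: str
    name: str
    price: float


class InMemoryBus:
    """Synchronous stand-in for a service bus: every subscriber gets every event."""

    def __init__(self) -> None:
        self._handlers: List[Callable[[ProductUpserted], None]] = []

    def subscribe(self, handler: Callable[[ProductUpserted], None]) -> None:
        self._handlers.append(handler)

    def publish(self, event: ProductUpserted) -> None:
        for handler in self._handlers:
            handler(event)


class CatalogCommandHandler:
    """Command side: validates changes and publishes events; never serves queries."""

    def __init__(self, bus: InMemoryBus) -> None:
        self._bus = bus

    def upsert_product(self, product_id: str, name: str, price: float) -> None:
        if price < 0:
            raise ValueError("price must not be negative")
        self._bus.publish(ProductUpserted(product_id, name, price))


class CatalogReadModel:
    """Query side: each webshop instance owns one local copy of the catalog."""

    def __init__(self, bus: InMemoryBus) -> None:
        self._products: Dict[str, ProductUpserted] = {}
        bus.subscribe(self._apply)

    def _apply(self, event: ProductUpserted) -> None:
        self._products[event.product_id] = event

    def get(self, product_id: str) -> Optional[ProductUpserted]:
        return self._products.get(product_id)


if __name__ == "__main__":
    bus = InMemoryBus()
    instance_a = CatalogReadModel(bus)  # e.g. webshop on server A
    instance_b = CatalogReadModel(bus)  # e.g. webshop on server B
    CatalogCommandHandler(bus).upsert_product("p-1", "Example HR product", 49.0)
    print(instance_a.get("p-1") == instance_b.get("p-1"))  # True
```

Adding a server then means adding another subscriber with its own read model, without touching the command side.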

What we refined during the Sprint

After this Sprint, we could have expanded the product catalog further. This would have allowed us to explore the usability of pagination, searching and sorting. But we knew that many useful patterns already existed for this and that they were easy to implement with our framework (Angular). Instead, we decided to tackle the more complicated ordering process, so refinement focused on identifying the work we needed for that. Our designer worked on a rough sketch of the ordering process.

The goal for Sprint 3: “A visitor can order a product”

Roughly, customers had to go through three steps to order something: 1) select a product, 2) enter address and billing information, and 3) complete the payment. We discovered several exception paths. First, some customers couldn’t pay with a credit card and required an invoice instead. Second, customers from particular countries weren’t allowed to order without approval. Third, some products were licensed per user and others per product. Finally, not all credit cards were accepted or allowed.

We decided to first implement support for credit card payments (with Stripe), as that was most commonly used. The option to receive an invoice would be implemented later. We also focused on per-product licensing to avoid the complexities of per-user licensing for now. Finally, we decided to skip a shopping basket. Our webshop effectively sold software licenses, and customers in our current sales process never purchased more than one product at a time.

Below are some of the items that ended up on the Sprint Backlog:

- When an order is placed, send an email to our sales department;

- Allow customers to pay for their order by credit card (through Stripe);

- Remember failed payments in an audit log so we can track potential abuse;

- When a user purchases a product, record their license in a separate service;

- Encrypt user-sensitive information in orders (e.g. email, address);
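One way to read the audit-log item above is as an append-only record of failed payments that can be queried for suspicious patterns. The sketch below assumes a simple rule (a hypothetical threshold of five failures per customer within one hour) purely for illustration; the post does not say which rule the team used, and a real log would live in durable storage rather than in memory.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List


@dataclass(frozen=True)
class FailedPayment:
    customer_id: str
    reason: str  # e.g. the decline reason reported by the payment provider
    occurred_at: datetime


class PaymentAuditLog:
    """Append-only, in-memory record of failed payments (illustration only)."""

    def __init__(self) -> None:
        self._entries: List[FailedPayment] = []

    def record(self, entry: FailedPayment) -> None:
        # Entries are only ever appended, never updated or deleted.
        self._entries.append(entry)

    def failures_since(self, customer_id: str, since: datetime) -> int:
        return sum(
            1
            for e in self._entries
            if e.customer_id == customer_id and e.occurred_at >= since
        )

    def is_suspicious(
        self,
        customer_id: str,
        now: datetime,
        window: timedelta = timedelta(hours=1),  # hypothetical window
        threshold: int = 5,  # hypothetical threshold
    ) -> bool:
        return self.failures_since(customer_id, now - window) >= threshold


if __name__ == "__main__":
    log = PaymentAuditLog()
    now = datetime.now(timezone.utc)
    for minutes_ago in range(5):
        log.record(FailedPayment("customer-1", "card_declined", now - timedelta(minutes=minutes_ago)))
    print(log.is_suspicious("customer-1", now))  # True
```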

What we refined during the Sprint

With a rough, but working, ordering process in place we wanted to extend the product catalog to draw in more customers to actually order something. Not only was this important from a business perspective, but it also gave us more opportunities to learn how people used the ordering process. We knew we could make good use of this information to inform our work in upcoming Sprints. Again, the designer worked one Sprint ahead by designing the extended product portfolio.

What we discovered during the Sprint

We learned a lot about the ordering process by implementing it. For example, we discovered more unhappy paths than we initially expected. As it turned out, some credit cards took a day to validate. Although this was rare, we needed a mechanism to handle it while still allowing the user to complete their purchase. We also ran into the challenge of testing this process without real credit cards. Thankfully, Stripe supported several fake credit cards for testing purposes, but we had to support them in our flow as well.
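A common way to handle payments that are only confirmed later is to model the order's payment status as a small state machine, with an explicit pending state that lets the customer finish checkout while the license waits for confirmation. The sketch below is a hypothetical illustration of that idea, not the team's implementation; the state names are made up, and the rule that a license is only activated once payment is confirmed is an assumption.

```python
from enum import Enum
from typing import Dict, Set


class PaymentStatus(Enum):
    AWAITING_PAYMENT = "awaiting_payment"
    PENDING_VALIDATION = "pending_validation"  # e.g. a card that takes a day to validate
    PAID = "paid"
    FAILED = "failed"


# Allowed transitions; PAID and FAILED are final states.
TRANSITIONS: Dict[PaymentStatus, Set[PaymentStatus]] = {
    PaymentStatus.AWAITING_PAYMENT: {
        PaymentStatus.PENDING_VALIDATION,
        PaymentStatus.PAID,
        PaymentStatus.FAILED,
    },
    PaymentStatus.PENDING_VALIDATION: {PaymentStatus.PAID, PaymentStatus.FAILED},
    PaymentStatus.PAID: set(),
    PaymentStatus.FAILED: set(),
}


class Order:
    def __init__(self, order_id: str) -> None:
        self.order_id = order_id
        self.status = PaymentStatus.AWAITING_PAYMENT

    def transition(self, new_status: PaymentStatus) -> None:
        if new_status not in TRANSITIONS[self.status]:
            raise ValueError(f"cannot go from {self.status.name} to {new_status.name}")
        self.status = new_status

    def checkout_complete(self) -> bool:
        # The customer sees a confirmation page for paid *and* pending orders.
        return self.status in (PaymentStatus.PAID, PaymentStatus.PENDING_VALIDATION)

    def license_active(self) -> bool:
        # Assumption: the license is only activated once payment is confirmed.
        return self.status is PaymentStatus.PAID


if __name__ == "__main__":
    order = Order("order-1")
    order.transition(PaymentStatus.PENDING_VALIDATION)
    print(order.checkout_complete(), order.license_active())  # True False
    order.transition(PaymentStatus.PAID)  # e.g. when confirmation arrives a day later
    print(order.checkout_complete(), order.license_active())  # True True
```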

In terms of technology, we discovered that the sequential ordering flow (click to order, customer information, billing information, confirmation) didn’t always play well with the asynchronous nature of the services running behind it. The credit-card case above was one example, but we ran into others. For instance, a separate service kept track of licenses, and that service was updated asynchronously. So technically, it was possible to purchase the same license twice if you were fast enough. We temporarily worked around the problem in this Sprint and added an item near the top of the Product Backlog to find a more robust solution later.
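The duplicate-license problem is a classic check-then-act race against an eventually consistent view: the check reads a copy that has not yet processed the first purchase. The sketch below reproduces that race deterministically with a hypothetical `StaleLicenseView`, then shows one common remedy, an atomic reservation keyed on customer and product on the write side. The post does not say which solution the team eventually chose, so treat this remedy as one option among several (a unique constraint in the database would be another).

```python
import threading
from typing import List, Set, Tuple

Key = Tuple[str, str]  # (customer_id, product_id)


class StaleLicenseView:
    """Read model that only sees a license after its event has been processed."""

    def __init__(self) -> None:
        self._licenses: Set[Key] = set()
        self._queued: List[Key] = []

    def owns(self, customer_id: str, product_id: str) -> bool:
        return (customer_id, product_id) in self._licenses

    def enqueue_license(self, customer_id: str, product_id: str) -> None:
        # In production this would be an asynchronous message to the license service.
        self._queued.append((customer_id, product_id))

    def process_queue(self) -> None:
        self._licenses.update(self._queued)
        self._queued.clear()


def checkout_with_stale_check(view: StaleLicenseView, customer_id: str, product_id: str) -> bool:
    """Check-then-act against the stale view: vulnerable to duplicates."""
    if view.owns(customer_id, product_id):
        return False
    view.enqueue_license(customer_id, product_id)
    return True


class LicenseReservations:
    """Write-side guard: the check and the reservation happen atomically."""

    def __init__(self) -> None:
        self._reserved: Set[Key] = set()
        self._lock = threading.Lock()

    def try_reserve(self, customer_id: str, product_id: str) -> bool:
        key = (customer_id, product_id)
        with self._lock:
            if key in self._reserved:
                return False
            self._reserved.add(key)
            return True


if __name__ == "__main__":
    view = StaleLicenseView()
    # Two fast clicks before the license service has caught up: both succeed.
    print(checkout_with_stale_check(view, "c1", "p1"), checkout_with_stale_check(view, "c1", "p1"))  # True True

    reservations = LicenseReservations()
    print(reservations.try_reserve("c1", "p1"), reservations.try_reserve("c1", "p1"))  # True False
```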

The goal for Sprint 4: “Extend product catalog to more products”

Now that we had a basic ordering process in place, the Product Owner felt the most valuable next step would be to add other products and add-ons to the catalog. Although the ordering process could definitely use work to support more use cases, it did work in its current form. The Product Owner also felt more uncertainty about how best to present our full range of products, modules and add-ons (30-something in total).

Below are some of the items that ended up on the Sprint Backlog:

- Gather product information about our full range (pictures and descriptions);

- Allow visitors to search the catalog by keyword and type of product;

- Present only 10 products at a time, allowing visitors to navigate back and forth between pages;

- Extend the number of pictures to be shown per product to 10 (instead of three);

- Fix a breaking issue in the ordering process (not related to Sprint Goal, but important enough);
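The search and pagination items above boil down to filtering the catalog by keyword and type, then slicing the result into pages of 10. The Python sketch below only illustrates that logic; where it actually ran in the product is not covered here, and the `Product` fields and type names such as "add-on" are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

PAGE_SIZE = 10  # "Present only 10 products at a time"


@dataclass(frozen=True)
class Product:
    name: str
    product_type: str  # hypothetical values: "product", "module", "add-on"
    description: str


def search_catalog(
    products: Sequence[Product],
    keyword: str = "",
    product_type: Optional[str] = None,
    page: int = 1,
    page_size: int = PAGE_SIZE,
) -> Tuple[List[Product], int]:
    """Filter by keyword and type, then return one page plus the total page count."""
    if page < 1:
        raise ValueError("page numbers start at 1")
    needle = keyword.casefold()
    matches = [
        p
        for p in products
        if (not needle or needle in p.name.casefold() or needle in p.description.casefold())
        and (product_type is None or p.product_type == product_type)
    ]
    total_pages = max(1, -(-len(matches) // page_size))  # ceiling division
    start = (page - 1) * page_size
    return matches[start : start + page_size], total_pages


if __name__ == "__main__":
    catalog = [
        Product(f"Item {i}", "add-on" if i % 5 == 0 else "product", "HR software")
        for i in range(25)
    ]
    first_page, pages = search_catalog(catalog)
    print(len(first_page), pages)  # 10 3
```

Returning the total page count alongside the page keeps the "back and forth" navigation simple: the caller knows when to disable the next and previous buttons.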

What we discovered during the Sprint

The diversity of add-ons was much larger than expected. So we worked with the Product Owner to add the most important ones now and move the others to the Product Backlog. We used (admittedly rough) sales data to guide this decision.

The Sprint Review was used to let stakeholders perform a purchase (with a fake credit card) on a product with a selection of add-ons. We simply handed them the mouse and keyboard. This actually gave some good insights into potential improvements in the user experience, as people didn’t know what to do at the end of the process. One developer added animated confetti at the end of the ordering process to celebrate the order, which was a nice touch that stakeholders appreciated.

The flow of Sprint Goals

So what happened after the first couple of Sprints? I won’t bother you with all the details — this post is already too long — but these are some of the goals we worked on:

- “Make product catalog manageable for our sales department”;

- “Allow customers to log in to the various products with OpenID (single sign-on)”;

- “Create a dashboard with basic widgets for the most common two platforms”;

- “After purchase, guide customers through the set-up and configuration of [Product A]”;

- “After purchase, guide customers through the set-up and configuration of [Product B]”;

- “Allow users to order by using pre-paid credits”;

- “Translate user-facing sites and messages to the user’s language”;

I won’t presume that these are all excellent Sprint Goals. Yet, all of them offered direction to the work of the Development Team during the Sprint. Each goal helped us select work from, and re-order, the Product Backlog as needed.

The flow behind these goals differs from what would happen if a release to production took place only at the end. In that case, development teams would probably start with the infrastructure, architecture, login mechanisms and support for globalization before moving on to the webshop and dashboards. Instead of focusing on what is most valuable right now, teams would focus on what is technically the most challenging (or the most interesting).

Closing Words: A Trade-Off Between Value And Learning

It is clear from the goals and the reasoning behind them that we constantly balanced two related needs: how can we deliver the most value now? And how can we learn the most about what else is needed? In exceptional cases, we used Sprint Goals to make our work in the near future easier, as we did in the first Sprint.

So did the Sprint Goal come first, or the Sprint Backlog? As you can see, it wasn’t black-and-white. There was always an ongoing conversation between the Product Owner and the Development Team, usually during refinement, about which objective made the most sense for the next Sprint. That guided both the formulation of the Sprint Goal and the selection of the Sprint Backlog. While selecting work and reducing the Sprint Goal to a size we could commit to, we always asked ourselves “What is the most commonly used?” and “What is the most valuable right now?”. I’ve found that asking these two questions is the best way to reduce scope to what is absolutely necessary to release after this Sprint. Everything else can be done later.