Before we begin, let’s do introductions. In our recent Product Days session, AI / Governance: A Two-Way Street, our host François Sergot, Product Manager at Dataiku, met with Aaron Kalb, Co-Founder and CDAO at Alation, to discuss a hot topic in the data science community: AI and data governance. This blog post recaps the key takeaways and insights from the presentation.

Dataiku, Data Governance, and Alation

Dataiku is working to cross the chasm from visionary to mainstream machine learning, and in the pursuit of Everyday AI adoption, topics such as data governance now demand greater attention. This is where the conversation with Alation comes into play. Alation is a Dataiku partner that brings state-of-the-art data documentation and data cataloging to the data process, enabling successful AI systems. Dataiku supports end-to-end data efforts, from data preparation, training, and deployment all the way through monitoring and MLOps. Together, the two systems give organizations an elegant and collaborative approach to data management and data science.

Drumroll Please...

As you have probably already guessed from the title, today’s topic is data governance and AI, but also AI and data governance. Confused? Don’t worry. We will explain. The two-way street that Kalb describes comes down to this: good AI enables good data governance and, in turn, good data governance enables desirable AI outcomes. In other words, AI is necessary for effective data intelligence, and good data intelligence leads to things like efficient and beneficial AI automation. The two (AI and data governance) work hand in hand at all times. It’s a mutualistic relationship.

Let’s Dig Deeper on That “Data Intelligence” Part

At Alation, success is measured through customer impact, so Kalb references a popular customer story with Pfizer. In regard to Pfizer’s analytics workbench, there are three main players: Alation, Tableau, and Dataiku. Each player provides a unique and necessary leg of the overall data process at Pfizer. Dataiku supports data preparation, model training, and deployment into production. Tableau provides data visualization tooling, and Alation is where data intelligence comes into the picture.

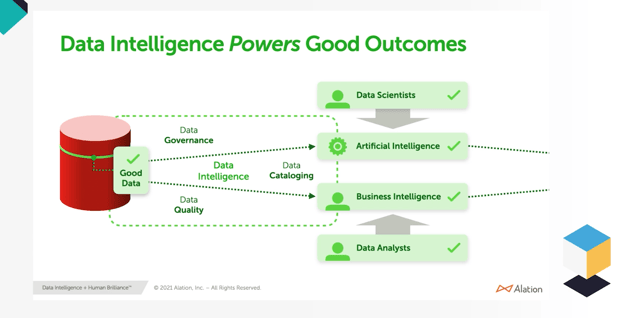

Intelligence, by definition, is the ability to gain and apply learned knowledge and skills. Speaking in terms of data, this is an input-output process. Effective data intelligence is a necessity because AI and BI are only as good (or as bad) as their input. No matter the high complexity and world-class reputation of the algorithms being used, if said algorithms are fed with faulty dataflows, negative outcomes and setbacks will be the output.

The end goal is to banish black-box barriers so that the underlying potential of data is clearly visible and can produce smart and ethical outcomes, which in turn drive enterprise-wide innovation. The ongoing search for correct and beneficial data is referred to as data intelligence. A diverse suite of technologies and techniques makes up data intelligence: data governance, data cataloging, and data quality. Alation covers all of these.

One way to think of data intelligence is like a layered map.

The top layer is metadata extraction. Imagine this as the big picture, or a zoomed-out map to begin with. We now want to take a closer look and figure out exactly what humans can do, and are doing, with the data in our metaphorical map. To do so, we begin the data intelligence process: data governance, data cataloging, and data quality initiatives paint a clear and engaging picture of the data we are looking at.

These data intelligence steps include data sampling (our top physical layer) and auto-titling (the underlying layer of ML and natural language processing). This investigation continues on to query log ingestion which examines data in a behavioral context and, finally, we assign popularity to certain aspects of the data. For example, we can pick out top users to choose stewards and even determine common filters in order to extract useful insights for AI applications.
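To make the query log and popularity steps a little more concrete, here is a minimal sketch in Python. It is not Alation's implementation. The log format, the table and user names, and the regex-based parsing are all assumptions made for illustration, and a real catalog would use a proper SQL parser rather than regular expressions.

```python
import re
from collections import Counter, defaultdict

# Hypothetical query log: (user, SQL text) pairs. Real logs carry far more detail.
QUERY_LOG = [
    ("ana", "SELECT * FROM sales WHERE region = 'EU'"),
    ("ana", "SELECT id FROM sales WHERE region = 'US' AND year = 2021"),
    ("ben", "SELECT * FROM sales WHERE year = 2020"),
    ("ana", "SELECT * FROM customers WHERE country = 'FR'"),
]

# Deliberately naive patterns: first table after FROM, and any column name
# that appears directly before a comparison operator in the WHERE clause.
TABLE_RE = re.compile(r"\bFROM\s+(\w+)", re.IGNORECASE)
WHERE_RE = re.compile(r"\bWHERE\b", re.IGNORECASE)
FILTER_RE = re.compile(r"\b(\w+)\s*(?:=|<|>)")


def summarize_query_log(log):
    """Count, per table, who queries it and which columns are filtered on."""
    top_users = defaultdict(Counter)
    common_filters = defaultdict(Counter)
    for user, sql in log:
        table_match = TABLE_RE.search(sql)
        if not table_match:
            continue  # skip statements this simple parser cannot read
        table = table_match.group(1).lower()
        top_users[table][user] += 1
        parts = WHERE_RE.split(sql, maxsplit=1)
        if len(parts) == 2:
            for column in FILTER_RE.findall(parts[1]):
                common_filters[table][column.lower()] += 1
    return top_users, common_filters


top_users, common_filters = summarize_query_log(QUERY_LOG)

# The most active user of a table is a natural candidate for data steward.
for table, users in top_users.items():
    print(table, "steward candidate:", users.most_common(1)[0][0])
    print(table, "common filters:", common_filters[table].most_common(2))
```

Even in this toy form, the behavioral signal is visible: the counts say who knows a table best and which columns people actually care about.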

These are not simple tasks, and the scale and complexity of datasets demand a higher level of sophistication, so Alation helps organizations get data documentation done efficiently through the use of AI tools. Fun fact: many organizations have over ten million fields in their data warehouses. So, as you can imagine, it is essentially impossible to sort through all of the data by hand.

Data Intelligence Examples

Kalb gives us two examples to help us understand data intelligence in practice. The first example is defining race in datasets for credit scoring. Here, we can see how small changes in the data input lead to very different outcomes.

Is using the race field mandatory, optional, or forbidden? It depends. Lack of race definitions in data can lead to a lack of awareness of systematic bias, but if the data itself is not obtained using a controlled process and environment, bias is unfortunately created. The best way to mitigate the risk of these biases from both sides is to publish aggregated reports with cross tabulations and carefully inspect for anything that looks abnormal. Make sure to check for margin of error and don’t neglect to check each stage of the data acquisition and preparation process.
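As a rough illustration of the "cross tabulations plus margin of error" advice, the sketch below computes an approval rate per group with a 95% margin of error. The group labels and counts are made up, and the normal-approximation formula and the "gap larger than both margins combined" rule are simplifying assumptions, not a formal statistical test and not something prescribed in the session.

```python
import math
from collections import defaultdict


def approval_crosstab(records, z=1.96):
    """Return per-group sample size, approval rate, and margin of error.

    records: iterable of (group, approved) pairs, where approved is a bool.
    z=1.96 corresponds to a 95% confidence level (normal approximation).
    """
    counts = defaultdict(lambda: [0, 0])  # group -> [approved, total]
    for group, approved in records:
        counts[group][0] += int(approved)
        counts[group][1] += 1
    report = {}
    for group, (approved, total) in counts.items():
        rate = approved / total
        margin = z * math.sqrt(rate * (1 - rate) / total)
        report[group] = {"n": total, "rate": rate, "margin": margin}
    return report


def gap_exceeds_margins(report, group_a, group_b):
    """Conservative heuristic: flag a gap larger than both margins combined."""
    a, b = report[group_a], report[group_b]
    return abs(a["rate"] - b["rate"]) > a["margin"] + b["margin"]


# Hypothetical aggregated data: 80/100 approvals vs. 50/100 approvals.
records = (
    [("group_a", True)] * 80 + [("group_a", False)] * 20
    + [("group_b", True)] * 50 + [("group_b", False)] * 50
)
report = approval_crosstab(records)
for group, row in sorted(report.items()):
    print(f"{group}: n={row['n']} rate={row['rate']:.2f} +/- {row['margin']:.3f}")
print("needs a closer look:", gap_exceeds_margins(report, "group_a", "group_b"))
```

A flagged gap is a prompt to go back through each stage of data acquisition and preparation, not proof of bias on its own.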

The next example provided by Kalb illustrates how unchecked non-response bias in dataflows can harm AI applications down the line. In this specific example, a respondent neglects to properly report their date of birth (DOB), which is later used as a key metric in AI-generated marketing.

The lack of response led to the social messaging being sent to the wrong demographic.
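One simple guard against this kind of problem is to measure how often the DOB field is effectively unanswered before anything downstream relies on it. The sketch below is an assumption-laden example: the row format, the `dob` field name, and the list of placeholder values that stand in for "no answer" are all hypothetical.

```python
from datetime import date

# Assumed sentinel values that systems sometimes store when DOB is not given.
PLACEHOLDER_DOBS = {"", "1900-01-01", "0000-00-00"}


def parse_dob(raw):
    """Return a date, or None if the value is missing, a placeholder, or invalid."""
    if raw is None or raw.strip() in PLACEHOLDER_DOBS:
        return None
    try:
        return date.fromisoformat(raw.strip())
    except ValueError:
        return None


def dob_nonresponse_rate(rows):
    """Share of rows with no usable date of birth."""
    if not rows:
        return 0.0
    missing = sum(1 for row in rows if parse_dob(row.get("dob")) is None)
    return missing / len(rows)


rows = [
    {"id": 1, "dob": "1985-04-12"},
    {"id": 2, "dob": ""},
    {"id": 3, "dob": None},
    {"id": 4, "dob": "1900-01-01"},  # placeholder, treated as non-response
    {"id": 5, "dob": "1992-11-30"},
]
rate = dob_nonresponse_rate(rows)
print(f"DOB non-response rate: {rate:.0%}")

# Only target by age where a real DOB exists, instead of trusting a default.
targetable = [row["id"] for row in rows if parse_dob(row["dob"]) is not None]
print("rows safe for age-based targeting:", targetable)
```

Publishing a number like this in the catalog entry for the field makes the gap visible to whoever builds the marketing model later.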

The best thing to do to prevent this scenario is to have a selective and particular prediction system in place. The problem is that these biases are not always easily evident in vast amounts of data. You need a clear data catalog for all of your data in order to point out these problem areas quickly. Creating this kind of catalog is not an easy feat. Luckily, AI can assist here. AI has the capability of tracking all of the little footprints made on our data map. Alation believes that curators can write and then computers can delegate, prioritize, propagate, and automate data documentation end-to-end.

What Goes Into Data Documentation

- Delegation means getting data documentation to the right people at the correct stage of the data process. Getting key information from the data intelligence such as top users is crucial for tapping into the best talent.

- Prioritization makes sure that the data distribution across the environment is even. Unevenly distributed data projects will hurt productivity and accuracy. In contrast, prioritization boosts the impact of important data projects.

- Propagation means that a little work can go a long way. Time is a precious resource, so it pays to use smart tactics to share discoveries and to understand the lineage, or downstream effects, of changes in your dataflows. Finally, reusing data language and code throughout dataflows is a key step that benefits collaboration.

- Automation introduces ease to the process by getting data documentation done in a reasonable amount of time so that organizations can reach the business outcomes they desire on a more attractive timeline.
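To illustrate the propagation idea from the list above, here is a small sketch that walks a lineage graph and copies a curator's note to every downstream dataset. The dataset names and the dictionary-based lineage format are hypothetical, chosen only to show the mechanics, and this is not a description of how Alation or Dataiku store lineage.

```python
from collections import deque

# Hypothetical lineage: each dataset maps to the datasets built directly from it.
LINEAGE = {
    "raw_customers": ["clean_customers"],
    "clean_customers": ["churn_features", "marketing_segments"],
    "churn_features": ["churn_model_scores"],
}


def downstream(lineage, source):
    """Return every dataset reachable from source, in breadth-first order."""
    seen = {source}
    queue = deque([source])
    order = []
    while queue:
        node = queue.popleft()
        for child in lineage.get(node, []):
            if child not in seen:
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order


def propagate_note(docs, lineage, source, note):
    """Attach a note to the source and mark it as inherited downstream."""
    docs.setdefault(source, []).append(note)
    for dataset in downstream(lineage, source):
        docs.setdefault(dataset, []).append(f"(inherited from {source}) {note}")
    return docs


docs = propagate_note({}, LINEAGE, "raw_customers", "DOB is self-reported")
for dataset, notes in docs.items():
    print(dataset, "->", notes)
```

One note written by a curator ends up on five datasets, which is the "a little work goes a long way" point in miniature.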

Wrapping It Up

To recap the takeaways from the presentation: data governance is essential for scaling AI. An organization needs to trust that its data is accurate and compliant before it can be comfortable and confident in its ML models. Data governance steps like data documentation are not just a want but a need for a data process that leads to trustworthy, effective AI. If you can only remember one thing, remember that good data governance leads to good AI, and good AI enables good data governance. It’s a two-way street.