In this blog series, we bring you the cloud migration success story of one of our enterprise clients.

The groundwork for migrating our client's on-premise legacy infrastructure to Azure was laid during Phase 1 of the project. An IaC framework was developed to speed up deployment and an Azure Landing Zone was deployed during this phase.

Then came the crucial part: Application migration and, along with it, database migration. You can read all about it here.

Existing Application Landscape

Our client managed their core applications across four different environments. Some applications were publicly accessible while others were restricted to the organization's network. There were also over 50 external integration APIs.

A dependency mapping helped us unearth the services the applications relied on, including databases, on-premises file shares, and cache services. All of these had to be carefully managed during migration.
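To show how a dependency map can drive sequencing, here is a minimal Python sketch. The component names and their relationships are hypothetical, not the client's actual landscape. It uses the standard library's `graphlib` module (Python 3.9+) to produce an order in which every dependency is handled before the components that rely on it.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map: each key depends on the items in its set.
dependencies = {
    "core-app": {"database", "cache", "file-share"},
    "integration-api": {"core-app"},
    "database": set(),
    "cache": set(),
    "file-share": set(),
}


def migration_order(deps):
    """Return an order in which every dependency precedes its dependents."""
    return list(TopologicalSorter(deps).static_order())


order = migration_order(dependencies)
print(order)
```

A circular dependency makes `static_order()` raise `graphlib.CycleError`, which is itself a useful signal that two components need to move together.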

With such a heavy mandate, we set out to execute the migration.

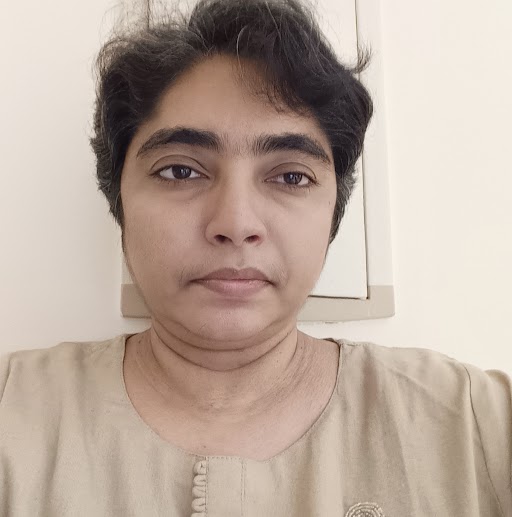

Here's a high-level view of the process we followed.

To Rehost or Rearchitect?

In our case, both these migration strategies were relevant.

We proposed rearchitecting wherever possible to capitalize on the advantages of cloud-native environments. Most components, such as the core applications and external integration APIs, clearly called for rearchitecting. Others, such as an error logging solution built on legacy open-source libraries, had to be rehosted to remain compatible with the new infrastructure.

Establishing Application Infrastructure

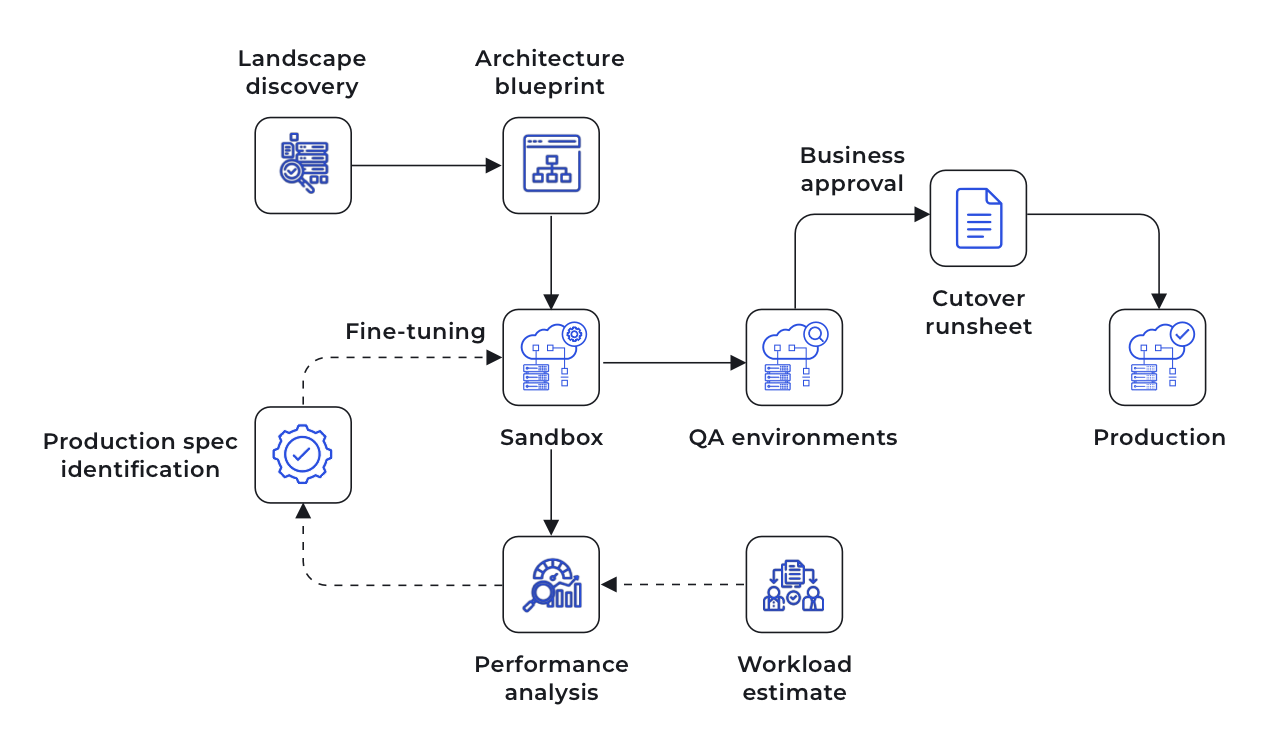

With a clear picture of the dependencies, we created a blueprint of how the applications would be set up and run in Azure. Application scalability, security, and availability were factored into the design. Adherence to the client's security best practices and Azure policies was also prioritized.

We chose containerized deployment for the core applications to make them portable and scalable, without getting locked into any service provider. After evaluating various Azure compute services, we opted for Azure Kubernetes Service (AKS) to host the core applications. We chose Azure Function Apps to host the external integration APIs.

We set up a sandbox environment based on our architecture to gauge application performance and identify potential issues. We then ran load tests in this sandbox using current business workload estimations. The results gave us the insights to tweak and perfect our architecture and infrastructure specifications.

Four discrete application environments were established in Azure. Each environment was deployed to an individual Azure subscription within the Azure Landing Zone, enabling better organization of the resources.

To efficiently manage this large infrastructure, we developed Terraform code and IaC pipelines adhering to the GitOps workflow defined in CAF. This also enabled faster infrastructure provisioning and promoted consistency across environments.

Modernizing Applications

As decided earlier, we rearchitected the applications to harness the power of the cloud.

The legacy application framework and dependent packages, which had remained unchanged for years, were upgraded to the latest stable versions. We ensured that the new application landscape aligned with recommended industry standards by adopting OpenSSL v3 and TLS 1.3. This was not an easy process, considering the incremental upgrades and open-source package customizations required to maintain existing functionality with zero regression.
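As an illustration of what enforcing TLS 1.3 can look like on the client side, here is a small Python sketch using the standard `ssl` module. It is an assumption-based example, not code from the project, and the function name `make_tls13_client_context` is ours.

```python
import ssl


def make_tls13_client_context():
    """Build a client-side context that refuses anything older than TLS 1.3."""
    # create_default_context() enables certificate and hostname verification.
    ctx = ssl.create_default_context()
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3
    return ctx


context = make_tls13_client_context()
# Shows which OpenSSL build the interpreter is linked against.
print(ssl.OPENSSL_VERSION)
```

Printing `ssl.OPENSSL_VERSION` is a quick way to confirm whether a runtime actually picked up the upgraded OpenSSL library after a dependency bump.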

We disabled the non-SSL port in Azure Cache for Redis in compliance with Azure policies and reconfigured the applications to communicate with it over SSL. We also introduced a robust authentication mechanism by integrating the applications with Microsoft Entra ID, replacing the outdated Central Authentication Service (CAS) approach.
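For the Redis change, the reconfiguration largely comes down to pointing clients at the TLS endpoint. The sketch below builds such a connection URL in Python. The cache name and access key are placeholders, and the helper `redis_tls_url` is hypothetical. It assumes the default Azure endpoint format and the default TLS port 6380 (6379 is the non-TLS port).

```python
from urllib.parse import quote

# Azure Cache for Redis accepts TLS connections on 6380; 6379 is the non-TLS port.
TLS_PORT = 6380


def redis_tls_url(cache_name, access_key, db=0):
    """Build a rediss:// URL (note the double 's', which signals TLS)."""
    host = f"{cache_name}.redis.cache.windows.net"
    # Access keys may contain '/', '+' or '=', so they must be URL-encoded.
    return f"rediss://:{quote(access_key, safe='')}@{host}:{TLS_PORT}/{db}"


# Placeholder values for illustration only.
url = redis_tls_url("example-cache", "s3cr3t/key=")
print(url)
```

Many Redis clients accept a URL of this shape directly. With the redis-py library, for example, it could be passed to `redis.from_url(url)`.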

Then, we containerized all the applications in line with industry best practices. As part of this process, we wrote Dockerfiles that use multi-stage builds to minimize image size and build time.

To migrate the external integration APIs to Azure Function Apps, we chose the Functions Premium (Elastic Premium) plan, which offers VNet integration. Considering the scalability factors and cost implications of rehosting 50+ APIs under premium plans, we rearchitected the APIs into a single dynamic proxy service. This proxy service handles requests for multiple APIs and redirects each incoming request to the respective application.
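The core of such a proxy is a routing table that maps an incoming path to the application that should serve it. The Python sketch below shows one way to do this with longest-prefix matching. The routes, hostnames, and the `resolve_upstream` helper are hypothetical, and the real service may resolve targets differently.

```python
# Hypothetical routing table: path prefix -> internal base URL.
ROUTES = {
    "/payments": "https://payments.internal.example",
    "/payments/refunds": "https://refunds.internal.example",
    "/inventory": "https://inventory.internal.example",
}


def resolve_upstream(path, routes=ROUTES):
    """Return the upstream URL for a request path, or None if nothing matches.

    Longer prefixes are checked first so the most specific route wins.
    """
    for prefix in sorted(routes, key=len, reverse=True):
        # Match the prefix exactly or at a '/' boundary, so that
        # '/paymentsx' does not match '/payments'.
        if path == prefix or path.startswith(prefix + "/"):
            return routes[prefix] + path[len(prefix):]
    return None


print(resolve_upstream("/payments/refunds/42"))
```

Because the table is plain data, adding an API becomes a configuration change rather than a new Function App, which is where the cost benefit of a single proxy comes from.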

Deployment Automation

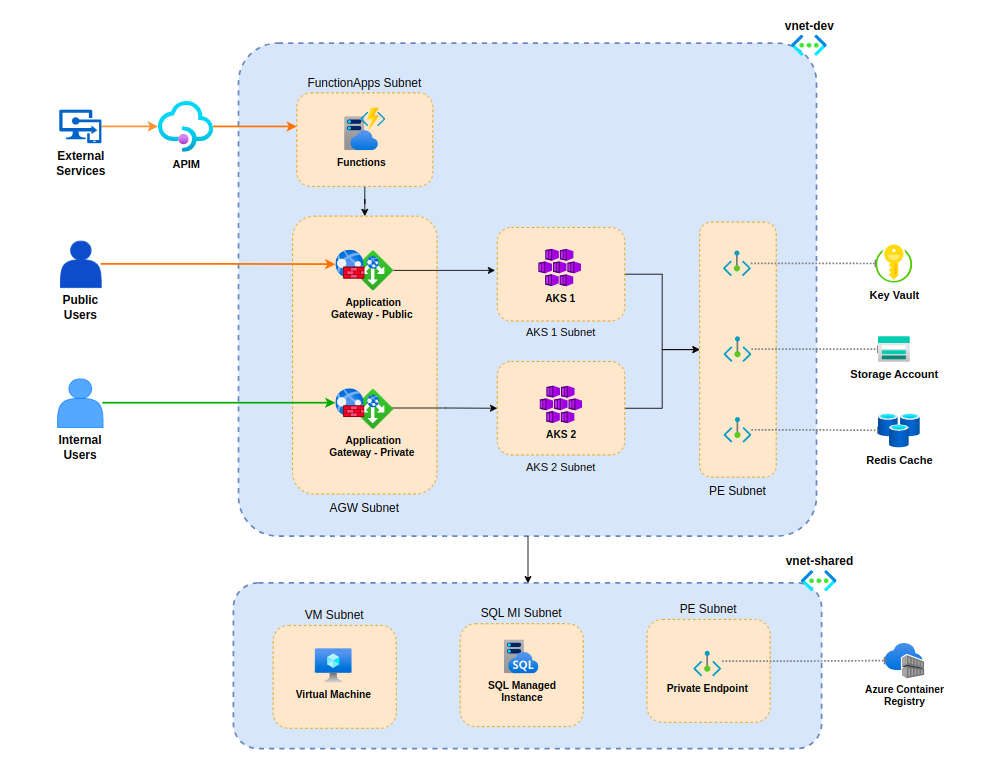

The client previously relied on GitLab and Jenkins for their development and deployment processes. To take advantage of the tight integration within the Azure ecosystem, we chose Azure DevOps to automate continuous integration and deployment.

To facilitate this transition, we developed Helm charts for efficient packaging and deployment of Kubernetes applications. This approach ensured consistency and ease of deployment across multiple environments.

Additionally, we developed YAML template-based CI/CD pipelines for each application, providing a structured framework to automate the process. These were carefully created to ensure readiness for disaster recovery scenarios. Running these pipelines in CIS-hardened self-hosted agents enabled secure and reliable application deployments to private AKS clusters.

Database Migration

A large part of application migration is database migration. We began with a detailed assessment of the existing application databases and analyzed their data types for compatibility with the latest version of SQL Server.

Our client’s data resided in on-premise MySQL and MSSQL servers, which meant separate tools would be required to execute the migration. We chose Azure SQL Managed Instance (SQL MI) as the target database because it has all the must-have features such as SQL Server Agent and copy-only user backups used in the on-premise database server.

MSSQL to Azure SQL MI Migration

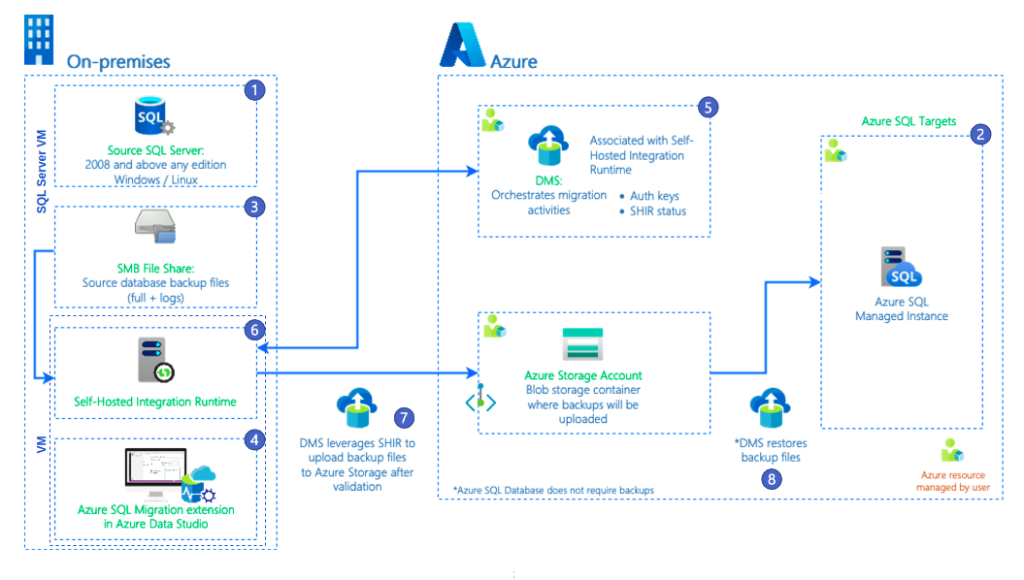

For migrating the on-premise MSSQL server to Azure SQL MI, we made use of the Azure Data Studio Migration extension. It provides compatibility checks for the on-premise databases and SKU recommendations for the target server based on the performance data from the source.

Online migration ensured our source and destination databases stayed in sync throughout the process and minimized application downtime. But the process was not without hiccups!

Network lag caused some errors when multiple log files were uploaded simultaneously. To overcome this, we resorted to manual cutover whenever the error persisted. The PowerShell commands provided by Microsoft Support came in handy.

The data migration process unfolded through a series of steps, as illustrated in the diagram above.

MySQL to Azure SQL MI Migration

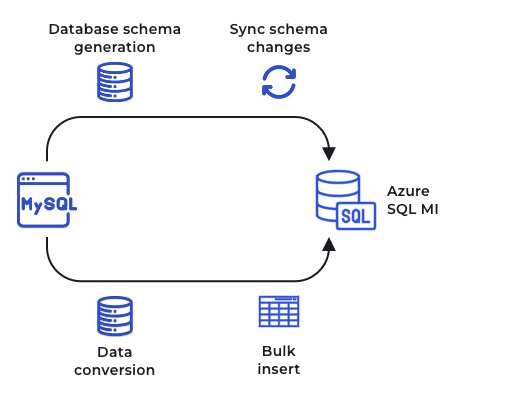

SQL Server Migration Assistant (SSMA) is the go-to tool for migrating data from MySQL to Azure SQL MI. Since it supported only offline migration, we carefully planned the migration keeping the application downtime in mind.

We experienced dropped connections while migrating large tables. This seemed to be an issue with Azure SQL MI. To overcome this, we used an intermediate SQL Server instance. The diagram above illustrates the migration process.

After completing the migration of each database, we conducted post-migration validations, cross-verifying the number of rows, indexes, primary keys, and constraints in both the source and target tables to ensure data integrity and completeness.
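A check like this is easy to automate once the counts have been collected from both sides. The Python sketch below compares per-table statistics and reports any differences. The `TableStats` structure, the table names, and the numbers are illustrative assumptions. Gathering the counts from MySQL and SQL MI is left out.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class TableStats:
    rows: int
    indexes: int
    primary_keys: int
    constraints: int


def find_mismatches(source, target):
    """Return {table: {field: (source_value, target_value)}} for every difference."""
    mismatches = {}
    for table, src in source.items():
        tgt = target.get(table)
        if tgt is None:
            # The table never arrived in the target database.
            mismatches[table] = {"table": ("present", "missing")}
            continue
        diff = {
            f.name: (getattr(src, f.name), getattr(tgt, f.name))
            for f in fields(TableStats)
            if getattr(src, f.name) != getattr(tgt, f.name)
        }
        if diff:
            mismatches[table] = diff
    return mismatches


# Hypothetical counts collected from the source and target databases.
source_stats = {
    "orders": TableStats(rows=1200, indexes=3, primary_keys=1, constraints=4),
    "customers": TableStats(rows=300, indexes=2, primary_keys=1, constraints=2),
}
target_stats = {
    "orders": TableStats(rows=1198, indexes=3, primary_keys=1, constraints=4),
    "customers": TableStats(rows=300, indexes=2, primary_keys=1, constraints=2),
}

report = find_mismatches(source_stats, target_stats)
print(report)
```

An empty report means the structural counts line up. It does not prove the row contents are identical, so checksums or sampled comparisons remain a sensible complement.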

We didn’t want to leave anything to chance!

Production Cutover

As we approached the end of our application migration journey, we had to finalize the production cutover date, our make-or-break moment!

We reached out to all the stakeholders of the applications and agreed on a cutover date that would minimize the impact on business continuity. We prepared a run sheet outlining the individual tasks to be performed as part of the cutover. As a precautionary measure, we prepared rollback plans for as many tasks as possible to address unforeseen challenges.

To begin with, we verified that the production infrastructure was healthy. Then, we initialized the database synchronization and gracefully stopped the on-premises applications, draining the database connections to prevent data loss or corruption.

With the databases synchronized, we executed the database cutover, officially transitioning to Azure SQL MI. Next, we commenced application deployment to AKS, bringing the latest version of the cloud-based applications to life.

Finally, we conducted rigorous business verification testing to validate the functionality and stability of the migrated applications.
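The run sheet idea, ordered tasks with rollbacks where they exist, can be expressed compactly in code. The Python sketch below is an illustrative model rather than the tooling used on the day. The step names echo the sequence above, the actions are stand-in functions, and the last step is made to fail on purpose to show the rollback path.

```python
def run_cutover(steps):
    """Run steps in order; on failure, roll back completed steps in reverse.

    Each step is a (name, action, rollback) tuple; rollback may be None
    for tasks that have no rollback plan.
    """
    completed = []
    log = []
    for name, action, rollback in steps:
        try:
            action()
        except Exception as exc:
            log.append(f"failed: {name} ({exc})")
            for done_name, undo in reversed(completed):
                if undo is not None:
                    undo()
                    log.append(f"rolled back: {done_name}")
            return False, log
        log.append(f"done: {name}")
        completed.append((name, rollback))
    return True, log


def simulated_failure():
    # Stand-in for a task that goes wrong during the cutover window.
    raise RuntimeError("deployment error")


# Hypothetical run sheet; the lambdas stand in for real tasks.
steps = [
    ("verify infrastructure health", lambda: None, None),
    ("stop on-premises applications", lambda: None, lambda: None),
    ("cut over database", lambda: None, lambda: None),
    ("deploy applications to AKS", simulated_failure, None),
]

ok, log = run_cutover(steps)
print(ok)
print("\n".join(log))
```

Rolling back in reverse order matters: the database cutover is undone before the on-premises applications are restarted, mirroring the order in which the tasks were applied.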

And just like that, we successfully crossed the finish line of our migration journey, ensuring minimal disruption and downtime!