In 2006, Clive Humby, a mathematician and data scientist, said, “Data is the new oil.” Today, data obsession is all the rage, as all businesses struggle to get information. However, unlike oil, data is worthless unless you can make sense of it.

Dedicated data engineering and data science practitioners are today’s gold miners who find new methods to collect, process, and store data.

Businesses implement these methods using specific tools and practices to generate valuable insights. One of the most common ways enterprises leverage data is business intelligence (BI), a set of practices and technologies that transform raw data into actionable information.

Data is used for various purposes, such as performing analytics or creating machine learning models, but it can’t be applied in its raw format. Any system dealing with data processing requires moving information from storage and transforming it into something that people or machines can utilize. This process is known as Extract, Transform, Load, or ETL. Usually, it is carried out by an ETL developer – a specific type of engineer.

In this article, we will discuss the role of an ETL developer in a data engineering team. We will cover their main responsibilities, job description, and skills while debunking common misinterpretations of an ETL developer and related roles.

What is an ETL developer?

ETL is an abbreviation that stands for extract, transform, and load. An ETL developer is a software engineer who manages the Extract, Transform, and Load processes, implementing technical solutions for these operations.

ETL pipeline

To move data from one system to another, an ETL developer builds a specific data pipeline that covers the Extract, Transform, and Load stages mentioned above.

Extract. Businesses store historical information or stream real-time data into many systems. This information is scattered across different software and is structured in various formats. The extraction phase entails defining required data sources, whether it is an ERP, CRM, or third-party system, and gathering data from them.

Transform. When data is gathered from its sources, it’s usually placed in a temporary storage area called a staging area. While in this area, the data is formatted according to defined standards and models. For example, financial numerics in different formats, $34.50, 0.90 cents, and $01,65, will be changed into a single coherent format: $34.50, $0.90, $1.65.

Load. The final stage of an ETL process is loading the structured and formatted data into a database.

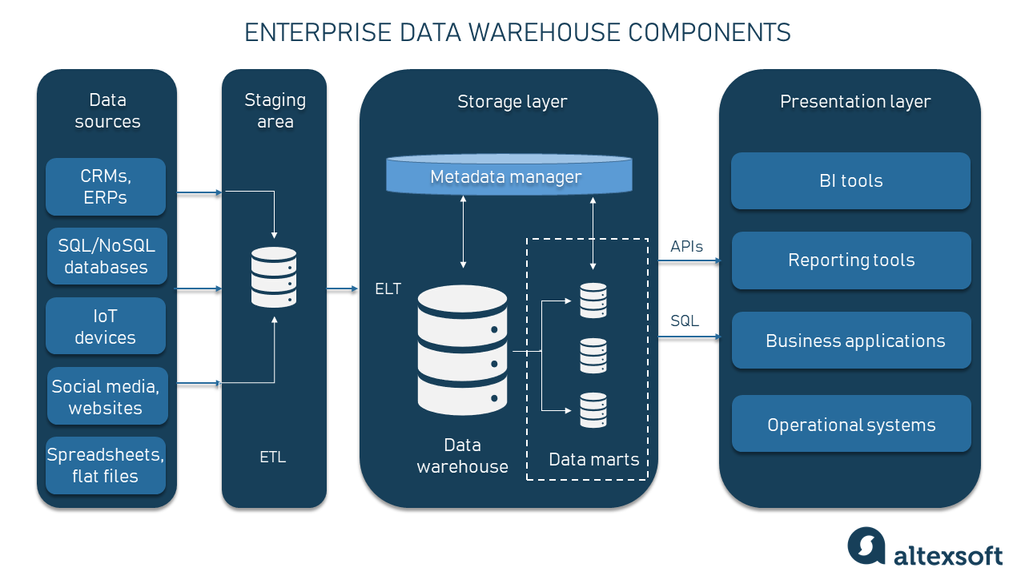
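The three stages above can be sketched in a few lines of Python. This is a minimal illustration rather than a production pipeline: the two CSV exports, the `payments` table, and all function names are hypothetical, and an in-memory SQLite database stands in for the target system. The normalization rule simply follows the example above, so a comma is treated as a decimal separator and the "cents" suffix is ignored.

```python
import csv
import io
import re
import sqlite3
from decimal import Decimal

# Hypothetical exports; in practice these would come from an ERP, CRM, etc.
CRM_EXPORT = 'customer,amount\nAcme,$34.50\nGlobex,0.90 cents\n'
ERP_EXPORT = 'customer,amount\nInitech,"$01,65"\n'


def extract(*sources):
    """Extract: gather rows from every source into one list of dicts."""
    rows = []
    for text in sources:
        rows.extend(csv.DictReader(io.StringIO(text)))
    return rows


def normalize_amount(raw):
    """Bring differently formatted amounts to a single $0.00 format."""
    # Keep digits and separators only, then treat a comma as a decimal point
    # (an assumption that matches the example in the text).
    cleaned = re.sub(r"[^0-9.,]", "", raw).replace(",", ".")
    return f"${Decimal(cleaned).quantize(Decimal('0.01'))}"


def transform(rows):
    """Transform: format each row according to the agreed standard."""
    return [(row["customer"], normalize_amount(row["amount"])) for row in rows]


def load(conn, records):
    """Load: write the formatted records into the target database."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS payments (customer TEXT, amount TEXT)"
    )
    conn.executemany("INSERT INTO payments VALUES (?, ?)", records)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(conn, transform(extract(CRM_EXPORT, ERP_EXPORT)))
result = conn.execute(
    "SELECT customer, amount FROM payments ORDER BY customer"
).fetchall()
print(result)
```

Keeping each stage in its own function mirrors the way the stages are described separately, and makes it possible to test or replace one stage without touching the others.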

If the amount of data is small, any kind of database can be used. A specific type of database needed for BI, big data analytics, and machine learning is called a Data Warehouse. It may include several tools to represent data from multiple dimensions and make it accessible for each user.

Data representation or BI tools are connected to a warehouse so that users can pull data out and manipulate it. These representation tools are the actual BI tools that offer analytical data through interactive dashboards and reporting instruments.

ETL developer as part of a team

Usually, an ETL developer is part of a data engineering team — the cool kids on the block in charge of data extraction, processing, storage, and maintenance of the corresponding infrastructure. Their main task is to obtain raw data, decide how it should look, make it consumable, and then store it somewhere.

The team roster depends on the project's scope, goals, steps of data processing, and required technologies. Thus, the data engineering team may include the following roles:

- Data architect. Their role is to decide on a data tech stack and project the infrastructure that data engineers will develop.

- Data engineer. This type of software engineer develops interfaces and the ecosystem to gain access to the information.

- Data analyst. This team member defines data collection methods, data models, and types and outlines the transformation process.

- Database/Warehouse developer. This expert is responsible for modeling, developing, and maintaining data storage, be it a common SQL database or a data warehouse.

- DBA or Database Administrator. This person is in charge of database management if there are multiple databases or the structure of a DB/warehouse is as complex as rocket science.

- Data scientist. Projects dealing with machine learning also include a data science specialist or even a dedicated data science team.

- Business intelligence developer. This is a software engineer who focuses on developing BI interfaces.

- ETL developer. As we said, it’s a software engineer who develops/manages the corresponding infrastructure for the Extract, Transform, and Load stages of data processing.

ETL developer vs data engineer

These two professions are often confused. Indeed, a data engineer can combine both functions in a small company, but for a large team and a huge amount of data, it makes sense to hire separate specialists for these positions.

An ETL developer’s job mainly revolves around data integration and building ETL pipelines to convert the data into the required format and move it from the original source to the destination system. Their day-to-day tasks may involve writing code, though not necessarily — sometimes, it’s enough to master ETL tools that have a graphical interface.

On the other hand, data engineers solve more strategic problems by dealing with the overall data ecosystem, big data technologies, and cloud computing. They design, build, and optimize systems to collect, store, scale, access, and analyze data.

A data engineer may also perform management duties, lead teams, and assign projects to ETL developers. Therefore, a data engineer can be the next career step for an ETL developer.

ETL developer job description: responsibilities and specific tasks

In a BI project, an ETL developer is one of the key engineering roles. While the primary responsibility is to take charge of the Extract, Transform, and Load stages, an ETL developer also performs tasks connected with data analytics, testing, and system architecture. We will briefly cover the main ones:

- ETL process management,

- data modeling,

- data warehouse architecture,

- ETL tools implementation and data pipeline development, and

- ETL testing.

Now, let’s talk about each point in detail.

ETL process management

ETL is one of the key stages in data processing. It uses both methodologies and technologies. The main task of an ETL developer, or a dedicated team of developers, is to:

- outline the ETL process, setting the borders of data processing;

- provide system architecture for each element and the whole data pipeline;

- document the requirements of the system and manage its development;

- take part in the actual development/implementation of ETL tools; and

- conduct testing of the tools and data pipelines.

So, an ETL developer can be an engineer, tech lead, project manager, or QA of the ETL process, depending on the scope of the system. ETL developers often collaborate with other team members to get information about business requirements or end-user needs. It takes a proper understanding of what data formats are required, how the data should be loaded (in portions or with dynamic updates), and how it will be represented in a warehouse (OLAP cubes, SQL, NoSQL, data marts, etc.).

We contacted Wayne Yaddow, a Data Quality Trainer and BI consultant, to elaborate on ETL developer duties. Wayne suggests numerous points to consider.

An ETL developer is in charge of analyzing and interpreting data models and resolving data issues in collaboration with data analysts. All system designs and publication activities pass the validation of an ETL developer. Consider requirement validation, conducting user interviews with system users and developers, and monitoring business requirements for the ETL process.

Data modeling

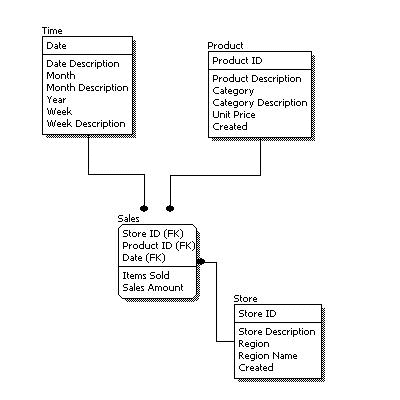

An ETL developer should define the required formats before the data is extracted from its sources. The end formats of data that will be represented in the warehouse (and user interface) are called data models.

Logical data model example. Source: 1keydata.com

Data models are constructed and documented by collaborating with business analysts, data analysts, and data scientists. An ETL developer will use the models to define the transformation stage and underlying technologies that will perform formatting.
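As a rough illustration of how a documented data model can drive the transformation stage, the sketch below declares a target model as a Python dataclass and derives the type conversions from its fields. The `Sale` model, its field names, and the `CONVERTERS` table are all hypothetical; a real project would more likely express the model in a data modeling tool or a warehouse schema.

```python
from dataclasses import dataclass, fields
from datetime import date


@dataclass(frozen=True)
class Sale:
    """Hypothetical target model for one row in the warehouse."""
    sale_id: int
    customer: str
    sold_on: date
    amount_cents: int


# How to turn a raw string into each field type used by the model.
CONVERTERS = {int: int, str: str.strip, date: date.fromisoformat}


def to_model(raw):
    """Coerce a raw record (all strings) into the Sale model.

    Keys that are not part of the model are ignored.
    """
    values = {f.name: CONVERTERS[f.type](raw[f.name]) for f in fields(Sale)}
    return Sale(**values)


raw_record = {
    "sale_id": "7",
    "customer": " Acme ",
    "sold_on": "2024-03-01",
    "amount_cents": "3450",
    "debug_flag": "x",  # not in the model, so it is dropped
}
sale = to_model(raw_record)
print(sale)
```

Because the conversions are looked up from the model's own field types, changing the model automatically changes what the transformation step produces.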

Data warehouse architecture

A warehouse is a large storage facility used to house structured data. It’s often decomposed into smaller elements like data marts, which offer dedicated departments access to required data with specific properties. For example, if a warehouse is a large storage area with all the information gathered, data marts are smaller databases that store thematic data (accounting, website metrics, etc.).

The warehouse itself or data marts are connected to the end-user interface, helping users access the information, manipulate it, make queries, and form reports. Additionally, the data can be enriched with metadata during the formatting stage, which also involves changes in the overall warehouse architecture.

An ETL developer is responsible for defining data warehouse architecture and determining the tools to load data into it. Warehousing is a complex process; a dedicated database engineer usually develops it. However, an ETL developer can possess all the required skills and knowledge to build it.
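The relationship between a warehouse and its data marts can be sketched as follows. This is a deliberate simplification: the `facts` table, the topic names, and the `mart_*` views are hypothetical, and data marts here are modeled as SQL views inside one in-memory SQLite database, whereas real data marts are often separate databases.

```python
import sqlite3

warehouse = sqlite3.connect(":memory:")

# One central table standing in for "all the information gathered".
warehouse.execute("CREATE TABLE facts (topic TEXT, metric TEXT, value INTEGER)")
warehouse.executemany(
    "INSERT INTO facts VALUES (?, ?, ?)",
    [
        ("accounting", "invoices_paid", 120),
        ("accounting", "invoices_overdue", 7),
        ("website", "page_views", 15400),
    ],
)

# Each data mart exposes only the thematic slice a department needs.
for topic in ("accounting", "website"):
    # topic comes from the fixed tuple above, so interpolating it is safe here.
    warehouse.execute(
        f"CREATE VIEW mart_{topic} AS "
        f"SELECT metric, value FROM facts WHERE topic = '{topic}'"
    )

accounting_rows = warehouse.execute(
    "SELECT metric, value FROM mart_accounting ORDER BY metric"
).fetchall()
website_rows = warehouse.execute(
    "SELECT metric, value FROM mart_website"
).fetchall()
print(accounting_rows, website_rows)
```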

ETL tools implementation and data pipeline development

Once each system component has been designed, the final stage is the implementation of ETL tools and the development of the data pipeline: a technical infrastructure that will automatically perform the following actions.

Data extraction from a given source. Since the information is stored in various systems, an ETL tool should be integrated with each of them.

Data uploading into a staging area. The staging area is where the formatting happens. In some cases, it can be done in the warehouse, but most often, a separate database is used to speed up the process and keep the warehouse clean.

Data formatting. Data is formatted to meet the defined standards when transferred to the staging area. This may include such operations as:

- data cleansing, the process of deleting useless data fields;

- data structuring/mapping, the process of defining data types and the connections between them; and

- adding metadata to enrich the information with details.

Loading structured data into the warehouse. The data can be loaded by portions or constantly updated. Dynamic information may require query methods to request updated data from the data source. If updates are not required, the data is loaded in portions.
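The formatting operations and the portion-by-portion load described above might look like this in a hand-written pipeline. Treat it as a sketch under stated assumptions: the raw rows, the `FIELD_TYPES` mapping, the `orders` table, and the batch size are hypothetical, a plain Python list plays the role of the staging area, and an in-memory SQLite database stands in for the warehouse.

```python
import sqlite3
from datetime import datetime, timezone

# Hypothetical raw rows as they might arrive from a source system.
RAW_ROWS = [
    {"id": "1", "total": "34.50", "tmp_session": "abc"},
    {"id": "2", "total": "0.90", "tmp_session": "def"},
    {"id": "3", "total": "1.65", "tmp_session": "ghi"},
]

# Mapping: which fields belong to the target model and what type each has.
FIELD_TYPES = {"id": int, "total": float}


def cleanse(row):
    """Data cleansing: drop fields that the target model does not need."""
    return {key: value for key, value in row.items() if key in FIELD_TYPES}


def map_types(row):
    """Data structuring/mapping: convert each field to its defined type."""
    return {key: FIELD_TYPES[key](value) for key, value in row.items()}


def add_metadata(row, source):
    """Enrich the row with where it came from and when it was processed."""
    loaded_at = datetime.now(timezone.utc).isoformat()
    return {**row, "source": source, "loaded_at": loaded_at}


def batches(items, size):
    """Yield consecutive portions of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


# The staging area: formatted rows wait here before being loaded.
staged = [add_metadata(map_types(cleanse(row)), "crm") for row in RAW_ROWS]

warehouse = sqlite3.connect(":memory:")
warehouse.execute(
    "CREATE TABLE orders (id INTEGER, total REAL, source TEXT, loaded_at TEXT)"
)

batch_count = 0
for batch in batches(staged, 2):
    warehouse.executemany(
        "INSERT INTO orders VALUES (:id, :total, :source, :loaded_at)", batch
    )
    warehouse.commit()  # one commit per portion
    batch_count += 1

loaded = warehouse.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
print(batch_count, loaded)
```

Committing once per portion keeps each load step small and restartable; a dynamically updated source would instead need a query that asks only for rows changed since the previous run.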

ETL testing

An ETL developer is in charge of testing the system, units, data models, and warehouse architecture. Besides usual QA activities, ETL testing has the following aspects:

- data model testing,

- data warehouse architecture testing,

- representation tools check,

- data flow validation,

- uploading/downloading/querying speed testing, and

- system performance tests.

Technical implementation is usually carried out by a QA team and software engineers. However, data-specific aspects are checked by the ETL team and data analysts.

ETL testing covers ETL system code, data design, and mapping techniques. Root cause analysis on all processes and resolving production issues are also a part of the process, as are routine tests on databases and data flow testing.
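One common form of data flow validation is reconciling what was extracted with what ended up in the target. The sketch below is a minimal example of that idea, not a complete test suite: the `orders` table, the `total_cents` column, and the helper names are hypothetical, and amounts are stored as integer cents so that totals can be compared exactly.

```python
import sqlite3

# Hypothetical rows as extracted from the source system.
SOURCE_ROWS = [
    {"id": 1, "total_cents": 3450},
    {"id": 2, "total_cents": 90},
    {"id": 3, "total_cents": 165},
]


def make_target(rows):
    """Build an in-memory target table holding the given rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER, total_cents INTEGER)")
    conn.executemany("INSERT INTO orders VALUES (:id, :total_cents)", rows)
    return conn


def validate_load(source_rows, conn):
    """Return a list of problems found; an empty list means all checks passed."""
    problems = []
    count, total, missing_ids = conn.execute(
        "SELECT COUNT(*), COALESCE(SUM(total_cents), 0), COUNT(*) - COUNT(id) "
        "FROM orders"
    ).fetchone()

    # Check 1: every extracted row arrived.
    if count != len(source_rows):
        problems.append(f"row count: source={len(source_rows)}, target={count}")

    # Check 2: a simple checksum over a numeric column matches.
    expected = sum(row["total_cents"] for row in source_rows)
    if total != expected:
        problems.append(f"total_cents: source={expected}, target={total}")

    # Check 3: no row lost its identifier along the way.
    if missing_ids:
        problems.append(f"{missing_ids} row(s) without an id")
    return problems


complete = validate_load(SOURCE_ROWS, make_target(SOURCE_ROWS))
partial = validate_load(SOURCE_ROWS, make_target(SOURCE_ROWS[:2]))
print(complete)
print(partial)
```

Returning a list of problems instead of raising on the first failure lets a single run report every mismatch, which is usually more useful for root cause analysis.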

ETL engineer experience and skills

To perform their duties, ETL developers must have expertise and skills across several fields.

ETL developer experience required to execute corresponding responsibilities

Experience with ETL tools. As data engineering is a mature industry, there are a lot of ready-made solutions on the market. Instruments like Talend, Informatica, Pentaho, and Apache Airflow are considered industry standards. So, experience with these tools is to an ETL developer what Photoshop is to a designer.

ETL tools are out-of-the-box solutions that perform Extract, Transform, and Load steps right from the start. In this case, an ETL developer is the administrator of the data integration tool connected to data sources and the warehouse. The task is to integrate existing instruments with the ETL tool, manage operations, and implement an interface to make data usable.

There are also big data platforms like Hadoop and Spark that can facilitate ETL operations for large-scale projects.

Database/DBA/architect background. An ETL developer must be a specialist in database engineering. To understand data storage requirements and design warehouse architecture, an ETL developer should have expertise with SQL/NoSQL databases and data mapping.

Data analysis expertise. Since an ETL developer takes part in data modeling, mapping, and formatting, data analysis expertise is required. Experience with data modeling tools, such as Toad Data Modeler, Erwin Data Modeler, etc., would come in handy.

Knowledge of scripting languages. If you deal with large datasets and complex pipelines, you’ll need some automation. ETL developers may use scripts to automate small parts of the process. The most popular scripting languages for ETL are Bash, Python, and Perl.

Software engineering background. ETL developers must have strong expertise in programming languages. C++ and Java are the most used in ETL.

Troubleshooting. Data processing systems operate with large amounts of data and include multiple structural elements. An ETL developer is responsible for the proper functioning of the system, which requires strong analytical thinking and troubleshooting skills.

When to hire an ETL developer

An ETL developer overlaps with other data engineering roles because of the shared technical background and field of responsibilities. An ETL developer is critically needed when you are building a large-scale data processing system with a complex data flow. In such a case, an ETL developer would be irreplaceable.

Warehouse developer instead of ETL developer: If the focus of your system falls on the operations inside the data warehouse, a warehouse developer is a good alternative to an ETL developer. A dedicated database/warehouse developer with strong expertise in BI projects can carry out data flow implementation, as well as use data integration tools.

Business intelligence developer instead of ETL developer: BI developers are software engineers specializing in implementing BI interfaces that rely on data pipelines. If your project focuses on ready-made solutions and integrations with providers of business intelligence tools, a BI developer will be up to the task of maintaining the system.

How to become an ETL developer: degree, certification, and what salary to expect

ETL developer is a sought-after profession that is growing in popularity every year. According to the US Bureau of Labor Statistics, overall employment of database administrators and architects (which includes ETL developers) is projected to grow 8 percent from 2022 to 2032.

Typically, the path to an ETL developer position includes the following steps.

Get a bachelor’s degree in computer and information technology or a related field, such as engineering. Employers might favor candidates with a master’s degree specifically focused on data or database management.

Get a certification from software vendors or vendor-neutral certification providers. Having an ETL certification can help you stand out from other candidates for a position or significantly improve your career prospects if you are already employed.

While there is no one-size-fits-all license for ETL developers, employers may mandate certification in the particular technologies the company utilizes. So the more certifications you have in different tools, the better.

Let's list a few popular ETL tools and their corresponding certifications.

Talend Big Data Certified Developer and Talend Data Integration Certified Developer indicate that you've mastered big data tools and Talend's data integration solutions.

Oracle Data Integration Certification is an industry-recognized credential that validates your ability to work with Oracle's data integration tools.

Microsoft Certified Azure Data Engineer Associate confirms that you are proficient in Microsoft's data engineering tools and technologies. In particular, to get the certificate, you need to learn how to handle data pipelines using Azure Synapse Analytics, Azure Data Factory, Azure Databricks, and other Azure solutions, integrate them with third-party services, and design and deploy data storage facilities. SQL and Python are also included in the course.

IBM Data Engineering Professional Certificate validates the skills and knowledge of professionals who use IBM's data engineering tools and technologies. Courses in this program include working knowledge of SQL and NoSQL databases, mastering Python, and building ETL and other data pipelines with Bash, Airflow, Kafka, etc.

Cloudera Data Engineer verifies knowledge and proficiency with the Cloudera Data Platform (CDP), data orchestration with Apache Airflow, and data processing with Apache Spark.

The estimated average salary for an ETL Developer is $124,000 in the United States. So it’s certainly worth the effort. But the most important thing, as always, is to be genuinely interested in your work, as this is the best motivation.