The CDP Operational Database (COD) builds on the operational database capabilities that were available with Apache HBase and/or Apache Phoenix in legacy CDH and HDP deployments. Within a broader data and analytics platform implemented on the Cloudera Data Platform (CDP), COD functions as a highly scalable relational and non-relational transactional database. It allows users to leverage big data in operational applications. It also serves as the backbone of the analytical ecosystem: other CDP experiences (e.g., Cloudera Machine Learning or Cloudera Data Warehouse) use it to deliver fast data and analytics to downstream components. Compared to legacy Apache HBase or Phoenix implementations, COD has been architected to help organizations optimize infrastructure costs, streamline the application development lifecycle, and accelerate time to value.

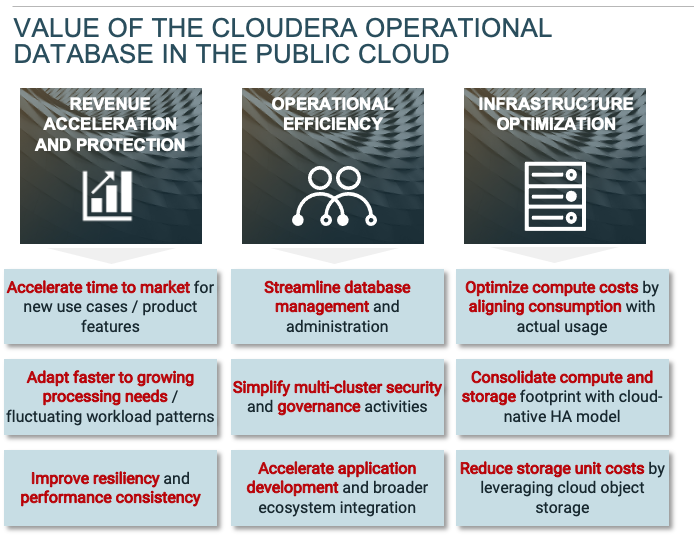

The intent of this article is to demonstrate the value proposition of COD as a multi-modal operational database capability over legacy HBase deployments across three value areas:

- Infrastructure cost optimization by converting a fixed cost structure that previously consisted of infrastructure and cloud subscription costs per node into a variable cost model in the cloud based on actual consumption

- Operational efficiency across activities such as platform management / database administration, security and governance, and agile development (e.g., DevOps)

- Accelerating and de-risking revenue realization by enabling organizations to develop, operationalize, and scale transactional data platforms centered on COD, while integrating with the remaining data lifecycle experiences to deploy Edge2AI use cases with CDP

The sections that follow dive into the technology capabilities of COD and, more broadly, the Cloudera Data Platform that deliver these value propositions.

Technology Cost Optimization

There are two major drivers of technology cost optimization with COD:

- Cloud-native consumption model that leverages elastic compute to align the consumption of compute resources with usage. It also offers cost-effective object storage, which reduces data costs on a GB / month basis compared with the compute-attached storage currently used by Apache HBase implementations

- Quantifiable performance improvements in Apache HBase 2.2.x, which delivers higher consistency and better read / write performance than previous versions of Apache HBase (1.x)

Cloud-Native Consumption Model

The cloud-native consumption model delivers lower cloud infrastructure TCO than both on-premises and IaaS deployments of Apache HBase by employing (a) elastic compute resources, (b) cloud-native design patterns for high availability, and (c) cost-efficient object storage as the primary storage layer.

Elastic Compute

As a cloud-native offering, COD uses a pricing model based on Cloud Consumption Units (CCUs). Spend based on CCUs depends on actual usage of the platform. COD provisions compute resources dynamically based on read / write usage patterns and releases them automatically when usage declines. Consequently, cost is commensurate with the business value derived from the platform. Organizations also avoid high CapEx outlays, prolonged procurement cycles, and significant administrative effort to meet future capacity needs.
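The contrast between a fixed, peak-sized cluster and consumption-based billing can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions. It uses node-hours as a stand-in for CCUs, and the 24-hour demand profile and rate are made up. The helpers `fixed_cost` and `consumption_cost` are hypothetical and do not reproduce COD's actual CCU pricing.

```python
def fixed_cost(peak_nodes: int, hourly_rate: float, hours: int) -> float:
    """Cost of a cluster sized for peak demand and running around the clock."""
    return peak_nodes * hourly_rate * hours


def consumption_cost(hourly_nodes: list[int], hourly_rate: float) -> float:
    """Cost when compute tracks demand hour by hour (elastic scaling)."""
    return sum(nodes * hourly_rate for nodes in hourly_nodes)


# Hypothetical demand: 4 nodes overnight, 10 at the daytime peak, 6 in the evening.
profile = [4] * 8 + [10] * 8 + [6] * 8
rate = 1.0  # hypothetical cost per node-hour (not a real CCU rate)

fixed = fixed_cost(max(profile), rate, len(profile))
elastic = consumption_cost(profile, rate)
print(f"fixed={fixed:.0f} elastic={elastic:.0f} saving={1 - elastic / fixed:.0%}")
# prints: fixed=240 elastic=160 saving=33%
```

In this toy model, the whole saving comes from not paying for idle peak capacity. With a flat demand profile, the two cost models converge.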

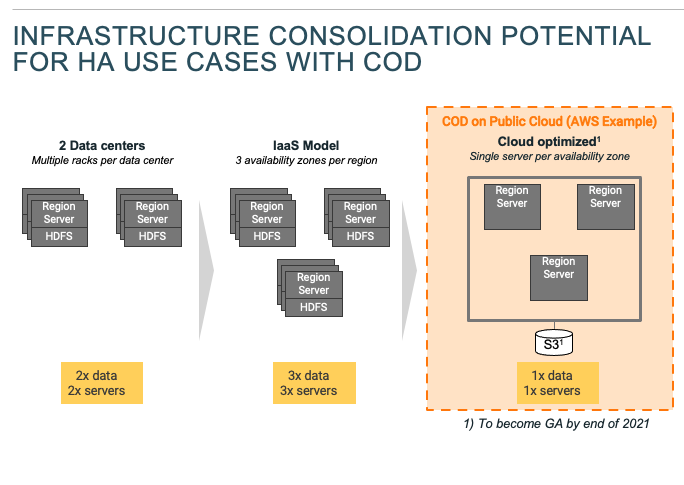

Cloud-Native Design Patterns

To avoid duplicating compute resources in high availability (HA) deployments, COD has adopted vendor-specific cloud-native design patterns (e.g., AWS and Azure standards). This reduces cost and complexity and mitigates the associated risk in HA scenarios.

This architecture consolidates compute and storage resources by up to a factor of 6 when moving to COD from an HA-based IaaS model, reducing the associated cloud infrastructure costs.

Before turning to storage, however, we quantify compute savings over the lift-and-shift deployment model through a sensitivity analysis. The analysis covers the factors that drive variation in cost savings on a per-node-instance basis. These factors include current environment utilization, deployment region (which influences compute unit costs by cloud provider), and the instance types used in the IaaS deployment.

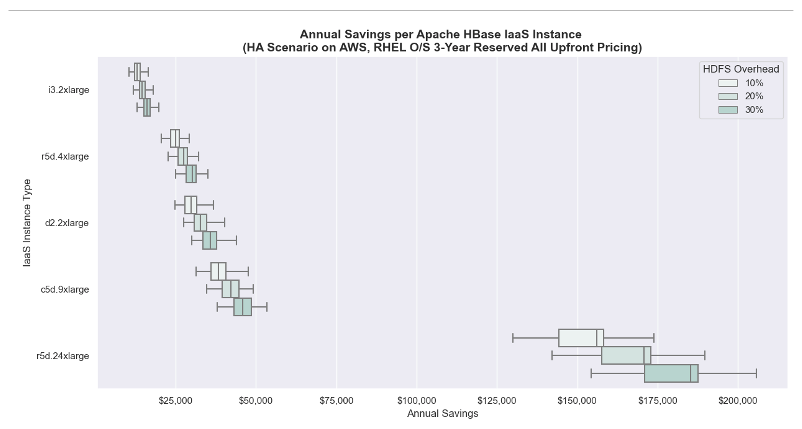

Savings opportunity on AWS

To quantify the savings opportunity on AWS, we compared the annual costs of a highly available IaaS deployment (dual availability zone configuration) across all supported COD regions. We ran the comparison for three HDFS capacity overhead scenarios. The HDFS capacity overhead is the incremental compute deployed over and above the nodes required by the Apache HBase and / or Phoenix storage footprint. The three scenarios reflect the low, mid, and high ends of that overhead.

The chart above presents the average annual cost savings potential per Apache HBase node deployed in a highly available IaaS deployment. It covers node utilization between 25% and 60%, the range we have observed in most client environments. The comparison used EC2 list pricing for 3-year, All Upfront Reserved RHEL instances. It covered five instance types commonly used in IaaS scenarios and the i3.2xlarge instance used by COD on AWS. As the chart shows, organizations should expect annual savings of $12K-$40K per node for most instance types used in IaaS deployments.
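A sensitivity analysis of this kind sweeps every combination of the input factors and evaluates a savings function at each point. The sketch below shows that structure with a deliberately simplified, hypothetical cost model, not the methodology behind the chart. It assumes an IaaS node is paid for all year plus its share of HDFS capacity overhead, while COD compute is billed only for the utilized share of a node. The function `annual_saving_per_node`, the instance names, and all prices are illustrative. Only the 25%-60% utilization range comes from the text.

```python
from itertools import product


def annual_saving_per_node(iaas_annual: float, cod_annual: float,
                           utilization: float, hdfs_overhead: float) -> float:
    """Hypothetical annual saving per HBase node in an HA IaaS deployment.

    IaaS side: the node is paid for all year, and the HDFS capacity overhead
    adds a proportional share of extra nodes on top of it.
    COD side: compute is billed only for the utilized share of a node.
    """
    iaas = iaas_annual * (1 + hdfs_overhead)
    cod = cod_annual * utilization
    return iaas - cod


# Hypothetical annual costs per node; real figures depend on region,
# instance type, and reserved-pricing terms.
iaas_instances = {"instance_a": 20_000.0, "instance_b": 35_000.0}
cod_node_annual = 25_000.0

utilizations = (0.25, 0.40, 0.60)  # utilization range cited in the text
overheads = (0.10, 0.25, 0.50)     # hypothetical low / mid / high scenarios

# Evaluate every (instance type, utilization, overhead) combination.
for (name, cost), util, overhead in product(iaas_instances.items(), utilizations, overheads):
    saving = annual_saving_per_node(cost, cod_node_annual, util, overhead)
    print(f"{name:<10} util={util:.0%} overhead={overhead:.0%} saving=${saving:,.0f}")
```

Even this toy model reproduces the qualitative pattern the analysis explores. Savings shrink as utilization rises and grow with the HDFS capacity overhead.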

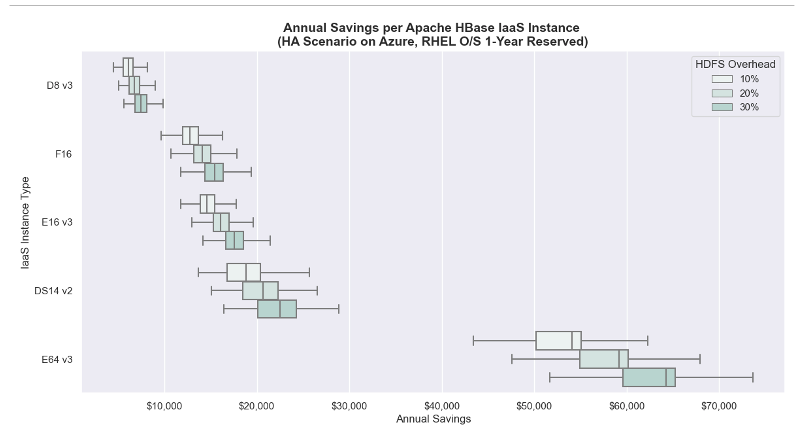

Savings opportunity on Azure

Similarly, for Azure we estimated the annual savings opportunity with a scenario-based approach. We used analogous assumptions based on Azure-specific characteristics, available virtual machines, and compute billing types. For instance, COD uses the D8 v3 instance type for workloads on Azure. We calculated the savings opportunity based on 1-year reserved pricing for RHEL instances, since Azure doesn’t offer 3-year reserved pricing for most of the regions where RHEL-based virtual machines are available.

Object Storage

When it comes to storage, COD takes advantage of cloud-native capabilities for data storage by:

- Using cloud object storage (e.g., S3 on AWS or ABFS on Azure) to reduce the storage costs incurred by HA Apache HBase deployments and to lower the unit cost of storage (compared with the more expensive storage types used by on-premises or IaaS deployments)

- Leveraging a caching layer on each VM to support low-latency workloads. Caching eliminates the latency overhead of object storage and most of the access costs for object storage (which can be substantial for operational workloads)

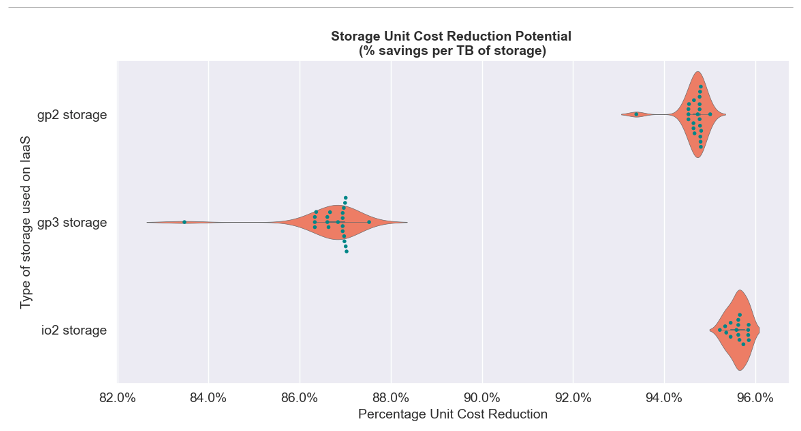

To quantify the range of storage benefits when moving from an HA IaaS deployment to COD in the public cloud, we consider the same scenario as above. That is an HA deployment with the dual-site configuration and a 3x data replication factor. In addition, we assumed an HDFS buffer of ~25%, meaning incremental storage capacity to accommodate growth in storage consumption without manually scaling the cluster.

The violin plot above illustrates the distribution of storage savings on a per-TB basis for three SSD storage types used in most IaaS implementations, across the regions where COD is available. Each dot in the chart corresponds to a deployment region. As the plot suggests, clients should typically expect savings of 85%-95% on the total storage bill.
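The scenario above implies that an HA IaaS cluster provisions 3 replicas × 2 sites × 1.25 buffer = 7.5 TB of raw SSD for every logical TB of data. The Python sketch below turns that into a per-TB savings estimate. It assumes that COD keeps one logical copy in object storage, since the provider handles durability. It also assumes a local SSD cache sized as a hypothetical fraction of the data. The helper names, cache fraction, and prices are illustrative, not published list prices.

```python
def iaas_raw_tb(logical_tb: float, replication: int = 3, sites: int = 2,
                buffer: float = 0.25) -> float:
    """Raw SSD capacity an HA IaaS cluster provisions for `logical_tb` of data."""
    return logical_tb * replication * sites * (1 + buffer)


def storage_saving(logical_tb: float, ssd_per_tb: float, object_per_tb: float,
                   cache_fraction: float = 0.1) -> float:
    """Fractional monthly storage saving of COD versus an HA IaaS deployment.

    Assumes (hypothetically) that COD keeps a single logical copy in object
    storage plus a local SSD cache sized as a fraction of the data.
    """
    iaas = iaas_raw_tb(logical_tb) * ssd_per_tb
    cod = logical_tb * object_per_tb + logical_tb * cache_fraction * ssd_per_tb
    return 1 - cod / iaas


# Hypothetical monthly prices per TB; substitute real regional list prices.
print(f"{storage_saving(10, ssd_per_tb=100.0, object_per_tb=20.0):.1%}")
# prints: 96.0%
```

The result depends entirely on the assumed prices and cache size. A larger cache fraction moves the estimate down toward the lower end of the range.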

Performance Improvements in Apache HBase

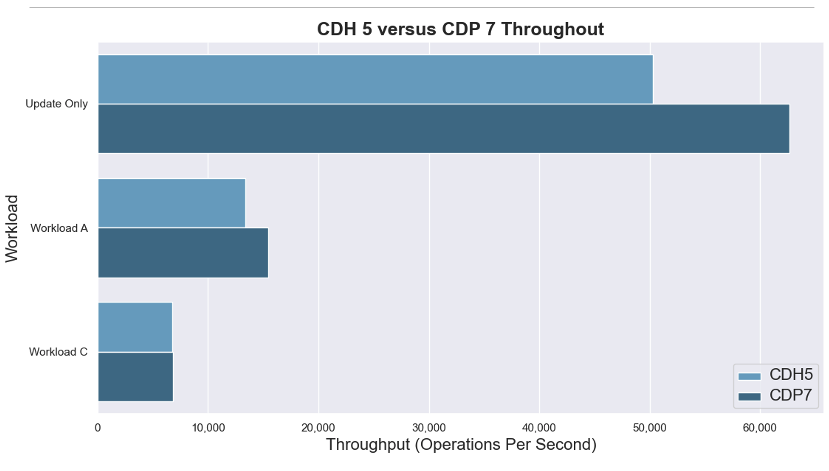

The migration from previous versions of Apache HBase to version 2.2.x included in CDP PvC and CDP Public Cloud will also deliver substantial performance improvements that will translate into infrastructure cost savings / avoidance (e.g., avoidance of further OpEx / CapEx for use case growth). For example, in a recent performance comparison between CDH 5 and CDP 7, workload performance was up to 20% better on CDP 7 based on the YCSB benchmark:

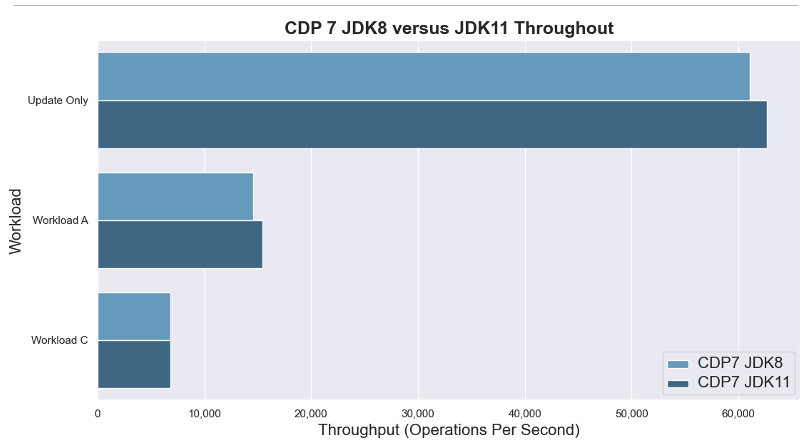

In addition, in the YCSB benchmark CDP 7 delivered 5-10% better performance running on JDK 11 than on JDK 8.
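One rough way to see how a throughput gain becomes cost avoidance is to compute how many nodes a given peak load requires before and after the gain. The Python sketch below does this under loudly stated assumptions. The workload figures and the `nodes_required` helper are hypothetical, and throughput is assumed to scale linearly with node count, which real HBase clusters only approximate.

```python
import math


def nodes_required(peak_ops_per_s: float, ops_per_node: float, speedup: float = 0.0) -> int:
    """Nodes needed to serve a peak load, given a fractional per-node speedup.

    Assumes throughput scales linearly with node count.
    """
    return math.ceil(peak_ops_per_s / (ops_per_node * (1 + speedup)))


# Hypothetical workload: 500K ops/s peak, 20K ops/s per node on the old runtime.
baseline = nodes_required(500_000, 20_000)
upgraded = nodes_required(500_000, 20_000, speedup=0.20)  # the "up to 20%" case
print(f"baseline={baseline} upgraded={upgraded} avoided={baseline - upgraded}")
# prints: baseline=25 upgraded=21 avoided=4
```

Rounding up to whole nodes means small gains may avoid no nodes at all. This is one reason the cost impact of performance improvements is hard to quantify directly.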

Recap of Technology Cost Optimization Opportunity with COD

In the section above, we presented in detail the potential for optimizing infrastructure costs (both on-premises and in the cloud) by migrating a CDH or HDP deployment of Apache HBase and / or Apache Phoenix to COD, the cloud-native experience of the Cloudera Data Platform for operational database workloads:

- Compute: Clients currently operating CDH or HDP environments on IaaS (i.e., using a lift-and-shift approach) should expect a cost reduction of ~$12K-$40K per EC2 instance on AWS. This assumes RHEL 3-Year Reserved Pricing for both the baseline IaaS configuration and the COD deployment. On Azure, the expected reduction is ~$5K-$23K per VM instance, assuming RHEL 1-Year Reserved Pricing for both.

- Storage: Clients currently operating CDH or HDP environments on IaaS should expect a ~85-95% reduction in unit storage costs (e.g., on a per-TB basis) by moving from SSD EBS storage to mainly S3 object storage on AWS (similar savings would apply to IaaS CDH or HDP implementations on Azure)

- Performance Improvements: It is difficult to quantify technology cost reduction as a direct result of performance improvements in the latest Apache HBase runtime included with COD. However, current benchmarks point to a performance gain (in terms of IOPS) of up to ~20% from moving CDH 5 / Apache HBase 1 to CDP 7 / Apache HBase 2. They also show up to ~15% higher throughput from upgrading JDK 8 to JDK 11 on CDP 7

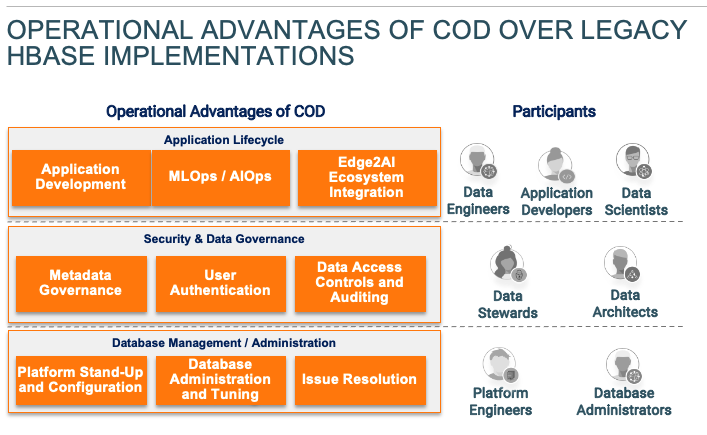

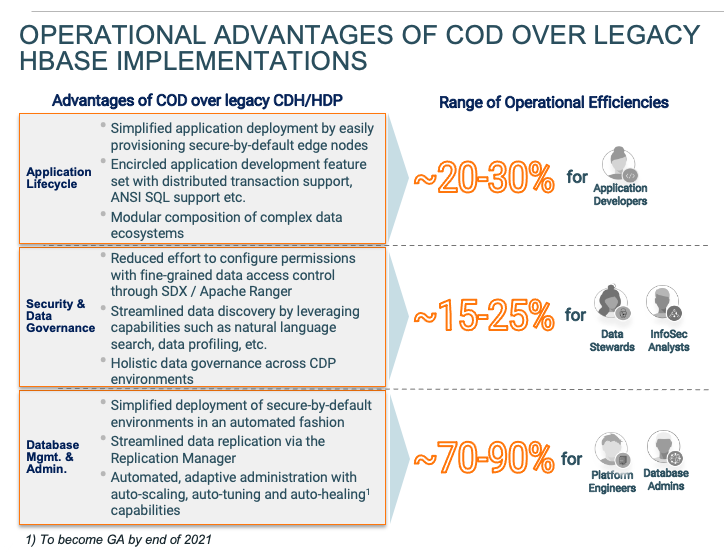

Operational Efficiency

Operational efficiency is the value area where COD delivers the greatest improvement. It spans all operational domains, including database management and administration, security and governance, and application development activities.

The sections below drill down into the specific capabilities that accelerate different data lifecycle activities.

Database Management and Administration Activities

COD streamlines platform management activities related to initial environment build-out, ongoing management, and issue resolution. The major capabilities that improve the day-to-day tasks of a platform / database administrator include the following:

- Streamlined configuration: COD automates the deployment of secure-by-default environments. This eliminates previously manual and error-prone tasks such as configuring Kerberos for multiple clusters, which required many architectural decisions and significant scripting effort. In the past, initial Kerberos configuration would typically require 1-2 months of involvement from two dedicated resources with deep expertise in hardening CDH or HDP systems. The cost was ~$50K-$200K, depending on whether those resources were internal or external.

- Simplified data replication: Replication Manager simplifies setting up replication through a wizard-based approach.

- Automated administration: COD includes intelligent features that automatically adjust system parameters to reflect ongoing capacity requirements, while proactively applying changes to prevent outages and performance degradation. For example, COD offers auto-scaling, which adjusts available compute capacity based on usage / consumption patterns. It also offers auto-tuning, which detects and remediates issues such as hotspotting.
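To make the two ideas concrete, here is a minimal Python sketch of threshold-based auto-scaling and simple hotspot detection. It illustrates the general technique only. `ScalingPolicy`, `target_nodes`, `hotspots`, and all thresholds are hypothetical, and COD's actual auto-scaling and auto-tuning logic is not described in this article. Remediation steps such as region splitting or rebalancing are not modeled.

```python
from dataclasses import dataclass


@dataclass
class ScalingPolicy:
    """Hypothetical thresholds; not COD's actual auto-scaling configuration."""
    scale_out_above: float = 0.75  # average utilization that adds a node
    scale_in_below: float = 0.30   # average utilization that removes a node
    min_nodes: int = 3
    max_nodes: int = 20


def target_nodes(current: int, avg_utilization: float, policy: ScalingPolicy) -> int:
    """Return the node count after one scaling step, clamped to policy bounds."""
    if avg_utilization > policy.scale_out_above:
        return min(current + 1, policy.max_nodes)
    if avg_utilization < policy.scale_in_below:
        return max(current - 1, policy.min_nodes)
    return current


def hotspots(requests_per_server: dict[str, int], factor: float = 2.0) -> list[str]:
    """Flag servers whose request count exceeds `factor` times the mean."""
    mean = sum(requests_per_server.values()) / len(requests_per_server)
    return [name for name, count in requests_per_server.items() if count > factor * mean]


policy = ScalingPolicy()
print(target_nodes(5, 0.90, policy))  # 6: scale out
print(target_nodes(3, 0.10, policy))  # 3: already at the minimum
print(hotspots({"rs1": 100, "rs2": 120, "rs3": 1_000}))  # ['rs3']
```

The gap between the scale-out and scale-in thresholds prevents the cluster from oscillating when utilization hovers near a single cut-off.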

Security and Governance Activities

When it comes to security and governance, COD leverages the Shared Data Experience (SDX) to streamline authentication, authorization, and auditing across all Cloudera experiences:

- For previous CDH clients, SDX includes Apache Ranger. Ranger offers fine-grained access control (column- and row-level filtering and data masking) and reduces the effort to configure permissions at the user and role level

- For both CDH and HDP users, CDP Data Catalog expands on the feature sets of both Atlas and Navigator. It adds new capabilities that streamline activities such as data auditing, data profiling, and applying business context to data

- For both CDH and HDP users, the Shared Data Experience offers an abstraction layer across multiple clusters. This eliminates the cluster- and BU-level security and governance silos of previous deployments. What’s more, the SDX-enabled security and governance overlay applies consistently to all data experiences. Previous implementations had a narrower scope that focused on individual technical use cases.

Application Development Lifecycle Activities

In addition to the database / platform management efficiencies introduced previously, COD delivers additional capabilities that improve the DevOps lifecycle:

- Simplified application deployment: We covered the environment configuration and deployment capabilities that accelerate application delivery previously. Beyond these, COD also simplifies the deployment of edge nodes used to run custom applications that the client has built on top of HBase / Phoenix, such as a web serving layer. Edge nodes are set up within the Kerberos domain of the environment and are managed by Cloudera for DNS, OS-level patching, etc.

- Enriched application development feature set: COD offers distributed transaction support, ANSI SQL, and a slew of other improvements (star schema, secondary indices, etc.). With these, it gives database developers a more robust toolset that simplifies application development with familiar RDBMS features. This makes it easier than ever to migrate from overgrown / sharded relational databases to COD, and these migrations provide significant additional savings.

- Composable architectures for end-to-end use cases: Adding a different service (e.g., Spark) to a COD database increases configuration / deployment complexity. Instead, CDP offers a dedicated experience for each of the other data lifecycle stages and allows for modular composition of data ecosystems. This enables better reusability and maintainability for more comprehensive ‘Edge2AI’ use cases (an example of using COD with our machine learning experience can be found here)

Quantifying Operational Efficiencies

Based on the framework above and the empirical evidence from successful COD implementations, we expect to see the following operational benefits throughout the application development lifecycle:

The metrics above correspond to the efficiency delivered with COD when migrating an existing Apache HBase and / or Apache Phoenix implementation to it. The existing implementation may be deployed on-premises or retrofitted to run in the public cloud as an IaaS deployment with CDH / HDP. The ranges reflect different environment configurations and levels of maturity, which determine the level of benefits COD introduces. These parameters include:

- Environment complexity: the number of clusters / environments, the number of technical use cases intertwined together (e.g., Apache HBase, Solr, and Spark), etc. In general, the more complex the current CDH / HDP environment, the greater the improvement potential. Two factors drive this. COD's improved automation reduces manual and repetitive steps across multiple environments. Scaling and tuning is also simpler in the separate CDP data experiences that the currently deployed technical use cases would be converted to.

- Baseline environment performance given the current read / write workload pattern: Some organizations have historically faced challenges with read-heavy and write-heavy consumption patterns, e.g., large backlogs of incoming data or RegionServer hotspotting that could destabilize the environment. These organizations would benefit the most, given the increased automation and self-tuning / self-healing capabilities introduced with the Cloudera Operational Database.

- Internal technical expertise: Some existing users have deployed Apache HBase and / or Apache Phoenix but lack the internal expertise required to scale their deployment. They will find that COD removes that adoption barrier, because it requires less expertise and effort to deploy and manage more complex use cases with these technologies. That improvement applies to all stakeholders involved in such a deployment: Platform Engineers, Database Administrators, and Application Developers. The latter group benefits the most from the enriched developer toolset. It includes ANSI SQL support, which makes writing applications easier for Software Engineers familiar with RDBMS app development concepts and programming languages.

Ultimately, the level of operational improvement will vary by client. However, efficiencies will apply to mature, large-scale implementations of Apache HBase and / or Apache Phoenix, which will benefit from improved complexity management and automated issue resolution. They will also apply to smaller, emerging deployments. There, organizations will be able to use familiar concepts to build enterprise-grade applications without the configuration and scalability challenges of the past (e.g., capacity projections, environment sizing, and tuning).

Accelerating and De-Risking Revenue Realization

The underlying motivation behind the evolution of the Operational Database was to develop a modern, multi-modal dbPaaS offering that improves agility and simplicity. The goal was to eliminate the complex management and tuning that HBase required. As a consequence, COD enables faster revenue realization for new revenue streams and de-risks (i.e., protects) revenue realization for existing ones.

Accelerated Realization of Revenue Streams

- New application development: COD makes it significantly simpler to build new applications by enabling traditional star-schema-based approaches alongside evolutionary schemas. This provides choice and flexibility whether you are building a new application or migrating an existing application that has outgrown its relational database. COD supports ANSI SQL and supports TPC-C transactional benchmarks out of the box. As a result, application developers can use the SQL / relational database skills they have developed over their careers as they adopt COD; they no longer have to learn alternative technologies in order to move forward

- Modular data pipelines: As previously explained, COD eliminates many of the manual and arduous tasks related to database management and application provisioning. It also reduces much of the ‘guesswork’ inherent in architecting large-scale database systems. In addition, as organizations leverage more data lifecycle experiences to develop complex Edge2AI applications, CDP offers a modular framework to seamlessly compose data ecosystems and accelerate time to market

- Continuous Delivery / Tuning: The automated, self-healing, and auto-tuning features accelerate responsiveness to changes in customer requirements, increases in data volumes, sudden fluctuations in workload patterns (e.g., heavy reads versus heavy writes), etc. As a result, COD increases deployment frequency and reduces lead time for changes

Risk Mitigation for Existing Revenue Streams

- Improved Resiliency: Highly available environments can be built with minimal manual effort, and data replication activities are more efficient. Together, these improve the resiliency of database applications developed with COD. In addition, capabilities such as Multi-AZ stretch clusters ensure that your database's resilience meets the needs of today’s Tier 0, mission-critical applications. They do so without the effort previously required to make the database resilient to AZ outages at your cloud vendor

- Consistent Performance: The critical nature of COD-based workloads makes consistent performance a key prerequisite in an enterprise-grade deployment. COD introduces automation (self-healing and auto-tuning) and codebase optimizations (e.g., off-heap caching, compaction scheduler). With these, database workloads enjoy consistent performance, even as the platform grows in computational and architectural complexity. As a result, COD alleviates performance issues related to noisy neighbours and hotspotting through better tenant isolation and resource management

Conclusion

In the sections above, we outlined the value proposition of COD over legacy Apache HBase deployments on CDH and HDP across value and technology areas.

To learn more about the technology capabilities that we have added to COD, please refer to some of our more technical blogs, such as those on distributed transaction support and performance configurations. Further reading on CDP capabilities will provide greater insight into platform ecosystem improvements. Relevant topics include data exploration, security automation using Ranger, and automated TLS management.

The Value Management team can help you quantify the value of migrating your on-prem or IaaS environments to CDP Public Cloud.

Acknowledgment

The authors would like to thank Mike Forrest, who helped with the arduous task of collecting AWS pricing metrics.