Secret management is often difficult to implement in your infrastructure, and it becomes even harder when you work with confidential or private artifacts. This article describes how we tackled this problem and one way to solve it.

The challenge

The challenge is to let a Virtual Machine (VM) in Google Cloud (GCP) retrieve artifacts from JFrog Artifactory while using an authentication and authorization mechanism (IAM). Since misconfigurations and unexpected events are very common in the cloud (csa-review), the best approach is to validate access with a token that has only limited value if it is discovered by someone else.

The resolution

We use HashiCorp Vault (HV) as a secret manager that can distribute access tokens with a very short time to live (TTL). JFrog Artifactory does not deliver this functionality by itself.
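As a minimal sketch, assuming Vault's built-in token auth method and a hypothetical policy named `artifactory-read`, the helper below builds the request body for Vault's `POST /v1/auth/token/create` endpoint, so that the issued token expires after a short TTL and can never outlive an explicit maximum. The helper name and the TTL values are illustrative and not taken from the PoC.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the JSON body for Vault's token create endpoint
# (POST /v1/auth/token/create). Arguments: policy name, TTL, explicit max TTL.
# Assumes none of the values contain characters that need JSON escaping.
token_create_body() {
  local policy="$1" ttl="$2" max_ttl="$3"
  printf '{"policies":["%s"],"ttl":"%s","explicit_max_ttl":"%s"}\n' \
    "$policy" "$ttl" "$max_ttl"
}

# Usage against a running Vault (not executed here); VAULT_ADDR and VAULT_TOKEN
# must point at your own Vault and a token that is allowed to create tokens:
#   curl -s -H "X-Vault-Token: $VAULT_TOKEN" -X POST \
#     -d "$(token_create_body artifactory-read 15m 1h)" \
#     "$VAULT_ADDR/v1/auth/token/create" | jq -r '.auth.client_token'
```

The short `ttl` limits how long a leaked token is useful, while `explicit_max_ttl` caps its lifetime even if it keeps being renewed.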

For HashiCorp Vault to deliver short-lived tokens, it must be integrated with the two other parties involved: JFrog Artifactory and a VM on GCP.
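On the VM side, this integration mostly comes down to Vault authorizing the VM's token for exactly the secret it needs. A minimal sketch, assuming the KV v2 secrets engine is mounted at `secret/` (which matches the `secret/data/artifactory` path read in the PoC's code snippets) and using a hypothetical helper and policy name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a least-privilege Vault policy that only allows
# reading a single secret from the KV v2 engine mounted at secret/.
read_only_policy() {
  local secret_path="$1"
  cat <<EOF
path "secret/data/${secret_path}" {
  capabilities = ["read"]
}
EOF
}

# Usage against a running Vault (not executed here):
#   read_only_policy artifactory | vault policy write artifactory-read -
```

With KV v2, reads go through the `data/` prefix, which is why the policy path is `secret/data/...` rather than `secret/...`.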

The Proof of Concept

In our Proof of Concept (PoC) we showed that this integration is straightforward, even in a multi-cloud setup, and that HashiCorp Vault can deliver the ephemeral access token functionality.

Below we describe the design of the PoC in more detail and provide code snippets you can adapt for your own solution.

The PoC design

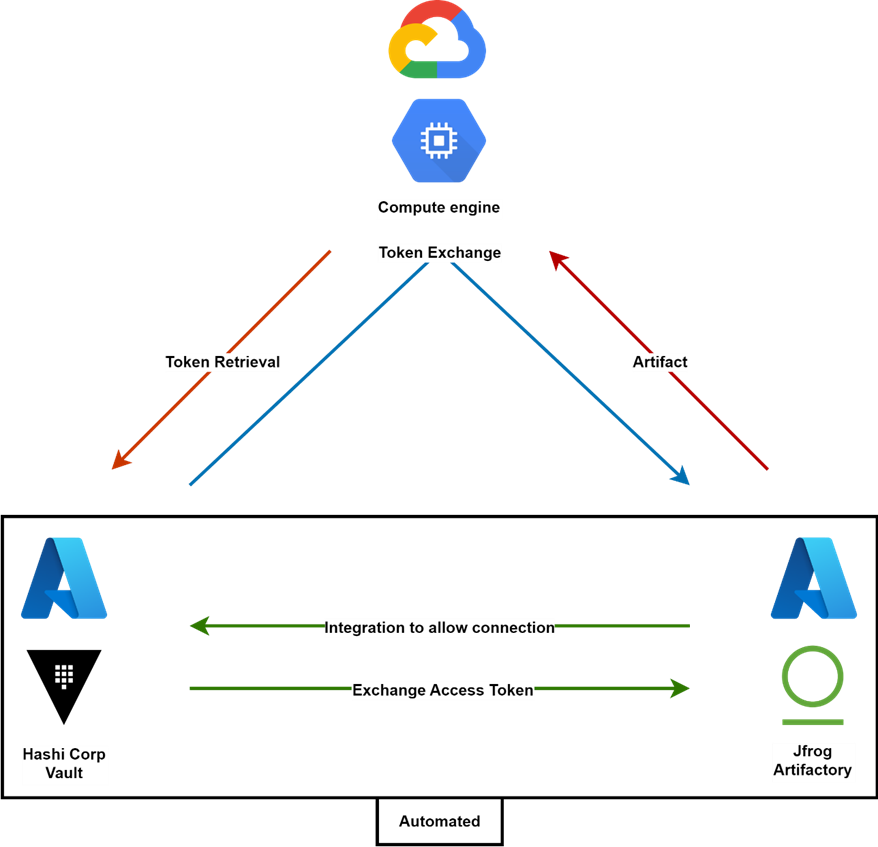

The goal of the PoC is to find out how complicated it is for a service running on Google Cloud (GCP) to authenticate with JFrog Artifactory on Azure, using an access token retrieved from HashiCorp Vault, which also runs on Azure but in a separate environment.

The service on GCP could be any compute workload that needs to retrieve an artifact from JFrog Artifactory; in this PoC, the artifact is a small text file.

For this PoC, we installed JFrog Artifactory (version 107.47.11) on a Kubernetes cluster in Azure and, separately, HashiCorp Vault (version 1.12.2), also on Azure, to simulate a separate environment.

The functionality we want to prove is that a Virtual Machine in GCP can retrieve an ephemeral access token from HashiCorp Vault and use it to retrieve the artifact from JFrog Artifactory.

The PoC conclusion

JFrog has published a plugin on GitHub that integrates Artifactory with HashiCorp Vault. It is important to note that even without this plugin, integrating Artifactory and Vault was easy: it needed only a small number of lines of code. The plugin just makes it a bit easier.

Most of the effort went into setting up the solution components, such as the infrastructure Vault runs on and the Artifactory installation. In a real-life situation at your organization, these components are probably already available, configured, and running. We therefore conclude that, from a technological perspective, the integration should be easy.

Figure 1 – The Secret Management Triad

Research questions

For this PoC, we wanted to answer the following questions.

- Is the integration between HashiCorp Vault and JFrog Artifactory easy to implement out of the box, or does it need a lot of custom code to work?

- Is the integration between HashiCorp Vault on Azure and a VM on GCP realistic in terms of complexity and latency, or does it need extra infrastructure components, such as a VPC or Squid, to enable connectivity?

- Is the integration between Azure and GCP possible with a VPC and Squid in between?

- Does HashiCorp Vault support ephemeral access tokens, and what is the configurable Time-To-Live (TTL) range?

Obstacle – TLS

The first obstacle we ran into is that JFrog Artifactory does not come with Transport Layer Security (TLS) enabled out of the box. TLS is needed because otherwise there is no way to create a secure communication channel from Artifactory to Vault.

JFrog Artifactory has a built-in feature to integrate easily with HashiCorp Vault, but this feature requires TLS to be enabled. We enabled TLS in the YAML values file used to install Artifactory with Helm. Once TLS was enabled, we could no longer connect to the web application over plain HTTP. To use proper HTTPS, we would have had to configure a certificate authority (CA) and install a certificate on the local device. Because this was out of scope, we left it out of the PoC.
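Because the point of TLS here is to keep tokens off unencrypted connections, a client script can refuse to send a token to a plain-HTTP endpoint. The guard below is a hypothetical helper, not part of Artifactory or Vault. In the PoC, Vault itself still listens on plain HTTP (`tls_disable = 1` in its configuration), so this guard would reject that endpoint until TLS is enabled there as well.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only for https:// URLs, so that a token is never
# sent to Vault or Artifactory over an unencrypted connection.
require_https() {
  local url="$1"
  case "$url" in
    https://*) return 0 ;;
    *)
      echo "refusing non-TLS URL: $url" >&2
      return 1
      ;;
  esac
}

# Usage: stop before calling curl if the endpoint is not TLS-protected
#   require_https "$URL" || exit 1
```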

Obstacle – integration

The second obstacle we ran into is that, for now, you have to create an access token in JFrog Artifactory and store it in HashiCorp Vault, and both steps are currently done manually.
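The manual storage step can be sketched as a single write to Vault. Assuming the KV v2 engine mounted at `secret/` and the field names `access_token` and `url` that the PoC's retrieval snippet reads, the hypothetical helper below builds the request body for `POST /v1/secret/data/artifactory`. The equivalent CLI call is `vault kv put secret/artifactory access_token=... url=...`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the KV v2 write body that stores the Artifactory
# access token and base URL. Assumes neither value needs JSON escaping.
kv2_artifactory_body() {
  local access_token="$1" url="$2"
  printf '{"data":{"access_token":"%s","url":"%s"}}\n' "$access_token" "$url"
}

# Usage against a running Vault (not executed here); ARTIFACTORY_TOKEN and
# ARTIFACTORY_URL are placeholders for the token created in Artifactory:
#   curl -s -H "X-Vault-Token: $VAULT_TOKEN" -X POST \
#     -d "$(kv2_artifactory_body "$ARTIFACTORY_TOKEN" "$ARTIFACTORY_URL")" \
#     "$VAULT_ADDR/v1/secret/data/artifactory"
```

KV v2 expects the secret's fields wrapped in a top-level `data` object, which is why reads return them under `.data.data`.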

JFrog maintains an extra Vault plugin on its GitHub page that connects Vault to JFrog Artifactory and lets Vault handle access token creation and storage. In essence, the plugin creates a new token through Artifactory, so that Artifactory knows this is the new access token to accept.
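Wherever the plugin binary ends up, Vault only runs external plugins that are registered in its plugin catalog together with their SHA-256 checksum. A minimal sketch, assuming the binary is named `artifactory` in Vault's plugin directory (as in the Helm configuration used in the PoC) and that `sha256sum` from GNU coreutils is available; `plugin_sha256` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the SHA-256 checksum of a plugin binary, which
# Vault requires when the plugin is registered in its catalog.
plugin_sha256() {
  sha256sum "$1" | cut -d ' ' -f 1
}

# Usage inside the Vault container (not executed here):
#   SHA=$(plugin_sha256 /usr/local/libexec/vault/artifactory)
#   vault plugin register -sha256="$SHA" secret artifactory
#   vault secrets enable artifactory
```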

We did not get it running, because it is very difficult to get the plugin into the right folder of the right container in the Vault Kubernetes cluster. You need an extra init container that downloads the plugin to separate storage; that storage is then mounted in the Vault container so the file lands in Vault's plugin folder. These are the Vault Helm values we used for this attempt:

```yaml
server:
  # Init container that downloads the Artifactory secrets plugin and places it
  # in the shared plugins volume
  extraInitContainers:
    - name: jfrogapp
      image: "alpine"
      command: [sh, -c]
      args:
        - cd /tmp &&
          wget https://github.com/jfrog/artifactory-secrets-plugin/releases/download/v0.1.2/artifactory-secrets-plugin_0.1.2_linux_amd64.zip -O artifactory-secrets-plugin.zip &&
          unzip artifactory-secrets-plugin.zip &&
          mv artifactory-secrets-plugin_v0.1.2 /usr/local/libexec/vault/artifactory &&
          chmod +x /usr/local/libexec/vault/artifactory
      volumeMounts:
        - name: plugins
          mountPath: /usr/local/libexec/vault

  # Shared volume that lives as long as the Vault pod
  volumes:
    - name: plugins
      emptyDir: {}

  # volumeMounts:
  #   - mountPath: /usr/local/libexec/vault
  #     name: plugins
  #     readOnly: true

  # Mount the same volume in the Vault server container
  volumeMounts:
    - mountPath: /usr/local/libexec/vault
      name: plugins

  standalone:
    config: |
      plugin_directory = "/usr/local/libexec/vault"
      ui = true

      listener "tcp" {
        tls_disable = 1
        address = "[::]:8200"
        cluster_address = "[::]:8201"
        # Enable unauthenticated metrics access (necessary for Prometheus Operator)
        #telemetry {
        #  unauthenticated_metrics_access = "true"
        #}
      }

      storage "file" {
        path = "/vault/data"
      }

      # Example configuration for using auto-unseal, using Google Cloud KMS. The
      # GKMS keys must already exist, and the cluster must have a service account
      # that is authorized to access GCP KMS.
      #seal "gcpckms" {
      #  project    = "vault-helm-dev"
      #  region     = "global"
      #  key_ring   = "vault-helm-unseal-kr"
      #  crypto_key = "vault-helm-unseal-key"
      #}

      # Example configuration for enabling Prometheus metrics in your config.
      #telemetry {
      #  prometheus_retention_time = "30s",
      #  disable_hostname = true
      #}
```

Questions answered

- The integration from Artifactory to HashiCorp Vault is fairly easy; the integration from HashiCorp Vault to Artifactory needs a bit of custom code. On the Artifactory side, TLS has to be enabled in the Helm values file:

  ```yaml
  # values.yaml (JFrog Artifactory Helm config)
  access:
    accessConfig:
      security:
        tls: true
  ```

- The VM on GCP only needs to call Vault with curl (or Invoke-WebRequest in PowerShell) to retrieve the token; no further integration is needed. However, the connection runs over the open internet, so you may experience some latency, which needs further stress testing (see the code snippets).

- Some work remains on the certificate part of this solution, since a certificate authority is needed for the certificate exchange. A reverse proxy with Squid can help achieve that goal.

- HashiCorp Vault lets you configure the lifecycle of a token. For example, if a token's TTL is 30 minutes and its maximum TTL is 24 hours, you can renew the token before the 30 minutes have passed, and you can keep renewing it for as long as you use it. However, once 24 hours have passed since the token was first created, it can no longer be renewed.
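The renewal rule in the TTL example can be modelled as: after a renewal at time t, the token expires at min(t + TTL, creation time + max TTL). The function below is a simplified sketch of that arithmetic in seconds, not Vault's implementation. With Vault's CLI, such a token would be created with `vault token create -ttl=30m -explicit-max-ttl=24h` and renewed with `vault token renew`.

```shell
#!/usr/bin/env bash
# Simplified model (not Vault's code): the expiry time, in seconds, of a token
# renewed at renewed_at. Arguments: created_at, renewed_at, ttl, max_ttl.
expiry_after_renewal() {
  local created_at="$1" renewed_at="$2" ttl="$3" max_ttl="$4"
  local wanted=$(( renewed_at + ttl ))        # a renewal extends by the TTL
  local hard_cap=$(( created_at + max_ttl ))  # but never past creation + max TTL
  if (( wanted < hard_cap )); then
    echo "$wanted"
  else
    echo "$hard_cap"
  fi
}
```

For example, a token created at second 0 with a 30-minute TTL (1800 s) and a 24-hour maximum (86400 s) that is renewed at second 1500 expires at second 3300; renewed at second 85000, it expires at second 86400 instead of 86800.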

Code snippets

```shell
# Vault address and token used in the PoC
VAULT_ADDR="http://20.86.213.41:8200"
VAULT_TOKEN="hvs.fLQP2KrR1i12OIXlZemRY5II"

# Read the Artifactory secret once from Vault (KV v2 engine mounted at secret/)
SECRET=$(curl -s -H "X-Vault-Token: $VAULT_TOKEN" "$VAULT_ADDR/v1/secret/data/artifactory")
TOKEN=$(echo "$SECRET" | jq -r '.data.data.access_token')
URL=$(echo "$SECRET" | jq -r '.data.data.url')

# Download the artifact; -k skips TLS certificate verification (PoC only)
curl -k -u "admin:$TOKEN" "$URL/example-repo-local/test.txt.txt"
```

Outcome: `dit is een test` (Dutch for "this is a test").

Sharing Knowledge

Xebia’s core values are: People First, Sharing Knowledge, Quality without Compromise and Customer Intimacy. That is why this blog entry is published under the Creative Commons Attribution-ShareAlike 4.0 license.