Introduction

In this blog, we will show you how to build a conversational search application that interacts with Azure Cognitive Search (ACS) and retrieves relevant content from a web-scraped index. Users can ask natural language questions and request summaries, and the application uses vector search to find the matching content. The application also uses OpenAI embeddings, which are produced by pre-trained models that turn queries and documents into vectors, and Azure Chat OpenAI, which generates natural language responses using OpenAI models.

Vector search is a technique that uses deep learning models to represent queries and documents as vectors in a high-dimensional space. These vectors capture the semantic meaning and context of the queries and documents, and can be compared using a similarity measure, such as cosine similarity, to find the most relevant matches. Vector search enables you to perform semantic search, which can understand the intent and meaning of the user’s query, rather than just matching keywords.
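To make the similarity comparison concrete, here is a minimal sketch in plain Python. It is not how Azure Cognitive Search computes scores internally. The three-dimensional vectors and the document names are made up for illustration, and real embedding vectors have far more dimensions.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_documents(query_vector, document_vectors):
    """Return document ids sorted from most to least similar to the query."""
    scores = {
        doc_id: cosine_similarity(query_vector, vector)
        for doc_id, vector in document_vectors.items()
    }
    return sorted(scores, key=scores.get, reverse=True)


# Hypothetical toy vectors standing in for real embeddings.
documents = {
    "post_about_search": [0.9, 0.1, 0.0],
    "post_about_cooking": [0.0, 0.2, 0.9],
    "post_about_ai": [0.7, 0.6, 0.1],
}
query = [1.0, 0.2, 0.0]

ranking = rank_documents(query, documents)
print(ranking)
```

The document whose vector points in nearly the same direction as the query vector comes first, even though no keywords are compared at any point.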

The application is built from the following components:

- Azure Cognitive Search: A cloud-based service that provides a rich set of capabilities to index, query, and analyze structured and unstructured data. We will use ACS to host our web-scraped index and to perform vector search over it.

- OpenAI: A research organization that develops and provides access to cutting-edge artificial intelligence models and tools. We will use OpenAI models, deployed through Azure OpenAI, to generate natural language responses based on the search results, as well as to condense follow-up questions into standalone questions.

- ConversationalRetrievalChain: A Python class from LangChain that implements a conversational retrieval pipeline using a large language model (LLM) and a vector-based retriever. We will use this class to orchestrate the interaction between ACS and OpenAI, and to handle the user input and output.

Prerequisites

To follow along with this blog post, you will need the following:

- An Azure subscription

- An Azure Cognitive Search service

- An Azure Cognitive Search index with some data. You can use any data source that you want, but for this example, I will use a scraped blog index that contains some blog posts from various websites. You can find the instructions on how to create and populate this index [here].

- An Azure OpenAI service resource, which gives you access to the OpenAI embeddings and Azure Chat OpenAI models.

Conversational Search Application

Here are the steps to use the ConversationalRetrievalChain to fetch data from Azure Cognitive Search and generate responses using an Azure OpenAI chat model:

- Import the necessary modules and classes from LangChain.

    from langchain.chains import ConversationalRetrievalChain
    from langchain.vectorstores.azuresearch import AzureSearch
    from langchain.chat_models import AzureChatOpenAI
    from langchain.embeddings.openai import OpenAIEmbeddings
    from langchain.prompts import PromptTemplate

- Create the OpenAIEmbeddings object, which will be used to embed queries and documents into vectors. You need to provide the following parameters:

- deployment: the name of the deployment that hosts the model.

- model: the name of the model that generates the embeddings.

- openai_api_base: the base URL of the OpenAI API.

- openai_api_type: the type of the OpenAI API (azure when the model is hosted in Azure OpenAI).

- chunk_size: the maximum number of texts to embed in a single request.

    embeddings = OpenAIEmbeddings(
        deployment="ada_embedding_deployment_name",
        model="text-embedding-ada-model-name",
        openai_api_base="https://abc.openai.azure.com/",
        openai_api_type="azure",
        chunk_size=1,
    )
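As far as we understand it, chunk_size controls how many texts are grouped into one embedding request. The sketch below illustrates that grouping in plain Python. The helper name batch_texts is hypothetical and this is not LangChain's internal code.

```python
def batch_texts(texts, chunk_size):
    """Split a list of texts into consecutive batches of at most chunk_size items."""
    if chunk_size < 1:
        raise ValueError("chunk_size must be at least 1")
    return [texts[i:i + chunk_size] for i in range(0, len(texts), chunk_size)]


texts = ["first post", "second post", "third post"]

# With chunk_size=1, as in the configuration above, each text is its own batch.
print(batch_texts(texts, 1))

# A larger value groups texts together, so fewer requests would be needed.
print(batch_texts(texts, 2))
```

A value of 1 is the most conservative choice: it sends more requests, but each request carries only a single text.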

- Set up the AzureSearch class, which will access the data in Azure Cognitive Search. You need to provide the following parameters for the Azure Cognitive Search service:

- azure_search_endpoint: the endpoint of the Azure Cognitive Search service.

- azure_search_key: the key to authenticate with the Azure Cognitive Search service.

- index_name: the name of the index that contains the data.

- embedding_function: the function used to embed queries. We pass the embed_query method of the embeddings object created in the previous step.

    vector_store: AzureSearch = AzureSearch(
        azure_search_endpoint="https://domain.windows.net",
        azure_search_key="your_password",
        index_name="scrapped-blog-index",
        embedding_function=embeddings.embed_query,
    )

- Configure the AzureChatOpenAI class, which will be used to generate natural language responses with a chat model deployed in Azure OpenAI. You need to provide the following parameters:

- deployment_name: the name of the deployment that hosts the model.

- model_name: the name of the model that generates the responses.

- openai_api_base: the base URL of the OpenAI API.

- openai_api_version: the version of the OpenAI API.

- openai_api_key: the key to authenticate with the OpenAI API.

- openai_api_type: the type of the OpenAI API (azure when the model is hosted in Azure OpenAI).

    # OPENAI_API_KEY must already hold your Azure OpenAI key.
    llm = AzureChatOpenAI(
        # Placeholder for your chat model deployment, not the embedding deployment.
        deployment_name="gpt_chat_deployment_name",
        model_name="open_gpt_model-name",
        openai_api_base="https://model.openai.azure.com/",
        openai_api_version="2023-07-01-preview",
        openai_api_key=OPENAI_API_KEY,
        openai_api_type="azure",
    )

- Define the PromptTemplate, which will be used to rephrase the user’s follow-up questions as standalone questions. You need to provide a template that takes the chat history and the follow-up question as inputs and outputs a standalone question.

    CONDENSE_QUESTION_PROMPT = PromptTemplate.from_template("""Given the following conversation and a follow up question, rephrase the follow up question to be a standalone question.

    Chat History:
    {chat_history}
    Follow Up Input: {question}
    Standalone question:""")

- Construct the ConversationalRetrievalChain, which will be used to generate responses based on the user’s questions and the data in Azure Cognitive Search. You need to provide the following parameters for this class:

- llm: the language model that generates the natural language responses

- retriever: the retriever that fetches the relevant documents from the Azure Cognitive Search service.

- condense_question_prompt: the prompt template that rephrases the user’s follow up questions to be standalone questions.

- return_source_documents: the option to return the source documents along with the responses.

- verbose: the option to print the intermediate steps and results.

    qa = ConversationalRetrievalChain.from_llm(
        llm=llm,
        retriever=vector_store.as_retriever(),
        condense_question_prompt=CONDENSE_QUESTION_PROMPT,
        return_source_documents=True,
        verbose=False,
    )
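To show roughly what happens when the chain is called, the sketch below walks through the same three stages in plain Python: condense the follow-up question, retrieve documents, and generate an answer. It is an illustration under our own assumptions and not LangChain's implementation. The names conversational_retrieval, format_history, fake_llm, and fake_retriever are hypothetical stand-ins so the example runs without any Azure resources.

```python
CONDENSE_TEMPLATE = (
    "Given the following conversation and a follow up question, "
    "rephrase the follow up question to be a standalone question.\n"
    "Chat History: {chat_history}\n"
    "Follow Up Input: {question}\n"
    "Standalone question:"
)


def format_history(chat_history):
    """Turn (question, answer) pairs into plain text for the prompt."""
    return "\n".join(f"Human: {q}\nAssistant: {a}" for q, a in chat_history)


def conversational_retrieval(question, chat_history, llm, retriever):
    """Condense the question if there is history, retrieve, then answer."""
    if chat_history:
        prompt = CONDENSE_TEMPLATE.format(
            chat_history=format_history(chat_history), question=question
        )
        standalone_question = llm(prompt)
    else:
        # Assumption: with no history the question is already standalone.
        standalone_question = question
    documents = retriever(standalone_question)
    context = "\n".join(documents)
    answer = llm(f"Context: {context}\nQuestion: {standalone_question}\nAnswer:")
    return {"answer": answer, "source_documents": documents}


# Hypothetical stand-ins for the chat model and the Azure Cognitive Search retriever.
def fake_llm(prompt):
    if prompt.endswith("Standalone question:"):
        return "What has been published about vector search?"
    return "stub answer"


def fake_retriever(query):
    return [f"document matching: {query}"]


result = conversational_retrieval(
    "What about vector search?",
    [("Tell me about the blog.", "It covers search topics.")],
    fake_llm,
    fake_retriever,
)
print(result["source_documents"])
```

The retriever receives the rewritten standalone question rather than the raw follow-up, which is why a short follow-up such as "What about vector search?" can still match the right documents.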

- Define a function called search, which takes the conversation so far as a list of messages, with the user’s current question as the last item, and returns a response.

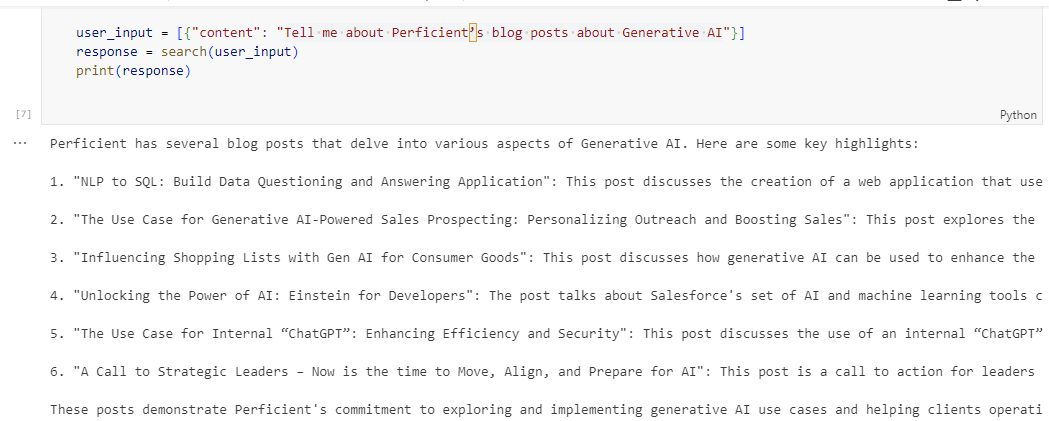

    def search(user_input):
        # The last message is the current question; earlier messages are the history.
        query = user_input[-1]["content"]
        history = [item["content"] for item in user_input[:-1]]
        # Pair each earlier question with the answer that followed it.
        chat_history = [(history[i], history[i + 1]) for i in range(0, len(history) - 1, 2)]
        result = qa({"question": query, "chat_history": chat_history})
        return result["answer"]

- Test the function with some sample inputs and see the outputs in the notebook.

    user_input = [{"content": "Tell me about Perficient’s blog posts about Generative AI"}]
    response = search(user_input)
    print(response)

Here is the screenshot for reference:

Conclusion

In this blog, we have demonstrated how to build a conversational search application that can leverage the power of Azure Cognitive Search and OpenAI embeddings to provide relevant and natural responses to the user’s queries.

By combining Azure Cognitive Search, OpenAI, and ConversationalRetrievalChain, we have created a conversational search application that can understand the intent and meaning of the user’s query, rather than just matching keywords. We hope you have enjoyed this blog and learned something new. If you have any questions or feedback, please feel free to leave a comment below. Thank you for reading!

Additional References

- https://python.langchain.com/docs/integrations/vectorstores/azuresearch

- https://python.langchain.com/docs/integrations/chat/azure_chat_openai

- How to use Azure Blob Data and Store it in Azure Cognitive Search along with Vectors

- How to Scrape a Website and Extract its Content