What is a Data warehouse?

A Data warehouse is a concept that aggregates data from different sources into a single, central, consistent data store to provide meaningful business insights. It is typically used to connect and analyze heterogeneous sources of business data. To move data from one or more sources to the data warehouse, ETL is used (Extract, Transform, Load). However, the challenge in ETL is dealing with changes in data with respect to time, such as when a customer changes their address, or a product changes its price. This is where SCD (Slowly Changing Dimension) comes into play.

How SCD works?

An SCD is a technique that tracks changes to dimension tables over a period of time. Dimension tables contain descriptive information about business entities, such as customers, products, etc. SCD keeps track of changes to these entities gradually with time so that historical data can be analyzed correctly. The three broadly used SCD types are SCD1, SCD2, and SCD3.

Slowly Changing Dimension Type 2:

The Slowly Changing Dimension Type 2 (SCD Type 2) is one of the most acquainted dimensions in a data warehouse. SCD type 2 stores a record’s history in the dimension table. Now, in any ETL application, effective dates (such as start and end dates) and the flag approach are the dominant ways for SCD type 2. The concept of SCD type 2 is to identify the new records and insert them into the dimension table with the surrogate key and Current Flag as “Y” (stands for active indicator). Identify the changed records meaning those records already exist in the dimension table with changed values, re-insert them into the table and Current Flag as “Y” too. Update the existing records in the table and Current Flag as “N” for those records that consist of old values.

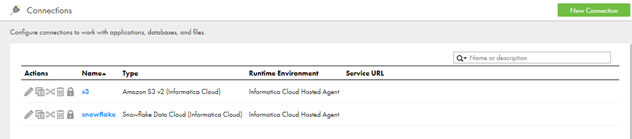

Connections:

In IICS, go to the Administrator service, and click on the connections to create connectors for s3 and Snowflake. Get the add-on connections if not available.

Metadata Structures:

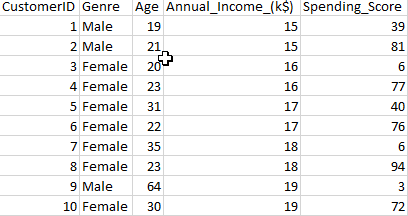

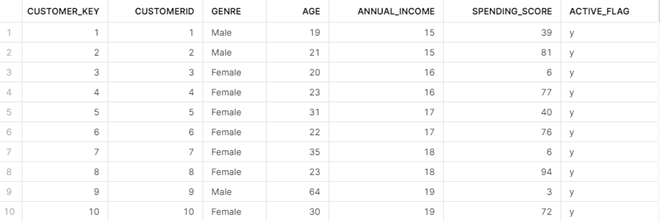

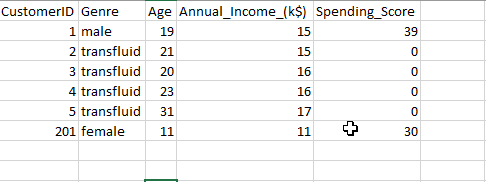

Source and target table structures are shown below:

Source file structure:

Target table structure:

Steps for Data Pipeline:

Enter IICS and Choose Data Integration Services

Go to New Asset-> Mappings-> Mappings

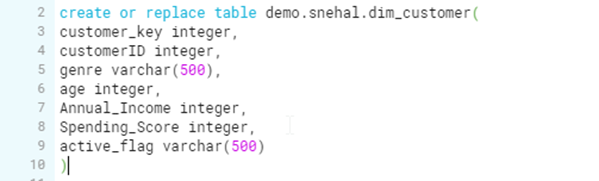

1. Drag the source and configure it with the source file.

2. Drag a lookup. Configure it with the target table and add the conditions below:

3. Create a new expression and connect both the source and the lookup to it as described above. If there are name conflicts, it may ask you to resolve them by using prefixes or suffixes. I used SQ_ as a prefix and LKP as a suffix. Add an output port o_new_record as a type string and configure it as IIF(ISNULL(CUSTOMER_KEY), true, false).

Moreover, Add another output port as o_update_record as a type string and configure it as IIF(not ISNULL(CUSTOMER_KEY), true, false). Also, two output ports of type string should be added, with old_flag as ‘n’ and new_flag as ‘y’.

4. Add a filter and connect the expression to the filter as mentioned. Furthermore, set the condition to o_update_record to filter out the records that need to be updated.

5. Drag the target (UPD_E) and connect the filter as mentioned. Select the operation as an update from the dropdown.

Most Importantly, select the Surrogate key (CUSTOMER_KEY) as the update column.

Go to the Field Mapping tab, map CUSTOMER_KEY with the CUSTOMER_KEY and ACTIVE_FLAG with the old flag.

6. Drag the target (INS_E) and connect the filter as mentioned. Select the operation as an insert from the dropdown. Map the ACTIVE_FLAG with the new_flag and other target fields using the field mapping tab.-

7. Create a sequence generator and connect with the target instances as mentioned, it helps to generate the surrogate key in the dimension table.

8. Also, Add another filter and connect the expression to the filter as mentioned. Set the condition to o_new_record to identify the new records and insert them into the dimension table.

9. Drag target(INS_N) and connect the filter with o_new_record condition to it as mentioned. Select the operation as an insert from the dropdown. Map the ACTIVE_FLAG with the new_flag and other target fields using the field mapping tab.

Execution step:

Save and close the mapping after you have completed the steps. Click on New Asset -> Tasks-> Mapping Task.

Enter your Task Name, choose the Runtime Environment, and select the Mapping you created. Click Next (Sequence) and Finish. As a result, Mapping Task will be saved, then run.

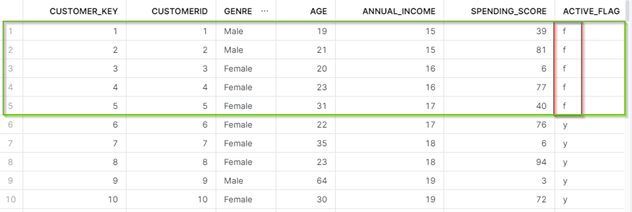

- In the first run, the target table i.e., DIM_CUSTOMER will look as follows: –

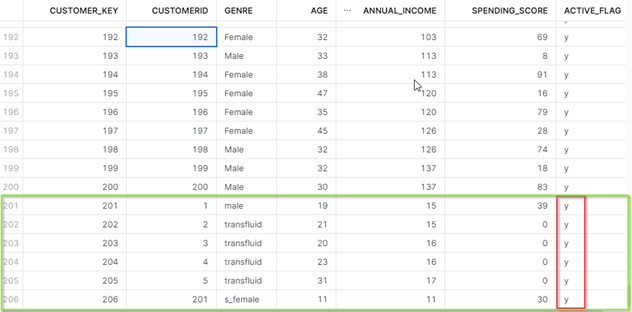

- Pick the same file and make some changes in the records. Insert it in s3.

- Afterward, add the changed source file in the source, then re-run the job to see if the historical records are being captured in the target table as per the SCD2 logic we have defined.

- As you can see in the figures above, a new record was inserted with the CUSTOMER_ID value of 201. Moreover, all old records with changed values i.e. (1-5 customer_id records) are added with ACTIVE_FLAG as ‘y’, and earlier records are flagged as ’f’.

Conclusion

Thank you for reading, I hope this blog will help you implement SCD2 logic in your project using IICS.

Please feel free to reach out in the comments sections if you have any questions or want to build the pipeline, I would be happy to help!

Good luck!`

A great post.Thanks for sharing it with us.It is very informative and true.

https://www.truckbazi.com/

Very informative

Nice Blog! Keep it up!!