Introduction

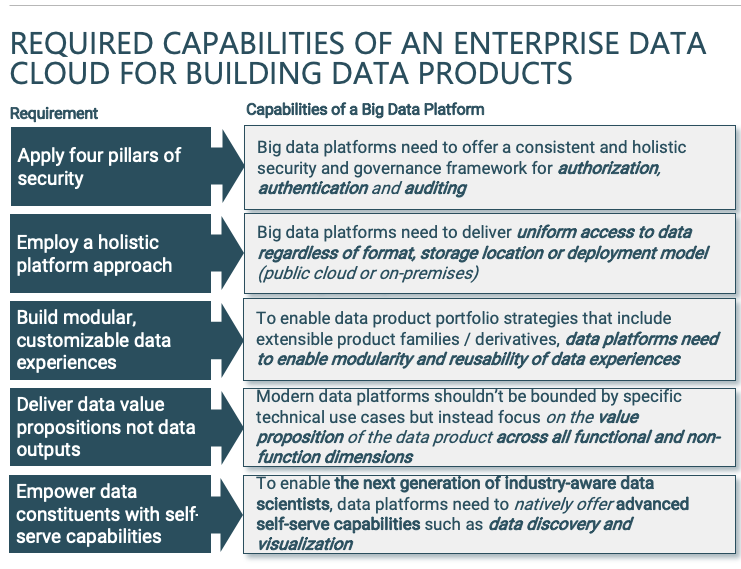

In the first part of this series, I outlined the prerequisites for a modern Enterprise Data Platform to enable complex data product strategies that address the needs of multiple target segments and deliver strong profit margins as the data product portfolio expands in scope and complexity:

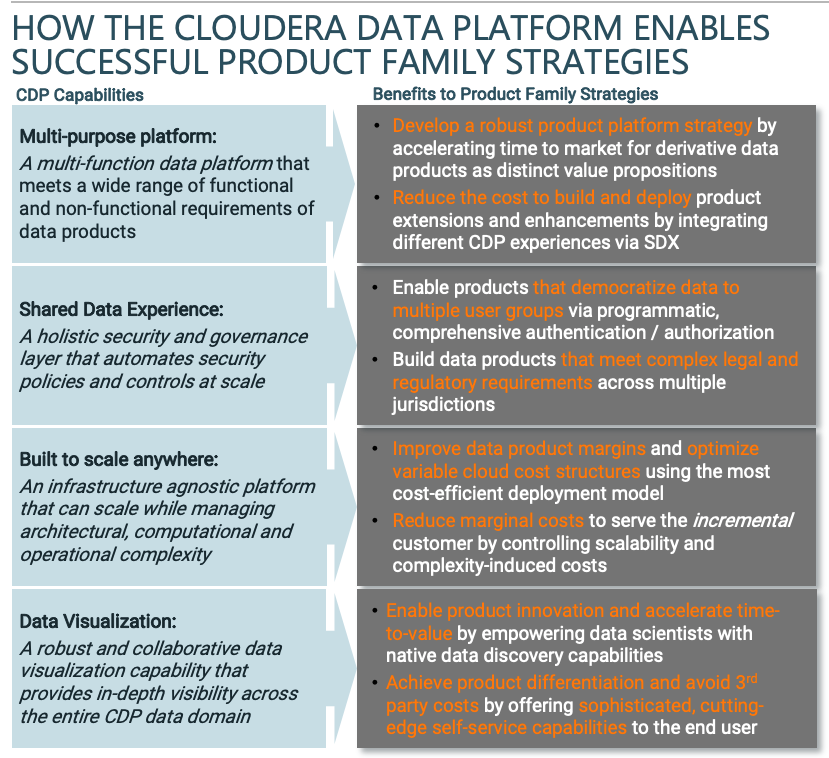

In this article, I will dive into the specific capabilities of the Cloudera Data Platform (CDP) that have helped organizations meet those prerequisites and fulfill a successful data product strategy vision.

How CDP Enables and Accelerates Data Product Ecosystems

A multi-purpose platform focused on diverse value propositions for data products

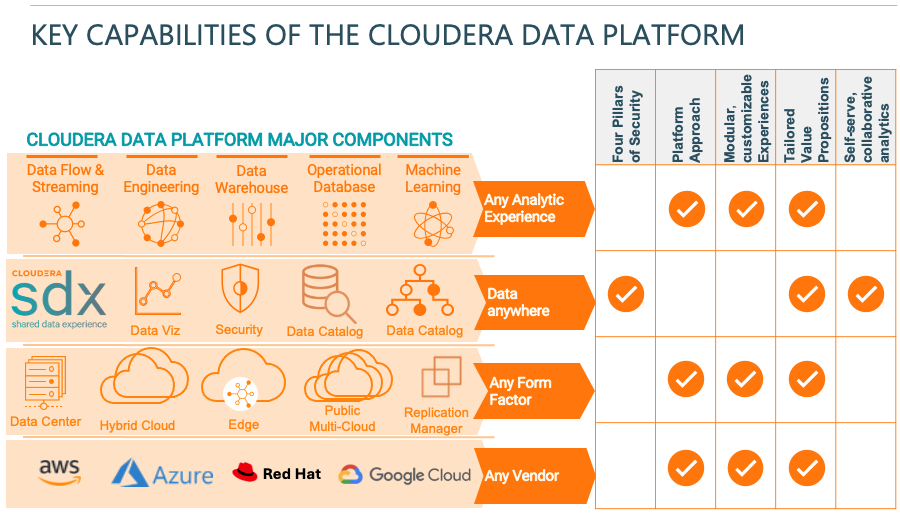

The Cloudera Data Platform comprises a number of ‘data experiences’, each delivering a distinct analytical capability using one or more purpose-built Apache open source projects such as Apache Spark for Data Engineering and Apache HBase for Operational Database workloads. It is both the superior technical characteristics of each individual data experience and the cohesive choreography between them that make CDP the ideal data platform for complex data products that include multiple stages of analytical processing to deliver differentiated value propositions.

All these different experiences leverage the same underlying data, security and governance layer via the Control Plane and the Shared Data Experience that enable a high degree of integration and modularity between components. As a result, CDP-enabled data products can meet multiple and varying functional and non-functional requirements that correspond to product attributes, each fulfilling specific customer needs. Those major functional and non-functional requirements include:

Analytical Outcome: CDP delivers multiple analytical outcomes including, to name a few, operational dashboards via the CDP Operational Database experience or ad-hoc analytics via the CDP Data Warehouse to help surface insights related to a business domain.

Analytical Velocity: CDP offers experiences that can meet different analytical velocity requirements (e.g., batch or real-time analytics). For example, the Cloudera DataFlow experience offers an integrated event processing capability to deliver low-latency analytics by combining Flow Management (using Apache NiFi), Streams Messaging (using Apache Kafka) and Stream Processing / Analytics (using Apache Flink / SQL Stream Builder).

Environment Consumption Pattern: Replication Manager enables efficient delivery of various consumption patterns, both transient and persistent. For example, using burst-to-cloud to replicate select data assets with the requisite security and governance controls allows IQVIA clients to stand up a short-lived, PHI-secure environment in which external and internal data consumers can perform analytical tasks without being able to extract any data.

Service Level Agreements (SLAs): The Shared Data Experience delivers an infrastructure abstraction capability that enables data products to meet different SLAs by leveraging a singular or a composite deployment model (e.g., private cloud only, public multi-cloud or hybrid). For example, in the case of a US data communications company that offers 911-related services to federal and county agencies, only a private cloud model meets the SLAs required by those entities.

Legal and Regulatory Requirements: CDP delivers data products to address complex and continuously evolving legal and regulatory requirements by offering a programmatic way to dynamically manage data permissions at a granular level by type of data asset and for different roles / users interacting with and manipulating those data assets.

Data Types and Sources: The multitude of data experiences enables efficient processing of different data types, such as structured and unstructured data collected from any potential source. As an example, Apache MiNiFi, a subproject of Apache NiFi, has been purpose-built to enable data collection at the edge while minimizing resource consumption and performance overheads.
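To make the Analytical Velocity item above a little more concrete, here is a minimal, illustrative sketch of the three stages it names: collection, buffering and windowed processing. It uses only the Python standard library and does not call NiFi, Kafka or Flink; the function names, the `timestamp,sensor` line format and the 10-second window are all assumptions made for illustration.

```python
from collections import Counter, deque
from typing import Iterable, Iterator

Event = tuple[float, str]  # (timestamp in seconds, sensor id); hypothetical format


def collect(raw: Iterable[str]) -> Iterator[Event]:
    """Flow-management stand-in: parse 'timestamp,sensor' lines, skipping malformed ones."""
    for line in raw:
        parts = line.strip().split(",")
        if len(parts) != 2:
            continue
        try:
            yield float(parts[0]), parts[1]
        except ValueError:
            continue  # timestamp was not numeric


def tumbling_counts(
    events: Iterable[Event], window_s: float
) -> Iterator[tuple[int, dict[str, int]]]:
    """Stream-processing stand-in: per-sensor counts in fixed, non-overlapping windows.

    Assumes events arrive ordered by timestamp.
    """
    current = None
    counts: Counter = Counter()
    for ts, sensor in events:
        window = int(ts // window_s)
        if current is not None and window != current:
            yield current, dict(counts)  # emit the window that just closed
            counts = Counter()
        current = window
        counts[sensor] += 1
    if current is not None:
        yield current, dict(counts)  # emit the final, still-open window


# Messaging stand-in: an in-memory buffer between collection and processing.
raw_lines = ["0.5,a", "1.2,b", "bad", "9.9,a", "10.1,a", "x,y"]
buffer = deque(collect(raw_lines))
for window, per_sensor in tumbling_counts(buffer, window_s=10.0):
    print(window, per_sensor)
```

In a real deployment each stand-in would be a separate, independently scaled service; the sketch only shows how the stages hand data to one another.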

A Robust Security Framework

Security has been a paramount consideration throughout the technology evolution of both the CDH and HDP runtimes, and CDP represents the next major step in that journey:

An automation-driven, security-by-default paradigm has been introduced for all data experiences and is enabled by the Cloudera Control Plane and the Shared Data Experience. Those CDP components enable a centralized and highly automated user authentication capability that propagates user identities across all relevant environments / data domains.

A fine-grained data permissioning mechanism enabled by Apache Ranger provides a unified security layer for controlling user authorization for database elements at a granular level (e.g., row-level and column-level authorization on database tables) and for permissioning users at folder level within a storage volume such as a cloud bucket (through the Ranger Authorization Service, or RAZ).

A natively integrated audit mechanism enabled by Apache Ranger and Apache Atlas that is embedded into all persistent and transient clusters both on premises and in the public cloud. That audit mechanism enables Information Security teams to monitor changes from all user interactions with data assets stored in the cloud or the data center from a centralized user interface.
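As a rough illustration of what row-level and column-level authorization combined with auditing means in practice, the sketch below models the idea in plain Python. It is not the Apache Ranger API: the `Policy` and `PolicyEngine` classes, the role names and the `***` mask are all hypothetical, chosen only to show the behavior described above.

```python
from dataclasses import dataclass, field
from typing import Callable

Row = dict[str, object]


@dataclass
class Policy:
    """Hypothetical per-role policy: which rows are visible, which columns are masked."""
    row_filter: Callable[[Row], bool]
    masked_columns: frozenset[str] = frozenset()


@dataclass
class PolicyEngine:
    """Hypothetical engine that applies policies and records every read."""
    policies: dict[str, Policy]
    audit_log: list[tuple[str, str, int]] = field(default_factory=list)

    def read(self, user: str, role: str, rows: list[Row]) -> list[Row]:
        policy = self.policies.get(role)
        if policy is None:
            visible: list[Row] = []  # deny by default when no policy exists for the role
        else:
            visible = [
                {k: ("***" if k in policy.masked_columns else v) for k, v in row.items()}
                for row in rows
                if policy.row_filter(row)
            ]
        # Audit entry: who read, under which role, and how many rows were returned.
        self.audit_log.append((user, role, len(visible)))
        return visible


patients = [
    {"id": 1, "region": "EU", "diagnosis": "A"},
    {"id": 2, "region": "US", "diagnosis": "B"},
]
engine = PolicyEngine(
    {"eu_analyst": Policy(lambda r: r["region"] == "EU", frozenset({"diagnosis"}))}
)
print(engine.read("alice", "eu_analyst", patients))
print(engine.audit_log)
```

The point of the sketch is the shape of the mechanism: policies are data that can be changed programmatically, access is denied by default, and every read leaves an audit entry regardless of outcome.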

All these security capabilities deliver two important benefits to product strategies:

- First, they enable sensitive data to be shared securely across multiple user groups and a large number of end users via a programmatic, API-driven mechanism, thus accelerating client on-boarding and data product revenue realization.

- Second, they allow data products to adapt quickly to constantly evolving legal and regulatory requirements in the different jurisdictions where the data product is commercialized, reducing time-to-market in new regions.

Build Once, Scale Anywhere

Cloudera technology has met the performance / SLA requirements and processing volumes for some of the biggest data platform ecosystems deployed by leaders across many industry verticals. Having such a distinctive track record, we built CDP under three major scalability requirements that underpin complex data product strategies:

Architectural Scalability: Since the Shared Data Experience serves as the integration and abstraction layer across all data domains (edge, on-premises and public cloud) and data experiences, CDP doesn’t require additional components and point-to-point integrations to achieve seamless orchestration between intermediate data stages as the platform scales, an issue inherent in other architectural paradigms such as data mesh and data fabric models.

Operational Scalability: Learning from the operational challenges of deploying and administering legacy, on-premises CDH and HDP deployments, CDP has fully automated many previously arduous and error-prone tasks related to environment provisioning, configuration, user authorization, etc., ultimately reducing the operational cost of managing the platform.

Processing Scalability: As we’ve previously demonstrated (e.g., in recent price-performance comparisons with other cloud data warehouses or other cloud-native services such as EMR and HDInsight), CDP can deliver typical big data analytical workloads in the public cloud at a much lower cost. In addition, it offers the option to execute the same workload in whichever deployment model (private, hybrid or public cloud) minimizes total cost of ownership.

The benefits of architectural, operational and processing scalability become relevant as product families grow more complex (to include a large number of product extensions or derivative value propositions) and as the number of end users for a given product grows substantially. These scalability advantages enable organizations to manage the cost structure of product derivatives and enhancements as product complexity grows, by adhering to the five principles of modular product families: commonality of modules, combinability of modules, functional binding, interface standardization, and loose coupling of components. They also reduce the incremental or marginal cost of serving each end user: as higher product adoption increases workload consumption, CDP can support that consumption cost-efficiently by using the optimal infrastructure deployment model.

A Holistic Visual Exploration of Data

The new visualization and data cataloging capabilities introduced with CDP, including the CDP Data Catalog and Visual Applications, break down the barriers to data exploration and discovery that exist in disparate and heterogeneous data ecosystems:

The data lineage capabilities that come with CDP Data Catalog enable data participants to gain full visibility into the origin, relationships and changes of data as it flows through the different CDP experiences.

A single pane for visual analytics is being delivered by bringing together analytical outputs produced by teams using different data experiences. For example, a user can combine a pie chart from a data warehouse instance and predictive results from a machine learning model in a single Data Visualization dashboard.

An intelligent data exploration mechanism using Cloudera’s Natural Language Search capabilities helps uncover relationships between data by letting users ask questions in plain language. Additionally, CDP will automatically select and display the most applicable visual presentation format for a particular analytical query.

A visual exploration capability unconstrained by cluster-specific boundaries that were present in legacy CDH and HDP implementations due to the division of a big data deployment in multiple, isolated clusters for specific technical use cases or Business Unit needs. Contrary to that architecture, the Shared Data Experience acts as a logical integration layer between different CDP environments, thus delivering a truly holistic data exploration capability to users who access multiple environments / domains of data products across e.g., different geographic regions.

The integrated data discovery and visualization capabilities of CDP deliver value to both internal product development activities and data product functionality:

- For internal data constituents, CDP accelerates product development efforts by enabling multi-disciplinary teams to collaborate with advanced self-serve visualization functionalities and deliver compelling value propositions.

- In terms of data product functionality, CDP-enabled data products deliver cutting-edge data discovery and exploratory analytics capabilities by exposing the CDP Data Catalog and Visual Applications to the end user. That functionality eliminates the need to build those capabilities internally or to leverage a 3rd party offering (e.g., via an OEM relationship) for a given data product.

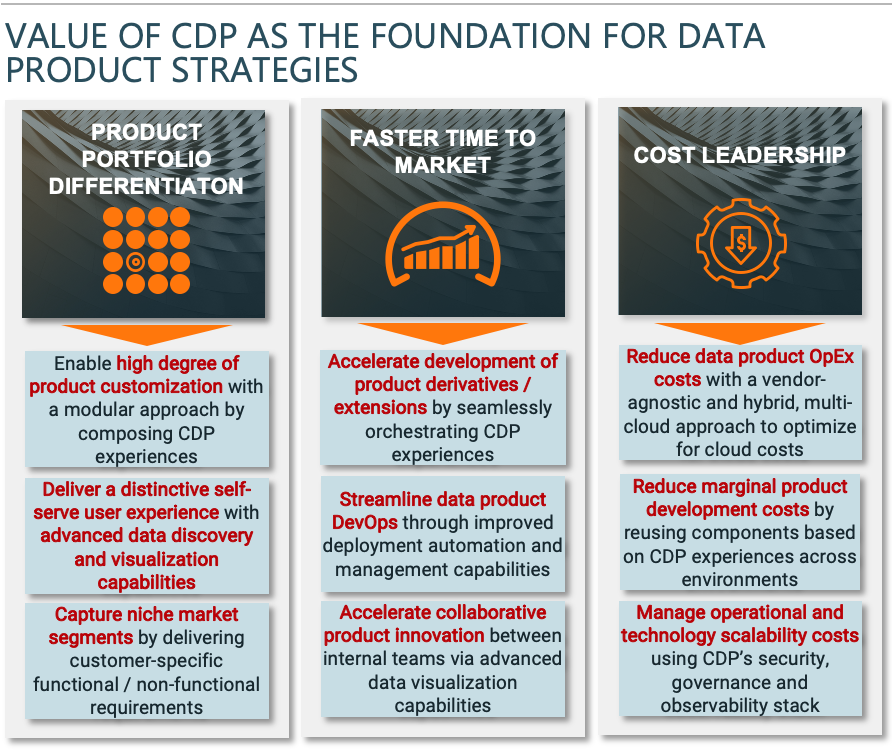

Conclusion

The capabilities I presented above articulate the value of the Cloudera Data Platform in enabling organizations to build, operationalize and scale product families that comprise a broad range of value propositions, target multiple market segments and capture a greater share of the total addressable market.

However, as I have observed, many organizations focus on a single value proposition (delivered by means of a generic data product) without taking a ‘product platform’ approach. In doing so they ignore what Michael Porter described as an inescapable truth in highly competitive domains: organizations that cannot build and sustain a competitive advantage by means of cost leadership or product differentiation end up ‘stuck in the middle’, a position that results in weak profitability and market presence. I therefore always encourage organizations to think beyond their short-term product plans (and the technology decisions those plans drive) and shift towards a longer-term mindset in which a sustained product differentiation strategy informs the right technology choices.

Cloudera has helped organizations across the entire data product development process. Starting with our Value Management team, we’ve helped clients design the Go-To-Market strategy for emerging data products (including market sizing / segmentation), and later on we have helped develop the technology architecture of data product ecosystems with our Professional Services organization and SMEs. I would be more than happy to further describe our process and explain our perspectives on data product development. To learn more about the Cloudera Data Platform and its capabilities, please visit: https://www.cloudera.com/products/cloudera-data-platform.html