“New is always better.”

Barney Stinson, a fictional character from the CBS show How I Met Your Mother

No matter how overblown it may sound, the famous quote is applicable to the technology world in many ways. In the last few decades, we’ve seen a lot of architectural approaches to building data pipelines, each replacing the last and promising better and easier ways of deriving insights from information. There have been relational databases, data warehouses, data lakes, and even a combination of the latter two.

And whenever we started thinking, “Hey, that’s it. That’s exactly what every data-driven organization has been trying to find for years,” someone would come up with a new, better solution. Data mesh is another hot trend in the data industry claiming to be able to solve many of its predecessors' issues.

This post explains what a data mesh is, how it works, which organizations may benefit from implementing it, and how to approach this new data management unicorn.

What is a data mesh?

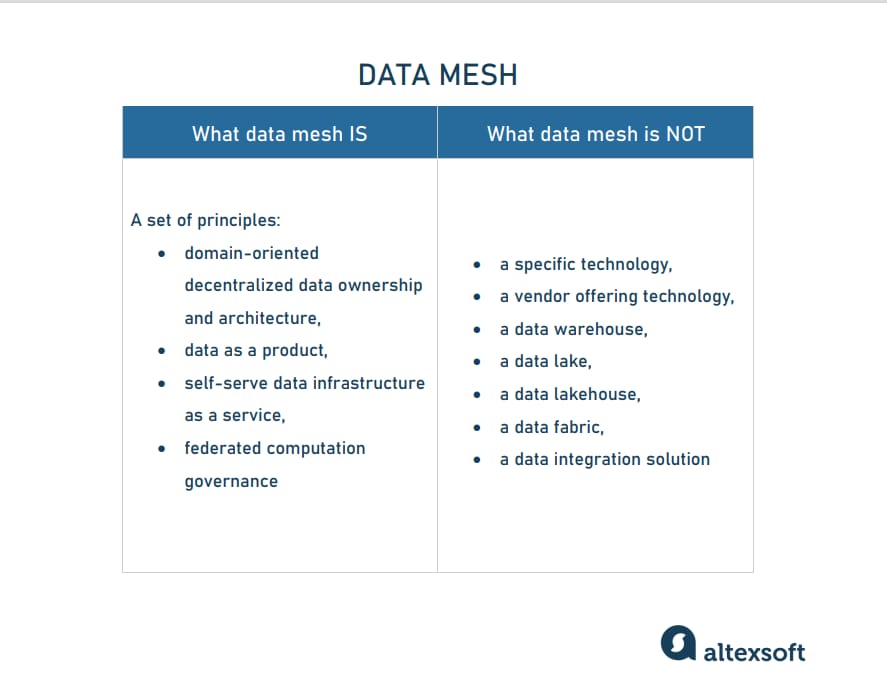

The first thing you should know is that a data mesh is a really abstract concept in its early days, so the learning and discovering processes are still going on. That’s why there’s so much confusion as to what it is and is not. We’ll try to draw a line of distinction so you know the difference.

What data mesh is and is not

What a data mesh IS

A data mesh is a set of principles for designing a modern distributed data architecture that focuses on business domains, not the technology used, and treats data as a product.

For example, your organization has an HR platform that produces employee data. In the data mesh ecosystem, this will be a separate HR domain that owns its data, which is a product of the company. This is a huge change of thinking as most human resources departments view themselves neither as a product team nor as owners of datasets that they need to provide for the rest of the company.

So, the HR domain may include a few sources from which information comes like the recruiting app, a benefits platform, and a payroll app. The HR team will manage all of this data and generate datasets to be consumed by other users in the company like the marketing team. They also own the governance of their domain. The same will be done by other domains around the company, e.g., marketing domain, sales domain, logistics domain, etc.
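The HR example above can be sketched in a few lines of Python. This is only an illustration of the ownership idea, not a reference implementation: the `Domain` class, its `publish` and `read` methods, and the `headcount_by_department` dataset are hypothetical names invented for this sketch.

```python
from dataclasses import dataclass, field


@dataclass
class Domain:
    """A business domain that owns its sources and the datasets it publishes."""

    name: str
    owner_team: str
    sources: list[str] = field(default_factory=list)
    published: dict[str, list[dict]] = field(default_factory=dict)

    def publish(self, dataset_name: str, rows: list[dict]) -> None:
        # The owning domain, not a central data team, decides what is published.
        self.published[dataset_name] = rows

    def read(self, dataset_name: str) -> list[dict]:
        # Consumers from other domains only see published datasets.
        return self.published[dataset_name]


# Hypothetical HR domain with the three sources mentioned in the text.
hr = Domain(
    name="hr",
    owner_team="HR team",
    sources=["recruiting_app", "benefits_platform", "payroll_app"],
)
hr.publish("headcount_by_department", [{"department": "marketing", "headcount": 12}])

# The marketing team consumes the dataset without touching HR's source systems.
marketing_view = hr.read("headcount_by_department")
```

The point of the sketch is the boundary: other teams go through what the domain chooses to publish, while the source applications stay internal to HR.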

Credit for coining the term and creating the concept of a data mesh goes out to Zhamak Dehghani, the director of emerging technologies at Thoughtworks North America, who sees a data mesh as “a paradigm shift in how we approach data: how we imagine data, how we capture and share it, and how we create value from it, at scale and in the field of analytics and AI [...] within or across organizations.”

She formulated the thesis in 2018 and published her first article “How to Move Beyond a Monolithic Data Lake to a Distributed Data Mesh” in 2019. Since that time, the data mesh concept has received a lot of attention and appreciation from companies pioneering this idea. Her book Data Mesh: Delivering Data-Driven Value at Scale was published three years later in March 2022.

The easiest way to understand the concept of a data mesh is by looking at the core principles behind it, which we’re going to uncover more extensively later on. The core principles are

- domain-oriented, decentralized data ownership and architecture;

- data as a product;

- self-serve data infrastructure as a service; and

- federated computational governance.

The data mesh concept also draws on several established ideas from software engineering:

- DevOps (development and operations) is a practice that aims at merging development, quality assurance, and operations (deployment and integration) into a single, continuous set of processes. This is a methodology and a natural extension of Agile and continuous delivery approaches.

- Domain-driven design (DDD) essentially means designing software in a manner that aligns as closely as possible to a business domain. So, you’re trying to make the software itself reflect the underlying business as opposed to those things being completely separate.

- Microservices represent the architectural approach to building an application backend as a composition of multiple, loosely coupled, and independently deployable smaller components, or services. Each service — for example, an order processing service, a product catalog, or an email subscription manager — runs its own process and has its own codebase, CI/CD pipelines, and DevOps practices.

What data mesh is NOT

Perhaps you have stumbled upon something like “Is data mesh similar to data fabric?” or “How is data mesh different from a data lake?” and a bunch of other, sometimes reasonable, sometimes ridiculous questions. People get confused by new terms and often think that this is just old wine in new bottles.

So, to avoid any confusion, please be aware that data mesh is NOT

- a specific technology or a vendor offering it;

- a data warehouse, which is a traditional way of storing structured data for analytical purposes;

- a data lake, which is a central repository for storing any sort of data whether it is structured or unstructured;

- a data lakehouse, which is a combined version of the previous two stores, capable of storing varied data but bringing structure to it; or

- a data fabric, which is a single environment consisting of a unified architecture, and services or technologies running on that architecture. It pulls together data from legacy systems, data lakes, data warehouses, SQL databases, and apps, providing a holistic view of business performance.

Data mesh shifts

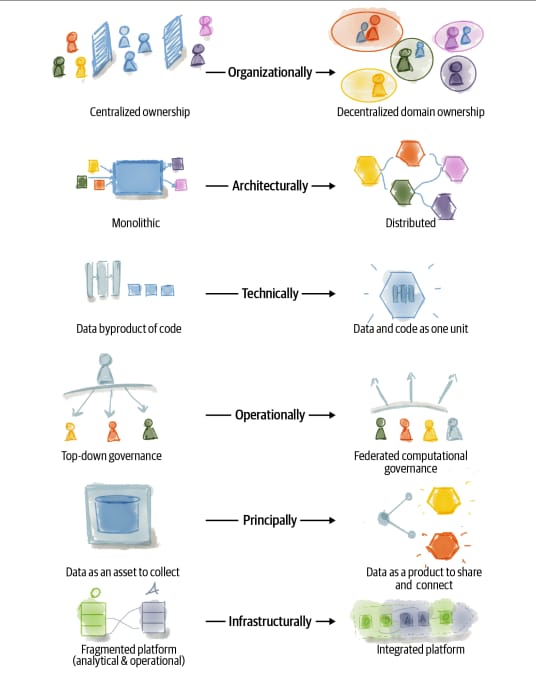

Data mesh is an approach that introduces multidimensional technical, cultural, organizational, and other paradigm shifts for companies that formerly used a centralized data management architecture.

Or more data buzzwords are coming… Who can tell

Data meshes require significant shifts in thinking about how data should work in a company. You don’t have to be scared though. They are quite understandable… Mostly.

Data mesh dimensions of change.

Source: Data Mesh: Delivering Data-Driven Value at Scale

The organizational shift means switching from centralized ownership of data by specialists who run the data platform technologies to a decentralized model pushing ownership and accountability of the data back to the business domains where data is produced or used. Some organizational approaches to analytics ownership are explained in our article about data science teams.

The architectural shift means connecting data through a distributed mesh of data products accessed through standardized protocols instead of collecting data in monolithic warehouses and lakes.

The technological shift means moving to solutions that treat data and code as one autonomous unit.

The operational shift means replacing top-down governance that depends on human intervention with an automated federated model.

The principal shift means switching the focus from data as an asset to collect to data as a product for all users to share and connect to.

The infrastructural shift means going from a fragmented platform with separate operational and analytical planes to an integrated infrastructure for both operational and data systems.

Through all these shifts, data mesh is called on to solve the problems of centralized data platforms by giving more flexibility and independence, agility and scalability, cost-effectiveness, and cross-functionality.

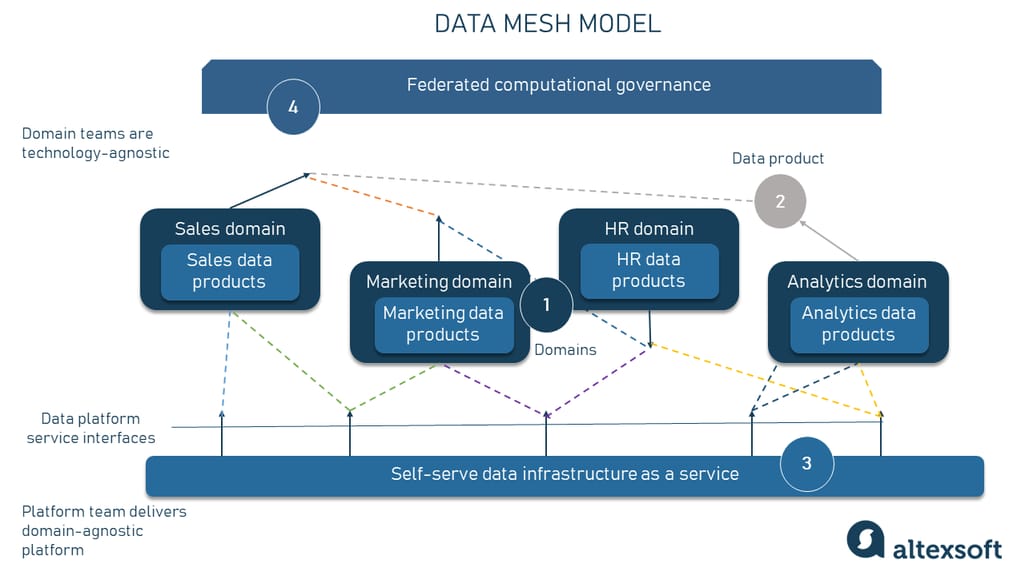

Data mesh can be utilized as an element of an enterprise data strategy and can be described through four interacting principles.

Data mesh principles and architecture

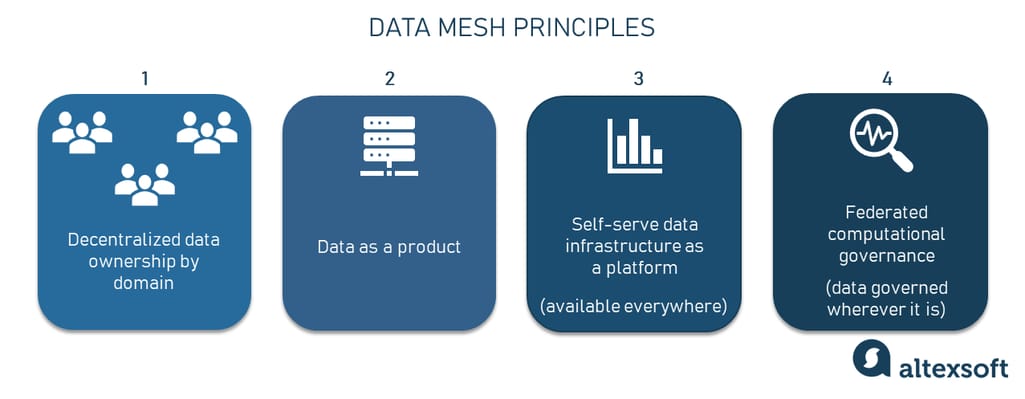

Regardless of the technology, there are four principles that capture what underpins the data mesh’s logical architecture and operating model. We’re going to peer at them in a bit more detail.

What a data mesh may look like

These principles are designed to progress us toward the data mesh objectives: increase value from data at scale, sustain agility as an organization grows, and embrace change in a complex and volatile business context.

Four principles of a data mesh architecture

Decentralized data ownership by domain

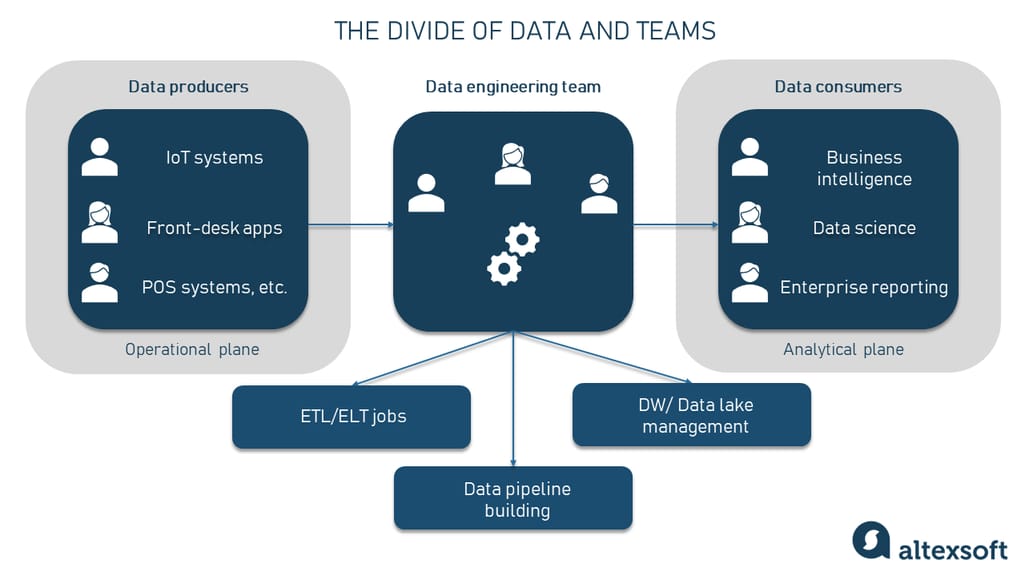

Zhamak Dehghani divides data into two planes:

- The operational plane presents the data from the source systems where it originates, for example, front-desk apps, IoT systems, and point-of-sale systems. This data describes daily operations like purchasing materials from suppliers, hiring new employees, or shipping products to customers. It’s often used by internal apps managing business processes, such as ERPs, accounting software, and medical practice management systems.

- The analytical plane embraces data that is collected and transformed for analytical purposes such as enterprise reporting, business intelligence, data science, etc. This data is aligned with specific end-customer use cases that often require data from different domains. The examples of business use cases consuming data from the analytical plane are dynamic pricing, sentiment analysis, and product and content recommendations.

The divide of data and teams

As the picture above clearly shows, organizations have data producers and operational data on the left side and data consumers and analytical data on the right side. In between, there are data engineers managing the data infrastructure with the ETL/ELT processes and tools required to transform operational data into analytical data.

The problem is that all three parts of the puzzle have existed in silos for years. Data producers lack ownership of the information they generate, which means they are not in charge of its quality. Nor do they typically understand how exactly their data will be used in the analytical plane. Data engineers, in turn, may know little to nothing about the specifics of a business domain. All this results in situations where data consumers receive data that doesn’t fit their business use case. Sooner or later, the issue had to be addressed.

Just as the tech community removed the wall between development and operations by creating DevOps, the data mesh is focused on removing the wall between operational data teams, data engineering teams, and analytical data teams. How? By distributing the ownership of analytical data to the business domains closest to the data: either the source of the data or its main consumers.

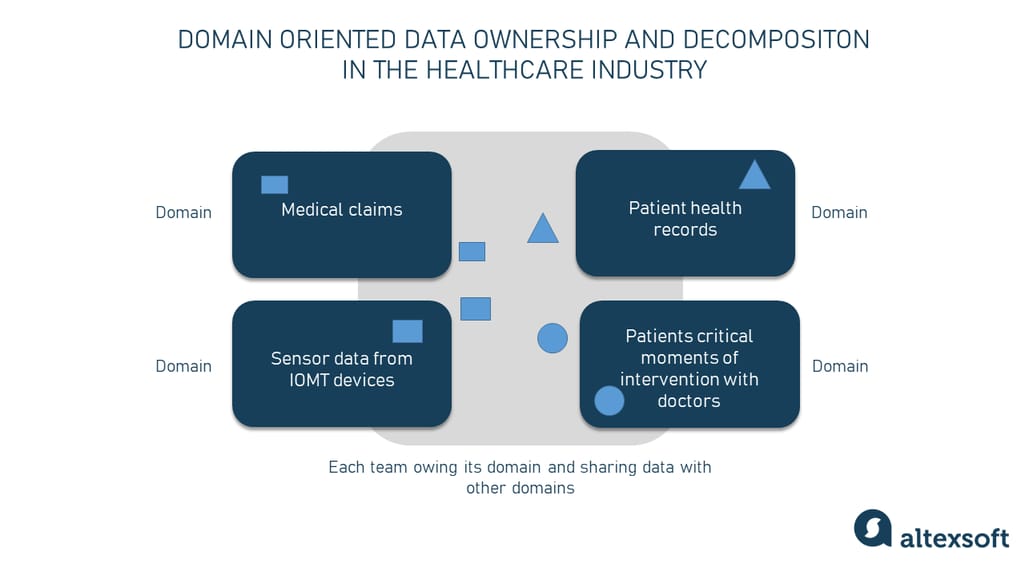

Domain-oriented data ownership and decentralization in the healthcare industry

The domain is basically a certain area of business with clearly defined borders of what should constitute it in an organization. Once a domain is defined, the data mesh principle of data ownership within that domain can be applied.

There are two main roles here:

- A data product owner is a person whose main task is to map out data products (more on these in the next section). This role focuses on satisfying the demands of data users, e.g., data scientists who are building an ML model. Data product owners have a clear picture of their customers, how these people are going to use the data, and how they will access it. And it’s their job to guarantee data quality.

- A data engineer is a role inside the domain that is responsible for developing a data product relying on their competency in both data engineering tools and software engineering best practices.

Communication between the domains can be handled through data sharing APIs or an event-streaming backbone built with technologies like Kafka, for example.
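To make the event-streaming option more concrete, here is a minimal in-memory sketch in Python. It is a stand-in for a real backbone such as Kafka, not a Kafka client: the `EventBackbone` class, the `sales.contract_signed` topic name, and the event fields are all assumptions made for illustration.

```python
from collections import defaultdict
from typing import Callable


class EventBackbone:
    """Toy publish/subscribe bus standing in for an event-streaming platform."""

    def __init__(self) -> None:
        # Maps a topic name to the handlers subscribed to it.
        self._subscribers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> None:
        # Deliver the event to every handler subscribed to this topic.
        for handler in self._subscribers[topic]:
            handler(event)


backbone = EventBackbone()

# The marketing domain subscribes to events owned by the sales domain.
marketing_inbox: list[dict] = []
backbone.subscribe("sales.contract_signed", marketing_inbox.append)

# The sales domain publishes an event; it does not need to know who consumes it.
backbone.publish("sales.contract_signed", {"contract_id": "C-001", "amount": 1200})
```

A real deployment would add durability, schemas, and access control, but the decoupling is the same: the producing domain publishes to a topic and consuming domains subscribe independently.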

With this sort of architecture, your first concern is data domains with data pipelines taking the back seat yet not forgotten.

Data as a product

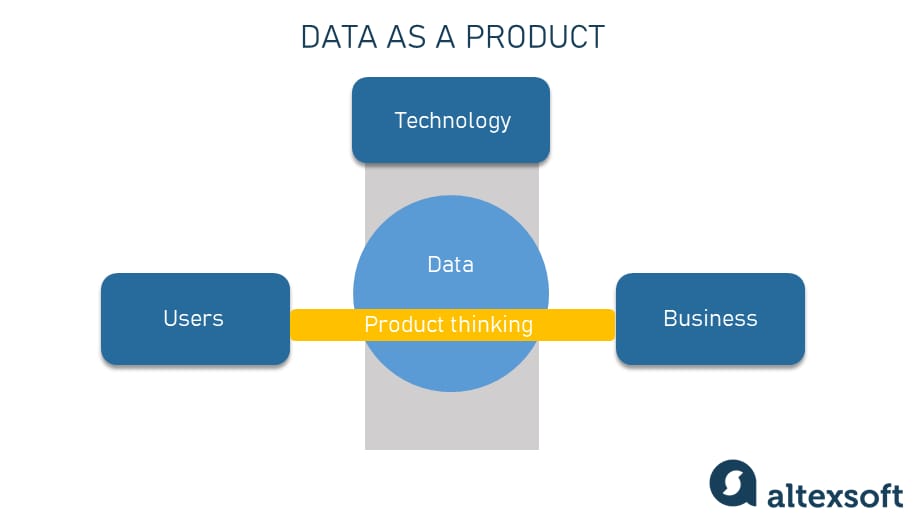

The second, equally important idea is that domains own their data as a product. A data product is a self-contained unit of information created to serve a particular business function and end-user needs. For example, this can be a dataset with metrics taken from a heart rate monitoring device or an analytics dashboard showing how the company performed during the first quarter.

According to Zhamak Dehghani, "Data as a product introduces a new unit of logical architecture called data quantum, controlling and encapsulating all the structural components needed to share data as a product — data, metadata, code, policy, and declaration of infrastructure dependencies — autonomously."
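One way to picture the components listed in that quote is as fields of a single object. The following Python sketch is an assumption-laden illustration, not Dehghani's specification: the `DataQuantum` class, its field names, the `serve` method, and the plausibility thresholds are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class DataQuantum:
    """Bundles data, metadata, code, policy, and infrastructure dependencies."""

    data: list[dict]
    metadata: dict
    transform: Callable[[list[dict]], list[dict]]  # the code shipped with the data
    policy: dict
    infrastructure: list[str]  # declared dependencies, e.g. storage types

    def serve(self, role: str) -> list[dict]:
        # The access policy is enforced by the product itself.
        if role not in self.policy["allowed_roles"]:
            raise PermissionError(f"role {role!r} may not read this data product")
        return self.transform(self.data)


def drop_implausible(readings: list[dict]) -> list[dict]:
    # Illustrative quality rule: keep readings in an assumed plausible range.
    return [r for r in readings if 20 <= r["bpm"] <= 250]


heart_rate = DataQuantum(
    data=[{"patient_id": "p1", "bpm": 72}, {"patient_id": "p2", "bpm": 0}],
    metadata={"owner": "cardiology", "description": "Heart rate monitor readings"},
    transform=drop_implausible,
    policy={"allowed_roles": {"data_scientist"}},
    infrastructure=["object_storage"],
)

clean_readings = heart_rate.serve("data_scientist")
```

Keeping the transformation and the access policy next to the data is what lets the product be shared as one autonomous unit rather than as a raw table plus separate pipeline code.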

Data as a product principle

To apply product thinking here, the data product of a domain must meet certain quality criteria. Conceptually, a product is an intersection between business, users, and technology. It works like this: A user has a problem that the product should solve and a business provides that solution. So, when we bring product thinking to data, we see it as a solution to what a user looks for. Data is no longer treated as raw material, sourced and cleaned via ETL, and then delivered in a structured way.

For data to be called and treated as a product it must be

• discoverable,

• valuable on its own,

• understandable,

• trustworthy,

• natively accessible,

• interoperable, and

• secure.

If we take the sales team, the data should be grouped by the sales contract, using the contract ID as the key, for example, to structure the contract details in an understandable way. The sales domain team must guarantee the quality of the data as well as the rest of the characteristics.
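The sales example can be sketched as follows. The row layout, the field names (`contract_id`, `item`, `amount`), and the `group_by_contract` helper are assumptions made for this illustration.

```python
from collections import defaultdict

# Hypothetical raw rows as they might arrive from a sales application.
raw_rows = [
    {"contract_id": "C-001", "item": "license", "amount": 1000},
    {"contract_id": "C-002", "item": "support", "amount": 300},
    {"contract_id": "C-001", "item": "training", "amount": 200},
]


def group_by_contract(rows: list[dict]) -> dict[str, dict]:
    """Group line items under their contract, using the contract ID as the key."""
    contracts: dict[str, dict] = defaultdict(lambda: {"items": [], "total": 0})
    for row in rows:
        contract = contracts[row["contract_id"]]
        contract["items"].append(row["item"])
        contract["total"] += row["amount"]
    return dict(contracts)


contracts = group_by_contract(raw_rows)
```

A consumer can then look up `contracts["C-001"]` and see the whole contract in one place instead of reassembling it from scattered rows.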

Self-serve data infrastructure as a service

A data mesh requires a self-serve data platform that helps various domain teams create products, share them with users, and manage their full lifecycle while abstracting away the technological complexity.

For example, there might be some sort of menu of centrally provided options such as a relational database, object storage, a NoSQL database, etc. The domain can choose the storage type that’s most effective for its data without having to deal with all the tech details behind it.
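The "menu" idea might look like this from a domain team's point of view. This is a sketch under stated assumptions: `STORAGE_MENU`, `StorageHandle`, `provision_storage`, and the location format are hypothetical, and a real platform would create actual resources where this code only returns a handle.

```python
from dataclasses import dataclass

# Storage options offered centrally by the (hypothetical) platform team.
STORAGE_MENU = {"relational_database", "object_storage", "nosql_database"}


@dataclass(frozen=True)
class StorageHandle:
    domain: str
    kind: str
    location: str


def provision_storage(domain: str, kind: str) -> StorageHandle:
    """Let a domain pick a storage type without handling the setup details."""
    if kind not in STORAGE_MENU:
        raise ValueError(f"{kind!r} is not offered; choose from {sorted(STORAGE_MENU)}")
    # A real platform would provision infrastructure here; the sketch only
    # builds an illustrative location string.
    return StorageHandle(domain=domain, kind=kind, location=f"{kind}://{domain}")


hr_storage = provision_storage("hr", "relational_database")
```

The domain team makes one choice from the menu, and everything behind `provision_storage` remains the platform team's concern.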

This idea can be realized through popular cloud services supporting data mesh architecture, including AWS, Microsoft Azure, Google Cloud, and IBM Cloud (the latter enables implementation of data mesh via data fabric solutions).

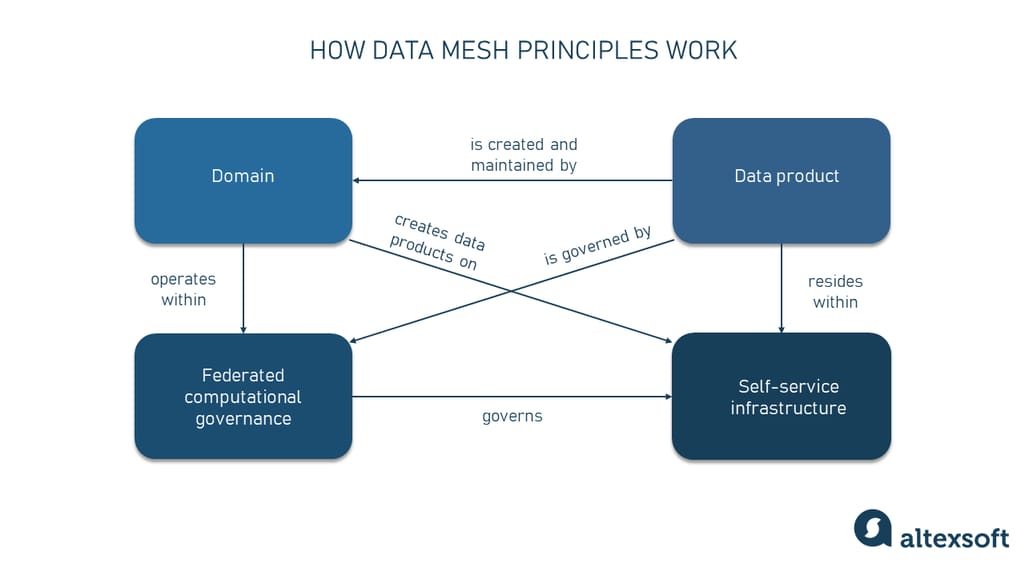

Federated computational governance

For the first three principles to work together seamlessly, compliance with certain standards is required. These standards are discussed and enforced by a cross-domain committee. They include requirements for the structure and interfaces of the data products, encryption, data versioning, documentation, and data sensitivity. Policies secure access to the data.

The governance execution model relies heavily on codifying and automating the policies at a fine-grained level for every data product via the platform services.
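Codified policies can be as simple as functions that run against a data product's descriptor. The sketch below assumes a descriptor is a plain dictionary; the policy names, the descriptor fields, and the `check` function are hypothetical and only illustrate the idea of automated, per-product checks.

```python
from typing import Callable

Descriptor = dict
Policy = Callable[[Descriptor], bool]

# Global rules agreed by the cross-domain committee, expressed as code.
POLICIES: dict[str, Policy] = {
    "has_owner": lambda d: bool(d.get("owner")),
    "has_documentation": lambda d: bool(d.get("documentation")),
    "has_version": lambda d: bool(d.get("version")),
    # Highly sensitive data must be encrypted; other data passes this rule.
    "sensitive_data_encrypted": lambda d: d.get("sensitivity") != "high"
    or d.get("encrypted") is True,
}


def check(descriptor: Descriptor) -> list[str]:
    """Return the names of all policies the data product violates."""
    return [name for name, policy in POLICIES.items() if not policy(descriptor)]


compliant = {
    "owner": "hr",
    "documentation": "Headcount per department, refreshed daily.",
    "version": "1.2.0",
    "sensitivity": "high",
    "encrypted": True,
}
non_compliant = {"owner": "hr", "sensitivity": "high", "encrypted": False}

violations = check(non_compliant)
```

Because the rules are code, the platform can run them automatically for every data product instead of relying on manual reviews.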

How data mesh principles work

The four principles enable different domain teams to take ownership of their data and share it with one another. The value of existing data can be increased by providing data products that are of good quality and can be correlated.

Zhamak Dehghani highlights, “While I expect the practices, technologies, and implementations of these principles to vary and mature over time, these principles remain unchanged.”

Data mesh implementation: How to approach it and who needs it

The concept of a data mesh infrastructure is still being defined, and that presents challenges to data teams when laying out a step-by-step plan for implementation.

One possible scenario for approaching a data mesh is as follows.

- Define a domain team that will be responsible for working on the creation of an ETL/ELT job and a central data platform.

- Make sure each domain dataset is stored separately and has an owner.

- Enable data to be available on demand as a data product.

- Nominate owners of the data product responsible for writing the logic to combine all the datasets.

- Configure what connectors will be used to reach databases.

- And finally build a central user interface.

In 2019, Zalando — one of the biggest retail companies in Europe — opted for data mesh principles and switched to a strategy of distributing data throughout the company and transferring ownership to the business group that created it. Data scientists and engineers have been tasked with collaborating with business leaders to structure data so it can be easily shared. The data mesh allowed both technical and operational teams to work hand in hand to achieve shared success.

But we shouldn’t forget that for every success story, there are three that failed. At the moment, there are few companies out there claiming they have started making data mesh shifts. The question is, “Should you be one of them?” Unfortunately, we can’t answer it for you. Data mesh is hyped at the moment, but it shouldn’t be taken as a silver bullet that will magically solve your current challenges with centralized, monolithic architectures.

You may consider adopting the data mesh concept if

- you are a large or rapidly maturing organization with different teams highly reliant on data and

- you need self-service analytics and/or your data infrastructure requirements are complex and demanding.

A data mesh is probably not the right fit if

- your data ecosystem is small and unidimensional and

- your company operates on data that doesn’t change frequently or doesn't experience bottlenecks with implementing new data products.