Color Detection for Android Mobile Apps - A computer vision application.

If you'd like to explore the advantages and disadvantages of computer vision, read this article here.

The following information and code example were obtained from http://opencv.org/. If you are not familiar with OpenCV and want to learn more, please visit the link. All steps were performed on macOS 10.14.4.

Color detection Tutorial using OpenCV in Android

This is one of many computer vision applications. Before you start, you must already have installed and configured the Android Developer Tools and the Android NDK. Here is the list of steps; each one is covered in more detail further down the tutorial.

- Download OpenCV Android Library

- Setup Android Project

- Import OpenCV Module

- Add the OpenCV Dependency

- Add Native Libraries

- Add Required Permission

- Update Gradle properties

- Setup our Android App

- Test the App

Let's begin with the tutorial.

Step 1: Download OpenCV Android Library

Go to the OpenCV Android Sourceforge page and download the latest OpenCV Android library. At the time of writing this post, the latest available version was 4.1.0. When the download completes, extract the contents of the zip file into a folder.

Step 2: Setup Android Project

Create a new Android project in Android Studio if you have not already created one for your computer vision project.

Note: Skip this step if you already have an Android project you want to use the OpenCV library in.

Step 3: Import OpenCV Module

After successfully creating an Android project, it is time to import the OpenCV module into your Android project. Click on File -> New -> Import Module

It should bring up a popup like the image below, where you can select the path to the module you want to import.

Browse to the folder where you extracted the OpenCV Android library zip file contents. Select the java folder inside of the SDK folder.

After selecting the correct path and clicking OK, you should get a screen like the image below.

Click on Next to go to the next screen. On the next screen (the image below) you should leave the default options checked and click on Finish to complete the module import.

Step 4: Add the OpenCV Dependency

To work with the OpenCV Android library, you have to add it to your app module as a dependency. To do this in Android Studio, open the app module's build.gradle file, paste “implementation project(path: ':openCV')” into its dependencies block, then click on Sync Now.

Step 5: Add Native Libraries

In your file explorer, navigate to the folder where you extracted the contents of the OpenCV Android library zip file. Open the SDK folder and then the native folder (use the image below as a guide).

Copy the libs folder from the native folder into your project's app module main folder (usually ProjectName/app/src/main).

Rename the libs folder you just copied into your project to jniLibs.

Step 6: Add Required Permission

To use OpenCV for camera-based detection, your app must declare the camera permission in its AndroidManifest.xml file. Tip: Don’t forget to request the camera permission at runtime on Android 6 and above.

Step 7: Update Gradle properties

Step 8: Setup our Android App

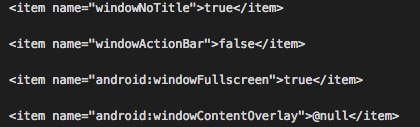

Add the following lines to styles.xml to run the app in fullscreen mode.

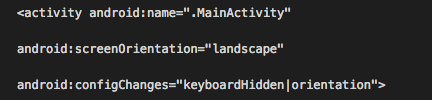

Update the manifest to launch the app in landscape mode.

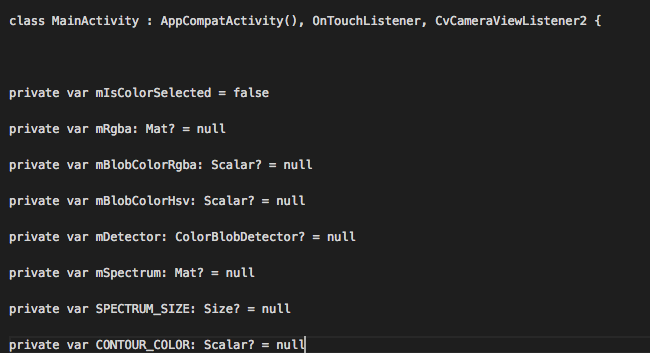

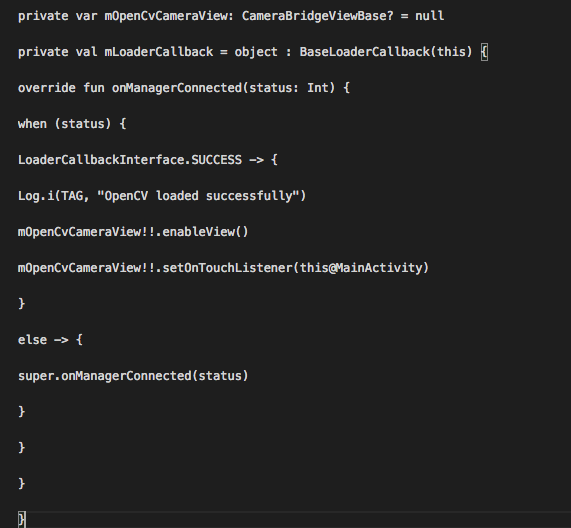

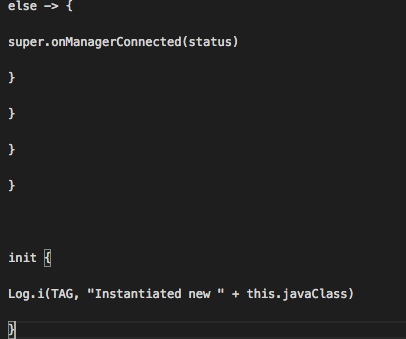

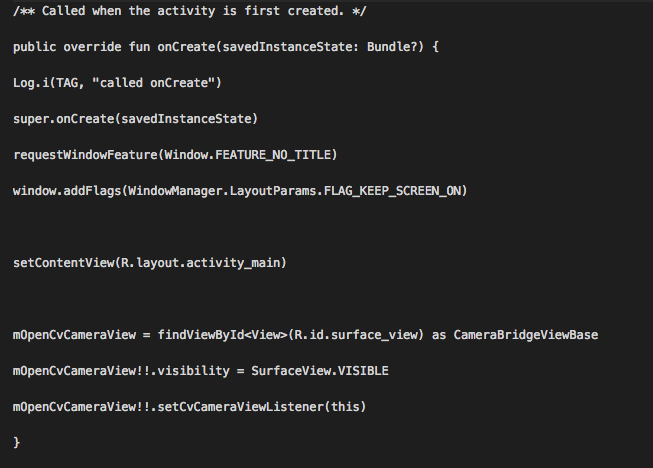

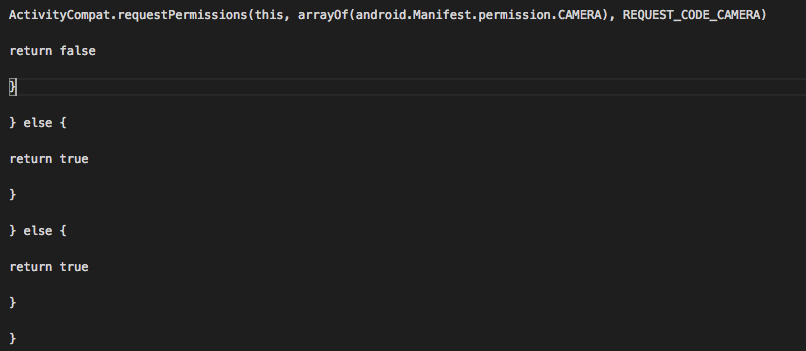

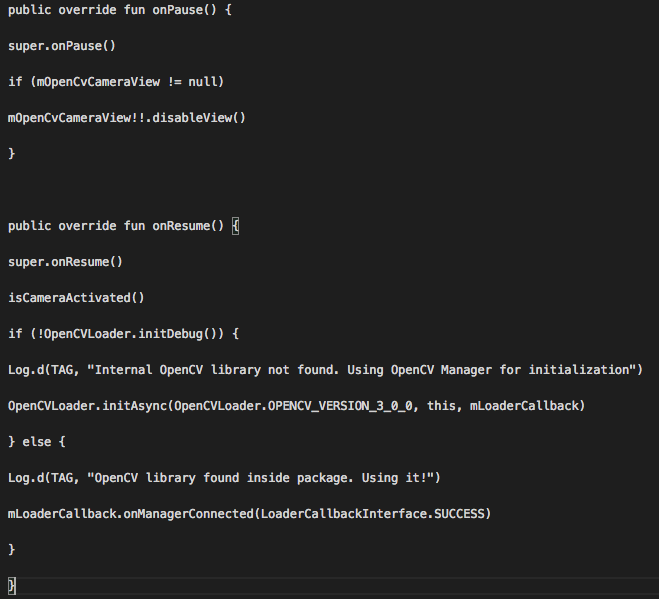

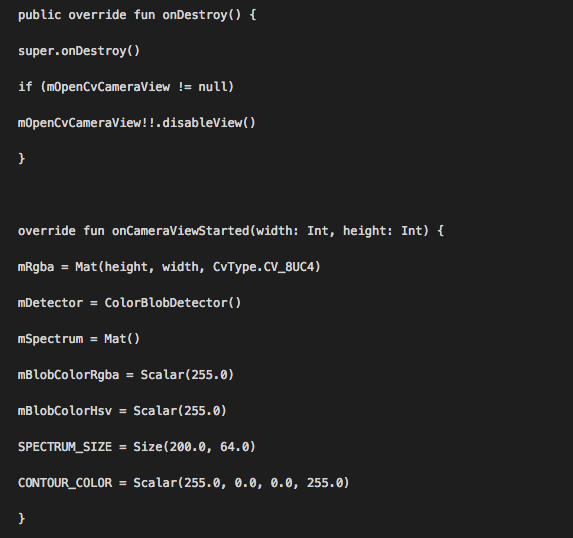

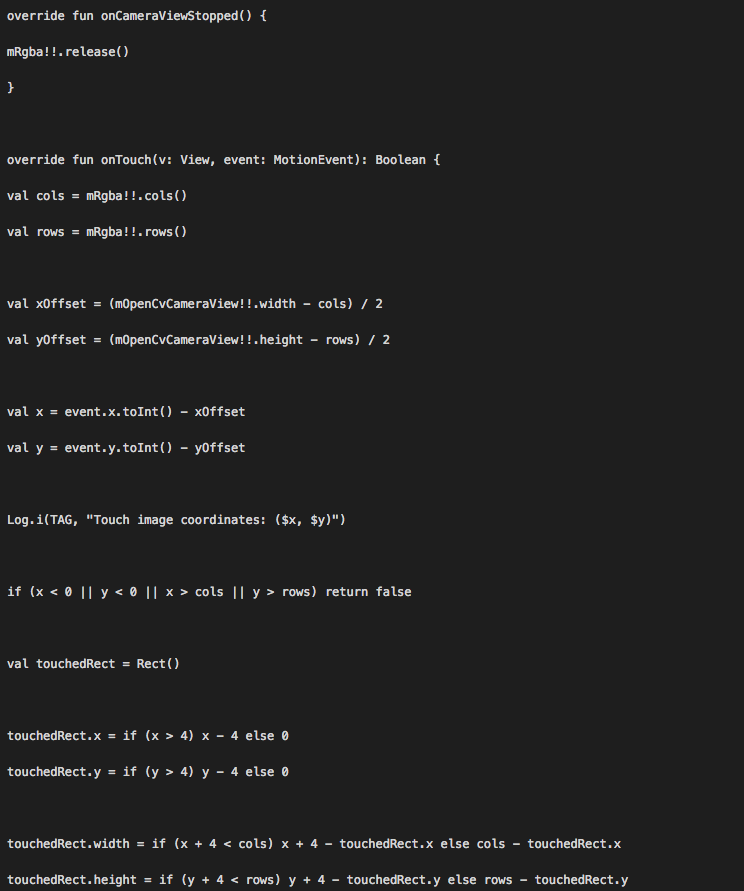

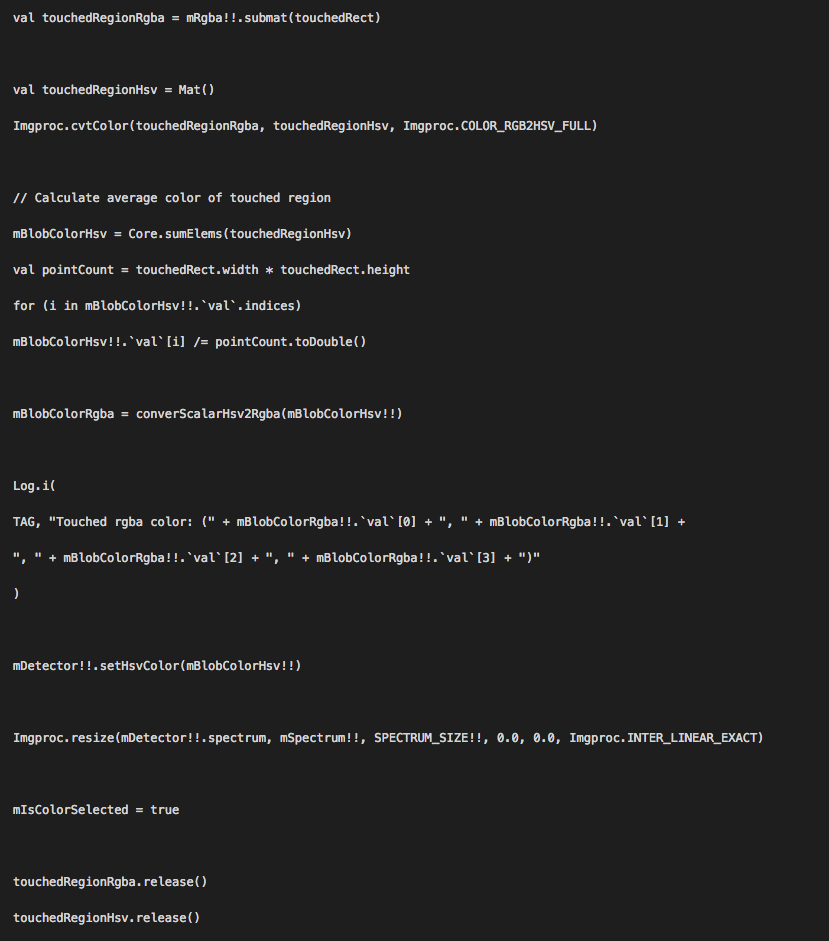

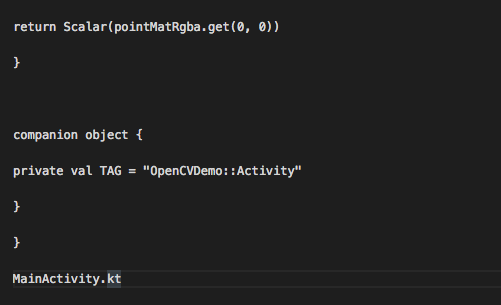

Update your app's main activity with the code below.

//No explanation needed, we can request the permissions.

Then create a new class called ColorBlobDetector and copy the code below into it.
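
As a rough illustration of the color-matching idea behind a class like ColorBlobDetector, the sketch below computes lower and upper HSV bounds around a selected color and checks whether a pixel falls inside them. It is plain Java with no OpenCV dependency. The class name `HsvRangeSketch`, its method names, and the per-channel tolerance values are assumptions made for illustration; they are not taken from this tutorial's code.

```java
import java.util.Arrays;

final class HsvRangeSketch {
    // Hypothetical tolerance per channel (hue, saturation, value); an assumption for this sketch.
    static final double[] RADIUS = {25, 50, 50};

    /** Returns {lower, upper} bounds around an HSV color, each channel clamped to [0, 255]. */
    static double[][] bounds(double[] hsv) {
        double[] lower = new double[3];
        double[] upper = new double[3];
        for (int i = 0; i < 3; i++) {
            lower[i] = Math.max(0, hsv[i] - RADIUS[i]);
            upper[i] = Math.min(255, hsv[i] + RADIUS[i]);
        }
        return new double[][] {lower, upper};
    }

    /** True when every channel of the pixel lies inside the bounds (inclusive). */
    static boolean inRange(double[] pixel, double[][] bounds) {
        for (int i = 0; i < 3; i++) {
            if (pixel[i] < bounds[0][i] || pixel[i] > bounds[1][i]) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        // Example: bounds around a color the user might have selected.
        double[][] b = bounds(new double[] {10, 200, 230});
        System.out.println(Arrays.toString(b[0]) + " .. " + Arrays.toString(b[1]));
        System.out.println(inRange(new double[] {20, 180, 250}, b));
    }
}
```

In an OpenCV-based detector, the same comparison is usually applied to a whole frame at once with `Core.inRange` after converting the frame to HSV, rather than pixel by pixel as in this sketch.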

// Filter contours by area and resize to fit the original image size
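
The filtering step named in the comment above can be sketched in plain Java. This is a minimal illustration, assuming contours are simple polygons stored as arrays of {x, y} points and that detection ran on a downscaled copy of the frame. The class name `ContourFilterSketch`, the 0.1 area ratio, and the scale factor of 4 are assumptions chosen for the example. In an OpenCV-based detector, `Imgproc.contourArea` would normally supply the areas instead of the shoelace formula used here.

```java
import java.util.ArrayList;
import java.util.List;

final class ContourFilterSketch {
    /** Polygon area via the shoelace formula; each point is {x, y}. */
    static double area(double[][] contour) {
        double sum = 0;
        for (int i = 0; i < contour.length; i++) {
            double[] p = contour[i];
            double[] q = contour[(i + 1) % contour.length];
            sum += p[0] * q[1] - q[0] * p[1];
        }
        return Math.abs(sum) / 2.0;
    }

    /**
     * Keeps contours whose area exceeds minAreaRatio times the largest area,
     * then multiplies their coordinates by scale to map them back to the
     * full-size image.
     */
    static List<double[][]> filterAndScale(List<double[][]> contours,
                                           double minAreaRatio, double scale) {
        double maxArea = 0;
        for (double[][] c : contours) {
            maxArea = Math.max(maxArea, area(c));
        }
        List<double[][]> kept = new ArrayList<>();
        for (double[][] c : contours) {
            if (area(c) > minAreaRatio * maxArea) {
                double[][] scaled = new double[c.length][];
                for (int i = 0; i < c.length; i++) {
                    scaled[i] = new double[] {c[i][0] * scale, c[i][1] * scale};
                }
                kept.add(scaled);
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        List<double[][]> contours = new ArrayList<>();
        contours.add(new double[][] {{0, 0}, {10, 0}, {10, 10}, {0, 10}}); // large blob
        contours.add(new double[][] {{0, 0}, {1, 0}, {1, 1}, {0, 1}});     // small noise blob
        List<double[][]> kept = filterAndScale(contours, 0.1, 4.0);
        System.out.println("Contours kept: " + kept.size());
    }
}
```

Filtering relative to the largest contour, rather than against a fixed pixel count, keeps the threshold meaningful regardless of how close the object is to the camera.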

Finally, update your app's main activity layout file with the layout code below.

Step 9: Test the App

If you'd like to explore further, this project is available on the iTexico GitLab.
