A recent Kubernetes Adoption Report found that 68% of surveyed IT professionals increased their use of containers during the pandemic. Their goals included accelerating deployment cycles, increasing automation, reducing IT costs, and developing and testing artificial intelligence (AI) applications.

But what role does container technology play in this? This guide explains what containers are, how container orchestration works, and more.

What Is Container Orchestration?

Container orchestration is the automated process of coordinating and organizing all aspects of individual containers, their functions, and their dynamic environments. Deployment, scaling, networking, and maintenance are all aspects of orchestrating containers.

But let’s not get ahead of ourselves. Let’s start with what a container is and what it does.

What is a container in cloud computing?

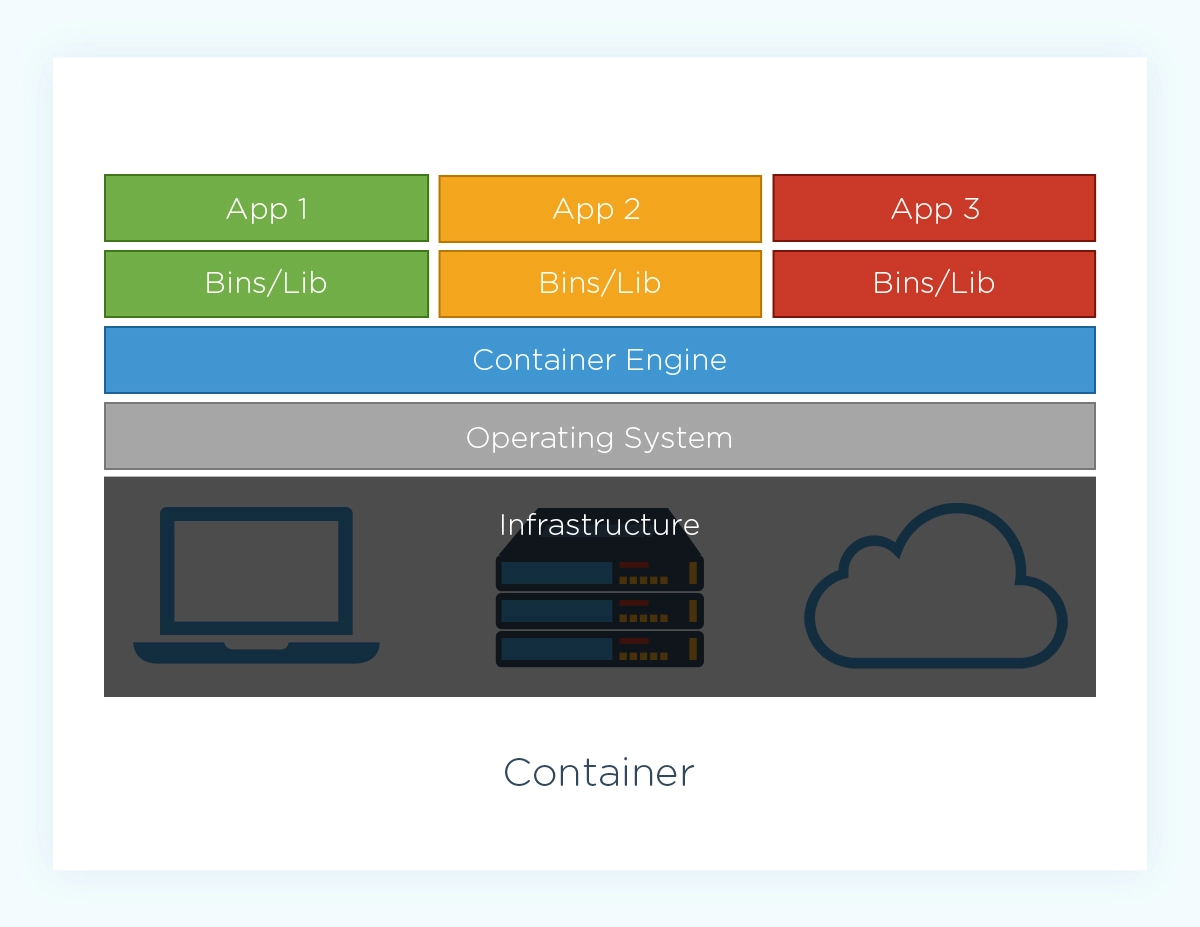

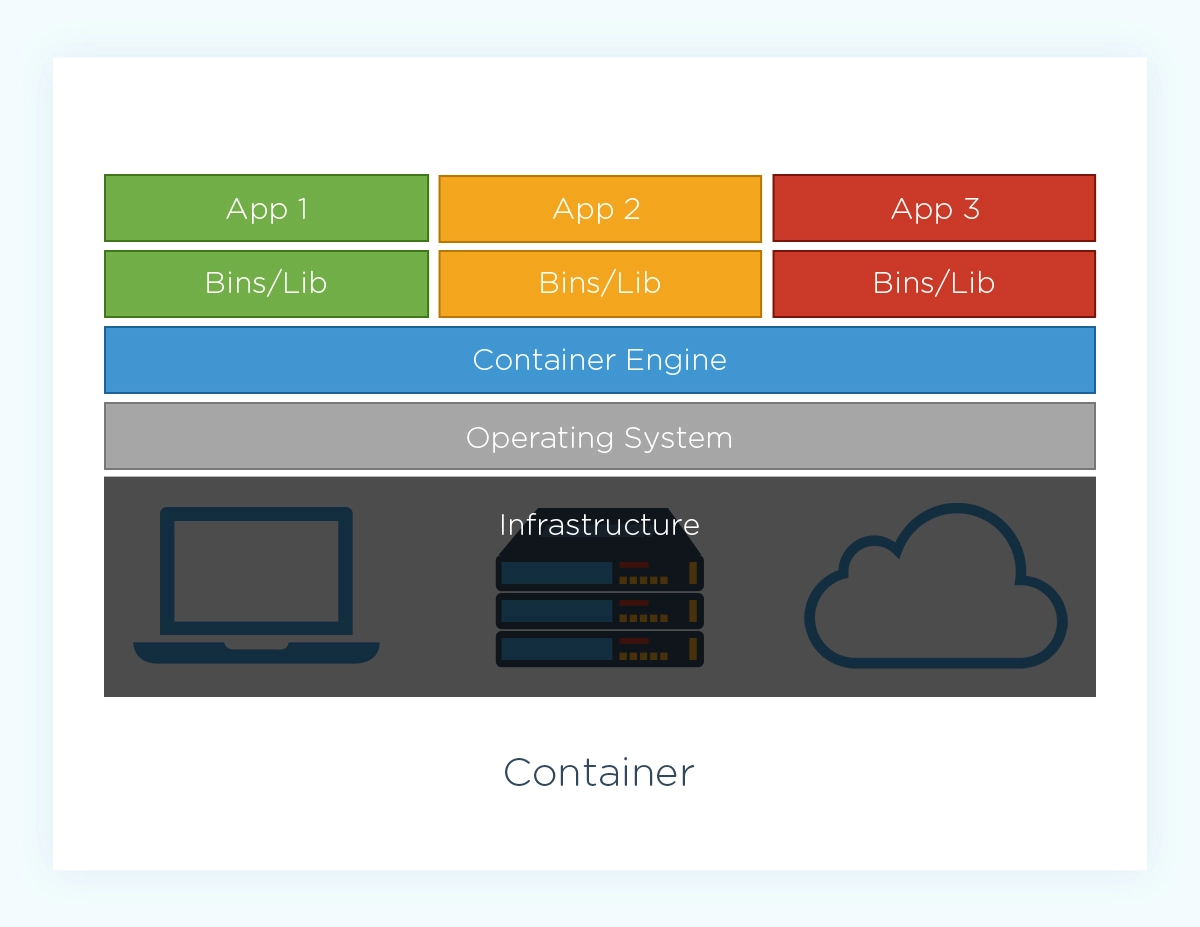

A container is an executable unit of software that helps package and run code, libraries, dependencies, and other parts of an application so they can function reliably in a variety of computing environments.

Containerized apps can run as smoothly on a local desktop as they do on a cloud platform or a laptop.

Containerization is the process of developing, packaging, and deploying applications in containers.

Engineers can package separate parts of an app in their own containers, or they can containerize an entire app.

A single application can have hundreds of containers. The number of containers you use could be thousands if you use microservices-based applications.

Managing all of these containers manually is challenging, so DevOps engineers use container orchestration to automate the work.

How do containers work?

Containers rely on operating-system-level virtualization to achieve this level of portability, performance, and consistency across varying environments.

In a nutshell, virtualization involves configuring a single computer’s hardware to create multiple virtual computers. Each virtual machine (VM) can run its own operating system and perform different computing tasks from the next VM.

Containers sit on top of the host server’s hardware and operating system, allowing multiple containers to share the server’s OS. They share the OS kernel and, often, read-only libraries, binaries, and other software dependencies.

This design gives containers several benefits.

- With containers, you can run several tasks on one operating system, which reduces complexity across every stage of software engineering.

- You can move a containerized app to a new environment, and it should work just as it did before.

- Containers are usually lightweight and deploy faster than virtual machines (VMs), each of which runs its own full OS.

- Together, these make containers more efficient in resource use and help reduce hardware costs.

These advantages make containers ideal for certain use cases.

Container use cases

Organizations use containers for a variety of reasons. The following are several uses of containers in cloud computing.

- Ensure that applications can be ported from one environment to another with minimal changes to their code.

- Enable microservices-based applications to run in a cloud environment.

- Refactor older applications so they work smoothly with cloud architecture.

- Migrate legacy or on-premises applications to a cloud environment using the lift-and-shift cloud migration strategy, which helps modernize applications without changing much of their code.

- Use the same container images across environments to help engineering teams implement continuous integration and continuous delivery (CI/CD) in a DevOps culture. Development, testing, and deployment move faster because teams can ship code improvements incrementally.

- Simplify running repetitive tasks in the background.

- Reduce cloud computing costs by needing less hardware to run applications than virtual machines would require.

- Unlike virtual machines, containers are easier to create, deploy, and destroy after a task is completed, which reduces computing costs.

- Containers are also excellent for organizations that use a hybrid cloud or a multi-cloud strategy to run computing needs across cloud platforms and their local data center.

Containers vs. microservices

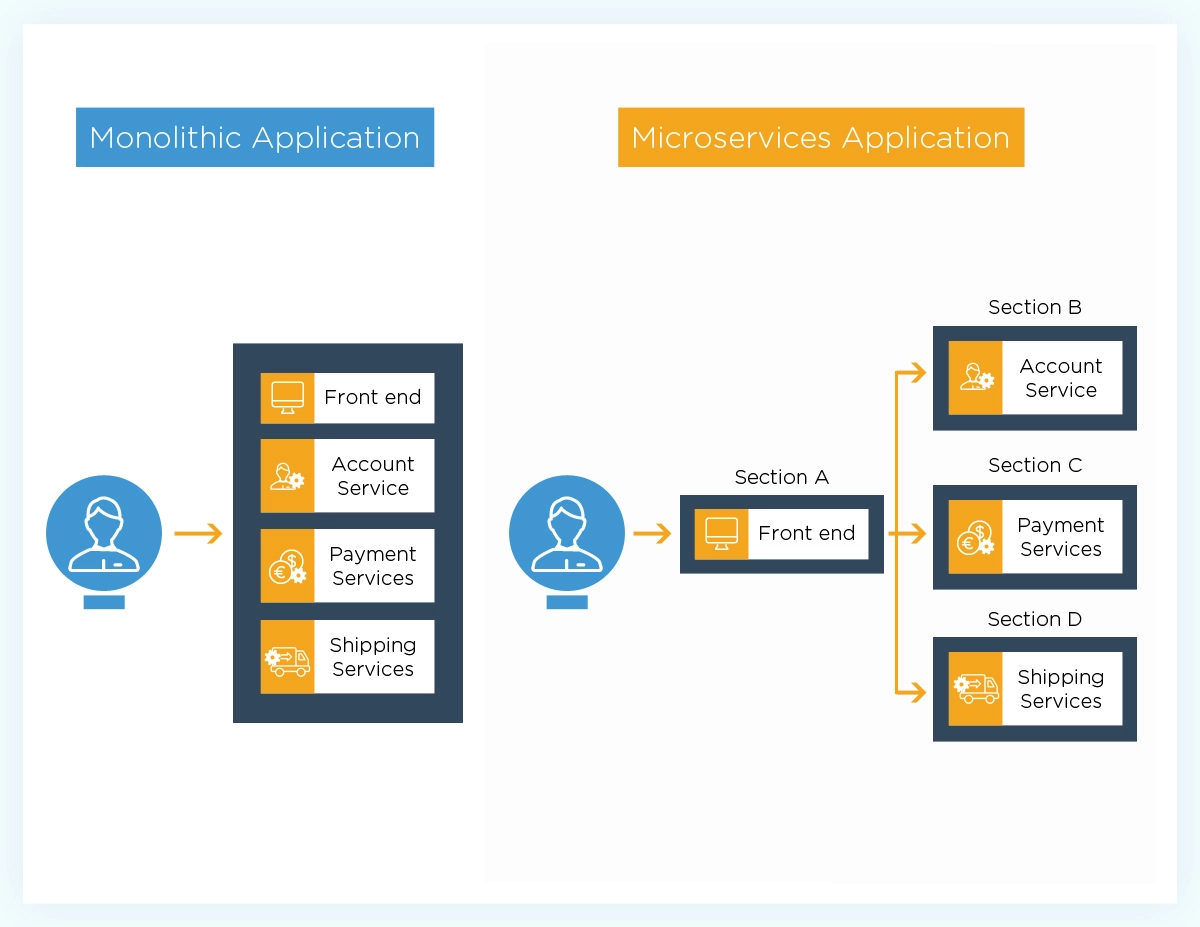

A container is an executable unit of software packaged to contain everything it needs to run. Microservices, by contrast, is an architectural approach that splits a large (monolithic) application into multiple smaller services, each performing a specific function.

A note to remember here: Containers and microservices are not mutually exclusive and are often used together. Containers provide an efficient way to deploy and manage microservices by encapsulating each service in a separate container environment.

Here’s a quick summary of the differences between containers and microservices.

| | Containers | Microservices |
| --- | --- | --- |
| Description | Containers are a virtualization method that packages an app’s code, libraries, and dependencies into a single object. They isolate the application from its environment to ensure consistency across multiple development and deployment settings. | Microservices are a design approach that structures an app as a collection of loosely coupled services, each performing a specific business function. |
| Scope | Focuses on application deployment and management. | Focuses on the architectural design of an application. |
| Granularity | Containerization involves packaging an application or parts of an application. | Microservices architecture splits an application into smaller, independent services. |
| Interdependency | Containers can run multiple components or microservices but are typically independent in their operation. | Microservices are highly independent and communicate with others through well-defined APIs. |
| Scalability | Containers can be quickly scaled up or down, but scalability applies to the entire container. | Microservices can be individually scaled, allowing for more granular resource management. |
| Management and orchestration | Managed through tools like Docker and Kubernetes, which handle deployment, scaling, and networking tasks. | Managed through service mesh and API gateways, with a focus on service discovery, load balancing, and failure recovery. |
| Complexity | Relatively less complex in inter-service interactions. | The distributed nature and the need to manage multiple service interactions make them more complex. |
| Use case | Ideal for consistent deployment environments and application dependency isolation. | Ideal for complex applications that require agility, scalability, and decentralized development. |

The Netflix architecture is an excellent example of how to use microservices and containers to achieve your goals.

Containers vs. virtual machines

The main difference between containers and virtual machines is that containers are lightweight software packages containing application code and dependencies. In contrast, virtual machines are digital replicas of physical machines, each running its own operating system.

Containers share the host server’s operating system, are more agile and portable, and require less overhead than virtual machines (VMs). But VMs have their own advantages over containers as well.

| | Containers | Virtual machines (VMs) |
| --- | --- | --- |
| Description and isolation | Provide lightweight isolation from the host and other containers. Not as strong as VMs, but can be enhanced with Hyper-V isolation. | Provide complete isolation from the host operating system and other VMs, offering a stronger security perimeter. |
| Deployment | Typically deployed using tools like Docker and Kubernetes. | Deployed individually via interfaces like Windows Admin Center or Hyper-V Manager, or in multiples using tools such as PowerShell. |
| Operating system (OS) | Run on top of the host operating system’s kernel. Contain only apps and some lightweight OS APIs and services. | Run a complete operating system, including its own kernel, which requires more system resources (CPU, memory, storage, etc.). |
| Operating system updates | Involve updating the container image’s build file and redeploying using an orchestrator. | Require you to download and install OS updates on each VM. New OS versions often necessitate new VMs. |
| Load balancing | Container orchestration tools automatically manage container load, starting or stopping containers as needed. | Requires moving running VMs to other servers in a cluster. |
| Scalability | Highly scalable and resource-efficient. Suitable for microservices architecture. | Can be more complex and costly to scale due to larger size and hardware resource requirements. |
| Size | More lightweight, typically measured in megabytes. | A complete operating system often requires gigabytes of storage. |
| Networking | Use network namespaces. | Use virtual network adapters. |
| Fault tolerance | Containers on a failed node are rapidly recreated by the orchestration tool on another node. | VMs can fail over to another server in a cluster. The OS restarts on the new server. |
| Guest compatibility | Typically run on the same OS version as the host. | Can run a variety of OSs inside the VM. |
| Persistent storage | Use storage volumes and file systems mounted from the host OS. | Use virtual hard disks (VHDs) for storage; can use shared storage via NFS or SMB file shares. |
| Resource consumption | Use fewer system resources. | Use more system resources. |
| Flexibility | More flexible and compatible, enabling easier migration between environments. | Less flexible; can have migration issues. |
| Speed | Fast in deployment and operation. | Slower to deploy and operate because they must load and run complete OS components. |
| Use case | Ideal for DevOps practices that demand efficiency and high scalability, such as microservices and cloud migrations. | Suitable for workflows requiring complete isolation and security, such as sandboxing and running legacy applications. |

Containers and virtual machines are both forms of virtualization, but they take different approaches.

Virtualization lets you run several operating systems on the same hardware. Each VM can run its own operating system (a guest OS). That way, each VM can serve different applications, libraries, and binaries from the ones next to it.

VMs let engineers run numerous applications, each on the OS that suits it, on a single physical server. This improves hardware utilization, reduces hardware costs, and shrinks the operational footprint, since engineers no longer need to dedicate an entire server to a single application.

This frees up computing resources for other uses.

But VMs are not perfect. Because each VM runs its own OS image, binaries, and libraries, it can quickly grow to several gigabytes in size.

VMs typically take minutes instead of seconds to start. That becomes a performance bottleneck because minutes add up to hours across complex applications and disaster recovery efforts.

VMs also have trouble running software smoothly when moved from one computing environment to another. This can be limiting in an age when users switch between devices to access services anywhere, anytime.

Enter containers.

As discussed earlier, containers are lightweight, share a host server’s resources, and, more uniquely, are designed to work in any environment, from on-premises data centers to the cloud to local machines.

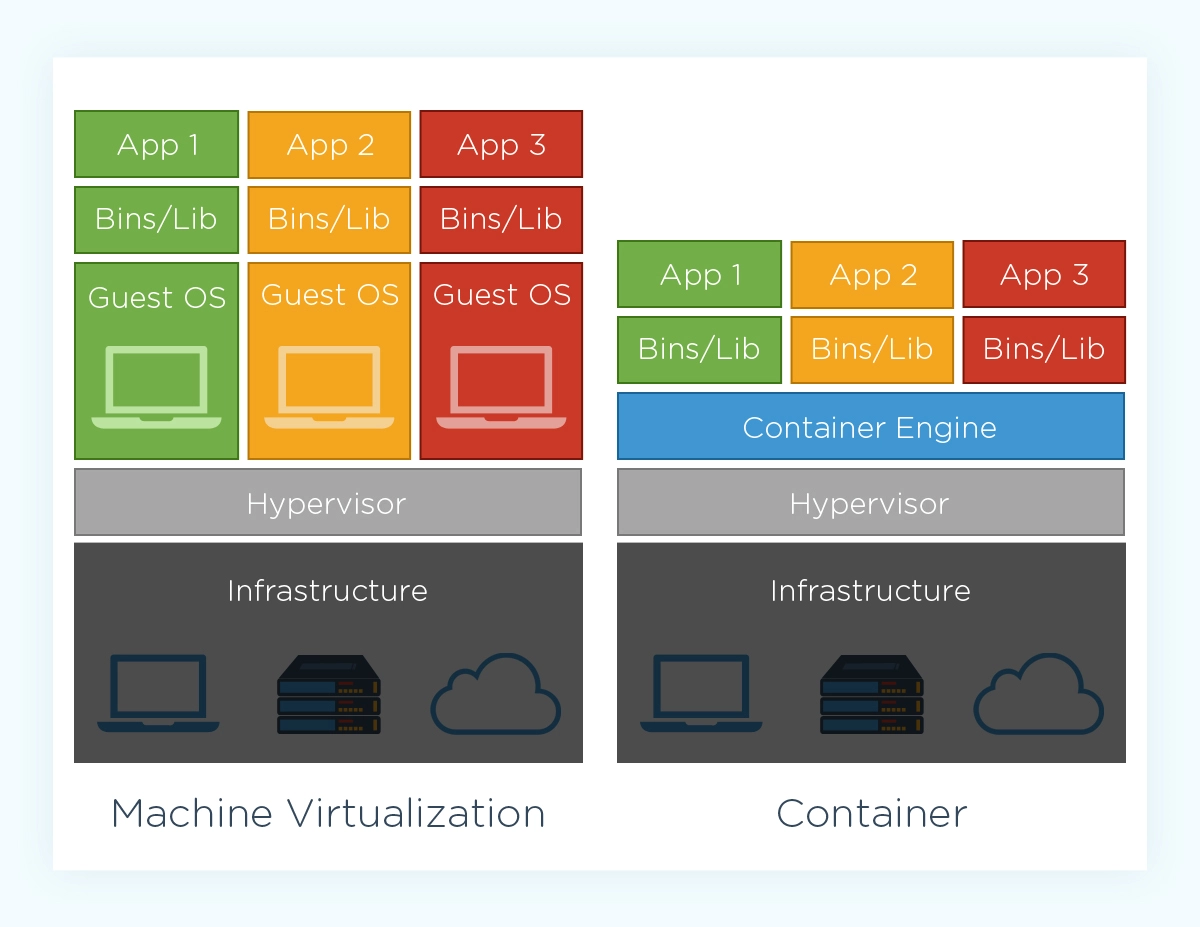

Here’s an image showing the design difference between containers and virtual machines:

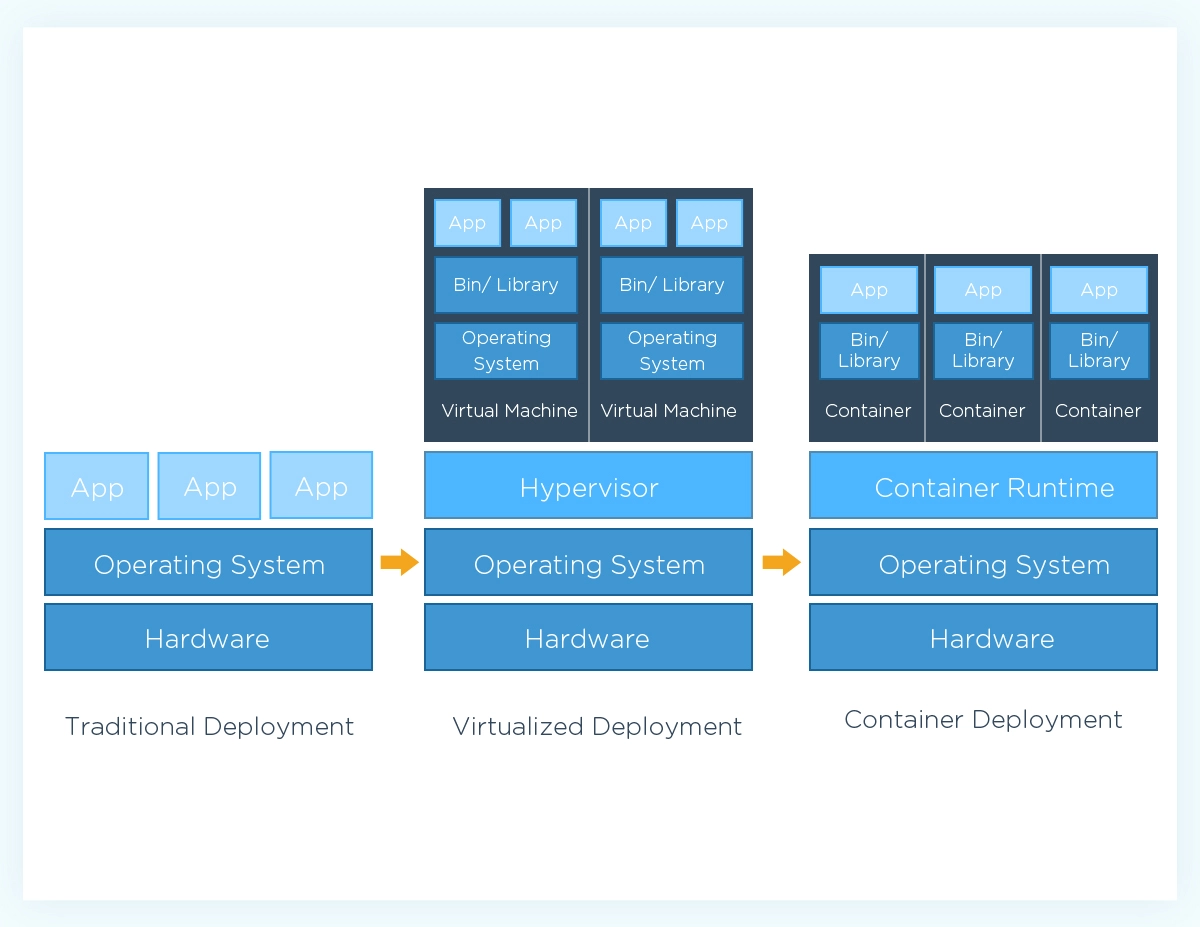

Now here is the full extent of the differences between traditional deployment vs. virtualization vs. containerization in one image.

With that in mind, here’s how managing containerized applications works.

How Does Container Orchestration Work?

Container orchestration enables engineers to manage when and how containers start and stop, schedule and coordinate component activities, monitor their health, distribute updates, and perform failovers and recovery procedures.

DevOps engineers use container orchestration platforms and tools to automate that process.

Modern orchestration tools use a declarative approach to simplify container deployment and management, rather than an imperative one.

The declarative approach lets engineers define the desired outcome without feeding the tool with step-by-step details of how to do it.

Think about how you order an Uber.

You do not need to instruct the driver on how to drive their car, what shortcuts to take, or how to reach a particular destination. You just tell them you are in a hurry and, well, that you need to arrive at your destination in one piece. They know what to do next.

By contrast, an imperative approach requires engineers to specify how containers will be orchestrated to achieve a specific goal. The complexity of this method reduces the advantages of containers over virtual machines.

Using our Uber analogy, an imperative approach would be like taking a ride to a destination the driver doesn’t know. You must know precisely how to get there and clearly explain every turn and shortcut to the driver, or else you may get lost in an unfamiliar neighborhood.
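To make the contrast concrete, here is a minimal Python sketch of the declarative idea, assuming a toy model in which the desired state is simply a replica count per image. The engineer supplies only `desired`; the hypothetical `reconcile` function works out the start and stop steps that an imperative script would otherwise have to spell out. Real orchestrators such as Kubernetes run a much richer version of this comparison continuously; the names here are illustrative, not any tool’s API.

```python
from collections import Counter


def reconcile(desired: dict[str, int], running: list[str]) -> tuple[list[str], list[str]]:
    """Return (to_start, to_stop) so that `running` ends up matching `desired`.

    desired: image name -> replica count the engineer declared (hypothetical model)
    running: one image name per container that is currently running
    """
    actual = Counter(running)  # count running containers per image
    to_start, to_stop = [], []
    # Visit every image that is either declared or running, in a stable order.
    for image in sorted(set(desired) | set(actual)):
        gap = desired.get(image, 0) - actual[image]
        if gap > 0:
            to_start.extend([image] * gap)  # too few replicas: start more
        elif gap < 0:
            to_stop.extend([image] * -gap)  # too many, or not declared: stop extras
    return to_start, to_stop


# Declarative input: "I want three web containers and one worker."
desired_state = {"web": 3, "worker": 1}
current_state = ["web", "cache"]
print(reconcile(desired_state, current_state))
# (['web', 'web', 'worker'], ['cache'])
```

Once those actions are applied, calling `reconcile` again returns two empty lists, which is what allows an orchestrator to repeat the check safely as often as it likes.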

What Is Container Orchestration Used For?

Orchestrating containers has various uses, including:

- Configuring and scheduling containers

- Load balancing among containers

- Allocating resources among containers

- Monitoring the health of containers and hosts
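Two of these jobs, scheduling and load balancing, can be sketched in a few lines of Python. This is a toy model under stated assumptions: each host advertises spare CPU and memory, placement is simple first-fit, and traffic is spread round-robin. Kubernetes’ real scheduler filters and scores nodes on many more factors; `Host`, `schedule`, and `round_robin` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass, field
from itertools import cycle
from typing import Iterator, Optional


@dataclass
class Host:
    name: str
    cpu_free: float  # CPU cores still unallocated
    mem_free: int    # memory still unallocated, in MiB
    containers: list[str] = field(default_factory=list)


def schedule(container: str, cpu: float, mem: int, hosts: list[Host]) -> Optional[str]:
    """First-fit placement: choose the first host with enough spare CPU and memory."""
    for host in hosts:
        if host.cpu_free >= cpu and host.mem_free >= mem:
            # Reserve the requested resources so later placements see less capacity.
            host.cpu_free -= cpu
            host.mem_free -= mem
            host.containers.append(container)
            return host.name
    return None  # nothing fits; a real orchestrator would keep the container pending


def round_robin(replicas: list[str]) -> Iterator[str]:
    """Endless iterator that hands each new request to the next replica in turn."""
    return cycle(replicas)


hosts = [Host("node-a", cpu_free=2.0, mem_free=2048), Host("node-b", cpu_free=4.0, mem_free=8192)]
print(schedule("web-1", 1.5, 1024, hosts))  # node-a
print(schedule("web-2", 1.0, 1024, hosts))  # node-b (node-a has only 0.5 CPU left)
print(schedule("db", 8.0, 1024, hosts))     # None

balancer = round_robin(["web-1", "web-2"])
print([next(balancer) for _ in range(4)])   # ['web-1', 'web-2', 'web-1', 'web-2']
```

First-fit keeps the sketch short; production schedulers usually prefer spreading or packing strategies chosen by policy.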

The Benefits Of Container Orchestration

Orchestration simplifies container management. Orchestration tools also help determine which hosts best match specific pods, which further eases your engineers’ jobs while reducing human error and time spent. Orchestration also promotes optimal resource usage.

If a failure occurs anywhere in that complexity, popular orchestration tools restart or replace the affected containers to increase your system’s resilience.
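The restart-or-replace behavior can be illustrated with a small Python sketch. It assumes a simplified world in which a health check has already flagged each container as healthy or not, and the orchestrator already knows which nodes have failed. `Container`, `heal`, and `recoveries` are hypothetical names, not part of any orchestrator’s API.

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass
class Container:
    name: str
    node: str
    healthy: bool = True  # result of the most recent (assumed) health check
    recoveries: int = 0   # how many times this container was restarted or replaced


def heal(containers: list[Container], failed_nodes: set[str], healthy_nodes: list[str]) -> None:
    """Replace containers on failed nodes and restart unhealthy ones in place."""
    if not healthy_nodes:
        raise RuntimeError("no healthy nodes left to recover onto")
    targets = cycle(healthy_nodes)  # spread displaced containers across surviving nodes
    for c in containers:
        if c.node in failed_nodes:
            c.node = next(targets)  # replace: recreate the container on another node
            c.healthy = True
            c.recoveries += 1
        elif not c.healthy:
            c.healthy = True        # restart: same node, fresh process
            c.recoveries += 1


fleet = [
    Container("web-1", "node-a"),
    Container("web-2", "node-b", healthy=False),
    Container("db", "node-a"),
]
heal(fleet, failed_nodes={"node-a"}, healthy_nodes=["node-b", "node-c"])
for c in fleet:
    print(c.name, c.node, c.healthy, c.recoveries)
# web-1 node-b True 1
# web-2 node-b True 1
# db node-c True 1
```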

Now, let’s talk about container orchestration tools or platforms.

4 Top Container Orchestration Tools

Several container orchestrators are available on the market today.

The following are the top four container orchestrators.

1. Kubernetes

Kubernetes is an open-source container orchestration platform that supports declarative configuration and automation. It is the most widely used container orchestrator today. Google originally developed it before handing it over to the Cloud Native Computing Foundation.

In Kubernetes, you describe the desired state of your containers in YAML or JSON manifest files. Kubernetes also introduces the notion of pods, nodes, and clusters.
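As an illustration, the Python sketch below builds a minimal Kubernetes Deployment object and prints it as JSON. The field names (`apiVersion: apps/v1`, `kind`, `replicas`, `selector`, `template`) follow the Kubernetes Deployment API; the helper `deployment_manifest`, the name `web`, the image `nginx:1.25`, and the port are assumptions chosen for the example. The `template` section is the pod each replica is created from (pods are explained below).

```python
import json


def deployment_manifest(name: str, image: str, replicas: int, port: int) -> dict:
    """Build a minimal Kubernetes Deployment as a plain dict (hypothetical helper)."""
    labels = {"app": name}  # the selector must match the pod template's labels
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,  # desired number of pod copies
            "selector": {"matchLabels": labels},
            "template": {  # pod template every replica is created from
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }


manifest = deployment_manifest("web", "nginx:1.25", replicas=3, port=80)
print(json.dumps(manifest, indent=2))
```

Saved to a file such as `deployment.json`, this output could be applied with `kubectl apply -f deployment.json`. Note that it states only the outcome (three replicas of this container) and leaves the steps to Kubernetes, which is the declarative model described earlier.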

What are the differences between pods, nodes, clusters, and containers?

Some container orchestration platforms do not run containers directly. Instead, they wrap one or more containers into a structure known as pods. Within the same pod, containers can share the local network (and IP address) and resources while still maintaining isolation from containers in other pods.

Since pods are a replication unit in the orchestration platform, they scale up and down as a unit, meaning all the containers within them scale accordingly, regardless of their individual needs.

A node is a single machine, physical or virtual, that pods run on; it is the smallest unit of computing hardware in the platform. When several nodes pool their resources, they form a cluster, which a control plane (historically called the master) manages as a single system.
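The relationship between these layers can be modeled in a few lines of Python. This is a hypothetical sketch, not the Kubernetes object model: `Pod`, `Node`, `Cluster`, and `add_replicas` are illustrative names, and placement is naive round-robin. It shows the point made above: replicating a pod that holds two containers always adds both, whether or not each container needs the extra copy.

```python
from dataclasses import dataclass, field
from itertools import cycle


@dataclass
class Pod:
    # Containers in one pod share an IP address and are always replicated together.
    containers: tuple[str, ...]


@dataclass
class Node:
    name: str  # one machine, physical or virtual
    pods: list[Pod] = field(default_factory=list)


@dataclass
class Cluster:
    nodes: list[Node]  # machines whose resources are pooled and managed together

    def add_replicas(self, template: Pod, count: int) -> None:
        """Add `count` copies of a pod, spreading them across nodes in turn."""
        targets = cycle(self.nodes)
        for _ in range(count):
            next(targets).pods.append(Pod(template.containers))

    def container_count(self) -> int:
        return sum(len(pod.containers) for node in self.nodes for pod in node.pods)


cluster = Cluster([Node("node-a"), Node("node-b")])
# A pod with an app container plus a logging sidecar (names are made up).
cluster.add_replicas(Pod(("web", "log-shipper")), count=3)
print(cluster.container_count())                   # 6: both containers scale with each pod
print([len(node.pods) for node in cluster.nodes])  # [2, 1]
```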

Several managed container services and Kubernetes-based platforms and tools are available.

They include:

- Amazon Elastic Container Service (ECS)

- Azure Kubernetes Service (AKS)

- Google Kubernetes Engine (GKE)

- Red Hat OpenShift Container Platform

- VMware Tanzu

- Knative

- Istio

- Cloudify

- Rancher

Booking.com is one example of a brand that uses Kubernetes to support automated deployments and scaling for its massive web services needs.

Kubernetes’ extensive feature set can make it challenging to manage and allocate storage. It can also expose your containerized apps to security issues if one container is compromised.

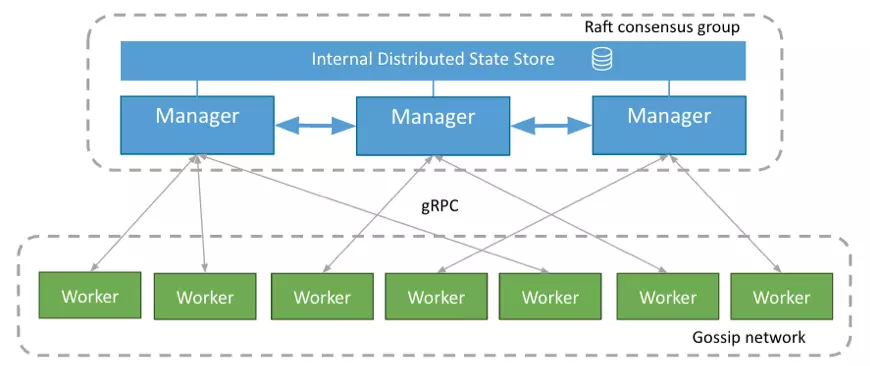

2. Docker Swarm

Credit: Docker

Docker Swarm is also a fully integrated and open-source container orchestration tool for packaging and running applications as containers, deploying them, and even locating container images from other hosts.

Docker, released in 2013, arrived about a year before Kubernetes. It popularized containers for organizations that wanted to move away from using virtual machines.

It is ideal for organizations that prefer a less complex orchestrator than Kubernetes for smaller applications.

But it also integrates with Kubernetes in its Enterprise Edition if you want the best of both worlds.

A challenge with Docker is that it runs inside a virtual machine on non-Linux platforms (e.g., Windows and macOS). It can also have issues when you want to link containers to storage. Adobe, PayPal, Netflix, AT&T, Target, Snowflake, Stripe, and Verizon are among the enterprises that use Docker.

3. Apache Mesos

The University of California at Berkeley originally developed Mesos.

Apache Mesos offers an easy-to-scale (up to 10,000 nodes), lightweight, high-availability, and cross-platform orchestration platform. It runs on Linux, Windows, and macOS, and its APIs support several popular languages such as Java, Python, and C++.

Unlike Kubernetes and Docker Swarm, Mesos on its own offers only cluster-level management; Marathon, a framework that runs on Mesos, adds container orchestration. Mesos is best suited to large enterprises, as it might be overkill for smaller organizations with leaner IT budgets.

Uber, PayPal, Twitter, and Airbnb are some brands that use the Mesos container orchestration platform.

4. Nomad

Like the others here, Nomad is an open-source workload orchestration tool for deploying and managing containers and non-containerized apps across clouds and on-premises environments at scale.

You can use Nomad as a Kubernetes alternative or a Kubernetes supplement, depending on your skills and application complexity. It is a simple and stable platform that is ideal for both small and enterprise uses. Cloudflare, Internet Archive, and Navi are some of the brands that use Nomad.

Understand, Control, And Optimize Your Container Costs The Smarter Way

Containers allow you to deploy and manage containerized apps at scale. You can do this with greater precision and automatically reduce errors and costs using a container orchestration platform.

While orchestration tools offer the benefit of automation, many organizations have difficulty connecting container orchestration benefits to business outcomes. It’s tough to tell who or what is changing your containerized costs, why, and what that means for your business.

This is where a cloud cost intelligence platform, like CloudZero, can help.

With CloudZero, you get:

- Best-in-category Kubernetes cost visibility: Allocate 100% of your Kubernetes spend regardless of the complexity of your containerized environment or how messy your labels are.

- Unit cost intelligence: Access immediately actionable cost insights such as Cost per Customer, Cost per Team, Cost per Feature, Cost per Environment, among others.

- Maximize Kubernetes efficiency: View your containerized costs in both technical terms (cost per cluster, per node, per pod, per namespace, etc.) and business terms (cost per customer, per team, per service, per feature, per deployment, etc.), putting things into perspective.

- Granular cost intelligence: Receive hourly cost insights instead of just once every 24 hours as most cost tools do.

- Unify K8s spend with non-K8s spend: View containerized and non-containerized costs in one platform. This eliminates the complexity and cost blind spots that can arise from using multiple cost tools.

Drift saved $2.4 million with CloudZero. Upstart has reduced its cloud spending by $20 million, and Remitly now allocates 50% more costs without tagging. Even so, reading about CloudZero is nothing compared to experiencing it for yourself.