Many organizations use containers nowadays. Containers solve the problem of making an application run reliably when it moves from one environment to another, for example from development to testing or production (Paul Rubens, 2017, ref.2). They simplify the continuous development and deployment lifecycle. From a security perspective, they help preserve deterministic application behaviour when used correctly. Let’s start with the basics.

Containers vs Virtual Machines

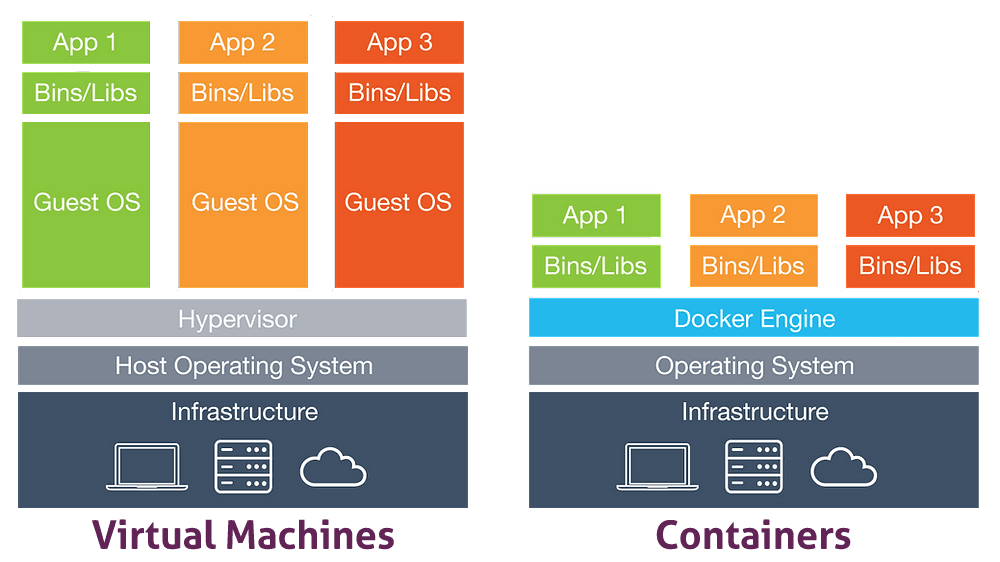

Before containers became widespread, most applications were hosted on virtual machines (VMs). A VM is a virtualization/emulation of a physical computer, with its own operating system and virtually provisioned CPU, memory, storage and network interface. Hypervisor software separates the VM’s resources from the host hardware, so multiple VMs can run on the same host. With VMs, it became possible to run the same application across multiple environments on the same host or on different hosts. This saves costs compared with dedicating a physical server to each application.

Compared with containers, VMs are slower to spin up and less portable. They also require more resources because they need a full guest operating system.

Containerization

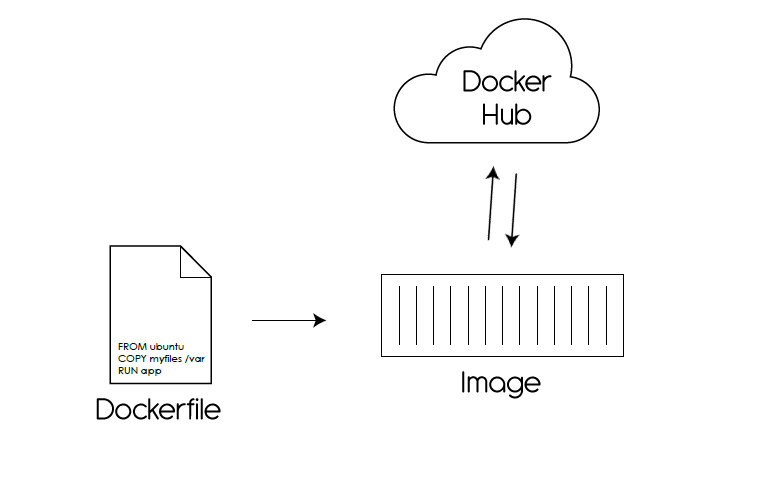

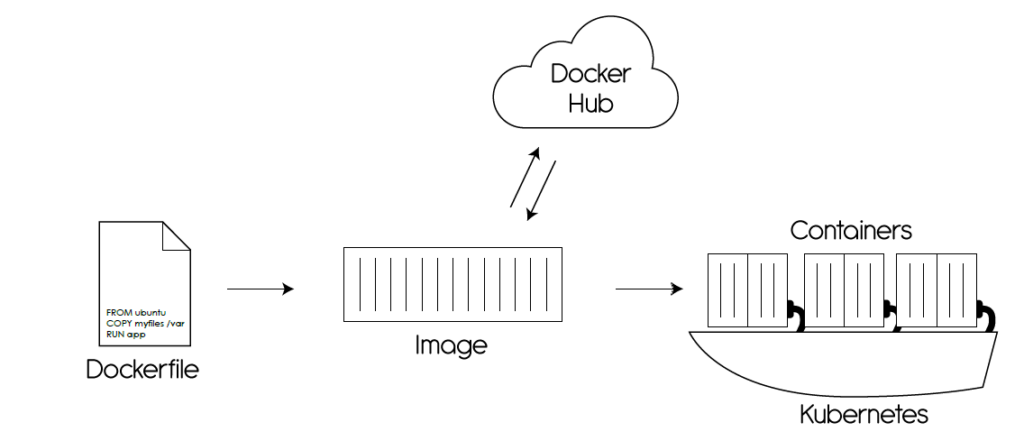

Containerization is a technology for packaging application code, its dependencies and its configuration into a container image. A container is a running instance of an image. The starting point for building a custom image is a base image (like Alpine Linux), usually created by vendors or communities and uploaded to an image registry (a repository that stores container images, e.g. Docker Hub).
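To make the relationship between registry, repository and image tag more concrete, here is a minimal sketch that splits an image reference into its parts. The defaults it applies (`docker.io`, the `library/` prefix and the `latest` tag) follow Docker's conventions; the function and class names are made up for this illustration, and digests (`@sha256:...`) are deliberately not handled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ImageReference:
    registry: str
    repository: str
    tag: str


def parse_image_reference(ref: str) -> ImageReference:
    """Split a reference such as 'alpine:3.19' into registry, repository and tag.

    Simplified on purpose: digests ('@sha256:...') are not handled.
    """
    # A ':' only marks a tag if it comes after the last '/',
    # so a registry port (localhost:5000/app) is not mistaken for a tag.
    last_slash = ref.rfind("/")
    last_colon = ref.rfind(":")
    if last_colon > last_slash:
        name, tag = ref[:last_colon], ref[last_colon + 1:]
    else:
        name, tag = ref, "latest"

    # The first path component counts as a registry host only if it looks like one.
    first, sep, rest = name.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest
    else:
        registry, repository = "docker.io", name
        if "/" not in repository:
            # Official images on Docker Hub live under the 'library' namespace.
            repository = "library/" + repository
    return ImageReference(registry, repository, tag)


if __name__ == "__main__":
    for example in ("alpine", "alpine:3.19", "localhost:5000/team/app:1.0"):
        print(example, "->", parse_image_reference(example))
```

With this sketch, the base image `alpine` resolves to the `library/alpine` repository on Docker Hub with the tag `latest`.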

Container Runtimes

Containers run using a container runtime, a software component that can run containers on a host OS. Some container runtimes are compatible with both Linux and Windows, which makes the containers cross-platform. Containers isolate the software from its environment and make it run identically despite differences between environments, for example, staging and production.

The most popular container technology is Docker. For many people it has become synonymous with containers. The goal of the container runtime is to virtualize system resources like the filesystem or network. This is done using Linux namespaces, while resources like CPU and memory are limited using cgroups (Ian Lewis, 2017, ref.1).
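Namespaces and cgroups are ordinary Linux kernel features, so any process can look up its own. The sketch below reads them from `/proc`; the function names are made up for this illustration, and on systems without a Linux-style `/proc` the functions simply return empty results.

```python
import os
from pathlib import Path


def current_namespaces(pid: str = "self") -> dict:
    """Map each namespace type (e.g. 'pid', 'net', 'mnt') to its identifier."""
    ns_dir = Path("/proc") / pid / "ns"
    result = {}
    if not ns_dir.is_dir():  # not Linux, or /proc is not mounted
        return result
    for entry in sorted(ns_dir.iterdir()):
        try:
            # Each entry is a symlink whose target looks like 'pid:[4026531836]'.
            result[entry.name] = os.readlink(entry)
        except OSError:
            continue  # entry not readable for this process
    return result


def current_cgroups(pid: str = "self") -> list:
    """Return the lines of /proc/<pid>/cgroup, or an empty list if unavailable."""
    try:
        return (Path("/proc") / pid / "cgroup").read_text().splitlines()
    except OSError:
        return []


if __name__ == "__main__":
    for ns_type, ns_id in current_namespaces().items():
        print(f"{ns_type:20} {ns_id}")
    for line in current_cgroups():
        print("cgroup:", line)
```

Running it once on the host and once inside a container should show different namespace identifiers, which is the isolation the runtime sets up.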

There are two types of container runtimes: low-level and high-level. The low-level container runtime is responsible for running the container. It sets up the namespaces and cgroups for the containers. The high-level runtime is responsible for managing containers and their images. It pulls the container image from a registry, unpacks it, and passes it to the low-level runtime.
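One way to picture the hand-over between the two layers is the configuration that a high-level runtime prepares for a low-level one. The sketch below builds a small, incomplete configuration in the style of the OCI runtime specification's `config.json`. It is an assumption-laden illustration, not a complete or validated config: the field names follow my reading of the specification and should be checked against it, and `build_runtime_config` is a hypothetical helper.

```python
import json


def build_runtime_config(args, memory_limit_bytes, cpu_shares):
    """Return a partial, OCI-style runtime configuration as a dict.

    Illustrative only: a real config.json contains many more fields.
    """
    return {
        "ociVersion": "1.0.2",
        # What to run inside the container.
        "process": {"args": list(args), "cwd": "/"},
        # Where the unpacked image filesystem lives, relative to the bundle.
        "root": {"path": "rootfs", "readonly": True},
        "linux": {
            # Namespaces the low-level runtime should create for isolation.
            "namespaces": [
                {"type": t} for t in ("pid", "network", "mount", "ipc", "uts")
            ],
            # Resource limits that the low-level runtime enforces with cgroups.
            "resources": {
                "memory": {"limit": memory_limit_bytes},
                "cpu": {"shares": cpu_shares},
            },
        },
    }


if __name__ == "__main__":
    config = build_runtime_config(["/bin/sh"], 256 * 1024 * 1024, 512)
    print(json.dumps(config, indent=2))
```

The split of responsibilities is visible in the data: the image-related work ends with a root filesystem and a config, and everything under `linux` is what the low-level runtime turns into namespaces and cgroups.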

The Docker engine is a lightweight open-source containerization technology that implements high-level and low-level runtimes. It is combined with a workflow for building and containerizing applications. It can be installed on a large variety of operating systems.

Container History

Container technology for Linux appeared in 2008 in the form of LXC. Other vendors offer their own system virtualization, such as Solaris Containers, FreeBSD jails, and AIX Workload Partitions (Paul Rubens, 2017, ref.2).

In 2015 a company called CoreOS created its own container runtime, Rocket (rkt), which could potentially have fragmented the container industry. It was a competitor to Docker. The rkt project was closed in February 2020, but it remains a significant piece of the history of container technology (lucab, 2018, ref.3).

In the same year, 2015, a Linux Foundation project called Open Container Project, later renamed Open Container Initiative (OCI), was created to define industry standards for the container format and container runtime software for every platform. This project is sponsored by companies like VMware, AWS, Google, Microsoft, Red Hat, Oracle, IBM, HP, Twitter, Docker, and CoreOS. This makes it a considerable authority in defining container technology standards (Paul Rubens, 2017, ref.2).

The starting point for OCI standards was Docker, which donated 5 percent of its codebase to launch this project. This project made companies focus on developing container orchestration tools for existing container technology instead of developing competitive container technologies. Currently, several companies compete with each other in developing orchestration tools. However, they respect OCI standards, meaning a container will run identically on any orchestration tool (Paul Rubens, 2017, ref.2).

Container Advantages

- Cross-platform compatibility;

- Use fewer resources than VMs (e.g. storage, computing power);

- Start faster than VMs, together with the application;

- They can be destroyed quickly, freeing up resources for their host;

- They allow for modularity. Instead of running an entire application in one container, it can be split across multiple containers. For example, a web application can be split into a database, a front-end, and a back-end container, so that the application is divided into microservices. This makes the application easier to change, because only the updated component needs to be rebuilt.

Container Disadvantages

- If the host kernel is vulnerable, then all the containers are vulnerable;

- Some legacy software cannot be migrated to containers and can run only on VMs;

- Containers provide less isolation compared to VMs because they share the host kernel.
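The shared kernel is easy to observe. The small sketch below prints the kernel release seen by the current process; `kernel_release` is just a name chosen for this illustration. On a Linux host, running it on the host and inside a container is expected to print the same value, because the container has no guest kernel of its own. On setups where containers run inside a helper VM (for example Docker Desktop on macOS or Windows), the value reflects that VM's kernel instead.

```python
import platform


def kernel_release() -> str:
    """Return the release string of the kernel this process is running on."""
    return platform.release()


if __name__ == "__main__":
    print("kernel release:", kernel_release())
```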

Container Orchestrator

Containers are usually run in a container orchestration environment. Its goal is to automate container deployment, scaling, monitoring, and management. Orchestration is essential for individuals and companies running many containers simultaneously because it is almost impossible to manage them manually. There are many vendors of container orchestration tools, but the most popular is Kubernetes. It is an open-source tool initially designed by Google to manage its infrastructure. It is now maintained by the Cloud Native Computing Foundation.
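The core idea behind this automation can be sketched as a comparison between a desired state and the observed state. The function below is a toy model of that idea, not Kubernetes code: the `reconcile` name, the `web-N` naming scheme and the action strings are all made up for this illustration.

```python
def reconcile(desired_replicas: int, running: list, name: str = "web") -> list:
    """Return the actions needed to move from the running set to the desired count."""
    actions = []
    if len(running) < desired_replicas:
        # Too few containers: start new ones under unused names.
        missing = desired_replicas - len(running)
        existing = set(running)
        index = 0
        while missing > 0:
            candidate = f"{name}-{index}"
            if candidate not in existing:
                actions.append(f"start {candidate}")
                existing.add(candidate)
                missing -= 1
            index += 1
    elif len(running) > desired_replicas:
        # Too many containers: stop the surplus, keeping the first ones by name.
        for container in sorted(running)[desired_replicas:]:
            actions.append(f"stop {container}")
    return actions


if __name__ == "__main__":
    print(reconcile(3, ["web-0"]))           # scale up
    print(reconcile(1, ["web-0", "web-1"]))  # scale down
    print(reconcile(2, ["web-0", "web-1"]))  # nothing to do
```

An orchestrator runs this kind of comparison continuously, which is why a crashed container is replaced without anyone intervening by hand.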

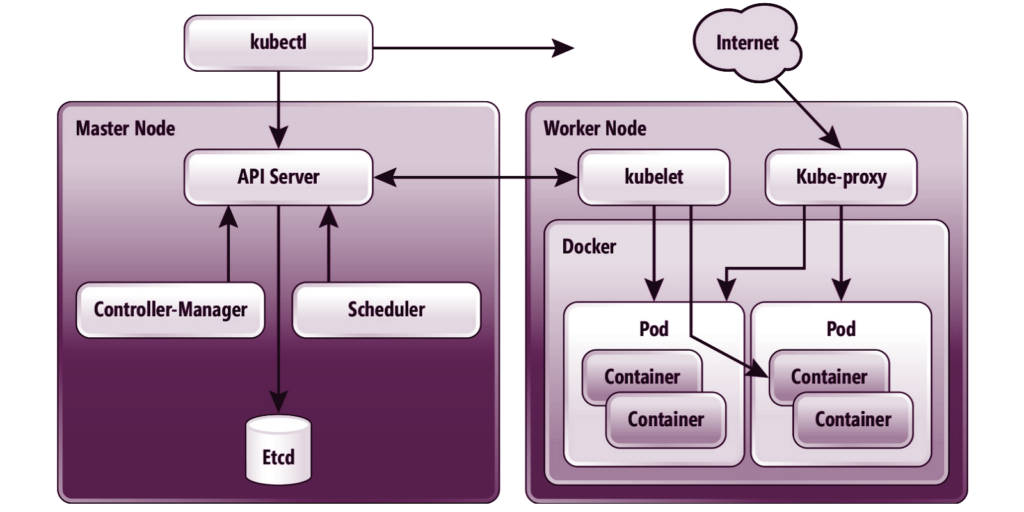

The image below shows a more detailed architecture of a Kubernetes cluster and how its components communicate with each other:

Source: redmondmag.com

A Kubernetes cluster comprises a master node (called a control plane node in current Kubernetes terminology) and one or more worker nodes, where all the workloads are hosted. The master node serves as a management node. It can also host workloads, but this is considered bad practice: if a workload on the master node is compromised, the whole cluster will probably be compromised.

Kubernetes Components

Kubectl is a command-line tool used to communicate with the Kubernetes API server and manage the cluster;

API Server is a RESTful web server that handles the management of the whole cluster. It receives requests from clients, authenticates, authorizes and processes them, and then stores the resulting state in etcd for further processing and use;

Etcd is a key-value store that holds the most sensitive data within the cluster. Access to it should be restricted to as few users as possible, because full access to etcd is equivalent to root access to the cluster;

Controller Manager is a daemon that watches for specific updates in the API Server and runs the controllers that keep the cluster state consistent;

The scheduler is a component that watches the API Server for newly created pods that have no node assigned, reviews the node list for available resources, and records the chosen node through the API Server, where the Kubelet on that node picks it up;

Kubelet is an agent that runs on each node and handles pod creation, logging, and status reporting. It is also the endpoint that the API Server calls to get the status and logs of the pods;

Kube-proxy is a component that, along with Container Networking Interface (CNI), makes sure that all containers, Pods, and nodes can communicate with each other as if they were on a single network;

Docker is explained in the paragraphs above;

A Pod is a group of one or more containers that share the same storage and network;

The container is explained in the paragraphs above.
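To tie a few of these components together, here is a toy sketch of a scheduler's node-selection step for a Pod with two containers. It is a simplified illustration under stated assumptions, not the algorithm used by the real kube-scheduler: the `Node` class, the `pick_node` function, the resource fields and the "most free CPU wins" rule are all hypothetical. It does follow the same broad shape of first filtering out unsuitable nodes and then scoring the remaining ones, and it skips the master node in line with the practice described above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int
    control_plane: bool = False  # True for the master node


# A Pod groups one or more containers; the requests here are per container.
pod = {
    "name": "web",
    "containers": [
        {"name": "app", "cpu_millicores": 250, "memory_mib": 128},
        {"name": "log-shipper", "cpu_millicores": 100, "memory_mib": 64},
    ],
}


def pick_node(pod: dict, nodes: list) -> Optional[str]:
    """Return the name of a node that can host the whole Pod, or None."""
    # All containers of a Pod land on the same node, so their requests add up.
    cpu = sum(c["cpu_millicores"] for c in pod["containers"])
    mem = sum(c["memory_mib"] for c in pod["containers"])

    # Filter: keep worker nodes with enough free CPU and memory.
    feasible = [
        n for n in nodes
        if not n.control_plane
        and n.free_cpu_millicores >= cpu
        and n.free_memory_mib >= mem
    ]
    if not feasible:
        return None  # the Pod stays unscheduled

    # Score: prefer the node with the most free CPU, then the most free memory.
    best = max(feasible, key=lambda n: (n.free_cpu_millicores, n.free_memory_mib))
    return best.name


if __name__ == "__main__":
    nodes = [
        Node("master", 4000, 8192, control_plane=True),
        Node("worker-1", 300, 512),
        Node("worker-2", 1000, 1024),
    ]
    print(pick_node(pod, nodes))
```

In this example the Pod needs 350 millicores in total, so `worker-1` is filtered out and `worker-2` is chosen even though the master node has the most free capacity.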

References

Ian Lewis (06.12.2017), “Container Runtimes Part 1: An Introduction to Container Runtimes”

Paul Rubens (27.06.2017), “What are containers and why do you need them?”

lucab (16.04.2018), “Releases rkt”