Today Salesforce.com announced Database.com at Dreamforce. I realized that many people could be wondering why they decided to do this and, more to the point, why now.

The answer is Data Gravity.

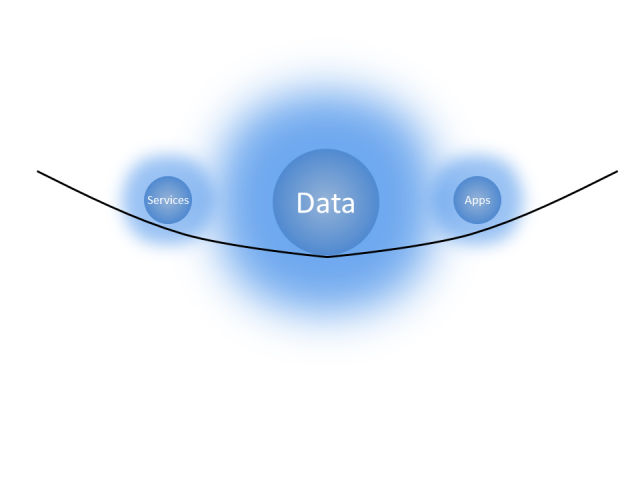

Consider Data as if it were a Planet or other object with sufficient mass. As Data accumulates (builds mass), there is a greater likelihood that additional Services and Applications will be attracted to this Data. This is the same effect Gravity has on objects around a planet. As the mass or density increases, so does the strength of the gravitational pull. As things get closer to the mass, they accelerate toward it at an ever-increasing velocity. The accompanying diagram relates this analogy to Data.

Services and Applications can have their own Gravity, but Data is the most massive and dense, so it has the most Gravity. Data, if large enough, can be virtually impossible to move.
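For reference, the physics behind the analogy is Newton's law of universal gravitation. This is the standard physical law, not a formula for Data Gravity itself; reading $m_1$ as a Data Mass and $m_2$ as an Application or Service is only an illustrative mapping.

$$F = G\,\frac{m_1 m_2}{r^2}, \qquad a = \frac{F}{m_2} = \frac{G\,m_1}{r^2}$$

Here $F$ is the attractive force, $G$ is the gravitational constant, $r$ is the distance between the two bodies, and $a$ is the acceleration of the smaller body. The pull grows with mass and grows sharply as the distance shrinks, which matches the behavior described above.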

What accelerates Services and Applications to each other and to Data (the Gravity)?

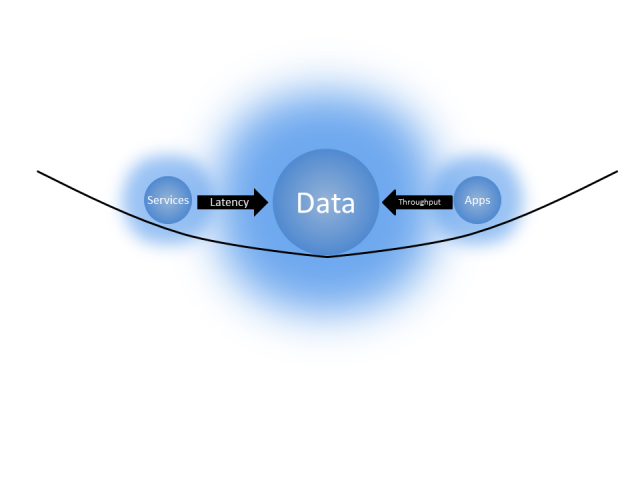

Latency and Throughput act as the accelerators, creating a stronger and stronger reliance or pull between them. This is the very reason that VMforce is so important to Salesforce’s long-term strategy. The accompanying diagram shows the accelerant effect of Latency and Throughput. The assumption is that the closer you are to the Data (i.e. in the same facility), the higher the Throughput and the lower the Latency, and the more reliant those Applications and Services will become on Low Latency and High Throughput.

Note: Latency and Throughput apply equally to both Applications and Services.
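To make the effect of locality concrete, here is a minimal sketch that assumes the time for a request is roughly the latency cost plus the payload size divided by throughput. The `Link` class, the `request_time` function, and every figure below are hypothetical and chosen only to show the shape of the effect; they are not measurements of any Salesforce service.

```python
from dataclasses import dataclass


@dataclass
class Link:
    """A network path between an application and its data (illustrative only)."""
    name: str
    latency_s: float       # round-trip latency in seconds
    throughput_bps: float  # sustained throughput in bytes per second


def request_time(link: Link, payload_bytes: float, round_trips: int = 1) -> float:
    """Approximate time for one request: latency cost plus transfer cost."""
    return round_trips * link.latency_s + payload_bytes / link.throughput_bps


# Hypothetical figures (assumptions, not measurements).
same_facility = Link("same facility", latency_s=0.0005, throughput_bps=1.25e9)  # 0.5 ms, ~10 Gbit/s
remote = Link("remote site", latency_s=0.05, throughput_bps=1.25e7)             # 50 ms, ~100 Mbit/s

if __name__ == "__main__":
    one_mb = 1e6
    one_tb = 1e12
    for link in (same_facility, remote):
        chatty = 100 * request_time(link, one_mb)  # 100 sequential 1 MB requests
        bulk = request_time(link, one_tb)          # moving 1 TB in a single transfer
        print(f"{link.name}: 100 requests = {chatty:.2f} s, 1 TB move = {bulk / 3600:.2f} h")
```

Under these assumed numbers, the same 100 requests take about 0.13 seconds inside the facility and about 13 seconds from the remote site, while moving the full terabyte goes from minutes to most of a day. That gap is the pull that keeps Applications and Services close to the Data.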

How does this all relate back to Database.com? If Salesforce.com can build a new Data Mass that is general purpose, but still close in locality to its other Data Masses and App/Service Properties, it will be able to grow its business and customer base that much more quickly. It also allows VMforce to store data outside the construct of ForceDB (Salesforce’s core database), enabling new Adjacent Services with persistence.

The analogy also holds for weight: just as your weight differs from one planet to another, services and applications (compute) have different weights depending on Data Gravity and the Data Mass(es) they are associated with.

Here is a 3D video depicting what I diagrammed at the beginning of the post in 2D.

More on Data Gravity soon (there is a formula in this somewhere).

Excellent post Dave!

I’d like to add that the 3rd dimension of your model is clearly cost. No doubt Latency and Throughput can be had at a cost. The cloud services vendors that successfully balance (i.e. likely minimize) all three will become the data “black holes” of the cloud universe, consuming the most stars (aka cloud customers).

…I have a strange desire to watch a few NOVA episodes with Neil deGrasse Tyson again. 😉

Keep the great posts coming!

Software has mass both in the physics sense (it is made up of bits that are stored somewhere, and these have mass) and in the analogous sense, in that software consumes resources, including storage.

No, software is an algorithm. Algorithms are Data, so an algorithm must be put somewhere to be of any use. Wherever that place is, it consumes some measurable mass (even a punch card or abacus implementing the algorithm has mass).

Reblogged this on i4t.org and commented:

Data gravity is a real issue for cloud solution providers, as businesses do not want their sensitive data to leave their network. This means that cloud-based solutions have to meet the data where it resides, providing on-demand access to data as it is required. Ideally the cloud provider never stores the data, but is aware of the data and its content in a secure way and is able to pass that data on.