Practical Steps for Enhancing Reliability in Cloud Networks - Part I

Summary

Delivering on network reliability causes an enterprise’s data to become more distributed, introducing advanced challenges like complexity and data gravity for network engineers and operators. Learn concrete steps for implementing cloud reliability and the trade-offs that come with them.

When evaluating solutions, whether to internal problems or those of our customers, I like to keep the core metrics fairly simple: will this reduce costs, increase performance, or improve the network’s reliability?

Network specialists often take it for granted that there is a trade-off among these three facets. If a solution is cheap, it is probably not very performant or particularly reliable. Does a solution offer an impressive degree of reliability? Then it is unlikely to also be inexpensive and performant. Balancing these trade-offs across the many components of at-scale cloud networks sits at the core of network design and implementation.

While there is much to be said about cloud costs and performance, I want to focus this article primarily on reliability. Of the three, reliability is the principal challenge for network engineers working in and with the cloud.

What is cloud network reliability?

Reliability is the degree to which a network is able to behave as expected, even in the presence of stress or failures. Accounting for these stresses and failures in highly scaled, distributed cloud networks can be particularly challenging, as many network components and services are temporary, highly elastic, and outside the complete control of network operators.

The components of reliability

With such a broad mission, reliability is almost a catch-all for several related network health components. Each of these components provides a critical aspect of delivering a reliable network:

- Availability. Simply put, availability is uptime. Highly available networks resist the failures and interruptions that lead to downtime. High availability can be achieved through various strategies, including redundancy, savvy configuration, and architectural services like load balancing.

- Resiliency. Resiliency is a network’s (or network component’s) ability to recover in the presence of stressors or failure. Resilient networks can handle attacks, dropped connections, and interrupted workflows. Resiliency can be contrasted with redundancy, which replaces, as opposed to restores, a compromised network component.

- Durability. When network service has been interrupted, durability measures ensure that network data remains accurate and complete. This can mean redundant and more complex data infrastructure, or properly accounting for more nuanced concepts like idempotency and determinism in the presence of failure.

- Security. Vulnerabilities enable malicious actors to compromise a network’s availability, resiliency, and durability. Even the most detailed reliability engineering can be easily undermined in an insecure network.
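Resiliency and durability often meet at the retry: a client that recovers from a dropped connection by resending a write must not create the same record twice. The minimal sketch below assumes a hypothetical `RecordStore` that applies each idempotency key at most once, and a hypothetical `TransientError` raised when a request or its acknowledgement is lost. The client retries with exponential backoff and reuses the same key on every attempt, so a retry after a lost acknowledgement is harmless.

```python
import time
import uuid


class TransientError(Exception):
    """Hypothetical error raised when a request or its acknowledgement is dropped."""


class RecordStore:
    """Hypothetical store that applies each idempotency key at most once."""

    def __init__(self):
        self.records = {}

    def put(self, key, value):
        # A key we have already seen is a retry of a write that already landed.
        if key not in self.records:
            self.records[key] = value
        return self.records[key]


def send_with_retry(send, key, value, attempts=5, base_delay=0.1, sleep=time.sleep):
    """Retry a write with exponential backoff, reusing the same idempotency key."""
    for attempt in range(attempts):
        try:
            return send(key, value)
        except TransientError:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the failure to the caller
            # Wait 0.1 s, 0.2 s, 0.4 s, ... between attempts.
            sleep(base_delay * (2 ** attempt))


store = RecordStore()
calls = {"n": 0}


def flaky_send(key, value):
    """Simulated transport: the first write lands, but its acknowledgement is lost."""
    calls["n"] += 1
    result = store.put(key, value)
    if calls["n"] == 1:
        raise TransientError("ack dropped")
    return result


key = str(uuid.uuid4())
send_with_retry(flaky_send, key, {"invoice": 1042, "amount": 99.50}, sleep=lambda s: None)
print(len(store.records))  # 1: the retry did not duplicate the record
```

Without the idempotency key, the same retry loop would improve resiliency at the expense of durability, which is exactly the kind of interaction between reliability components described above.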

Implementing cloud reliability

Making a cloud network reliable is, as of this writing, more than a matter of checking a few boxes in a GUI. It takes cross-departmental planning to envision, create, test, and monitor the best-for-business version of a reliable network. When reliability is implemented poorly, many organizations find themselves wasting resources on arbitrary monitoring and persistently vulnerable systems.

Whatever your organization’s best-fit version of reliability, there will still be some consistent features and challenges.

Redundancy

While not always the most affordable option, one of the most direct reliability strategies is redundancy. Be it power supplies, servers, routers, load balancers, proxies, or any other physical and virtual network components, the horizontal scaling that redundancy provides is the ultimate safety net in the presence of failure or atypical traffic demands.

Besides the direct costs associated with having more instances of a given component, redundancy introduces additional engineering concerns like orchestration and data management. Careful attention needs to be paid here as these efforts can complicate other aspects of network reliability, namely durability.
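The value of redundancy can be estimated with standard availability arithmetic. The sketch below assumes that instance failures are independent, an assumption that correlated events such as a shared power feed or a single availability zone outage can break. With that assumption, n redundant instances that can each carry the load fail together with probability (1 − a)^n, while a path that needs every component up at once is only as available as the product of its parts.

```python
def parallel_availability(a: float, n: int) -> float:
    """Availability of n redundant instances, any one of which can carry the load.

    Assumes failures are independent; correlated failures make the real
    figure worse than this estimate.
    """
    return 1 - (1 - a) ** n


def series_availability(*components: float) -> float:
    """Availability of a path that needs every component up at the same time."""
    result = 1.0
    for a in components:
        result *= a
    return result


for n in (1, 2, 3):
    print(n, round(parallel_availability(0.99, n), 6))
# 1 0.99
# 2 0.9999
# 3 0.999999
```

The series function is a reminder of why redundancy matters at every tier: chaining components (for example, a router, a firewall, and a load balancer) lowers end-to-end availability, while duplicating them raises it, at the cost discussed later in this article.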

Monitoring

An essential part of my definition of reliability is “as expected,” which implies that there are baselines and parameters in place to shape what reliable performance means for a given network. Direct business demands like SLAs (service level agreements) help define firm boundaries for network performance. However, arriving at specs for other aspects of network performance requires extensive monitoring, dashboarding, and data engineering to unify this data and help make it meaningful.
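An SLA expressed as an availability percentage translates directly into a downtime budget, which gives monitoring a concrete number to alert against. The sketch below assumes a 30-day measurement window; actual SLAs define their own windows and exclusions.

```python
MINUTES_PER_30_DAYS = 30 * 24 * 60  # 43,200 minutes; the 30-day window is an assumption


def downtime_budget_minutes(sla_percent: float, period_minutes: int = MINUTES_PER_30_DAYS) -> float:
    """Minutes of downtime an availability target allows over a period."""
    return period_minutes * (1 - sla_percent / 100)


for sla in (99.0, 99.9, 99.99):
    print(f"{sla}% -> {downtime_budget_minutes(sla):.1f} min per 30 days")
# 99.0% -> 432.0 min per 30 days
# 99.9% -> 43.2 min per 30 days
# 99.99% -> 4.3 min per 30 days
```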

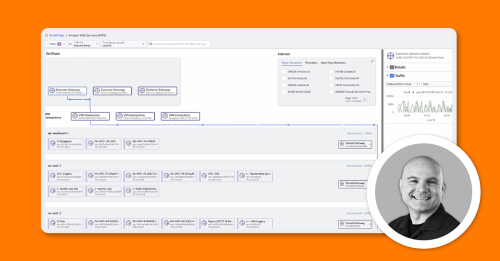

By collecting and analyzing network telemetry, including traffic flows, bandwidth usage, packet loss rates, and error rates, NetOps teams use monitoring to detect and diagnose potential bottlenecks, security threats, and other issues that can impact network reliability, often before end users even notice a problem. Additionally, monitoring becomes critical for network optimization by identifying areas where resources are under- or overutilized.
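As a small illustration of turning raw telemetry into findings, the sketch below derives outbound utilization and packet loss from interface counter deltas and flags links that are over- or underutilized or dropping packets. The `InterfaceSample` schema and all threshold values are hypothetical placeholders; real baselines should come from your own monitoring history and SLAs.

```python
from dataclasses import dataclass


@dataclass
class InterfaceSample:
    """Counter deltas for one interface over one polling interval (hypothetical schema)."""
    name: str
    capacity_bps: float
    interval_s: float
    bytes_out: int
    packets_out: int
    packets_dropped: int


def assess(sample, high_util=0.8, low_util=0.1, max_loss=0.001):
    """Return findings for one sample. Thresholds are illustrative, not recommendations."""
    # Outbound utilization: bits sent divided by bits the link could have sent.
    utilization = (sample.bytes_out * 8) / (sample.capacity_bps * sample.interval_s)
    attempted = sample.packets_out + sample.packets_dropped
    loss = sample.packets_dropped / attempted if attempted else 0.0
    findings = []
    if utilization > high_util:
        findings.append(f"{sample.name}: overutilized ({utilization:.0%})")
    elif utilization < low_util:
        findings.append(f"{sample.name}: underutilized ({utilization:.0%})")
    if loss > max_loss:
        findings.append(f"{sample.name}: packet loss {loss:.2%}")
    return findings


samples = [
    InterfaceSample("wan-1", 10e9, 60, 67_500_000_000, 50_000_000, 100_000),
    InterfaceSample("wan-2", 10e9, 60, 3_000_000_000, 2_500_000, 0),
]
for s in samples:
    for finding in assess(s):
        print(finding)
# wan-1: overutilized (90%)
# wan-1: packet loss 0.20%
# wan-2: underutilized (4%)
```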

Network reliability engineers (NREs)

The scale, transient nature of resources and topology, and extensive network boundaries make delivering on network reliability a complex and specialized task. Much the way containerization and cloud computing called for the advent of site reliability engineers (SREs) in application development, the complexity of cloud networks has led to the rise of network reliability engineers (NREs).

Their role involves working closely with network architects, software developers, and operations teams to ensure consistent networking priorities and implementations. NREs typically have a strong background in network engineering and are well-versed in technologies such as routing protocols, switching, load balancing, firewalls, and virtual private networks (VPNs). They also have expertise in automation, scripting, and software-defined networking (SDN) to streamline network operations and reduce manual errors.

The trade-offs of cloud network reliability

To close, I’d like to bring the discussion back to the trade-offs in cost and performance that network operators have to make when prioritizing reliability.

Costs

Redundancy isn’t cheap. No matter how you slice it, additional instances, hardware, and so on will simply cost more than having fewer. And while redundancy isn’t the only way to ensure reliability in the cloud, it is likely to be a significant part of an organization’s strategy.

Thankfully, cloud networks give us a lot of options for tuning reliability up (or down):

- VPN or private backbone

- Load balancer at network tier or application tier

- Application-focused load distribution and degraded failures instead of total failures

- Availability zone allocation and multiple networks and paths

- Diversity of WAN providers (two or more providers)

- Multipath choices

- Link aggregation (LAG)

- Equal-cost multipath (ECMP) routing

- Routing path selection (even if sub-optimal)

- Diversity of geography

- Diversity of name resolution (DNS), which can steer traffic among the choices above

- Caching of content (CDN) to reduce the volume of data being delivered to an endpoint

- Applying quality of service (QoS) for preferred applications

- Examples of preferred applications typically placed in this category are voice and video (Zoom, WebEx, etc.), accounting, cash registers, and customer-facing sites. Examples of deferred applications are backups, data replication (if it can catch up after daily peak business loads), and back-office needs like housekeeping or HR.
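Several of the multipath options above, ECMP in particular, rely on per-flow hashing: each flow's 5-tuple is hashed to pick one of several equal-cost next hops, so packets within a flow stay in order while different flows spread across paths. The sketch below uses SHA-256 purely as a stand-in; real routers and cloud load balancers use their own, often vendor-specific, hash functions, and the addresses and path names are hypothetical.

```python
import hashlib
from collections import Counter


def ecmp_next_hop(flow, next_hops):
    """Pick a next hop for a flow by hashing its 5-tuple.

    flow is (src_ip, dst_ip, protocol, src_port, dst_port). SHA-256 stands in
    for whatever hash a real router or load balancer uses.
    """
    key = "|".join(str(field) for field in flow).encode()
    digest = hashlib.sha256(key).digest()
    return next_hops[int.from_bytes(digest[:8], "big") % len(next_hops)]


paths = ["path-a", "path-b", "path-c", "path-d"]
flow = ("10.0.1.15", "10.0.9.20", "tcp", 51514, 443)

# The same flow always maps to the same path, so its packets are not reordered.
assert ecmp_next_hop(flow, paths) == ecmp_next_hop(flow, paths)

# Many distinct flows spread roughly evenly across the equal-cost paths.
flows = [("10.0.1.15", "10.0.9.20", "tcp", port, 443) for port in range(40000, 44000)]
counts = Counter(ecmp_next_hop(f, paths) for f in flows)
print(counts)
```

One design caveat: with plain modulo hashing, adding or removing a path remaps most existing flows, which is why some platforms offer resilient or consistent hashing for ECMP groups.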

Optimizing against these choices is going to be a shifting challenge for network operators, but if handled correctly, networks can achieve reliability that fits both cost constraints and business goals.

Performance

If not adequately accounted for, the additional data infrastructure, monitoring efforts, and redundancy measures can soak up the bandwidth available in data centers and cloud contracts, leading to network issues like cascading failures that can be difficult to pinpoint.

Top talkers, the hosts or applications that generate the most traffic, are often the cause of outages for application stacks. A top talker can be something like customers during a Black Friday sale. But sometimes, a top talker is a backup operation that simply grew in size until the backup process exceeded its projected backup window.
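Finding top talkers usually starts with aggregating flow records by source. The sketch below assumes simplified records of `(source, destination, bytes)`, a hypothetical stand-in for what a flow exporter would provide, and reports the sources that sent the most data. The addresses and hostnames are illustrative.

```python
from collections import Counter


def top_talkers(flow_records, n=3):
    """Sum bytes per source and return the n largest senders."""
    totals = Counter()
    for src, _dst, byte_count in flow_records:
        totals[src] += byte_count
    return totals.most_common(n)


records = [
    ("10.0.2.40", "storage.example.internal", 900_000_000_000),  # backup job
    ("10.0.1.15", "10.0.9.20", 40_000_000_000),
    ("10.0.2.40", "storage.example.internal", 300_000_000_000),  # same backup job
    ("10.0.3.7", "10.0.9.20", 25_000_000_000),
]
print(top_talkers(records, n=2))
# [('10.0.2.40', 1200000000000), ('10.0.1.15', 40000000000)]
```

Tracking this ranking over time is what reveals a backup job that has quietly grown past its window.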

When backup operations run during staffed business hours, customer visits, or partner-critical operations, contention occurs. As organizations move into the cloud, however, many treat customer receipts, invoicing, and other records as a system of record (SOR) and write them directly to cloud storage. This acts as something of a backup for business operations, but it does not meet the typical definition of a “backup operation,” so it is not necessarily confined to a backup window.
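One way to keep backups and SOR uploads from starving business traffic is the preferred/deferred quality-of-service split from the cost list earlier. The sketch below models a strict-priority transmit queue in which deferred classes send only when nothing preferred is waiting. The `TRAFFIC_CLASS` mapping and its priority numbers are hypothetical, and production QoS typically uses weighted queues and policers rather than pure strict priority, which can starve deferred traffic indefinitely.

```python
import heapq
import itertools

# Lower number = higher priority. These class assignments are illustrative only.
TRAFFIC_CLASS = {
    "voice": 0,
    "video": 0,
    "pos": 1,          # cash registers
    "web": 1,          # customer-facing sites
    "replication": 3,
    "backup": 3,
}


class PriorityScheduler:
    """Strict-priority queue: deferred traffic sends only when nothing preferred waits."""

    def __init__(self):
        self._queue = []
        self._seq = itertools.count()  # preserves FIFO order within a class

    def enqueue(self, app, packet):
        priority = TRAFFIC_CLASS.get(app, 2)  # unknown apps get a middle class
        heapq.heappush(self._queue, (priority, next(self._seq), app, packet))

    def dequeue(self):
        _priority, _seq, app, packet = heapq.heappop(self._queue)
        return app, packet


sched = PriorityScheduler()
sched.enqueue("backup", "chunk-1")
sched.enqueue("voice", "rtp-1")
sched.enqueue("pos", "txn-1")
sched.enqueue("backup", "chunk-2")
print([sched.dequeue()[0] for _ in range(4)])
# ['voice', 'pos', 'backup', 'backup']
```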

Working with customers, I have seen bandwidth consumption patterns of 4:1, where four 10 Gbps links are needed to store customer records in cloud storage, but only a single 10 Gbps link is necessary for typical day-to-day application processing. In this case, separating the storage traffic from normal business traffic enhances both performance and reliability. However, deploying four more 10 Gbps circuits for cloud storage becomes the cost of achieving that performance and reliability.
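The arithmetic behind a split like the 4:1 pattern above can be sketched as a simple circuit count. The peak demands and the 90% utilization ceiling below are hypothetical values chosen only to match that ratio. Under these assumptions, separating the traffic does not reduce the number of circuits; what it buys is isolation, so a surge in storage uploads cannot congest the link that day-to-day applications depend on.

```python
import math


def links_needed(peak_gbps, link_gbps=10.0, max_utilization=0.9):
    """Circuits required to carry a peak load without exceeding a utilization ceiling."""
    return math.ceil(peak_gbps / (link_gbps * max_utilization))


business_peak = 8.0   # hypothetical day-to-day application peak, Gbps
storage_peak = 32.0   # hypothetical SOR upload peak, 4x the business peak

separate = links_needed(business_peak) + links_needed(storage_peak)
shared = links_needed(business_peak + storage_peak)
print(links_needed(business_peak), links_needed(storage_peak), separate, shared)
# 1 4 5 5
```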

Conclusion

Delivering on network reliability causes an enterprise’s data to become (more) distributed, introducing advanced challenges like complexity and data gravity for network engineers and operators.

In my next article, we will take a closer look at how these challenges manifest and how to manage them.