What Is Prompt Engineering and Why Is It Important for LLMs?

Technology has changed so much that, for many tasks, we hardly interact with the people we used to rely on heavily. Chatbots are intuitive, smart, and easy to access because they are prompt-based and have their answers ready to serve. Most companies have adopted the chatbot model for their customer service, which has directly reduced the load on their human workforce. For the many companies making this transition, it is a positive and impactful decision. The only thing you should be asking as a business executive is whether you are getting the most out of this intelligent tool for your organization.

Prompt engineering is how you design your chatbot to understand the user better and give smarter, more accurate responses over time. More precisely, prompt engineering is the technique of refining the prompts that a user enters into a generative AI (Artificial Intelligence) service to produce text or graphics.

Prompt engineers are essential in creating effective query structures, so that generative AI models comprehend not only the language but also the specifics and purpose of the question. In turn, a prompt that is clear, comprehensive, and informative improves the quality of AI-generated content, whether it be text, photos, code, or data summaries.

For example, most chatbots use LLMs. Suppose you need a caption for your company’s new LinkedIn post. The chatbot will quickly provide one, but you may be dissatisfied with it because it is generic or does not match your brand tone. You then reply with how it should be modified or refined for a more specific output. Within that conversation, the chatbot keeps this feedback as context and uses it to shape its later responses.

Hacking the Prompt Principles

Prompting is a simple task for the end user, but the refinement a prompt goes through with the developer needs care. There are certain principles the developer needs to follow to get the best out of an LLM-powered application, whether it is a conversational bot or a data retrieval app. Such applications have many uses across industries.

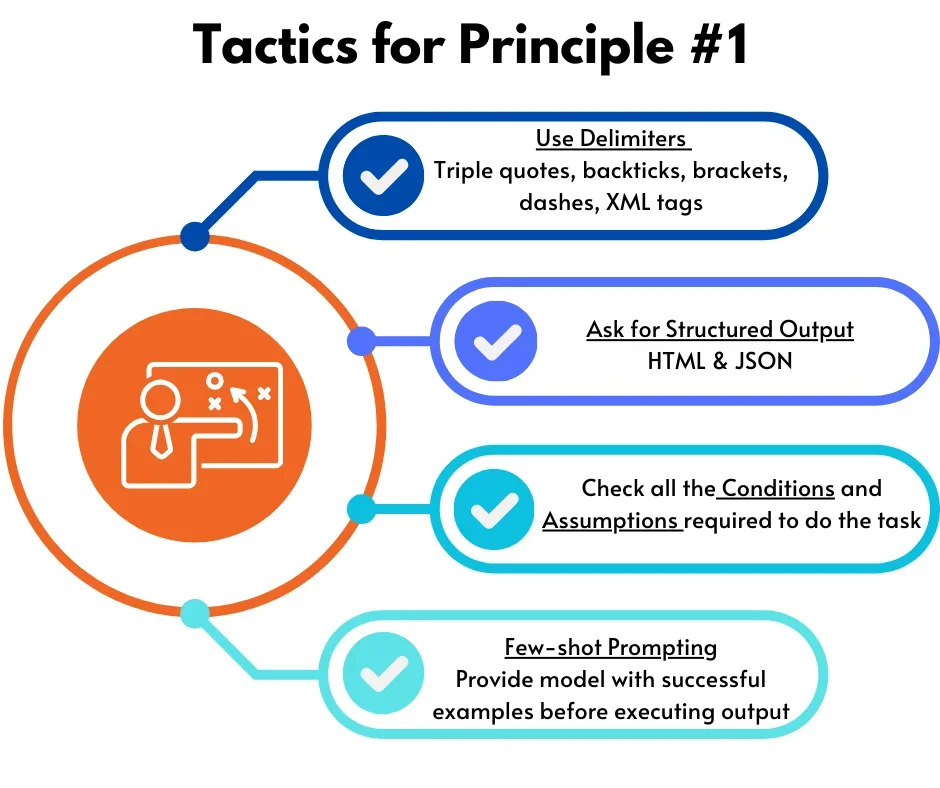

- Principle #1: Write clear and precise instructions.

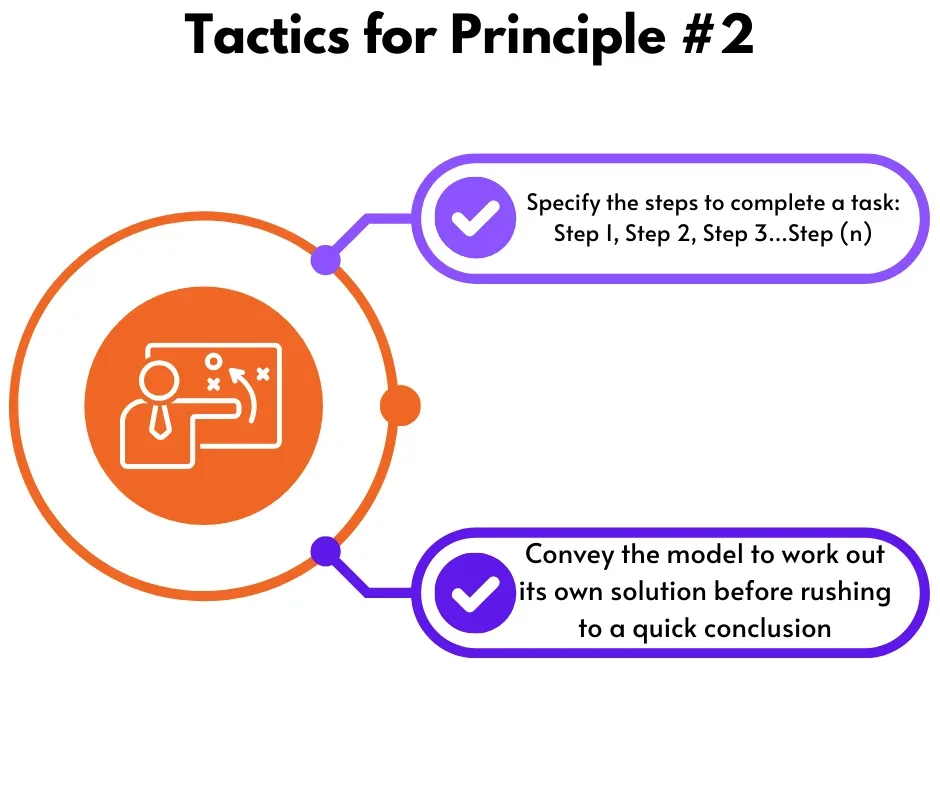

- Principle #2: Give the model enough time to analyze and think for the most accurate output.

Giving Instructions

When writing instructions, keep the following tactics in mind for the best results. Your prompt engineers could be doing everything else right, but if they do not implement these tactics, they are missing a trick.
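One tactic commonly used for clear instructions is to state the task and the output format explicitly and to wrap the user's text in delimiters, so the model can tell instructions apart from input. Here is a minimal sketch of that idea. The helper name `build_instruction_prompt` and the `####` delimiter are assumptions made for illustration, not part of any library.

```python
def build_instruction_prompt(task: str, user_text: str, output_format: str) -> str:
    """Combine a task, an output format, and delimited user text into one prompt."""
    delimiter = "####"  # assumed delimiter; any rarely used marker would do
    # Remove the delimiter from the user text so it cannot close the block early.
    cleaned = user_text.replace(delimiter, "")
    return (
        f"{task}\n"
        f"Respond in this format: {output_format}\n"
        f"The text to work on is enclosed between {delimiter} markers.\n"
        f"{delimiter}\n{cleaned}\n{delimiter}"
    )


prompt = build_instruction_prompt(
    task="Write a LinkedIn caption for the announcement below.",
    user_text="We are launching our new analytics dashboard next week.",
    output_format="one sentence, friendly but professional tone",
)
print(prompt)
```

The resulting string is what you would send to your model of choice. Keeping the prompt construction in one function makes it easier to review and refine the wording later.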

Redefining Swiftness

Yes, LLMs and prompts have made it quick and easy to get responses, but it is easy to forget that the model needs time to process all the code and data before giving you an ideal output. Use the following tactics to make your LLM-powered applications both quick and accurate.
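One way to give the model "time to think" is to spell out the steps it should work through before it commits to an answer. The sketch below assumes two hypothetical helpers, `build_stepwise_prompt` and `extract_final_answer`, and an assumed convention that the model ends its reply with a line starting with "Final answer:".

```python
from typing import List, Optional


def build_stepwise_prompt(question: str, steps: List[str]) -> str:
    """Ask the model to reason through numbered steps before answering."""
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return (
        "Work through the following steps in order before giving a final answer.\n"
        f"{numbered}\n"
        "Write your reasoning for each step, then end with a line that starts "
        "with 'Final answer:'.\n\n"
        f"Question: {question}"
    )


def extract_final_answer(response: str) -> Optional[str]:
    """Return the text after the last 'Final answer:' line, or None if absent."""
    for line in reversed(response.splitlines()):
        if line.strip().lower().startswith("final answer:"):
            return line.split(":", 1)[1].strip()
    return None


print(build_stepwise_prompt(
    "Is the student's solution correct?",
    ["Solve the problem yourself.", "Compare your solution with the student's."],
))
```

Separating the reasoning from the final line lets the application show only the answer to the user while still letting the model reason at length.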

Cardinals of Prompt Engineering and LLMs

Prompt engineering is vital in many aspects of LLM-powered generative AI applications. Let us see how it affects the fundamental uses of LLMs and how it drastically improves the use cases they serve.

Iterative Prompt Development

Prompt development is an iterative process. We continuously give the model prompts and instructions, review what comes back, and refine the prompt, which makes the application smarter and more accurate over time. Wrong outputs, or errors in how your application analyzes the data, can be corrected through this iterative approach.
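The loop described above can be sketched in a few lines. Everything here is an assumption made for illustration: `refine_prompt` is a hypothetical helper, `fake_model` is a hand-written stand-in for a real model call, and `too_long` is one example of a check you might run on the output.

```python
from typing import Callable, Tuple


def refine_prompt(
    base_prompt: str,
    generate: Callable[[str], str],
    find_problem: Callable[[str], str],
    max_rounds: int = 3,
) -> Tuple[str, str, int]:
    """Regenerate until find_problem returns an empty string or rounds run out.

    Returns the final prompt, the final output, and the number of rounds used.
    """
    prompt = base_prompt
    output = ""
    for round_number in range(1, max_rounds + 1):
        output = generate(prompt)
        problem = find_problem(output)
        if not problem:
            return prompt, output, round_number
        # Feed the observed problem back into the prompt as an extra instruction.
        prompt = f"{prompt}\nAdditional instruction: {problem}"
    return prompt, output, max_rounds


# Hypothetical stand-in for a model: it only shortens its answer when the
# prompt explicitly asks for a word limit.
def fake_model(prompt: str) -> str:
    if "at most 5 words" in prompt:
        return "Smarter dashboards, faster decisions."
    return "We are thrilled to announce our brand new analytics dashboard today."


def too_long(output: str) -> str:
    """Return a corrective instruction if the output exceeds five words."""
    return "Use at most 5 words." if len(output.split()) > 5 else ""


final_prompt, final_output, rounds = refine_prompt("Write a caption.", fake_model, too_long)
print(rounds, final_output)
```

In practice the check is often a person reading the output, but automating even one or two checks makes each round of refinement faster.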

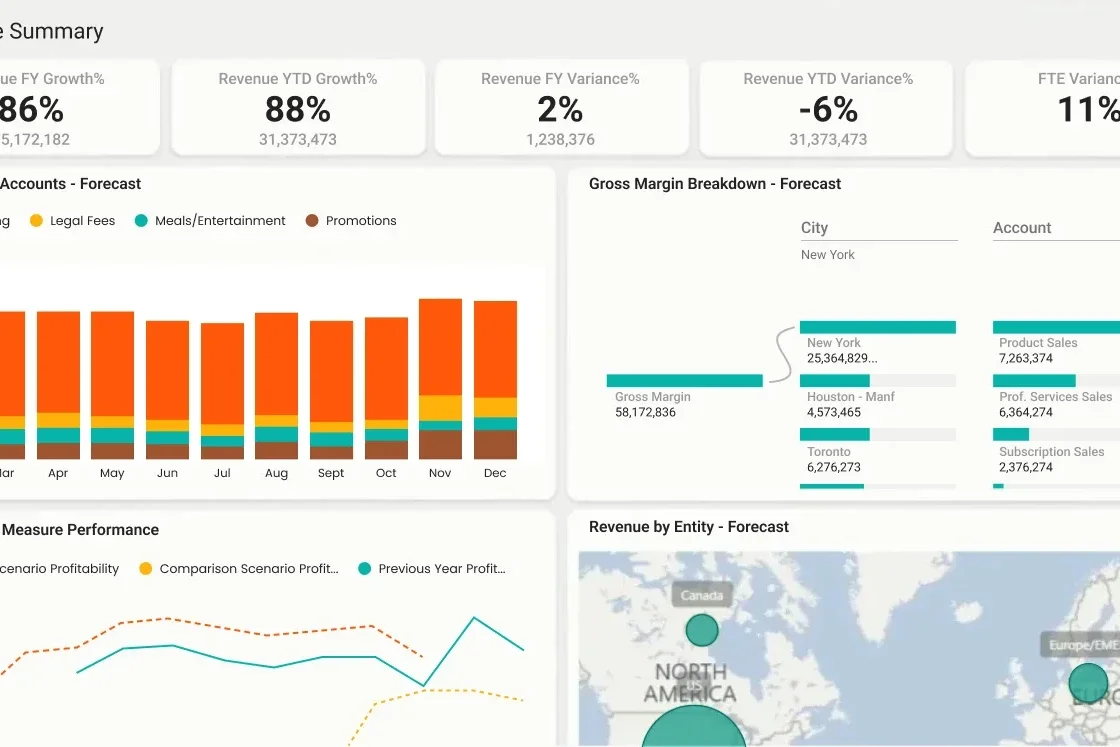

Summarizing Impactfully

One of the most popular uses of this technology is summarizing huge volumes of text into a short form that can be consumed in a small span of time without missing the important information or data in it. Most business executives cannot find the time to read detailed content or updates, so they look for ways to get all the data in the shortest form possible. The same applies to business use, where it helps organizations read and analyze consumer data better.
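A summarization prompt usually benefits from a word limit and, optionally, a focus area. The following is a small sketch under those assumptions. `build_summary_prompt` and `within_limit` are hypothetical helper names, and the triple-quote delimiter is just one possible choice.

```python
def build_summary_prompt(text: str, max_words: int, focus: str = "") -> str:
    """Build a summarization prompt with a word limit and an optional focus."""
    focus_line = f" Focus on {focus}." if focus else ""
    return (
        f"Summarize the text between the triple quotes in at most {max_words} words."
        f"{focus_line}\n"
        f'"""{text}"""'
    )


def within_limit(summary: str, max_words: int) -> bool:
    """Check that a returned summary respects the requested word limit."""
    return len(summary.split()) <= max_words


review = "The lamp arrived two days late, but support was quick to respond and the lamp works well."
print(build_summary_prompt(review, max_words=20, focus="shipping and delivery"))
```

Checking the word count after the fact is worthwhile because a model may overshoot the limit you asked for.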

Inferring Targets

LLMs are trained on large amounts of data. Given the right instructions, they can infer which elements of a text indicate the user's emotions, extract the entities that are important to the organization, and collect important labels.
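Inference tasks are easier to use in an application when the model is asked to reply in a machine-readable form. Below is a minimal sketch that asks for a JSON reply and validates it. The helper names, the `<review>` tags, and the three keys are assumptions, and `sample_reply` is written by hand to show the parsing step, not taken from a real model.

```python
import json

REQUIRED_KEYS = {"sentiment", "emotions", "entities"}


def build_inference_prompt(text: str) -> str:
    """Ask for sentiment, emotions, and entities as a single JSON object."""
    return (
        "Identify the following from the text between the <review> tags:\n"
        "- sentiment: 'positive' or 'negative'\n"
        "- emotions: a list of up to five emotions the writer expresses\n"
        "- entities: a list of products or companies mentioned\n"
        "Reply with a JSON object with the keys sentiment, emotions, entities.\n"
        f"<review>{text}</review>"
    )


def parse_inference(response: str) -> dict:
    """Parse the reply and check that the expected keys are present."""
    data = json.loads(response)
    missing = REQUIRED_KEYS - set(data)
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    return data


# Hypothetical reply, written by hand for illustration.
sample_reply = '{"sentiment": "positive", "emotions": ["delight"], "entities": ["lamp"]}'
print(parse_inference(sample_reply)["sentiment"])
```

Validating the keys before using the result protects the rest of the application from replies that drift away from the requested format.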

Transforming Beyond Boundaries

This ability of an LLM lets you transform your data into different languages and forms. With OpenAI's GPT-3.5 model, translation takes place easily and quickly, and it can even handle multiple translations from the same prompt. Let us see some of the abilities it possesses:

- Formal to Informal language and vice versa.

- Data type conversion (e.g., JSON to HTML)

- Grammar and spelling check (for instance, the original and corrected text can be compared with the changes highlighted, which can be done by importing the ‘redlines’ package in Python)
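To illustrate the comparison step in the grammar and spelling check, here is a rough sketch that highlights what changed between the original text and a corrected version. It uses Python's standard `difflib` module as a stand-in for a dedicated redlining package, and the `[-...-]` and `{+...+}` markers are an assumed notation. The corrected sentence is typed by hand here; in a real application it would come from the model.

```python
import difflib


def mark_changes(original: str, corrected: str) -> str:
    """Return the text with removals as [-word-] and additions as {+word+}."""
    old_words, new_words = original.split(), corrected.split()
    matcher = difflib.SequenceMatcher(a=old_words, b=new_words)
    parts = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            parts.extend(old_words[i1:i2])
            continue
        if tag in ("delete", "replace"):
            parts.append("[-" + " ".join(old_words[i1:i2]) + "-]")
        if tag in ("insert", "replace"):
            parts.append("{+" + " ".join(new_words[j1:j2]) + "+}")
    return " ".join(parts)


print(mark_changes("Their going to the park", "They're going to the park"))
```

A word-level comparison like this keeps the output readable for proofreading, since each marked change corresponds to a whole word rather than individual characters.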

Expanding

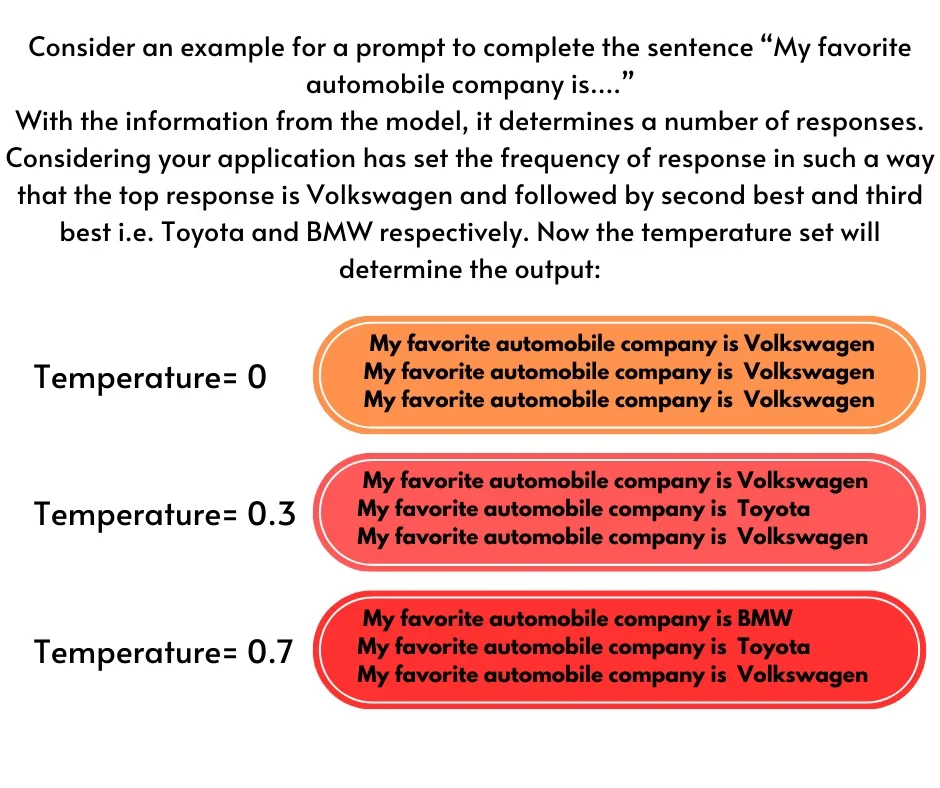

Using a machine to finish a prompt or a sentence is on the rise. Having analyzed a large amount of data, the model has learned the preferences and predictability of language. With ‘expanding’, the user can prompt for machine-generated output that turns brief information into a detailed piece, drawing on what the LLM has learned.

Temperature is an essential setting to keep in mind while you develop prompts. It determines the degree of randomness in the output the model generates.
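To see why temperature changes randomness, it helps to look at how it reshapes the probabilities of candidate next words. The sketch below applies the usual temperature-scaled softmax to made-up scores. The tokens and scores are invented for illustration, and this simplified version requires a temperature above zero.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(tokens, logits, temperature, rng):
    """Pick one token at random according to the temperature-adjusted probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


tokens = ["pizza", "sushi", "tacos"]
logits = [2.0, 1.0, 0.5]  # made-up scores for illustration

for temperature in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, temperature)
    print(temperature, [round(p, 3) for p in probs])

print(sample_token(tokens, logits, 1.0, random.Random(0)))
```

At a low temperature almost all of the probability goes to the top-scoring word, so the output is predictable. At a higher temperature the probabilities even out, so the less likely words are picked more often and the output varies more.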

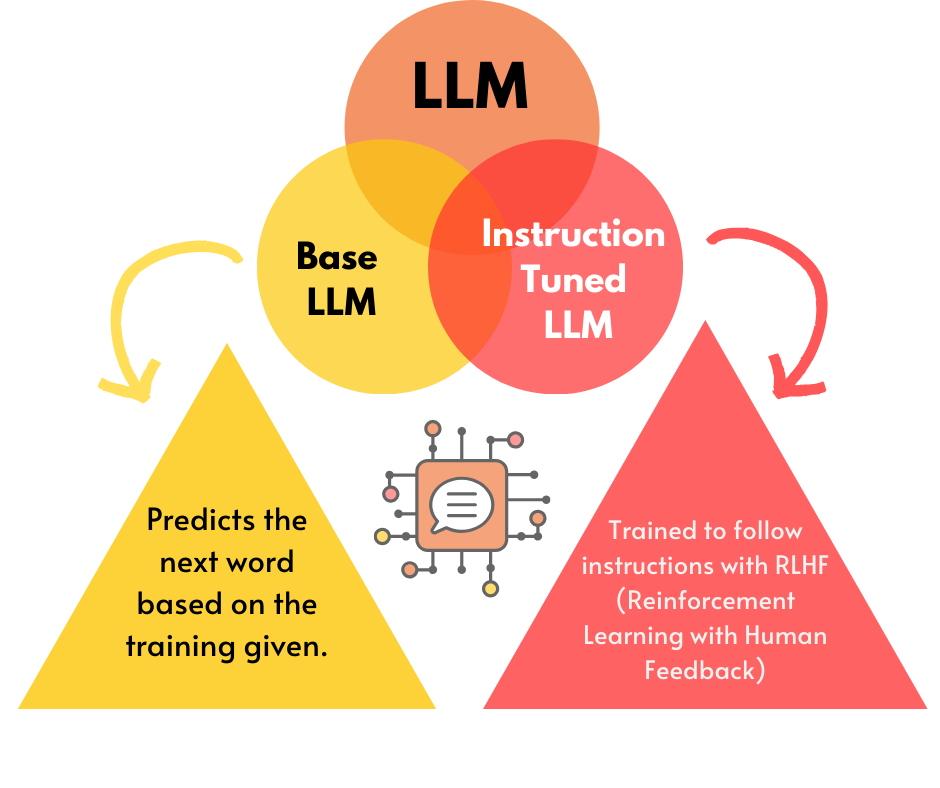

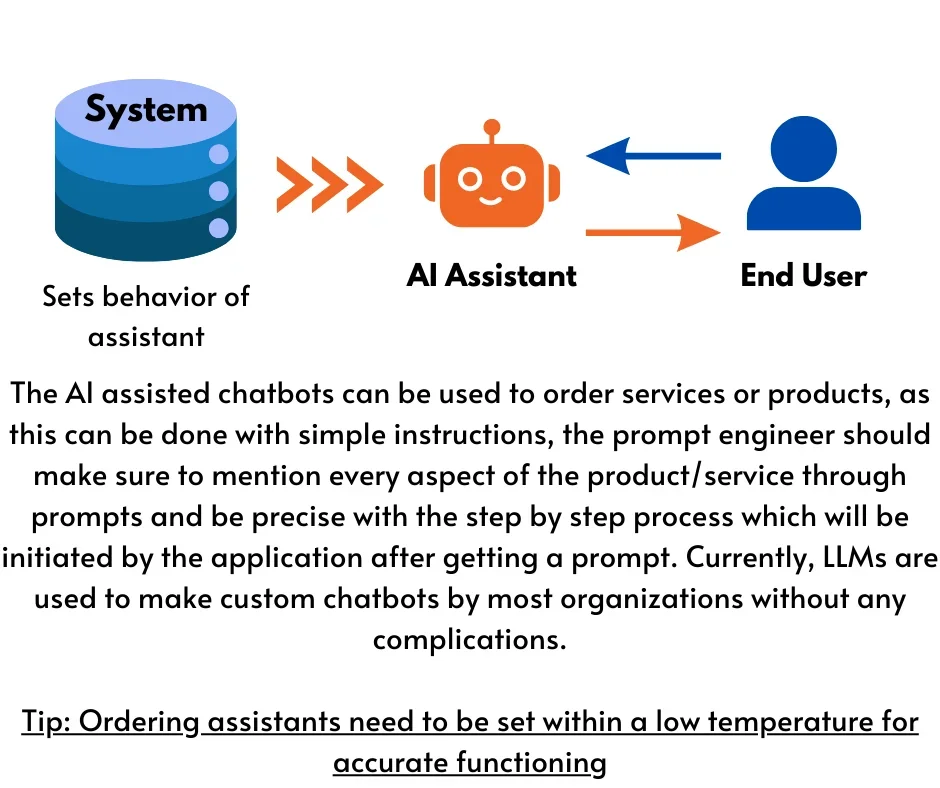

Knowing the inside of a Chatbot

Conclusion

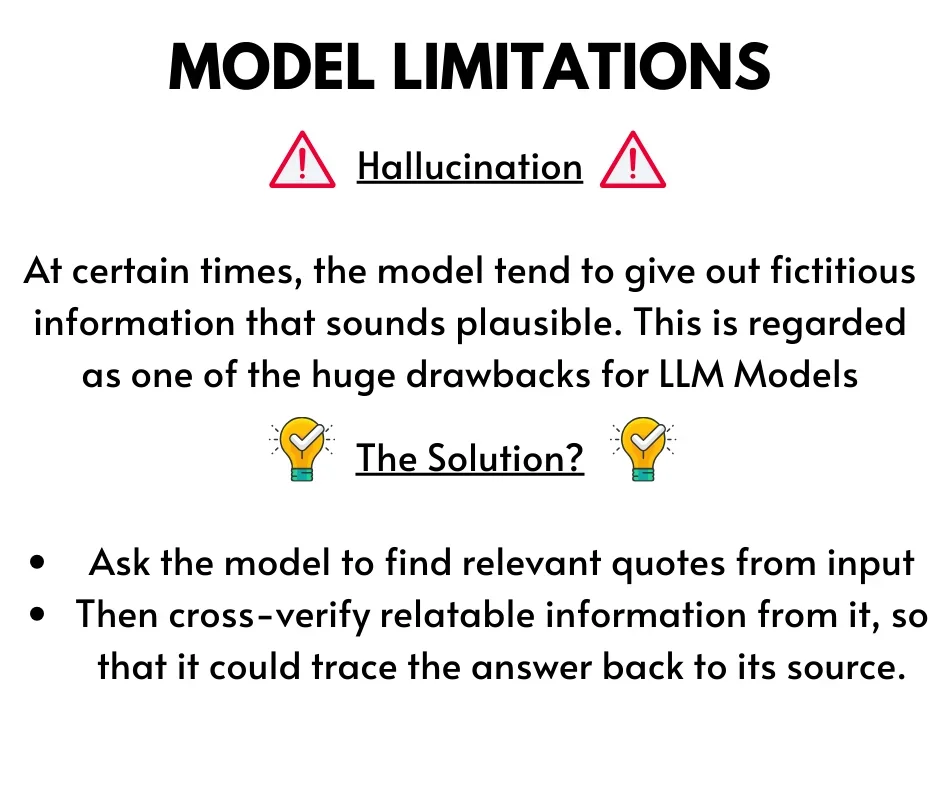

We have now established how important prompt engineering is for any organization going forward. Building an AI application is just the beginning. Using and programming it smartly is essential to understanding the range and limits of Artificial Intelligence. Providers like OpenAI have made it easy for anyone to develop their own chatbot, which will make a drastic impact on the digital world. As a business owner, though, you need to be aware of what matters within these technologies, such as the value of prompt engineering in LLMs and the applications that use them, as discussed here.

Our experts at Sunflower Lab have continuously worked in this area, discovering and refining the entire development process to create more intuitive and useful applications for all industries. Making a difference in your organization through digital services is what we thrive on. Our experts are always there to guide you and even build custom AI/ML applications. Contact our experts today.
