Quick Guide to Building an ETL Pipeline Process

The Crazy Programmer

MAY 21, 2022

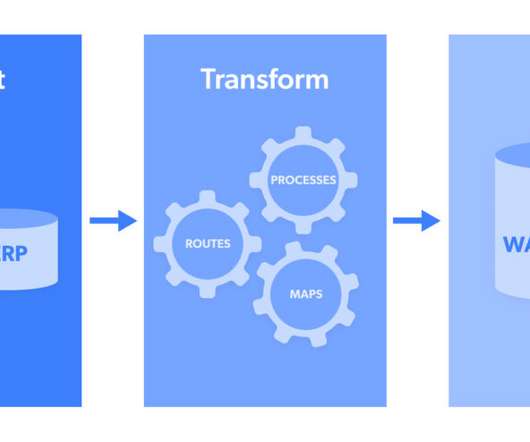

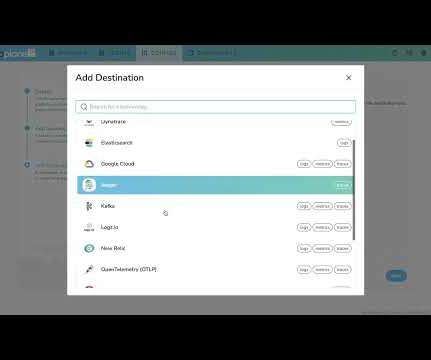

An ETL (Extract, Transform, and Load) pipeline is an automated process for moving data from where it is produced to where it is analyzed. ETL pipelines are designed to streamline data collection from more than one source and to reduce the time spent analyzing data. This article is a quick guide to the steps needed to build an ETL pipeline.
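To make the three stages concrete, here is a minimal sketch in Python using only the standard library. It is an illustration under assumptions, not a production design: the two in-memory CSV strings stand in for real sources, and the `payments` table and the function names `extract`, `transform`, `load`, and `run_pipeline` are hypothetical names chosen for this example.

```python
import csv
import io
import sqlite3

# Hypothetical in-memory sources standing in for real files, APIs, or databases.
SOURCE_A = "name,amount\nalice,10.5\nbob,3\n"
SOURCE_B = "name,amount\nCAROL,7.25\n"


def extract(raw_csv):
    """Extract: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(raw_csv)))


def transform(rows):
    """Transform: normalize name casing and cast amounts to float."""
    return [(row["name"].strip().title(), float(row["amount"])) for row in rows]


def load(conn, records):
    """Load: insert the cleaned records into the target table."""
    conn.execute("CREATE TABLE IF NOT EXISTS payments (name TEXT, amount REAL)")
    conn.executemany("INSERT INTO payments VALUES (?, ?)", records)
    conn.commit()


def run_pipeline(conn, sources):
    """Run extract -> transform -> load once per source."""
    for raw in sources:
        load(conn, transform(extract(raw)))


# An in-memory SQLite database plays the role of the target system.
conn = sqlite3.connect(":memory:")
run_pipeline(conn, [SOURCE_A, SOURCE_B])
rows = conn.execute("SELECT name, amount FROM payments ORDER BY name").fetchall()
print(rows)
```

Keeping each stage in its own function means a source or target can be swapped without touching the other stages, which is the main reason to structure even a small pipeline this way.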
