Data contracts and schema enforcement with dbt

Xebia

AUGUST 15, 2023

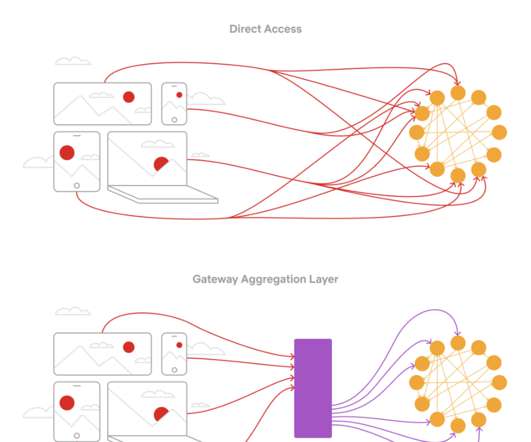

Data contracts provide the tools for ensuring data quality and consistency. Much like an API in software engineering, a data contract is an agreement between data producers and data consumers. In this article, we explore the concept of data contracts and how to implement them effectively with dbt.
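To make the producer/consumer agreement concrete, here is a minimal plain-Python sketch of what enforcing a contract means. It assumes a contract is simply a list of expected columns, each with a data type and a nullability rule. Incoming rows are checked against that list, and any mismatch is reported instead of being passed downstream. This is not dbt's API; dbt declares contracts in YAML. The names `Column`, `CUSTOMERS_CONTRACT`, `find_violations`, `enforce_contract` and `ContractViolation` are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Column:
    """One column promised by the producer (hypothetical structure)."""
    name: str
    data_type: type
    nullable: bool = True


class ContractViolation(Exception):
    """Raised when produced data does not honour the contract."""


# Hypothetical contract for an illustrative "customers" model.
CUSTOMERS_CONTRACT = [
    Column("customer_id", int, nullable=False),
    Column("email", str),
    Column("signup_year", int),
]


def find_violations(rows, contract):
    """Return human-readable violations; an empty list means the rows comply."""
    expected = {c.name for c in contract}
    violations = []
    for i, row in enumerate(rows):
        actual = set(row)
        # Column names must match the contract exactly (order is ignored here).
        missing = expected - actual
        extra = actual - expected
        if missing:
            violations.append(f"row {i}: missing columns {sorted(missing)}")
        if extra:
            violations.append(f"row {i}: unexpected columns {sorted(extra)}")
        for col in contract:
            if col.name not in row:
                continue  # already reported as missing
            value = row[col.name]
            if value is None:
                if not col.nullable:
                    violations.append(f"row {i}: {col.name} must not be null")
            # Exact type match, so e.g. a bool is not accepted as an int.
            elif type(value) is not col.data_type:
                violations.append(
                    f"row {i}: {col.name} expected {col.data_type.__name__}, "
                    f"got {type(value).__name__}"
                )
    return violations


def enforce_contract(rows, contract):
    """Fail loudly, as a producer-side gate, if any violation is found."""
    violations = find_violations(rows, contract)
    if violations:
        raise ContractViolation("; ".join(violations))


if __name__ == "__main__":
    good = [{"customer_id": 1, "email": "a@example.com", "signup_year": 2021}]
    bad = [{"customer_id": None, "email": "b@example.com", "signup_year": "2022"}]
    print(find_violations(good, CUSTOMERS_CONTRACT))  # → []
    print(find_violations(bad, CUSTOMERS_CONTRACT))
    # → ['row 0: customer_id must not be null', 'row 0: signup_year expected int, got str']
```

The check runs on the producer side, before data reaches consumers. That reflects the API analogy: a breaking change to the shape of the data is caught where it is introduced, not discovered later in a downstream dashboard.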
