As site reliability engineering (SRE) becomes more commonplace across IT organizations, what lessons can you learn from Google, one of the originators of SRE? I have recently been helping several organizations understand and adopt SRE practices; as part of this, I spoke to David Ferguson, EMEA lead for customer reliability engineering at Google, to understand how SRE actually works at the firm.

Many organizations face challenges when building reliable services. They often find that just renaming an operations team "SRE" doesn't meaningfully solve their problems. And even if they have staff with SRE skills, they need to create an organizational environment to set them up for success.

Google's SRE guidelines and procedures provide useful insights for other organizations about how they might approach SRE deployment in their own environment. Here are five that your team should pay attention to.

1. A separate SRE team is always optional

One of the most important aspects of SRE at Google is that only some services get SRE involvement. That's correct: SRE is optional. Software development teams cannot assume they will get SRE support for their software.

"Make it a privilege to have SRE involvement, not mandatory," Google's Ferguson said.

Think of it like this: A software engineering team should expect to build and run its software entirely by itself, possibly forever. That is the default position at Google.

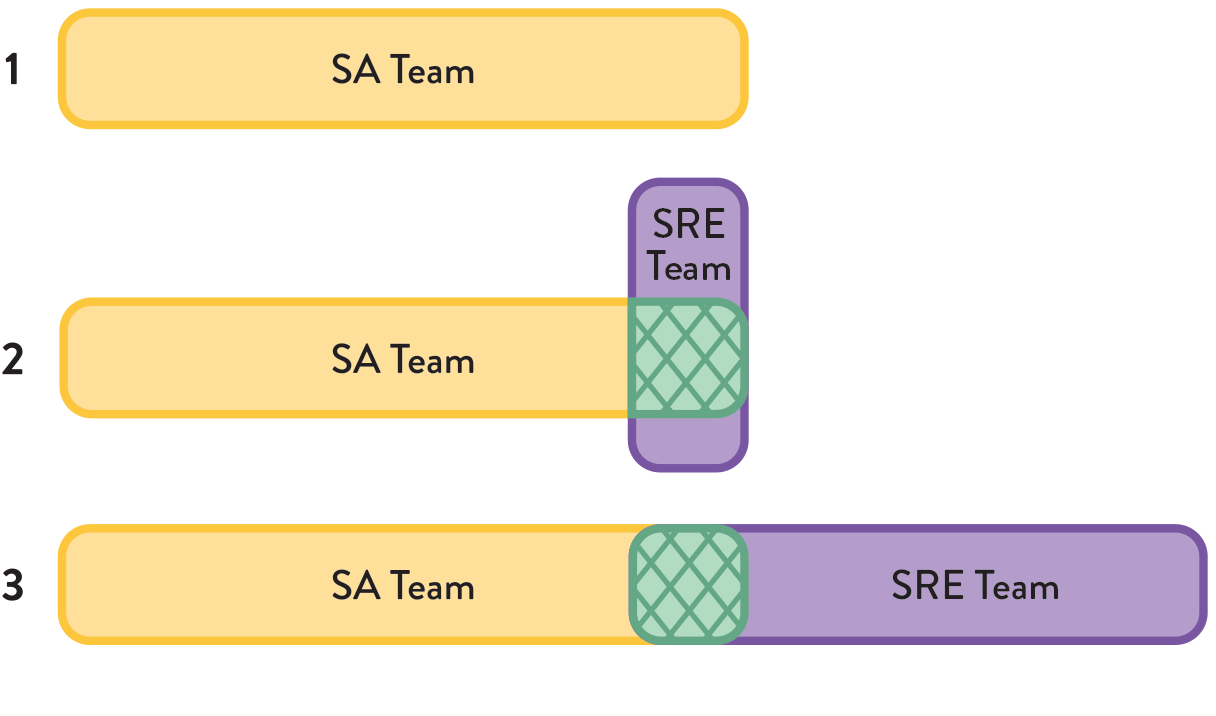

If the service never reaches sufficient scale to merit SRE involvement, then the software engineering team must continue to build and run the software in production. This is Scenario 1 in the accompanying diagram, where "SA" stands for "stream-aligned," the name used for cross-functional software engineering teams in Team Topologies, the book I co-authored with Manuel Pais.

As a software service begins to scale and needs some operational expertise to help improve its resilience, an SRE team might help the stream-aligned software engineering team to understand scaling, reliability, observability, and how to use the platform components that the SRE team manages. This is Scenario 2 in the diagram. However, at this point, the SRE team is not yet running the software in production.

Finally, if the stream-aligned software engineering team can persuade the SRE team that its software is operationally ready, the SRE team might choose to partner with it to run the software in production, ensuring reliability as the software scales. (This is Scenario 3 in the diagram.)

Scenario 1 shows the default position of a stream-aligned (SA), cross-functional software engineering team building and running software by itself. Scenario 2 shows an SRE team helping the stream-aligned software engineering team to understand operability better. Scenario 3 shows the SRE team taking responsibility for running the software in production. Source: Team Topologies, by Matthew Skelton and Manuel Pais.

Software engineering teams must demonstrate a high degree of operability and service readiness before an SRE team will partner with them to help improve the software's reliability. SRE teams are free to turn down a request for help from a software engineering team if they think the operational burden would be too high or if there are no clear opportunities for engineering projects.

Is your SRE team empowered to do that?

2. Software product teams must care about operability from day one

A software engineering team must demonstrate that its software is ready for production by continuously focusing on operability. Only by showing that the software is operationally ready will an SRE team be persuaded to partner with the software engineering team to help with reliability as the software scales.

As part of this focus on operability, it's important to make sure that organizational incentives (including pay and career progression) help to drive the right behaviors.

At Google, "we incentivize software engineers to get things into and used in production" and not just checked in and passing tests, Ferguson said. The company also rewards SREs when they "actually identify and do engineering work on services," he said.

And at Google, software engineers still need to have some contact with production, for example by looking after services in early development and taking on-call shifts during business hours.

With this approach, Google avoids a "hard boundary" between software engineering and SRE teams. Software engineers have incentives to understand production, and SREs have incentives to understand the business context of the software, thus encouraging shared ownership.

3. You can achieve many of the benefits of the SRE approach without a separate SRE team

A key part of the SRE discipline is the use of the error budget to control when to focus on improving reliability and operability. But the error budget model can work without a separate SRE team. It just needs discipline from the software team and product owner to play by the rules of the error budget and stop deploying new features when the service's availability target has been breached for that month.

Google's Ferguson called the error budget mechanism "an appropriate control process for agile teams" that "want to go fast." He described the error budget discipline like this:

- Define what matters to your users.

- Measure it and define the guardrails that you care about.

- Decide what you are going to do when you hit the guardrails.

- When you hit the guardrails, actually do the thing you said you would.

- Be transparent with your data and your actions.

When outlined in this way, it's clear that a single stream-aligned software team can be empowered to enact error budgets without needing a separate SRE team. What you're doing here is keeping a laser-like focus on what matters to the end user and making sure that you know when the end-user experience is starting to degrade.
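The discipline above can be sketched in a few lines of code. This is a minimal illustration, not Google's implementation: the `ErrorBudget` class, its field names, and the `release_decision` function are hypothetical, and the SLO is assumed to be request-based (a target share of "good" requests in the current window).

```python
from dataclasses import dataclass


@dataclass
class ErrorBudget:
    slo_target: float     # e.g. 0.999 means 99.9% of requests should be good
    total_requests: int   # requests seen so far in the SLO window
    bad_requests: int     # requests that failed or were too slow

    def __post_init__(self) -> None:
        # A 100% target leaves no budget at all, so it is rejected here.
        if not 0.0 < self.slo_target < 1.0:
            raise ValueError("slo_target must be between 0 and 1 (exclusive)")

    @property
    def allowed_bad(self) -> float:
        # The guardrail: how many bad requests the SLO tolerates.
        return (1.0 - self.slo_target) * self.total_requests

    @property
    def remaining_fraction(self) -> float:
        # 1.0 means the budget is untouched; 0.0 or below means it is spent.
        if self.total_requests == 0:
            return 1.0  # no traffic yet, so nothing has been spent
        return 1.0 - self.bad_requests / self.allowed_bad


def release_decision(budget: ErrorBudget) -> str:
    # "When you hit the guardrails, actually do the thing you said you would."
    if budget.remaining_fraction <= 0:
        return "freeze features and work on reliability"
    return "ship features"


if __name__ == "__main__":
    healthy = ErrorBudget(slo_target=0.999, total_requests=1_000_000, bad_requests=400)
    breached = ErrorBudget(slo_target=0.999, total_requests=1_000_000, bad_requests=1_500)
    print(release_decision(healthy))
    print(release_decision(breached))
```

The point of the sketch is that the decision rule is agreed in advance and applied mechanically, which a single team and its product owner can do for themselves.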

4. Choose business-relevant service-level objectives

At the heart of the SRE approach is the service-level objective (SLO) for the application or service that is being run by the SRE team. An SLO is a performance or availability target for that service, a degree of performance or availability that meets business expectations at an acceptable cost.

Conformance to SLOs is measured through the use of a service-level indicator (SLI), or perhaps several SLIs. An SLI is a single quantitative measure of some aspect of the behavior or performance of a service or system. SLIs are generally closely tied to characteristics that users of the service care about: response time (for web applications), durability (for data persistence), error rate, or perhaps the availability of a multi-step flow.

Synthetic transaction monitoring is good to have in place because the monitoring is driven from external locations, generating a similar experience to that seen by end users. However, teams at Google often go beyond synthetic monitoring, as Ferguson explains. "We are actually scoring each and every interaction with the service."
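As a rough illustration of what scoring every interaction might look like, the sketch below classifies each request as good or bad and reports the share of good requests as the SLI. The `Request` type, the 300 ms latency threshold, and the rule that only 5xx responses count as failures are assumptions made for this example, not details from Google.

```python
from typing import Iterable, NamedTuple


class Request(NamedTuple):
    status: int         # HTTP-style status code
    latency_ms: float   # time taken to respond


def is_good(req: Request, latency_threshold_ms: float = 300.0) -> bool:
    # Good means: no server-side failure, and fast enough for the user.
    return req.status < 500 and req.latency_ms <= latency_threshold_ms


def sli(requests: Iterable[Request], latency_threshold_ms: float = 300.0) -> float:
    # The SLI is the fraction of real requests that were good.
    reqs = list(requests)
    if not reqs:
        raise ValueError("no requests to score")
    good = sum(is_good(r, latency_threshold_ms) for r in reqs)
    return good / len(reqs)


if __name__ == "__main__":
    sample = [
        Request(200, 120.0),  # good
        Request(200, 450.0),  # bad: too slow
        Request(503, 80.0),   # bad: server error
        Request(404, 90.0),   # good: a client error, served quickly
    ]
    print(sli(sample))
```

Comparing this value with the SLO target over the measurement window shows whether the service is meeting its objective for real traffic, not just for synthetic probes.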

Synthetic monitoring is useful because it represents an expected load on the system, but it rarely covers the full breadth of interactions that matter. This is particularly true with horizontally scaled components, where the synthetics may tend to probe only one instance of each clustered service.

This focus on the ways in which users experience the running services and applications is a key aspect of the SRE approach. Instead of simply monitoring uptime (whether a process is running or a webpage is present), SLOs driven by SLIs encourage attention to the quality of the interaction experienced by the user. Ultimately, quality is one of the most important criteria for successful software.

5. Reliability is about more than just avoiding downtime

If a service is down, it's fairly straightforward to understand the impact on users: They cannot use the system at all. However, end users can also be affected by services performing slowly or intermittently, or in unusual ways at certain times. These more nuanced aspects of reliability are crucial to measure as part of SRE.

"Our approach isn't just about hard downtime," Ferguson said. An SLO of 99.9% over a month allows up to about 1 in 1,000 requests to fail (or respond too slowly) over that period.

The Google SRE approach factors in the real-time aspects of reliability, such as temporary slowness during garbage collection or at peak times. It is also weighted toward the busy hour: if 10% of your traffic arrives during the busy hour, an outage at that time burns error budget much faster than the same outage in a quiet period.
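To make the arithmetic concrete, here is a small worked sketch. It assumes a 30-day window, the same traffic every day, and requests spread evenly within each hour. The function names and the traffic shares other than the 10% busy-hour figure are invented for illustration.

```python
MINUTES_PER_DAY = 24 * 60


def full_outage_budget_minutes(slo_target: float, window_days: int = 30) -> float:
    # Minutes of total outage the SLO tolerates if traffic were perfectly even.
    return (1 - slo_target) * window_days * MINUTES_PER_DAY


def budget_burned(hour_traffic_share: float, outage_minutes: float,
                  slo_target: float, window_days: int = 30) -> float:
    """Fraction of the window's error budget consumed by one full outage.

    hour_traffic_share is the share of a day's requests arriving in the hour
    when the outage happens (0.10 for the busy hour in the text). The outage
    is assumed to fit inside that single hour.
    """
    # Share of all requests in the window that the outage caused to fail.
    lost_share = hour_traffic_share * (outage_minutes / 60) / window_days
    # The budget is the share of requests the SLO allows to be bad.
    return lost_share / (1 - slo_target)


if __name__ == "__main__":
    slo = 0.999
    print(round(full_outage_budget_minutes(slo), 1))
    scenarios = [("busy hour", 0.10), ("average hour", 1 / 24), ("quiet hour", 0.01)]
    for label, share in scenarios:
        print(label, round(budget_burned(share, 30, slo), 2))
```

Under these assumptions, a 99.9% SLO tolerates roughly 43 minutes of evenly weighted outage in 30 days. A 30-minute outage in an average hour uses about 0.69 of the budget, while the same outage in a busy hour carrying 10% of daily traffic uses about 1.67 of it, more than the whole month's allowance.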

It also makes it clearer that everyone has a part to play. When you focus just on outages, it tends to look like operations' fault, and we can convince ourselves that it can't/won't happen again. When you look at request-by-request performance, you'll quickly see that you never really have 100%, and you don't want to spend the time or money getting to 100%.

Modern large-scale software needs high-quality metrics on performance—covering request/response time, latency, throughput, variability, outliers, data persistence, and more. These things all contribute to the reliability of the software as seen by end users, so we need to measure and understand all these dimensions.
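One reason variability and outliers need their own measurements is that averages hide them. The sketch below uses Python's standard `statistics` module on made-up latency figures; the data and the choice of the 99th percentile are assumptions for illustration only.

```python
import statistics


def latency_summary(latencies_ms: list[float]) -> dict[str, float]:
    # quantiles(n=100) returns 99 cut points; index 98 is the 99th percentile.
    percentiles = statistics.quantiles(latencies_ms, n=100)
    return {
        "mean": statistics.mean(latencies_ms),
        "median": statistics.median(latencies_ms),
        "p99": percentiles[98],
    }


if __name__ == "__main__":
    # 98 fast requests and 2 very slow ones (illustrative numbers).
    latencies = [100.0] * 98 + [2000.0, 3000.0]
    summary = latency_summary(latencies)
    for name, value in summary.items():
        print(name, round(value, 1))
```

With this data the mean and median both look healthy, while the 99th percentile exposes the slow requests that a small share of users actually experienced.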

Ferguson said, "Done right, [with SRE] we are just helping the organization keep to the promises/decisions it made about how good it wanted its products to be."

Leverage the underlying dynamics of SRE, not just the name

The SRE approach can clearly be a key part of success with large-scale cloud software. However, simply adding a separate SRE team misunderstands how Google implements the concept. In fact, SRE teams are optional at Google, and software engineering teams must work hard to persuade SRE teams that their software has good operability.

"If you don't care about your reliability, they shouldn't have to, either," Ferguson said.

So, keep SRE teams as a privilege for the most deserving services, define your error budget and use it as a control mechanism for software teams, keep a relentless focus on the operability of the software you're building, and choose SLIs and SLOs wisely to make sure that you're measuring what users actually care about.

To improve your approaches to SRE and operability, see The Site Reliability Workbook from Google, which includes practical tips on SRE implementation based on work with Google's customers, and the Team Guide to Software Operability from Conflux Books, which contains practical, team-focused techniques for enhancing operability in modern software.
