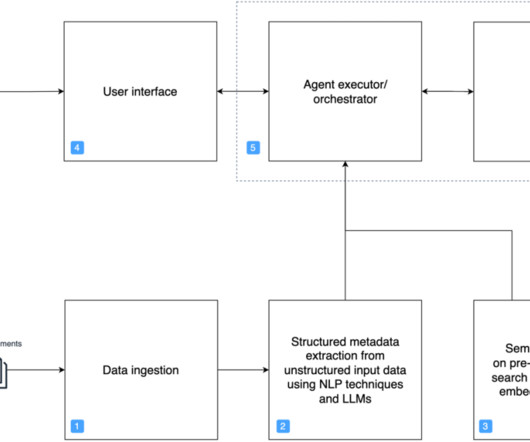

Introducing the LLM Mesh: A Common Backbone for Generative AI Applications

Dataiku

SEPTEMBER 26, 2023

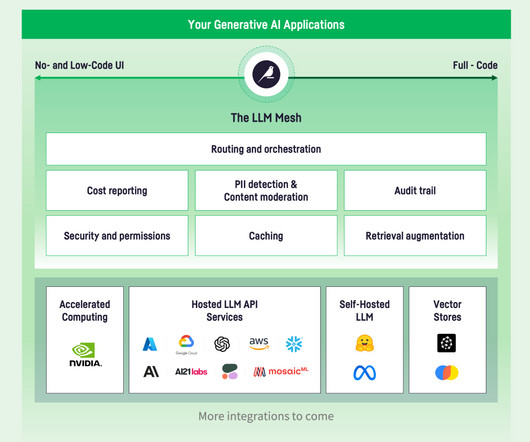

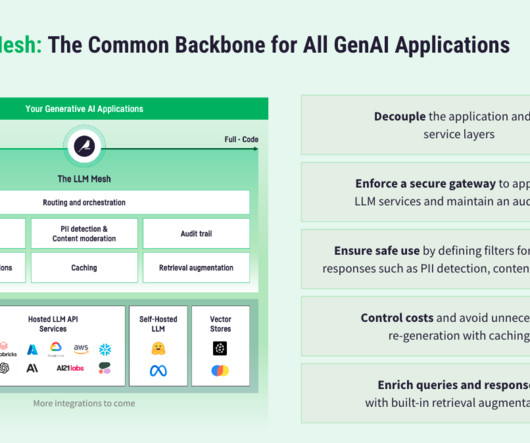

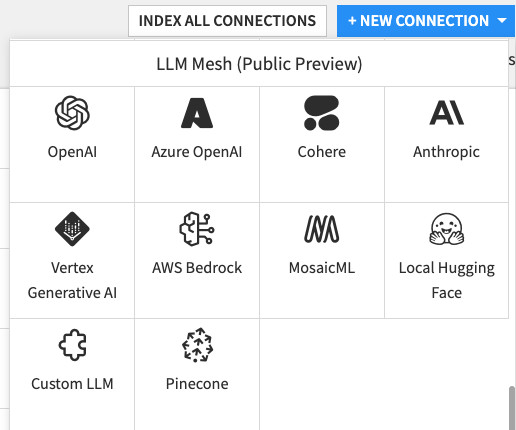

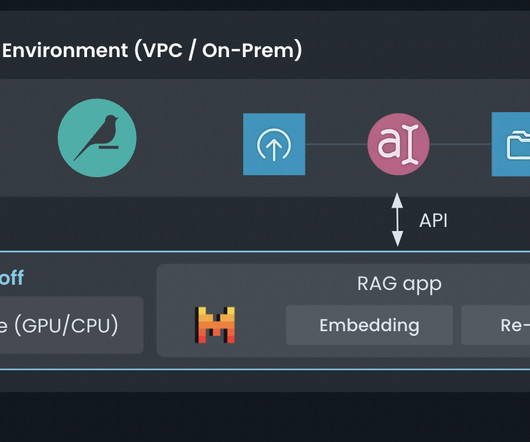

The answer is yes, via the LLM Mesh — a common backbone for Generative AI applications that promises to reshape how analytics and IT teams securely access Generative AI models and services.
