Artificial intelligence promises to help humans carry out everyday tasks faster and to solve problems that have been too big for humans to tackle. But ironically, building that AI can take a long time because of the data crunching needed to train the models.

That’s given rise to a wave of startups aiming to speed up that process.

In the latest development, V7 Labs, which has built tech to automate annotation and other categorization of the data needed to train AI models, has raised $33 million in funding after seeing strong demand for its services.

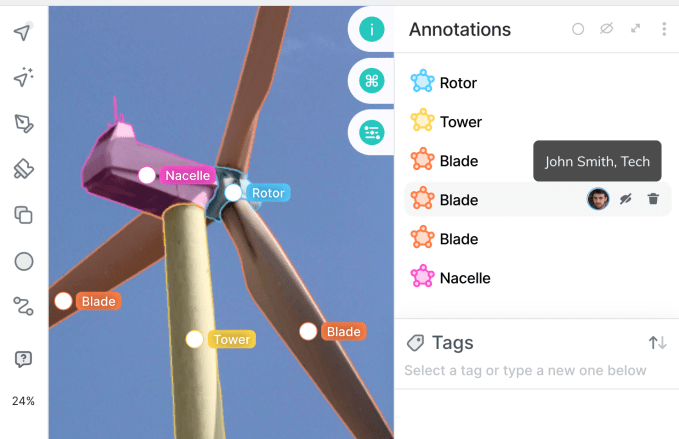

V7’s focus today is on computer vision and automatically identifying and categorizing objects and other data to speed up how AI models are trained. V7 says it needs just 100 human-annotated examples to learn what it needs to do.

It currently has strong traction in medicine and science, where its platform is being used to help train AI models to identify, for example, cancers and other issues on scans. V7 is also starting to see activity from tech and tech-forward companies looking to apply AI in a wide variety of other areas, including companies building engines that create images from natural language commands, as well as industrial applications. It’s not disclosing a full list of customers and those evaluating its tech, but the list numbers more than 300 clients and includes GE Healthcare, Paige AI and Siemens, alongside other Fortune 500 companies and larger privately held businesses.

Radical Ventures and Temasek are co-leading this round, with Air Street Capital, Amadeus Capital Partners and Partech (three previous backers) also participating, along with a number of individuals prominent in the world of machine learning and AI.

They include Francois Chollet (the creator of Keras, the open source Python neural network library), Oriol Vinyals (a principal research scientist at DeepMind), Jose Valim (creator of the Elixir programming language), Ashish Vaswani (a co-founder of Adept AI who was previously at Google Brain, where he co-invented the Transformer architecture) and unnamed others from OpenAI, Twitter and Amazon.

CEO Alberto Rizzoli said in an interview that this is the largest Series A funding round in this category to date, and that it will be used both to hire more engineers and to build out the company’s business operations to take on a new wave of customer interest, with an emphasis on the U.S.

He declined to comment on valuation, but the startup has now raised around $36 million, and from what I understand the valuation is now around $200 million.

Rizzoli also declined to talk about revenue figures, but said that ARR grew three-fold in 2022.

A number of other startups have emerged to make preparing AI training data more efficient and to address the wider area of AI modeling.

SuperAnnotate, which has raised about $18 million per PitchBook, is one of V7’s closer rivals. (One sign of that rivalry: V7 lays out how the two services compare on its site, and SuperAnnotate has been in touch to argue that the comparison is not accurate.)

Others include Scale AI, which initially focused on the automotive sector but has since branched into a number of other areas and is now valued at around $7 billion; Labelbox, which works with companies including Google on AI labeling; and Hive, which is now valued at around $2 billion.

V7’s name refers to AI as the “seventh” area for processing images, after the six areas of the human brain (V1 through V6) that form its visual cortex. Like these other companies, it is building services to solve a specific challenge: the way training models are built and fed data is inefficient and can be improved.

V7’s unique selling point is automation. It estimates that around 80% of an engineering team’s time is spent managing training data (labeling, spotting when something is incorrectly labeled, rethinking categorizations and so on), so it has built a model to automate that process.

It calls its approach “programmatic labeling”: using general-purpose AI and its own algorithms to segment and label images. Rizzoli, who co-founded the company with its CTO Simon Edwardsson, says it takes just 100 “human-guided” examples for the automated labeling to kick into action.
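V7 has not said how its system decides which automatic labels to trust. A common way to pair automated labeling with human review is a confidence threshold: labels the model is sure about are accepted, and the rest go to a person. The sketch below is a generic illustration of that pattern, not V7’s implementation; the `Prediction` class, the `route_predictions` function, the scan IDs and the 0.9 threshold are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    """One automatic label for one image (hypothetical structure)."""
    image_id: str
    label: str
    confidence: float  # model's score in [0, 1]


def route_predictions(predictions, threshold=0.9):
    """Split predictions into auto-accepted labels and a human review queue.

    The 0.9 default is an arbitrary, illustrative cutoff.
    """
    accepted, review_queue = [], []
    for p in predictions:
        if p.confidence >= threshold:
            accepted.append(p)       # confident enough to keep as-is
        else:
            review_queue.append(p)   # unclear: send to a human annotator
    return accepted, review_queue


preds = [
    Prediction("scan_001", "pneumonia", 0.97),
    Prediction("scan_002", "normal", 0.62),
    Prediction("scan_003", "normal", 0.91),
]
accepted, review = route_predictions(preds)
print([p.image_id for p in accepted])  # ['scan_001', 'scan_003']
print([p.image_id for p in review])    # ['scan_002']
```

In practice, the labels people correct in the review queue can be fed back as new training examples, which is what lets a small seed set of human-labeled images grow over time.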

Investors are betting that shortening the time between AI models being devised and applied will drive more business for the company.

“Computer vision is being deployed at scale across industries, delivering innovation and breakthroughs, and a fast growing $50 billion market. Our thesis for V7 is that the breadth of applications, and the speed at which new products are expected to be launched in the market, call for a centralised platform that connects AI models, code, and humans in a looped ecosystem,” said Pierre Socha, a partner at Amadeus Capital Partners, in a statement.

V7 describes the process as “autopilot,” but co-pilot might be more accurate: the idea is that anything flagged as unclear is routed back to humans to evaluate and review. It doesn’t so much replace those humans as make it easier for them to get through their workloads. (It can also outperform humans at times, so using the two in tandem lets each double-check the other’s work.) Below is an example of the image training at work on a scan to detect pneumonia.

Considering the many areas where AI is being applied to improve how images are processed and used, Rizzoli said the decision to focus on medicine initially was partly to keep the startup’s feet on the ground, and partly to target a market that might never have built this kind of technology in-house, but would definitely want to use it.

“We decided to focus on verticals that are already commercializing AI-based applications, or where a lot of work on visual processing is being done, but by humans,” he said. “We didn’t want to be tied to moonshots or projects that are being run out of big R&D budgets because that means someone is looking to fully solve the problem themselves, and they are doing something more specialized, and they may want to have their own technology, not that of a third party like us.”

And in addition to companies’ search for “their own secret sauce,” some projects might never see the light of day outside of the lab, Rizzoli added. “We are instead working for actual applications,” he said.

More broadly, the startup reflects a shift in how enterprises source and use data. Investors think that the framework V7 is building speaks to how enterprises will ingest data in the future.

“V7 is well-positioned to become the industry-standard for managing data in modern AI workflows,” said Parasvil Patel, a partner with Radical Ventures, in a statement. Patel is joining V7’s board with this round.

“The number of problems that are now solvable with AI is vast and growing quickly. As businesses of all sizes race to capture these opportunities, they need best-in-class data and model infrastructure to deliver outstanding products that continuously improve and adapt to real-world needs,” added Nathan Benaich of Air Street Capital, in a statement. “This is where V7’s AI Data Engine shines. No matter the sector or application, customers rely on V7 to ship robust AI-first products faster than ever before. V7 packages the industry’s rapidly evolving best practices into multiplayer workflows from data to model to product.”
