Keeping up with an industry as fast-moving as AI is a tall order. So until an AI can do it for you, here’s a handy roundup of the last week’s stories in the world of machine learning, along with notable research and experiments we didn’t cover on their own.

YouTube has begun experimenting with AI-generated summaries for videos on the watch and search pages, though only for a limited number of English-language videos and viewers.

Certainly, the summaries could be useful for discovery — and accessibility. Not every video creator can be bothered to write a description. But I worry about the potential for mistakes and biases embedded by the AI.

Even the best AI models today tend to “hallucinate.” OpenAI freely admits that its latest text-generating-and-summarizing model, GPT-4, makes major errors in reasoning and invents “facts.” Patrick Hymel, an entrepreneur in the health tech industry, wrote about the ways in which GPT-4 makes up references, facts and figures without any identifiable link to real sources. And Fast Company tested ChatGPT’s ability to summarize articles, finding it . . . quite bad.

One can imagine AI-generated video summaries going off the deep end, given the added challenge of analyzing the content contained within the videos. It’s tough to evaluate the quality of YouTube’s AI-generated summaries. But it’s well established that AI isn’t all that great at summarizing text content.

YouTube subtly acknowledges that AI-generated descriptions are no substitute for the real thing. On the support page, it writes: “While we hope these summaries are helpful and give you a quick overview of what a video is about, they do not replace video descriptions (which are written by creators!).”

Here’s hoping the platform doesn’t roll out the feature too hastily. But considering Google’s half-baked AI product launches lately (see its attempt at a ChatGPT rival, Bard), I’m not too confident.

Here are some other AI stories of note from the past few days:

Dario Amodei is coming to Disrupt: We’ll be interviewing the Anthropic co-founder about what it’s like to have so much money. And AI stuff too.

Google Search gains new AI features: Google is adding contextual images and videos to its Search Generative Experience (SGE), the generative AI-powered search feature announced at May’s I/O conference. With the updates, SGE now shows images or videos related to the search query. The company is also reportedly pivoting its Assistant project to a Bard-like generative AI.

Microsoft kills Cortana: Echoing the events of the Halo series of games from which the name was plucked, Cortana has been destroyed. Fortunately this was not a rogue general AI but an also-ran digital assistant whose time had come.

Meta embraces generative AI music: Meta this week announced AudioCraft, a framework to generate what it describes as “high-quality,” “realistic” audio and music from short text descriptions, or prompts.

Google pulls AI Test Kitchen: Google has pulled its AI Test Kitchen app from the Play Store and the App Store to focus solely on the web platform. The company launched the AI Test Kitchen experience last year to let users interact with projects powered by different AI models such as LaMDA 2.

Robots learn from small amounts of data: On the subject of Google, DeepMind, the tech giant’s AI-focused research lab, has developed a system that it claims allows robots to effectively transfer concepts learned on relatively small datasets to different scenarios.

Kickstarter enacts new rules around generative AI: Kickstarter this week announced that projects on its platform using AI tools to generate content will be required to disclose how the project owner plans to use the AI content in their work. In addition, Kickstarter is mandating that new projects involving the development of AI tech detail info about the sources of training data the project owner intends to use.

China cracks down on generative AI: Multiple generative AI apps have been removed from Apple’s China App Store this week, thanks to new rules that’ll require AI apps operating in China to obtain an administrative license.

Inworld, a generative AI platform for creating NPCs, lands fresh investment

Stable Diffusion releases new model: Stability AI launched Stable Diffusion XL 1.0, a text-to-image model that the company describes as its “most advanced” release to date. Stability claims that the model’s images have “more vibrant” and “accurate” colors, as well as better contrast, shadows and lighting, compared to artwork from its predecessor.

The future of AI is video: Or at least a big part of the generative AI business is, as Haje has it.

AI.com has switched from OpenAI to X.ai: It’s extremely unclear whether it was sold, rented, or is part of some kind of ongoing scheme, but the coveted two-letter domain (likely worth $5 million to $10 million) now points to Elon Musk’s X.ai research outfit rather than the ChatGPT interface.

Other machine learnings

AI is working its way into countless scientific domains, as I have occasion to document here regularly, but you could be forgiven for not being able to list more than a few specific applications offhand. This literature review at Nature is as comprehensive an accounting of areas and methods where AI is taking effect as you’re likely to find anywhere, as well as the advances that have made them possible. Unfortunately it’s paywalled, but you can probably find a way to get a copy.

A deeper dive into the potential for AI to improve the global fight against infectious diseases can be found here at Science, and a few takeaways can be found in UPenn’s summary. One interesting part is that models built to predict drug interactions could also help “unravel intricate interactions between infectious organisms and the host immune system.” Disease pathology can be ridiculously complicated, so epidemiologists and doctors will probably take any help they can get.

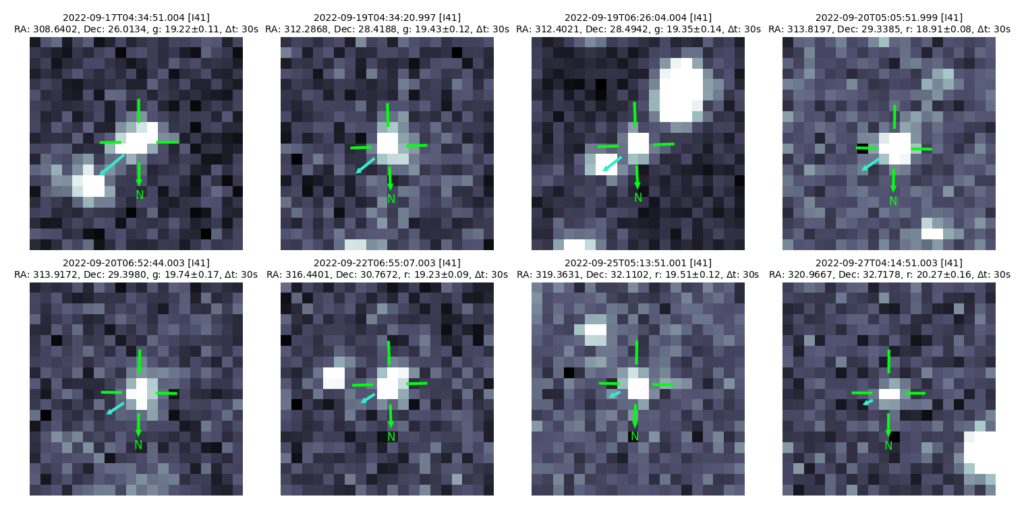

Another interesting example, with the caveat that not every algorithm should be called AI, is this multi-institutional work algorithmically identifying “potentially hazardous” asteroids. Sky surveys generate a ton of data and sorting through it for faint signals like asteroids is tough work that’s highly susceptible to automation. The 600-foot 2022 SF289 was found during a test of the algorithm on ATLAS data. “This is just a small taste of what to expect with the Rubin Observatory in less than two years, when HelioLinc3D will be discovering an object like this every night,” said UW’s Mario Jurić. Can’t wait!

A sort of halo around the AI research world is research being done on AI — how it works and why. Usually these studies are pretty difficult for non-experts to parse, and this one from ETHZ researchers is no exception. But lead author Johannes von Oswald also did an interview explaining some of the concepts in plain English. It’s worth a read if you’re curious about the “learning” process that happens inside models like ChatGPT.

Improving the learning process is also important, and as these Duke researchers find, the answer is not always “more data.” In fact, more data can hinder a machine learning model, said Duke professor Daniel Reker: “It’s like if you trained an algorithm to distinguish pictures of dogs and cats, but you gave it one billion photos of dogs to learn from and only one hundred photos of cats. The algorithm will get so good at identifying dogs that everything will start to look like a dog, and it will forget everything else in the world.” Their approach used an “active learning” technique that identified such weaknesses in the dataset, and proved more effective while using just 1/10 of the data.
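To put rough numbers on Reker’s analogy, here is a minimal Python sketch. It is not the Duke team’s active learning method; it only shows why a lopsided dataset is misleading. The function name and the “always guess dog” classifier are hypothetical, and the counts are the ones from the quote.

```python
def majority_baseline_metrics(n_majority: int, n_minority: int) -> dict:
    """Score a hypothetical classifier that always predicts the majority class.

    Illustration only: this is not the method from the Duke study.
    """
    total = n_majority + n_minority
    # Every majority-class example is "correct" by default.
    accuracy = n_majority / total
    # The classifier never predicts the minority class, so it finds none of them.
    minority_recall = 0 / n_minority
    return {"accuracy": accuracy, "minority_recall": minority_recall}


# Counts from the analogy: one billion dog photos, one hundred cat photos.
metrics = majority_baseline_metrics(n_majority=1_000_000_000, n_minority=100)
print(f"accuracy:   {metrics['accuracy']:.7%}")
print(f"cat recall: {metrics['minority_recall']:.0%}")
```

A model that calls everything a dog scores near-perfect accuracy here while never recognizing a single cat, which is why piling on more of the same data does not help.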

A University College London study found that people were only able to discern real from synthetic speech 73% of the time, in both English and Mandarin. We’ll likely all get better at this, but in the near term the tech will probably outstrip our ability to detect it. Stay frosty out there.
