This post by Thomas Munro about recovery prefetching in PostgreSQL 15 was originally published on the Microsoft Tech Community Blog.

Reducing replication lag with IO concurrency in Postgres 15

PostgreSQL 15 improves crash recovery and physical replication performance of some large and very busy databases by trying to minimise I/O stalls. A standby server might now have an easier time keeping up with the primary.

How? The change in PostgreSQL 15 is that recovery now uses the maintenance_io_concurrency setting (default is 10, but you can increase it) to decide how many concurrent I/Os to try to initiate, rather than doing random read I/Os one at a time. With big and busy databases, when I/O concurrency increases, replication lag can be reduced.

In this blog post, you'll learn how recovery prefetching minimises I/O stalls and reduces replication lag in PostgreSQL 15—as well as how this work fits into a larger set of proposals to move PostgreSQL from traditional UNIX style I/O to efficient modern I/O APIs in the future. Let's walk through:

- Recovery prefetching in PostgreSQL 15

- How WAL is used for recovery

- Recovery can stall on I/O

- The goal: ideal I/O in recovery

- A bit of history about synchronous I/O

- PostgreSQL 15: Adding more concurrency, but not yet truly asynchronous

- The future: true asynchronous I/O

- We've only scratched the surface

- Acknowledgements and thanks

Trying out recovery prefetching in PostgreSQL 15

Let's start with a quick example of the best case improvement possible with recovery prefetching.

You can see a perfect demonstration of this new recovery prefetching feature by running pgbench at a scale that doesn't fit in RAM. Why? Because the random data blocks involved are unlikely to be in PostgreSQL's buffer pool or in the kernel's page cache.

Effects on real world data will range from dramatic acceleration as seen in this test through to no change at all if they are already well cached in memory. The main beneficiaries of recovery prefetching will be:

- very large busy databases with a working set that exceeds memory, and

- systems running with full_page_writes set to off (usually not recommended).

In later releases, we aim to build on this infrastructure to improve even well-cached workloads.

Here is the test configuration I used:

- install PostgreSQL 15

- set up a scale 1000 pgbench TPC-B test with 32 clients on a fairly basic developer system

- run a streaming replica, with maintenance_io_concurrency set to 0 to disable recovery prefetching, that is, to behave like PostgreSQL 14

- set full_page_writes to off, to make the effect visible immediately (otherwise the speed-up potential would vary over time in a saw-tooth pattern due to checkpoints)

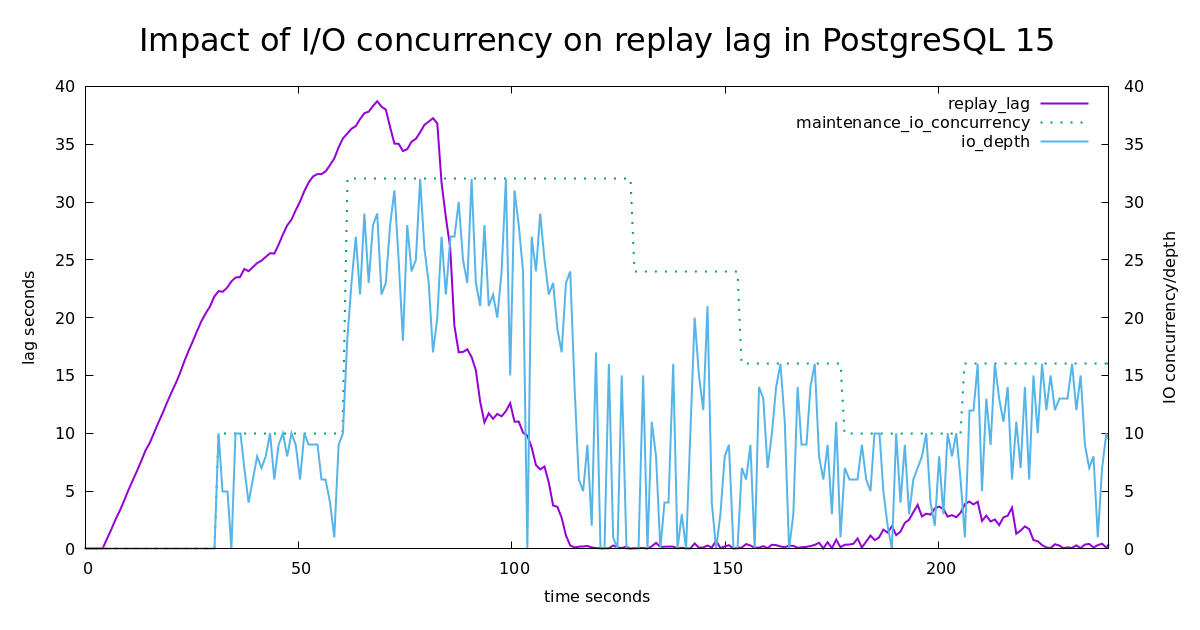

On this particular system, the replication lag (shown in purple in the chart) begins to climb because the replica can't keep up with all the random I/O required to replay the WAL. (Replication lag, or replay lag, is a measure of how far behind the primary server the replica has fallen, in seconds; close to zero and stable is good.)

The graph shows the moment that maintenance_io_concurrency, shown as a green dotted line, steps up to 10 (which is the default value), because I ran:

ALTER SYSTEM SET maintenance_io_concurrency = 10;

SELECT pg_reload_conf();

This caused the rate of increase in replication lag to slow down, as a result of the concurrent I/O requests shown in blue. It's still not quite enough though, so I tried turning up maintenance_io_concurrency to 32. That brought the lag back down to zero. Then I tried a few lower values: 24, and then 16, and then 10 again. At 10 you see the replication lag begin to creep up again. 10 is not quite enough to replay this contrived test workload at full speed, but 16 is.

If trying this experiment at home, note also that the current advice-based scheme is only known to work on Linux with ext4 and xfs (it might also work on other systems, and a future release of zfs is expected to work). It doesn't yet work on other operating systems. In contrast, the true asynchronous I/O proposal being worked on for the next generation will work everywhere, via various kernel APIs.

What problem were we trying to solve?

Background: How write-ahead logging (WAL) is used for recovery

PostgreSQL, like many other database systems, records changes in a write-ahead log or WAL. Changes to the permanent relation files that hold tables and indexes are eventually written to disk in the background, but users only have to wait for the log to be written out to disk when committing transactions.

This arrangement gives good performance, because the user only has to wait for sequential writes, and allows for reliable recovery. The WAL will be replayed in the following scenarios:

- after a crash, when all changes since the last checkpoint are replayed

- continuously, on physical replicas

The work of replaying the WAL in both of those scenarios is referred to as recovery. (The WAL is also used for logical replication and other purposes, but those uses are not affected by the changes discussed here.)
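To make the idea concrete, here is a toy model of write-ahead logging and crash recovery. It is only a sketch: the class, its fields, and the use of a list index as a log position are all invented for illustration and bear no relation to PostgreSQL's real data structures.

```python
# Toy model of write-ahead logging; not PostgreSQL's actual data structures.
from dataclasses import dataclass, field


@dataclass
class ToyDatabase:
    wal: list = field(default_factory=list)          # durable, append-only log
    disk_pages: dict = field(default_factory=dict)   # durable data files
    buffer_pool: dict = field(default_factory=dict)  # volatile, lost in a crash
    checkpoint_lsn: int = 0                          # log position of last checkpoint

    def update(self, page, value):
        # Log the change first, then modify the page only in memory.
        self.wal.append((page, value))
        self.buffer_pool[page] = value

    def checkpoint(self):
        # Flush pages to the data files and remember how far the log got.
        self.disk_pages.update(self.buffer_pool)
        self.checkpoint_lsn = len(self.wal)

    def crash(self):
        # Memory contents vanish; the log and the data files survive.
        self.buffer_pool = {}

    def recover(self):
        # Replay every record logged since the last checkpoint, in order.
        self.buffer_pool = dict(self.disk_pages)
        for page, value in self.wal[self.checkpoint_lsn:]:
            self.buffer_pool[page] = value


db = ToyDatabase()
db.update("page-1", "a")
db.checkpoint()
db.update("page-1", "b")   # logged, but never flushed to the data files
db.update("page-2", "c")
db.crash()
db.recover()
print(db.buffer_pool)
```

After the simulated crash, the data files still hold the old contents of page-1, and replaying the log from the checkpoint onwards brings the in-memory state back up to date. A physical replica does essentially the same replay, except that it never stops.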

Recovery can stall on I/O

The WAL consists of very simple instructions that do not require much CPU to replay—much less than they took to generate originally—so the fact that they are replayed sequentially is very far from the top of the list of problems to be solved, at this point.

I/O stalls, on the other hand, can be a serious problem. The instructions in the WAL represent the work of potentially many concurrent sessions that are working with:

- potentially well cached data—or with

- many I/O stalls happening concurrently (our 32 pgbench clients)

Whereas in recovery, we replay the same changes sequentially. Our test clients were running streams of queries of the form:

postgres=# update pgbench_accounts set bid = $1 where aid = $2;

UPDATE 1

PostgreSQL logs the following WAL records (according to pg_waldump, output heavily abbreviated to fit here):

Heap lsn: 0/50DC2A80, HOT_UPDATE; blkref #0: rel 1663/5/16396 blk 163977

Transaction lsn: 0/50DC2AC8, COMMIT 2022-09-29 16:45:20.175426 NZDT

We can see that the 8KB block 163977 of relation 1663/5/16396 needs to be modified. If that particular block is not already in PostgreSQL's buffer pool (aka, if that block is not already in memory), then, during recovery, it will have to be loaded with a pread() system call. If 32 clients are connected and concurrently running similar queries, there may be a large number of blocks to be modified one after another.
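As a rough illustration of what that block reference means for the read that follows, the sketch below parses the abbreviated pg_waldump line shown above and works out which segment file and byte offset the block would live at. It assumes a default build (8KB blocks and relation files split into 1GB segments); the function name and the returned dictionary are hypothetical, and the regular expression only targets the abbreviated format used in this post.

```python
import re

BLOCK_SIZE = 8192            # 8KB blocks, as mentioned above
BLOCKS_PER_SEGMENT = 131072  # assumption: default 1GB segment files


def locate_block(waldump_line):
    """Parse 'rel <tablespace>/<database>/<relfilenode> blk <n>' from a line."""
    m = re.search(r"rel (\d+)/(\d+)/(\d+) blk (\d+)", waldump_line)
    if m is None:
        raise ValueError("no block reference found")
    tablespace, database, relfilenode, block = map(int, m.groups())
    # Work out which segment file holds the block, and where inside it.
    segment, block_in_segment = divmod(block, BLOCKS_PER_SEGMENT)
    return {
        "tablespace": tablespace,
        "database": database,
        "relfilenode": relfilenode,
        "block": block,
        "segment": segment,
        "offset": block_in_segment * BLOCK_SIZE,
    }


line = "Heap lsn: 0/50DC2A80, HOT_UPDATE; blkref #0: rel 1663/5/16396 blk 163977"
ref = locate_block(line)
print(ref)
```

Under those assumptions, block 163977 falls in the second segment file of the relation, so replaying this one record can mean an 8KB random read somewhere in the middle of a 1GB file.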

The problems with this were:

- recovery could go to sleep while data to be modified is retrieved from disk

- we might also need to transfer blocks from disk for future records too, but we would have to wait for them one at a time

Clearly we could do better! Which is what we embarked on doing in PostgreSQL 15...

Towards the solution, step by step

The Goal: Ideal I/O in recovery

When we begin to replay a WAL record, ideally we would already have every data page we'll need in the buffer pool, so that we just need to adjust the relevant bytes without going off CPU (i.e. sleeping while we wait for a disk) or having to copy data from kernel space to our user space buffer. In order to have any chance of achieving that, we need some form of asynchronous I/O with just the right amount of lead time and concurrency, or as close as we can get within the constraints of our hardware. We'd also like to have asynchronous buffer replacement to write out dirty data in time to recycle buffer memory fast enough so we don't have to wait for that. That's a lot of separate and interrelated problems to solve.
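One rough way to reason about "the right amount of concurrency" is Little's law: the average number of requests in flight equals throughput multiplied by latency. The sketch below applies it with made-up numbers; the read rate and latency are hypothetical figures chosen for round arithmetic, not measurements from the experiment above.

```python
def required_concurrency(block_reads_per_second, read_latency_us):
    """Little's law: average requests in flight = throughput x latency."""
    return block_reads_per_second * read_latency_us / 1_000_000


# Hypothetical figures, for illustration only.
reads_per_second = 20_000   # uncached block reads needed to keep up
latency_us = 500            # microseconds per random read

# One read at a time can never exceed 1 / latency reads per second.
serial_limit = 1_000_000 / latency_us
in_flight = required_concurrency(reads_per_second, latency_us)

print("serial limit (reads/s):", serial_limit)
print("I/Os needed in flight:", in_flight)
```

With these invented numbers, reading one block at a time tops out well below the required rate, and keeping up would take roughly ten reads in flight at once. Real devices and workloads are far less tidy, which is why the setting is worth tuning by experiment.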

When I first started working on IO concurrency in PostgreSQL 15, we were rather far from this ideal. To understand why, you need to know a bit of operating system history.

Historical background on synchronous I/O

One of the choices made by the designers of UNIX half a century ago was to hide the asynchronous nature of I/O with buffering and scheduling. Other operating systems of the time—notably Multics and VAX/VMS—optionally exposed the asynchronous nature of device I/O to user space programs, but UNIX always put your process to sleep until data was available, and you couldn't escape from that model. The best you could hope was that it was already in the kernel's cache, due to recent usage or simple read-ahead heuristics. It was a good choice for the vast majority of simple applications, but not for busy databases.

One of my favourite Stack Overflow questions asks "How should we interpret Dave Cutler's criticism of Unix?". Much like that amusing anecdote, PostgreSQL's read I/O is basically get-a-block, get-a-block, get-a-ran-dom-block. For synchronous I/O to have reasonable performance, we still rely on the same buffering and kernel heuristics that were present in ancient UNIX, plus a small amount of hinting/advice where possible.

PostgreSQL 15: Adding more concurrency, but not yet truly asynchronous

While sockets and pipes have a nonblocking mode that can be used with readiness based APIs like poll(), that doesn't work for multiplexing file I/O. The best we can do while sticking with traditional synchronous read calls is to tell the kernel that we'll soon be reading a certain region of a file, using posix_fadvise(POSIX_FADV_WILLNEED). The kernel can begin fetching the data from storage if necessary.
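For readers who have not used this interface, here is a minimal sketch of the hint-then-read pattern using Python's wrappers around the same system calls. The helper name and the throwaway test file are invented for the example, and it illustrates the system calls only, not PostgreSQL's code.

```python
import os
import tempfile

BLOCK_SIZE = 8192


def hint_then_read(fd, block_number):
    """Advise the kernel about a block, then read it synchronously."""
    offset = block_number * BLOCK_SIZE
    # posix_fadvise() is not available on every platform, so guard the call.
    if hasattr(os, "posix_fadvise"):
        os.posix_fadvise(fd, offset, BLOCK_SIZE, os.POSIX_FADV_WILLNEED)
    # A real program would do other useful work here while the kernel
    # (hopefully) fetches the data in the background.
    return os.pread(fd, BLOCK_SIZE, offset)


# Demonstration on a throwaway file of four blocks.
with tempfile.NamedTemporaryFile() as f:
    for i in range(4):
        f.write(bytes([i]) * BLOCK_SIZE)
    f.flush()
    data = hint_then_read(f.fileno(), 2)

print(len(data), data[0])
```

Note that the hint returns nothing useful to the caller: there is no handle to wait on and no way to ask whether the data has arrived. The only way to find out is to call pread() and see how long it takes.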

There are many drawbacks to posix_fadvise() that make it inferior to true asynchronous I/O:

- not all operating systems have it (Linux does)

- not all operating systems do anything useful with the hints (Linux does)

- not all file systems do anything useful with hints (Linux xfs and ext4 do)

- we don't know if the I/O is already in the kernel's buffers or an I/O is started

- we don't know when any I/O completes

- when we later call pread(), we have to copy the data to a user space buffer synchronously

- all of this concerns only reads

Despite the above problems, PostgreSQL 15 manages to improve recovery performance in some situations with this kernel interface, as demonstrated with the above experiment. The basic idea is to assume that every posix_fadvise() call initiates an I/O, and the corresponding pread() call completes it, and then try to keep N I/Os in flight at a time. For maintenance_io_concurrency = 3, that produces a sequence of system calls that overlap like so:

posix_fadvise(1) ╮

posix_fadvise(2) │ ╮

posix_fadvise(3) │ │ ╮

pread(1) ╯ │ │

posix_fadvise(4) ╮ │ │

pread(2) │ ╯ │

posix_fadvise(5) │ ╮ │

pread(3) │ │ ╯

posix_fadvise(6) │ │ ╮

pread(4) ╯ │ │

posix_fadvise(7) ╮ │ │

pread(5) │ ╯ │

posix_fadvise(8) │ ╮ │

pread(6) │ │ ╯

posix_fadvise(9) │ │ ╮

pread(7) ╯ │ │

posix_fadvise(10) ╮ │ │

pread(8) │ ╯ │

posix_fadvise(11) │ ╮ │

pread(9) │ │ ╯

posix_fadvise(12) │ │ ╮

pread(10) ╯ │ │

pread(11) ╯ │

pread(12) ╯

In the best case, namely random reads of a data set that exceeds the size of RAM, this technique can achieve the targeted level of I/O concurrency (or average queue depth according to tools like iostat). In the worst case, we generate extra system calls for data the kernel already had in its cache, or was already able to predict with simple sequential heuristics.
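The ordering in the diagram above can be reproduced with a few lines of bookkeeping. The sketch below only generates the names of the calls, in order, for a given number of blocks and concurrency target; it performs no I/O, and the function is a hypothetical illustration of the scheduling idea rather than PostgreSQL's implementation.

```python
from collections import deque


def call_sequence(n_blocks, concurrency):
    """Return the order of calls that keeps `concurrency` hints in flight."""
    calls = []
    in_flight = deque()
    next_block = 1
    while next_block <= n_blocks or in_flight:
        # Issue hints until the target number of I/Os is assumed in flight.
        while next_block <= n_blocks and len(in_flight) < concurrency:
            calls.append(f"posix_fadvise({next_block})")
            in_flight.append(next_block)
            next_block += 1
        if in_flight:
            # Complete the oldest outstanding I/O with a synchronous read.
            block = in_flight.popleft()
        else:
            # concurrency == 0: no hints are issued, just read the next block.
            block = next_block
            next_block += 1
        calls.append(f"pread({block})")
    return calls


for call in call_sequence(12, 3):
    print(call)
```

With a concurrency of 0 the same function degenerates to a plain run of pread() calls, which mirrors how setting maintenance_io_concurrency to 0 was used earlier to disable prefetching.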

The longer-term motivation for building infrastructure around such poor kernel APIs is to provide a stepping stone to true asynchronous I/O.

The future: True asynchronous I/O

True asynchronous I/O is based not on readiness or hints, but instead on completion events.

Several completion-based APIs were introduced in the 1990s, but at least the POSIX APIs are not widely used. Perhaps that is because they are difficult to use, were incompletely implemented by popular systems, and are limited in scope. In recent years Linux's io_uring has caused a new wave of interest in completion-based I/O by providing an elegant, efficient, and generalised interface for communicating with the kernel through submission and completion queues. An ongoing project exists to add support for io_uring and other asynchronous I/O APIs to PostgreSQL, or push I/O operations into background workers as a portable fallback option.

In the completion-based phase 2 of recovery prefetching—which currently exists in prototype form and depends on a large queue of other patches—data pages won't just be preloaded into kernel caches. Rather, they'll be asynchronously transferred all the way into PostgreSQL's buffers, and optionally using direct I/O to skip the kernel's buffers completely.
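To give a feel for the completion-based style, here is a small sketch of the "background workers" flavour of the idea using a thread pool: reads are submitted up front, and the caller is told when each one finishes, with the data already in its own memory. This is an analogy only. It does not use io_uring, the helper name and test file are invented, and it says nothing about how the PostgreSQL prototype is actually structured.

```python
import os
import tempfile
from concurrent.futures import ThreadPoolExecutor, as_completed

BLOCK_SIZE = 8192


def read_blocks(fd, block_numbers, workers=4):
    """Submit reads to worker threads and collect them as they complete."""
    results = {}
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Submission: hand every read to the pool without waiting for any.
        futures = {
            pool.submit(os.pread, fd, BLOCK_SIZE, n * BLOCK_SIZE): n
            for n in block_numbers
        }
        # Completion: each finished future plays the role of a completion event.
        for future in as_completed(futures):
            results[futures[future]] = future.result()
    return results


# Demonstration on a throwaway file of eight blocks.
with tempfile.NamedTemporaryFile() as f:
    for i in range(8):
        f.write(bytes([i]) * BLOCK_SIZE)
    f.flush()
    blocks = read_blocks(f.fileno(), [5, 1, 7])

print(sorted(blocks))
```

Compared with the hint-based scheme, the caller here knows exactly which reads are outstanding and when each one is done, and the data arrives in user space without a second synchronous call.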

We've only scratched the surface in PostgreSQL 15!

We've only scratched the surface of the optimisations possible now that we can see further ahead into the WAL. The lowest hanging fruit is I/O stalls for large random workloads. While not all workloads will benefit from the first phase of functionality released in PostgreSQL 15, or even future completion-based I/O, early experiments show there is potential for speeding up even fully cached recovery workloads by consolidating the management of buffer pins, locks, and potentially re-ordering operations.

To experiment with the effects of recovery prefetching on your own replica servers, try:

- adjusting the maintenance_io_concurrency setting, and

- examining the statistics in the pg_stat_recovery_prefetch view

- it may also be useful to increase the wal_decode_buffer_size setting

Acknowledgements, thanks, and notes on development process

I was originally inspired to work on this problem by Sean Chittenden's talk on pg_prefaulter at PGCon 2018. That's an external tool that showed how much recovery I/O performance we were leaving on the table; I know from other presentations that his team used ZFS and full_page_writes=off on a large scale, presumably making the problem more apparent. Then I began a long process of figuring out all the problems of WAL analysis and synchronisation required to move the logic into PostgreSQL in a tightly integrated way, with a pathway to true asynchronous I/O.

I'm grateful to all who participated in the long proposal thread that led to inclusion of recovery prefetching in PostgreSQL 15, as well as all the other follow-up discussions, exploration of the problem space, reporting of bugs, and testing of performance. Many thanks go to:

- Alvaro Herrera

- Andres Freund*

- Ashutosh Sharma

- Dagfinn Ilmari Mannsåker

- Daniel Gustafsson

- David Steele

- Dmitry Dolgov

- Jakub Wartak

- Julien Rouhaud

- Justin Pryzby

- Kyotaro Horiguchi

- Melanie Plageman*

- Noriyoshi Shinoda

- Sait Talha Nisanci

- Simon Riggs

- Stephen Frost

- Tom Lane

- Tomas Vondra

(*My colleagues on the PostgreSQL team at Microsoft). It was a bumpy ride because I originally tried to get the feature into the PostgreSQL 14 cycle, but I ran into problems, ran out of time, and decided to revert. One of the outcomes of that experience was increased automated testing of recovery.

The main feature commits in PostgreSQL 15 are: