Sand & Honey: Building Honeypots

Mark Manning (@antitree) – Security Architect at Snowflake

Jared Stroud (@DLL_Cool_J) – Cloud Security Researcher

Takeaways

- Honeypot data collection can be achieved via mandatory access control/sandbox technology.

- Attack surface of API endpoints can be collected without building a honeypot per-API.

- The security boundary between the container and the underlying host should be hardened while still capturing activity.

The Need for Honeypots

In the big data, microservice world that constantly pushes new technology to speed up time to market, deployment management, and everything in between, it’s important to ask “what risk does $TECHNOLOGY bring to my organization?”. More often than not, the answer is “it depends”. As threat actors develop new capabilities to use against $TECHNOLOGY, defenders and researchers alike can leverage existing defense-in-depth architecture around $TECHNOLOGY to create new detections, honeypots, and sandboxes.

Lacework reached out to friends at Snowflake to share some security notes about isolating workloads. They’ve been building expertise in this area over the last few years and shared insights on a few ways we could better sandbox our processes for our use case. We demonstrate a method of creating a “honeypot-esque” sandbox from the exact codebase being deployed in production: isolating its access, hardening process execution controls, and effectively converting your application into a sandboxed version of your production code.

API Honeypot Setup – The Docker Registry

The bulk of modern application development deployed in cloud environments relies on some API sitting somewhere, eagerly waiting to respond to a client’s request. Consider how many APIs your current workflow touches when simply deploying your application. What happens if these APIs receive harmful requests? What does a harmful request look like? Let’s explore this concept with an API that most in DevOps have interacted with–the Docker registry.

Consider a simple Docker registry deployed in a cloud environment. A RESTful API runs on a given TCP port to receive requests to store images and deliver images. A container registry is a vital part of any organization’s CI/CD life cycle, and understanding what an attack against this resource looks like is the first step in understanding how to defend against it.

With this in mind, our imaginary business “RegistriesRUs” would like to collect data on how adversaries are abusing these services. To do so, we’d like to stand up container registry honeypots that simply log inbound commands but do not allow any resource to be read. This is where we’ll invest some effort to lock down the service while detecting and defending against attacks.

Limiting API Attack Surface for Collection

The Docker registry API provides a simple interface for pushing and pulling Docker images. Typically, this functionality is achieved via the Docker CLI after an end user has authenticated to a given registry. When pulling an image, the HTTP GET method requests the remote resource. Figure – 1 below shows our simple Docker client (10.10.1.21) and Docker registry server (10.10.1.20) topology.

Figure 1 – Basic Client/Registry Topology

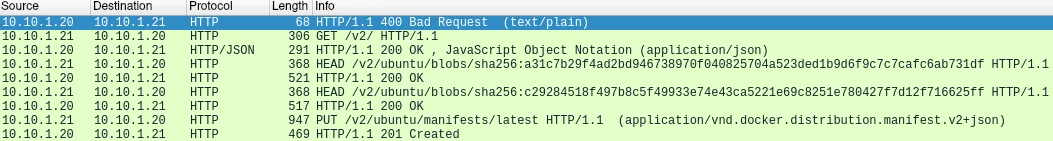

When uploading an image, the Docker client uses a combination of HTTP methods, including HEAD, GET, POST, PATCH and PUT. Figure 2 below shows the standard exchange between a Docker client and a Docker registry server when an image is uploaded.

Figure 2 – PCAP Network Flow

For our threat research scenario let’s assume we want to host a publicly accessible Docker registry that an adversary may push an image to. This could give security researchers insight into tools being used, what source IPs are being used to push infrastructure and the User-Agent (perhaps indicating the tool) involved in the docker push request. After this image is uploaded, we want to ensure that the adversary isn’t using our registry as a staging zone to be leveraged in future attacks, so we must identify a one-way upload path.

When examining how Docker images are requested, we see that if the Docker client cannot execute an HTTP GET, the image cannot be downloaded. To enable this “one-way” data flow at the HTTP protocol level, it’s possible to forbid GET requests (and, optionally, HEAD requests) by placing HTTP method restrictions on specific routes with a reverse proxy.

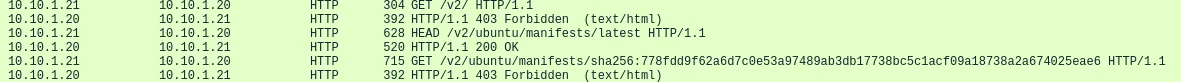
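To reason about which parts of a push or pull survive the proxy, the method rule can be modeled in a few lines of Python. This is a minimal sketch, not the proxy itself: the allowed-method set mirrors the `limit_except` directive in the Nginx configuration shown in Figure 3, and the request paths are illustrative examples following the Docker Registry HTTP API V2 route layout for a hypothetical `library/ubuntu` repository.

```python
# Minimal model of the reverse proxy's one-way rule (a sketch, not Nginx itself).
# Assumption: only paths under /v2/ are proxied, and only these methods pass,
# mirroring `limit_except POST HEAD PUT PATCH { deny all; }`.
ALLOWED_METHODS = {"POST", "HEAD", "PUT", "PATCH"}


def is_forwarded(method, path):
    """Return True if the proxy would pass this request on to the registry."""
    return path.startswith("/v2/") and method in ALLOWED_METHODS


# Illustrative request sequence (hypothetical repository and upload UUID).
requests = [
    ("POST", "/v2/library/ubuntu/blobs/uploads/"),         # push: start a blob upload
    ("PATCH", "/v2/library/ubuntu/blobs/uploads/<uuid>"),  # push: send layer data
    ("PUT", "/v2/library/ubuntu/manifests/latest"),        # push: publish the manifest
    ("HEAD", "/v2/library/ubuntu/manifests/latest"),       # pull: check the manifest
    ("GET", "/v2/library/ubuntu/manifests/latest"),        # pull: fetch the manifest
]

for method, path in requests:
    verdict = "forward" if is_forwarded(method, path) else "deny"
    print(f"{verdict:8} {method:6} {path}")
```

In this model the upload requests all reach the registry, while a pull gets as far as the HEAD check and is refused at the GET, which is the request the honeypot wants to see in its logs.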

By denying HTTP GET but allowing HTTP PUT, uploaded data can rest safely on our honeypot servers without being used to further an attacker’s campaign. As the traffic in Figure 4 shows, the client is denied when it makes the final GET request for the specific Docker image. The preceding HTTP HEAD request checks the manifest file for the latest Ubuntu image hosted in our Docker registry. HEAD could also be denied at the HTTP method level in the same Nginx configuration, but that would abort the pull early in the transaction, and we would never see the HTTP GET request for the specific image. Figure 3 below shows an example Nginx reverse proxy configuration enforcing these HTTP method restrictions.

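```nginx
upstream registry {
    server registry:5000;
}

server {
    # Allow large image layers to be pushed
    client_max_body_size 2G;

    location ~ ^/v2/ {
        # Return 404 to Docker 1.3-1.5 clients and bare "Go " user agents
        if ($http_user_agent ~ "^(docker\/1\.(3|4|5(?!\.[0-9]-dev))|Go ).*$") {
            return 404;
        }

        # One-way flow: POST/HEAD/PUT/PATCH pass, everything else
        # (including GET, i.e. image pulls) is denied with 403
        limit_except POST HEAD PUT PATCH {
            deny all;
        }

        # Preserve the client-facing host so upload Location headers resolve,
        # and pass the client's address through to the registry
        proxy_set_header Host            $http_host;
        proxy_set_header X-Real-IP       $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_pass http://registry;
    }
}
```

Figure 3 – Nginx Reverse Proxy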
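The user-agent check in Figure 3 is easy to misread, so it can help to run the same regular expression against a few sample strings. As far as we can tell, this block appears to come from the Nginx recipe in the Docker registry documentation, where it turns away Docker clients older than 1.6; note that it rejects those clients with a 404 before they ever reach the registry. The sketch below is ours: the user-agent strings are hypothetical, and we rely on the pattern compiling identically under Python's `re` module and Nginx's PCRE.

```python
import re

# Same pattern as the `if ($http_user_agent ~ ...)` test in Figure 3.
# Nginx's `~` is a case-sensitive regex search; the pattern is anchored with ^.
LEGACY_UA = re.compile(r"^(docker\/1\.(3|4|5(?!\.[0-9]-dev))|Go ).*$")


def rejected_with_404(user_agent):
    """Return True if the proxy's user-agent check would answer 404."""
    return LEGACY_UA.search(user_agent) is not None


# Hypothetical User-Agent strings for illustration.
samples = [
    "docker/1.5.0 go/go1.4.1 git-commit/a8a31ef",       # old client: rejected
    "docker/1.5.0-dev",                                 # dev build: passes
    "Go 1.1 package http",                              # bare Go agent: rejected
    "docker/20.10.7 go/go1.13.15 git-commit/b0f5bc3",   # modern client: passes
    "Go-http-client/1.1",                               # no space after "Go": passes
]
for ua in samples:
    print(rejected_with_404(ua), ua)
```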

By observing the follow-on HTTP GET requests for hosted Docker images, we can identify further details of an attacker’s campaign (source IPs, User-Agents, time of day). Figure 4 below shows the requests made when the HEAD method is allowed, so that the corresponding GET request for the specific Docker image is still visible.

Figure 4 – Request Denied
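Those denied GETs can be pulled straight out of the proxy’s access log. This sketch assumes Nginx’s predefined `combined` log format (which Nginx uses when no `log_format` is configured, as in Figure 3) and that denied requests are logged with status 403, which is what `deny all` returns. The log lines are hypothetical and use a documentation IP address.

```python
import re
from collections import Counter

# Nginx's predefined "combined" log format:
# $remote_addr - $remote_user [$time_local] "$request" $status
# $body_bytes_sent "$http_referer" "$http_user_agent"
COMBINED = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def denied_pulls(lines):
    """Yield (ip, time, path, user_agent) for GETs under /v2/ that were refused."""
    for line in lines:
        m = COMBINED.match(line)
        if not m:
            continue  # skip lines in other formats or malformed requests
        if m["method"] == "GET" and m["path"].startswith("/v2/") and m["status"] == "403":
            yield m["ip"], m["time"], m["path"], m["agent"]


# Hypothetical access-log excerpt.
sample_log = [
    '203.0.113.7 - - [23/Aug/2021:08:45:10 +0000] "HEAD /v2/library/ubuntu/manifests/latest HTTP/1.1" 200 0 "-" "docker/20.10.7 go/go1.13.15"',
    '203.0.113.7 - - [23/Aug/2021:08:45:11 +0000] "GET /v2/library/ubuntu/manifests/latest HTTP/1.1" 403 153 "-" "docker/20.10.7 go/go1.13.15"',
]

for hit in denied_pulls(sample_log):
    print(hit)

# Count refused pulls per source IP, e.g. to spot repeat visitors.
print(Counter(hit[0] for hit in denied_pulls(sample_log)))
```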

While blocking protocol-level interaction with a backend application is nothing new, combining these defense-in-depth layers allows for a more flexible “honeypot”-esque design and makes it easier to deploy applications in a way that prioritizes data collection from adversaries and opportunistic attackers (e.g., cryptojacking payloads delivered through an RCE exploit).

By leveraging the actual codebase deployed in a production environment, we can gather more realistic data about the threats to that environment. That said, blocking HTTP methods is not enough! Limiting the underlying functionality of the application itself is critical to further contain it and prevent a breakout or zero-day attack. Kernel features such as seccomp let defenders limit the specific syscalls an application can make, while mandatory access control (MAC) technologies such as AppArmor restrict the files, capabilities, and network access it has.

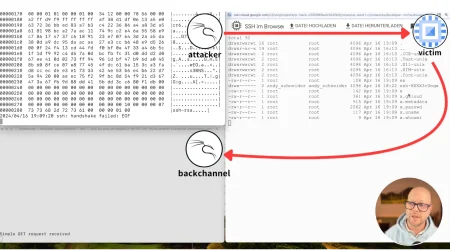

From Container to Sandbox

Whether you consider Docker “secure” can come down to the question of “what are you willing to trust it to be used for?”. Would you trust it to run an application for you on your local machine? Sure. Would you trust it to run someone else’s application on your machine? Probably. Would you trust it to run a malware sample on your personal machine? Most would say no, and/or hell no.

In our honeypot, if you don’t trust the container runtime, you can reinforce the container’s security boundary with a few options:

- Syscall filter allow list

- AppArmor profiles for our application

- A hardened runtime

I’ll quickly explain why syscall filtering is not the best path forward. Docker currently supports an allow list of the syscalls your container can make, but building that list accurately is time-consuming and requires tools like eBPF and strace, and maybe some custom code. The result is a very fragile, albeit secure, isolated program: any legitimate syscall missing from the list will fail at runtime. Unless you are running a very performance-sensitive application, where the overhead of the alternatives below matters, this is not the recommended approach for our honeypot.
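To make that effort concrete, here is a minimal sketch of the first step of such a workflow: collecting syscall names from `strace -f` output and emitting a Docker seccomp profile that allows only those calls. The strace lines are hypothetical, the parser only handles the common line shapes (plain, `[pid N]`-prefixed, PID-prefixed as written by `strace -f -o FILE`, and `<... name resumed>`), and `SCMP_ARCH_X86_64` is an assumed architecture.

```python
import json
import re

# Syscall name at the start of an strace line, with an optional "[pid N]" or
# "N " prefix, or the name inside a "<... name resumed>" continuation line.
SYSCALL_LINE = re.compile(
    r"^(?:\[pid\s+\d+\]\s+|\d+\s+)?"
    r"(?:<\.\.\. (?P<resumed>\w+) resumed>|(?P<name>\w+)\()"
)


def syscalls_from_strace(lines):
    """Collect the distinct syscall names seen in strace output."""
    names = set()
    for line in lines:
        m = SYSCALL_LINE.match(line)
        if m:
            names.add(m.group("name") or m.group("resumed"))
    return names


def seccomp_allowlist(names, arch="SCMP_ARCH_X86_64"):
    """Build a Docker seccomp profile that allows only the given syscalls."""
    return {
        "defaultAction": "SCMP_ACT_ERRNO",  # anything not listed fails with an errno
        "architectures": [arch],
        "syscalls": [{"names": sorted(names), "action": "SCMP_ACT_ALLOW"}],
    }


# Hypothetical strace excerpt from a registry process.
trace = [
    'execve("/bin/registry", ["registry", "serve"], 0x7ffd2b6c0e10 /* 5 vars */) = 0',
    '[pid  13] openat(AT_FDCWD, "/etc/docker/registry/config.yml", O_RDONLY|O_CLOEXEC) = 3',
    '[pid  13] read(3,  <unfinished ...>',
    '[pid  14] epoll_pwait(4, [], 128, 0, NULL, 0) = 0',
    '[pid  13] <... read resumed>"version: 0.1\\n", 512) = 13',
    '+++ exited with 0 +++',
]

profile = seccomp_allowlist(syscalls_from_strace(trace))
print(json.dumps(profile, indent=2))
```

The resulting JSON can be handed to Docker with `--security-opt seccomp=<file>`. In practice, the trace has to exercise every code path the registry will ever take, which is exactly where the fragility comes from.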

Let’s look at our other options.

AppArmor

Since this scenario is focusing on a Docker registry, we will look at how AppArmor can also be implemented for further container runtime hardening. The Docker engine supports the ability to have unique AppArmor profiles per container. Per the AppArmor Wiki:

“AppArmor confines individual programs to a set of files, capabilities, network access and rlimits, collectively known as the AppArmor policy for the program, or simply as a profile.” – A Quick Overview, AppArmor Wiki

For the Docker registry use case, we know the container needs read and write access to the location where the Docker manifest files and image layers are stored (/var/lib/registry). For our Nginx reverse proxy, several existing example profiles can be reused to limit the functionality of that container (restricting access to common binary paths, denying shell access, etc.).

While it’s entirely possible to craft an AppArmor profile by hand, the open source utility Bane by Jessie Frazelle makes this process significantly easier by using a user-friendly TOML file to describe what may be read, written, executed, and so on. The configuration for the Docker registry container is shown below in Figure 5.

```toml
Name = "docker-registry"

[Filesystem]
# read only paths for the container
ReadOnlyPaths = [
    "/bin/**",
    "/boot/**",
    "/dev/**",
    "/etc/**",
    "/home/**",
    "/lib/**",
    "/lib64/**",
    "/media/**",
    "/mnt/**",
    "/opt/**",
    "/proc/**",
    "/root/**",
    "/sbin/**",
    "/srv/**",
    "/tmp/**",
    "/sys/**",
    "/usr/**",
]

# paths where you want to log on write
# log all writes by default
LogOnWritePaths = [
    "/**"
]

# paths where you can write
WritablePaths = [
    "/var/lib/registry"
]

# allowed executable files for the container
AllowExec = [
    "/bin/registry"
]

# denied executable files
DenyExec = [
    "/bin/dash",
    "/bin/bash",
    "/bin/zsh",
    "/bin/sh"
]

# allowed capabilities
[Capabilities]
Allow = [
    "net_bind_service"
]

[Network]
Raw = false
Packet = false
Protocols = [
    "tcp"
]
```

Figure 5 – Bane TOML for Docker Registry

The Bane utility leverages this TOML configuration file to generate the AppArmor profile that can be leveraged by the Docker container at runtime. An important piece within the TOML configuration is configuring the container’s capabilities, as they are a known point of privilege escalation and container escapes.
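Because the policy is just structured data, it can be sanity-checked before Bane turns it into a profile. The following is a minimal sketch that is not part of Bane: it takes the policy as a Python dict shaped like the parsed Figure 5 TOML (on Python 3.11+ the file itself could be loaded with `tomllib`), then flags writable paths that also match a read-only glob, executables that are both allowed and denied, and capabilities from a small, non-exhaustive list we chose as commonly abused for container escapes. `fnmatch` globbing only approximates AppArmor’s glob rules.

```python
from fnmatch import fnmatchcase

# Our own non-exhaustive list of capabilities commonly abused for escapes.
RISKY_CAPS = {"sys_admin", "sys_module", "sys_ptrace", "dac_read_search", "net_admin"}


def lint_policy(policy):
    """Return human-readable problems found in a Bane-style policy dict."""
    problems = []
    fs = policy.get("Filesystem", {})
    # fnmatch's "*" also crosses "/", so this is only an approximation of AppArmor globs.
    for path in fs.get("WritablePaths", []):
        for glob in fs.get("ReadOnlyPaths", []):
            if fnmatchcase(path, glob):
                problems.append(f"{path} is writable but also matches read-only {glob}")
    for exe in sorted(set(fs.get("AllowExec", [])) & set(fs.get("DenyExec", []))):
        problems.append(f"{exe} is both allowed and denied execution")
    for cap in policy.get("Capabilities", {}).get("Allow", []):
        if cap.lower() in RISKY_CAPS:
            problems.append(f"capability {cap} is a common escape vector")
    return problems


# A subset of Figure 5, written as the dict a TOML parser would produce.
registry_policy = {
    "Name": "docker-registry",
    "Filesystem": {
        "ReadOnlyPaths": ["/bin/**", "/etc/**", "/usr/**", "/tmp/**"],
        "WritablePaths": ["/var/lib/registry"],
        "AllowExec": ["/bin/registry"],
        "DenyExec": ["/bin/dash", "/bin/bash", "/bin/zsh", "/bin/sh"],
    },
    "Capabilities": {"Allow": ["net_bind_service"]},
}

print(lint_policy(registry_policy))  # prints []
```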

Hardened Runtime

If you’re exhausted from trying to bolt protections onto our honeypot with AppArmor, SELinux, or seccomp-bpf, we’re left with (IMHO) one good answer: hardened runtimes, meaning alternative container runtimes to the default runc that’s built into Docker. This could be a microVM-based runtime like Firecracker or Kata Containers, or a user-space kernel like gVisor. Because gVisor can be used across any cloud, host, or device, I’m going to use it for this demonstration.

- First install gVisor on the honeypot host.

- Configure Docker to use a dedicated runsc runtime with debug logging, syscall tracing, and packet logging enabled.

```shell
sudo runsc install --runtime runsc-ptrace -- \
    --debug \
    --debug-log=/var/log/runsc-ptrace/ \
    --strace \
    --log-packets \
    --platform=ptrace
# Docker only picks up the new runtime once the daemon is restarted
sudo systemctl restart docker
```

- Test out our registry container:

```shell
docker run --rm --runtime=runsc-ptrace registry:2
```
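As we understand it, `runsc install` registers the runtime in Docker’s daemon configuration file (`/etc/docker/daemon.json` by default) under the `runtimes` key, storing the flags after `--` as `runtimeArgs`. The sketch below reproduces that merge on an in-memory dict so the resulting entry can be inspected without touching the host; the runsc path `/usr/local/bin/runsc` and the pre-existing `log-driver` setting are assumptions for illustration.

```python
import json

RUNSC_PATH = "/usr/local/bin/runsc"  # assumed install location; adjust to your host

# The flags passed after `--` in the runsc install command above.
PTRACE_ARGS = [
    "--debug",
    "--debug-log=/var/log/runsc-ptrace/",
    "--strace",
    "--log-packets",
    "--platform=ptrace",
]


def add_runtime(daemon_config, name, path, args):
    """Return a copy of a daemon.json dict with an extra OCI runtime registered."""
    merged = json.loads(json.dumps(daemon_config))  # deep copy via JSON round-trip
    merged.setdefault("runtimes", {})[name] = {"path": path, "runtimeArgs": list(args)}
    return merged


existing = {"log-driver": "json-file"}  # hypothetical pre-existing settings
print(json.dumps(add_runtime(existing, "runsc-ptrace", RUNSC_PATH, PTRACE_ARGS), indent=2))
```

Docker reads `daemon.json` when the daemon starts, which is why the restart step is needed before `--runtime=runsc-ptrace` is accepted.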

If this worked, you now have a gVisor-based container that logs network activity, syscalls, and debug information for later analysis. In the case of an exploit, the host OS is isolated, and any attempt to break out of the container would be logged. Let’s take a look at an exploit attempt:

```shell
> docker run --name registry -d --rm -p 5000:5000 --runtime=runsc-ptrace registry:2
> nmap -sS -p 5000 127.0.0.1
> docker exec -it registry /bin/sh
> registry > cat /etc/passwd
```

If you review the logs in /var/log/runsc-ptrace, you’ll see a file ending in `.boot`. This file contains the syscalls made by the container and the packets it sent and received. We can confirm this with a quick service scan of our container:

```shell
> nmap -sT -p 5000 127.0.0.1 -sC -sV -T5 &
> tail -f /var/log/runsc-ptrace/*.boot | grep sniffer

I0823 08:44:59.363716 219554 sniffer.go:400] send tcp 172.17.0.2:5000 -> 172.17.0.1:47866 len:0 id:9bfb flags: S A seqnum: 1177466391 ack: 1314908684 win: 29184 xsum:0x65f0 options: {MSS:1460 WS:7 TS:true TSVal:3238849487 TSEcr:2917317093 SACKPermitted:true}
I0823 08:44:59.364203 219554 sniffer.go:400] recv tcp 172.17.0.1:47866 -> 172.17.0.2:5000 len:0 id:040c flags: A seqnum: 1314908684 ack: 1177466392 win: 502 xsum:0x584c options: {TS:true TSVal:2917317094 TSEcr:3238849487 SACKBlocks:[]}
```

In this example we see packets being sent and received on port 5000/TCP, the port of our registry. We can trace this data into the syscalls being made on the host or feed into netflow telemetry to watch the connection in context of other network events.
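Because every packet line follows the same shape, the sniffer output is easy to turn into structured records for correlation with other telemetry. This minimal sketch derives its regular expression from the two log lines shown above, so it may need adjusting for other gVisor versions; the field names in the returned dict are our own.

```python
import re
from collections import Counter

# Packet lines written by gVisor's sniffer into the .boot log, as in the
# excerpt above. Only the fields used here are captured.
SNIFFER = re.compile(
    r"sniffer\.go:\d+\]\s+(?P<dir>send|recv)\s+(?P<proto>\w+)\s+"
    r"(?P<src>\S+):(?P<sport>\d+)\s+->\s+(?P<dst>\S+):(?P<dport>\d+)\s+"
    r"len:(?P<len>\d+)\s.*?flags:\s*(?P<flags>.*?)\s+seqnum:"
)


def parse_sniffer_line(line):
    """Return a dict describing one logged packet, or None for other log lines."""
    m = SNIFFER.search(line)
    if not m:
        return None
    return {
        "direction": m["dir"],
        "proto": m["proto"],
        "src": (m["src"], int(m["sport"])),
        "dst": (m["dst"], int(m["dport"])),
        "length": int(m["len"]),
        "flags": m["flags"].split(),
    }


log_lines = [
    "I0823 08:44:59.363716 219554 sniffer.go:400] send tcp 172.17.0.2:5000 -> 172.17.0.1:47866 len:0 id:9bfb flags: S A seqnum: 1177466391 ack: 1314908684 win: 29184 xsum:0x65f0 options: {MSS:1460 WS:7 TS:true TSVal:3238849487 TSEcr:2917317093 SACKPermitted:true}",
    "I0823 08:44:59.364203 219554 sniffer.go:400] recv tcp 172.17.0.1:47866 -> 172.17.0.2:5000 len:0 id:040c flags: A seqnum: 1314908684 ack: 1177466392 win: 502 xsum:0x584c options: {TS:true TSVal:2917317094 TSEcr:3238849487 SACKBlocks:[]}",
]

packets = [p for p in map(parse_sniffer_line, log_lines) if p]
# Which peers sent packets into the sandboxed registry?
peers = Counter(p["src"][0] for p in packets if p["direction"] == "recv")
print(peers)
```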

You now have strong logging that can trace back any breakout attempt and match it with network connections, as well as isolating the host from the container by using a separate dedicated kernel.

Zero Days and Host Hardening

As you can see, what we’re building is less a honeypot and more a sandbox that happens to be running a honeypot (hence the title “Sand & Honey”). And the first rule of sandbox club is “one is none, two is one”. Right now we only have one layer of defense standing between an attacker with a zero day and that attacker taking over the host.

For those running honeypots within your security team, defense-in-depth best practices still apply. Host hardening and strong network isolation remain necessary when designing an environment for honeypot deployment, regardless of the platform the host runs on (cloud or bare metal).

Next Steps

We’ve built a pretty simple sandbox for “RegistriesRUs”, but the same model can be applied to any API-centric service you’d like to sandbox. This could be your favorite infrastructure service, like an unprotected git repo, a storage bucket, or that one weird custom production service an intern wrote. Whatever it is, you can now exercise it with the power of crowds by dropping it on the Internet and seeing what bites.

The authors recognize this approach will not work for use cases that require high interaction. However, as more cloud-focused workloads shift to Kubernetes, Docker, and other API-centric services, the ability for researchers to easily deploy honeypots will be critical to understanding the latest attacks and defending their respective enterprises.

For content like this and more be sure to follow Lacework Labs on Twitter, LinkedIn, and Youtube to stay up to date on the latest research.

Copyright 2021 Lacework Inc. All rights reserved.
