Cluster Management in Cassandra: Achieving Scalability and High Availability

Author: Satish Rakhonde | December 26, 2023

Apache Cassandra is a highly scalable and distributed NoSQL database management system designed to handle massive amounts of data across multiple commodity servers. Its decentralized architecture and robust fault-tolerant mechanisms make it an ideal choice for handling large-scale data workloads.

One of the key aspects of using Cassandra effectively is understanding and implementing cluster management. In this blog post, we will delve into the intricacies of cluster management in Cassandra and explore the best practices for achieving scalability and high availability in your database cluster.

Understanding Cassandra Cluster

A Cassandra cluster consists of multiple nodes working together to store and manage data. Each node is responsible for a portion of the data. The nodes use a peer-to-peer gossip protocol to exchange cluster state, such as which nodes are up and which token ranges each node owns.

The nodes are grouped into one or more data centers. With NetworkTopologyStrategy, each keyspace can set its own replication factor for every data center. Understanding the cluster architecture is crucial before diving into cluster management.

Adding and Removing Nodes

To expand or shrink a Cassandra cluster, nodes can be added or removed dynamically. Adding a new node involves bootstrapping it into the cluster and allowing it to take responsibility for a portion of the data.

Similarly, removing a node requires handing its data off to the nodes that take over its token ranges before it leaves the cluster, so that no replicas are lost. We will discuss the step-by-step process of adding and removing nodes while preserving data integrity.

Bootstrapping a Node

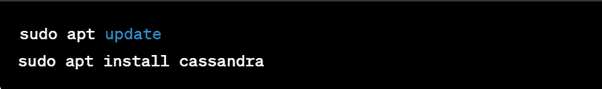

- Install Cassandra on the new node by following the installation instructions for your Linux distribution. For example, on Ubuntu, you can use the following commands:

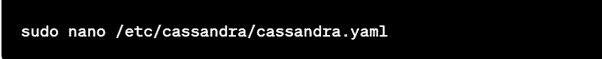

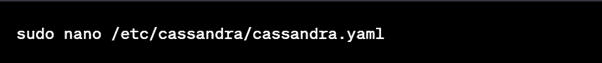

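- Once Cassandra is installed, edit the `cassandra.yaml` configuration file located in the `/etc/cassandra/` directory.

- In `cassandra.yaml`, update the following properties to configure the new node:

- cluster_name: Set this to the exact name of your existing Cassandra cluster. A node whose cluster name does not match cannot join the cluster.

- seeds: Specify a comma-separated list of the IP addresses of existing seed nodes in the cluster. This setting is in the `parameters` section of `seed_provider`. These are the nodes that the new node contacts to join the cluster. Do not include the new node's own address, because a node listed as a seed does not bootstrap.

- Save the changes to the `cassandra.yaml` file and exit the text editor.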

- Start the Cassandra service on the new node:

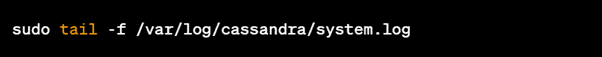

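- Monitor the system log to ensure the new node successfully joins the cluster. On package installations the log is usually `/var/log/cassandra/system.log`, which you can follow with `tail -f /var/log/cassandra/system.log`. While the node is streaming data, `nodetool status` shows it as `UJ` (Up/Joining). Once bootstrapping finishes, it shows as `UN` (Up/Normal).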
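The two `cassandra.yaml` properties above can also be set from a script. The following is a minimal sketch, not an official tool. `configure_new_node` is a hypothetical helper that edits the file's text with regular expressions. It assumes the stock layout, in which `cluster_name:` is a top-level line and the seed list is a single `- seeds: "..."` line in the `parameters` section of `seed_provider`. It also rejects a seed list that contains the new node's own address.

```python
import re


def configure_new_node(yaml_text: str, cluster_name: str,
                       seeds: list, own_ip: str) -> str:
    """Return yaml_text with cluster_name and the seed list replaced.

    Hypothetical helper: it edits the text line by line instead of parsing
    YAML, so it assumes the stock file layout (a top-level `cluster_name:`
    line and a single `- seeds: "..."` line under seed_provider).
    """
    # A seed node does not bootstrap, so a joining node must not list itself.
    if own_ip in seeds:
        raise ValueError(f"{own_ip} should not be a seed while it bootstraps")

    # Replace the value of the top-level cluster_name key.
    yaml_text, count = re.subn(r"(?m)^cluster_name:.*$",
                               lambda m: f"cluster_name: '{cluster_name}'",
                               yaml_text)
    if count != 1:
        raise ValueError("expected exactly one cluster_name line")

    # Replace the quoted, comma-separated seed list, keeping the indentation.
    seed_list = ",".join(seeds)
    yaml_text, count = re.subn(r"(?m)^([ \t]*- seeds:).*$",
                               lambda m: f'{m.group(1)} "{seed_list}"',
                               yaml_text)
    if count != 1:
        raise ValueError("expected exactly one '- seeds:' line")
    return yaml_text
```

To use it, read `/etc/cassandra/cassandra.yaml` and pass its contents through the function with your real cluster name and seed addresses. Write the result back before starting the service.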

Decommissioning a Node

- SSH into the node you want to decommission from the Cassandra cluster.

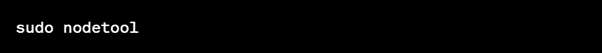

- Open the Cassandra nodetool by running the following command:

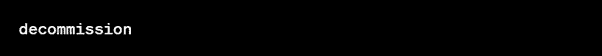

- Inside the nodetool command-line interface, enter the following command to decommission the node:

- Monitor the output to ensure that the decommissioning process completes successfully.

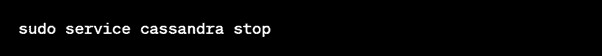

- Once the decommissioning process is finished, stop the Cassandra service on the node:

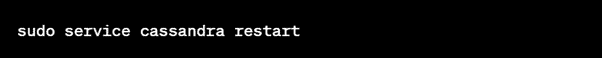

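- The remaining nodes do not need to be restarted, because they received the departing node's data while it was decommissioning. To confirm that the node has left the ring, run `nodetool status` on one of the remaining nodes and check that the decommissioned node is no longer listed.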
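Checking that a decommission has finished can be scripted. The sketch below is a minimal example with these assumptions:

- You have captured the text printed by `nodetool status` on a remaining node yourself, for example with Python's `subprocess`.
- Each node row starts with a two-letter status/state code followed by the node address. This layout can differ slightly between Cassandra versions.

`parse_status` and `decommission_finished` are hypothetical helpers.

```python
import re

# Each node row in `nodetool status` is assumed to start with a two-letter
# code (U/D for Up/Down, then N/L/J/M for Normal/Leaving/Joining/Moving)
# followed by the node's address.
NODE_ROW = re.compile(r"^([UD][NLJM])\s+(\S+)")


def parse_status(output: str) -> dict:
    """Map each node address in the output to its two-letter code."""
    nodes = {}
    for line in output.splitlines():
        match = NODE_ROW.match(line.strip())
        if match:
            nodes[match.group(2)] = match.group(1)
    return nodes


def decommission_finished(output: str, departed_address: str) -> bool:
    """True once the departed node is gone and every other node is UN."""
    nodes = parse_status(output)
    return departed_address not in nodes and all(
        code == "UN" for code in nodes.values())
```

While the node is still leaving, it appears as `UL`, so the check keeps returning False until the decommission has completed.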

Load Balancing

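Cassandra employs a token-based partitioning strategy. The partitioner (Murmur3Partitioner by default) hashes each row's partition key into a token, and each node owns one or more ranges of tokens on a ring. This distribution allows for efficient data retrieval and horizontal scalability. However, as the cluster grows and new nodes are added, keeping data evenly distributed across all nodes becomes crucial. Load can be balanced automatically or manually:

- With virtual nodes (vnodes), configured through `num_tokens` in `cassandra.yaml`, each node owns many small token ranges, which spreads data across nodes automatically.
- With a single token per node, you balance the ring manually by choosing tokens, for example with `nodetool move`.

In both cases, after a new node joins, run `nodetool cleanup` on each existing node to remove the data it no longer owns.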
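To make the token idea concrete, here is a small Python model of a token ring. It is an illustration under simplifying assumptions, not Cassandra's implementation:

- MD5 stands in for the Murmur3 hash.
- Tokens are unsigned 64-bit integers.
- The hypothetical `TokenRing.with_vnodes` assigns random tokens, which only approximates how Cassandra allocates tokens for `num_tokens`.

```python
import bisect
import hashlib
import random

# Simplified token space. Cassandra's default Murmur3Partitioner uses signed
# 64-bit tokens; an unsigned range is used here to keep the arithmetic simple.
RING_SIZE = 2**64


def token_for(partition_key: str) -> int:
    # Stand-in hash (first 8 bytes of MD5), NOT Cassandra's Murmur3 hash;
    # only the idea "hash the partition key to a position on the ring" matters.
    digest = hashlib.md5(partition_key.encode()).digest()
    return int.from_bytes(digest[:8], "big")


class TokenRing:
    def __init__(self, token_owners: dict):
        # token_owners maps each token to the node that owns the range
        # (previous token, this token].
        self.tokens = sorted(token_owners)
        self.owner_of = dict(token_owners)

    @classmethod
    def with_vnodes(cls, nodes, num_tokens, seed=0):
        # Every node picks num_tokens random tokens, similar in spirit to
        # setting num_tokens in cassandra.yaml (random token allocation).
        rng = random.Random(seed)
        return cls({rng.randrange(RING_SIZE): node
                    for node in nodes for _ in range(num_tokens)})

    def owner(self, partition_key: str) -> str:
        # The first token >= the key's token marks the owning range;
        # past the highest token, wrap around to the lowest one.
        i = bisect.bisect_left(self.tokens, token_for(partition_key))
        return self.owner_of[self.tokens[i % len(self.tokens)]]

    def ownership(self) -> dict:
        # Fraction of the token space owned by each node.
        shares = {}
        for i, token in enumerate(self.tokens):
            previous = self.tokens[i - 1]  # i == 0 wraps to the last token
            width = (token - previous) % RING_SIZE or RING_SIZE
            node = self.owner_of[token]
            shares[node] = shares.get(node, 0.0) + width / RING_SIZE
        return shares
```

Compare `TokenRing.with_vnodes(nodes, 1).ownership()` with `TokenRing.with_vnodes(nodes, 16).ownership()`. The shares usually move closer to an even split as the number of tokens per node grows, which is the effect vnodes rely on.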

Replication and Consistency

Cassandra provides built-in replication mechanisms to ensure high availability and fault tolerance. Replication creates multiple copies of data across nodes, both within a data center and across data centers. The replication factor, the replica placement strategy, and the consistency level are critical choices that affect cluster performance and data durability. Cassandra offers two main placement strategies:

- SimpleStrategy places replicas on consecutive nodes around the ring and is suited to a single data center.
- NetworkTopologyStrategy sets a replication factor for each data center and spreads replicas across racks.

The consistency level you choose should follow from your application's requirements.
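The difference between the two placement strategies can be sketched in a few lines. This is a simplified model with these assumptions:

- The ring is given as (token, node) pairs.
- The hypothetical `dc_of` mapping gives each node's data center.
- The per-data-center version omits the rack awareness that the real NetworkTopologyStrategy applies.

```python
import bisect


def replicas_simple(ring, token, replication_factor):
    """SimpleStrategy-style placement (sketch).

    ring is a list of (token, node) pairs. Starting at the node that owns
    `token`, walk clockwise and collect the first `replication_factor`
    distinct nodes; with vnodes a node can appear many times on the ring.
    """
    ring = sorted(ring)
    tokens = [t for t, _ in ring]
    start = bisect.bisect_left(tokens, token)
    replicas = []
    for step in range(len(ring)):
        node = ring[(start + step) % len(ring)][1]
        if node not in replicas:
            replicas.append(node)
            if len(replicas) == replication_factor:
                break
    return replicas


def replicas_per_dc(ring, token, rf_per_dc, dc_of):
    """Simplified NetworkTopologyStrategy: the same clockwise walk, done
    separately within each data center (rack placement omitted)."""
    return {
        dc: replicas_simple([(t, n) for t, n in ring if dc_of[n] == dc],
                            token, rf)
        for dc, rf in rf_per_dc.items()
    }
```

For example, on a four-node ring split across two data centers, a token can have one replica in `dc1` and two in `dc2`. Each data center's replicas are chosen independently of the other's.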

Below are some steps:

Replication

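- Replication is set per keyspace with CQL statements, not in `cassandra.yaml`. Open cqlsh on any node in the cluster: `cqlsh <node_ip>`

- Determine the keyspace you want to configure for replication. `DESCRIBE KEYSPACE <keyspace_name>` shows its current replication settings.

- Set the `class` option to specify the replication strategy and the `replication_factor` option to specify the number of replicas. For example, to create a keyspace that uses SimpleStrategy with three replicas, run: `CREATE KEYSPACE <keyspace_name> WITH replication = {'class': 'SimpleStrategy', 'replication_factor': 3};`

- For a cluster with several data centers, use NetworkTopologyStrategy and give each data center its own replication factor. Use the data center names reported by `nodetool status`: `CREATE KEYSPACE <keyspace_name> WITH replication = {'class': 'NetworkTopologyStrategy', '<dc1_name>': 3, '<dc2_name>': 3};`

- To change the replication of an existing keyspace, run `ALTER KEYSPACE <keyspace_name>` with a `WITH replication` clause containing the new settings.

- No restart is needed, because schema changes propagate to all nodes automatically. After you increase a replication factor, run `nodetool repair --full <keyspace_name>` on each node so that existing data is copied to the new replicas.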
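If you manage keyspaces from scripts, the replication map can be generated rather than typed by hand. Replication settings are part of the keyspace definition written in CQL. `replication_statement` below is a minimal, hypothetical helper. The keyspace and data center names in its examples are placeholders that must match your own cluster, with data center names as reported by `nodetool status`.

```python
import re


def replication_statement(keyspace, replication_factor=None, per_dc=None,
                          alter=False):
    """Build a CREATE or ALTER KEYSPACE statement (hypothetical helper).

    Pass replication_factor for SimpleStrategy, or per_dc, a mapping of
    data center name to replication factor, for NetworkTopologyStrategy.
    """
    if (replication_factor is None) == (per_dc is None):
        raise ValueError("pass exactly one of replication_factor or per_dc")
    # Unquoted CQL identifiers: letters, digits and underscores only.
    if not re.fullmatch(r"[A-Za-z0-9_]+", keyspace):
        raise ValueError(f"unsupported keyspace name: {keyspace!r}")

    if per_dc is None:
        strategy = "SimpleStrategy"
        factors = {"replication_factor": replication_factor}
    else:
        strategy = "NetworkTopologyStrategy"
        factors = dict(per_dc)

    options = [f"'class': '{strategy}'"]
    for name, factor in factors.items():
        if not isinstance(factor, int) or factor < 0:
            raise ValueError(f"replication factor for {name} must be a non-negative integer")
        options.append(f"'{name}': {factor}")

    verb = "ALTER" if alter else "CREATE"
    return f"{verb} KEYSPACE {keyspace} WITH replication = {{{', '.join(options)}}};"
```

The returned string can be pasted into cqlsh or passed to it with `cqlsh <node_ip> -e "<statement>"`.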

Consistency

- Determine the desired consistency level for your operations. Consistency levels determine how many replicas must respond for an operation to be considered successful.

- Open the Cassandra command-line shell (cqlsh) and connect to a node in your cluster by passing its address, plus the port if it is not the default 9042: `cqlsh <node_ip> 9042`

- Set the desired consistency level with the `CONSISTENCY` command: `CONSISTENCY <consistency_level>`

Replace <consistency_level> with one of the available consistency levels, such as ONE, QUORUM, or LOCAL_QUORUM. For example, to set the consistency level to QUORUM, run: `CONSISTENCY QUORUM`

Note: This command sets the consistency level for the current cqlsh session only. Running `CONSISTENCY` without an argument shows the current level.

- Perform your operations (e.g., insert, update, select) in the cqlsh session with the specified consistency level.
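Consistency levels translate into simple arithmetic on the replication factor. The sketch below assumes a single data center, where QUORUM and LOCAL_QUORUM both mean a majority of replicas (floor(RF/2) + 1). With multiple data centers, QUORUM counts replicas across all of them. The `R + W > RF` check expresses the usual rule that the replicas answering a read overlap the replicas that acknowledged a write. The function names are hypothetical.

```python
def required_acks(level: str, replication_factor: int) -> int:
    """Replicas that must respond for a consistency level, assuming a
    single data center (where LOCAL_QUORUM equals QUORUM)."""
    quorum = replication_factor // 2 + 1
    needed = {"ONE": 1, "TWO": 2, "THREE": 3, "QUORUM": quorum,
              "LOCAL_QUORUM": quorum, "ALL": replication_factor}[level.upper()]
    if needed > replication_factor:
        raise ValueError(f"{level} needs {needed} replicas, RF is {replication_factor}")
    return needed


def tolerated_replica_failures(level: str, replication_factor: int) -> int:
    """How many replicas can be down while operations at `level` still succeed."""
    return replication_factor - required_acks(level, replication_factor)


def reads_see_latest_write(read_level: str, write_level: str,
                           replication_factor: int) -> bool:
    """R + W > RF: every read set overlaps every write set in at least one replica."""
    return (required_acks(read_level, replication_factor)
            + required_acks(write_level, replication_factor)
            > replication_factor)
```

Take RF = 3 as an example. QUORUM reads and writes each wait for 2 replicas, tolerate one replica being down, and satisfy 2 + 2 > 3. ONE for both reads and writes is faster but gives no such overlap.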

Configure replication and consistency according to your specific requirements and data model. Make sure you understand the implications of your choices, as replication and consistency directly affect data availability, durability, and performance in Cassandra.

Cluster Monitoring and Health

Keeping a close eye on the cluster’s health is vital to prevent and mitigate potential issues. Cassandra ships with tools such as nodetool and Cassandra Query Language (CQL) commands. It also exposes metrics over JMX that third-party monitoring solutions can collect. Together, these let you monitor cluster metrics, diagnose performance bottlenecks, and detect anomalies. We will explore these tools and best practices for effective cluster monitoring, ensuring optimal performance and proactive troubleshooting.

Below are some steps:

- Monitoring Node Status:

- To check the status of the nodes in the cluster, open a terminal and run `nodetool status`.

- This command lists every node with its status (Up or Down), state (Normal, Leaving, Joining, or Moving), address, load, number of tokens, effective ownership, host ID, and rack.

- Viewing Cluster Information:

- To get an overview of the Cassandra cluster, run `nodetool describecluster`.

- This command shows the cluster name, partitioner, snitch, and the schema version reported by each node. More than one schema version indicates that the nodes disagree on the schema.

- Monitoring Node Performance and Pending Read/Write Tasks:

- To view a node's thread pool statistics, run `nodetool tpstats`.

- This command provides thread pool statistics, including active, pending, and completed tasks for each pool. It does not report CPU or memory usage. For those, `nodetool info` shows heap memory usage and `nodetool proxyhistograms` shows read and write latency percentiles.

- To check the number of pending read and write tasks on the node, look at the ReadStage and MutationStage rows of the `nodetool tpstats` output.

- A Pending count that keeps growing in either pool indicates that the node is not keeping up with its read or write load.

- Monitoring Compaction:

- To monitor the progress and statistics of compaction processes in Cassandra, run `nodetool compactionstats`.

- This command displays the number of pending compaction tasks and the progress of each running compaction.
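The pending counts are easy to check automatically, for example from a cron job or a monitoring agent. A minimal sketch, with these assumptions:

- You capture the output of `nodetool tpstats` yourself.
- Its thread pool table has the usual six columns: pool name, Active, Pending, Completed, Blocked, and All time blocked. The layout may vary between Cassandra versions.
- `pending_tasks` and `backlogged` are hypothetical helpers, and the threshold is an arbitrary example value.

```python
def pending_tasks(tpstats_output: str) -> dict:
    """Map each thread pool name to its Pending count.

    Assumes pool rows look like: Name Active Pending Completed Blocked
    AllTimeBlocked. Other rows (headers, the dropped-message section) are
    skipped because they do not have exactly five integer columns.
    """
    pending = {}
    for line in tpstats_output.splitlines():
        parts = line.split()
        if len(parts) == 6 and all(p.isdigit() for p in parts[1:]):
            pending[parts[0]] = int(parts[2])  # third column is Pending
    return pending


def backlogged(tpstats_output: str, threshold: int = 0) -> list:
    """Names of pools whose Pending count exceeds the threshold, sorted."""
    return sorted(name for name, count in pending_tasks(tpstats_output).items()
                  if count > threshold)
```

Alerting only when the same pool stays above the threshold across several samples avoids noise from short bursts.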

Backup and Disaster Recovery

To safeguard your data against hardware failures, natural disasters, or human errors, implementing a robust backup and disaster recovery strategy is essential. Cassandra provides mechanisms like snapshots, incremental backups, and replication across data centers to ensure data durability and availability. We will discuss these strategies and explore how to perform backup and recovery operations in Cassandra effectively.

Below are some steps:

Backup

- Taking a Snapshot Backup:

- Open a terminal on the node you want to back up.

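- Run the following nodetool command to create a snapshot backup: `nodetool snapshot -t <snapshot_name> <keyspace_name>`

Replace <snapshot_name> with a unique name for the snapshot, and <keyspace_name> with the name of the keyspace you want to back up. Cassandra flushes the keyspace's memtables and then creates hard links to its SSTable files, so the snapshot is fast and initially takes little extra disk space. Snapshots are stored per table, under `<data_directory>/<keyspace_name>/<table_name>-<table_id>/snapshots/<snapshot_name>/`. A snapshot covers only the node where the command runs, so run it on every node to back up the whole cluster.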

- Copying Snapshot Files:

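Use rsync or scp to copy the snapshot files to a safe location or another storage system, for example: `rsync -av /path/to/cassandra/data/<keyspace_name>/<table_name>-<table_id>/snapshots/<snapshot_name>/ <destination_directory>`

Replace the source path with the path to the snapshot files and <destination_directory> with the directory where you want to store the backup. Because each table has its own snapshot directory, repeat the copy for every table in the keyspace.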
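Finding every per-table snapshot directory by hand is error-prone, so a small script can do it. This sketch makes these assumptions:

- The on-disk layout is the usual `<data_directory>/<keyspace_name>/<table_name>-<table_id>/snapshots/<snapshot_name>/`.
- `snapshot_dirs` and `rsync_commands` are hypothetical helpers.
- The command lists they return are meant to be reviewed before you pass them to `subprocess.run`.

```python
from pathlib import Path


def snapshot_dirs(data_dir: str, keyspace: str, snapshot_name: str) -> dict:
    """Map table name -> snapshot directory for one keyspace on this node."""
    found = {}
    pattern = f"*/snapshots/{snapshot_name}"
    for path in sorted(Path(data_dir, keyspace).glob(pattern)):
        table_dir = path.parent.parent.name      # e.g. "orders-0123abcd"
        table = table_dir.rsplit("-", 1)[0]       # drop the table ID suffix
        found[table] = path
    return found


def rsync_commands(dirs: dict, destination: str) -> list:
    """One rsync command per table, keeping the table name in the target path."""
    # The trailing slash on the source copies the directory's contents.
    return [["rsync", "-a", f"{src}/", f"{destination}/{table}/"]
            for table, src in dirs.items()]
```

Keeping one destination directory per table makes the copied files easy to put back into the right table directory during a restore.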

Disaster Recovery

- Restoring from a Snapshot Backup:

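- To restore from a snapshot backup, first stop the Cassandra service on the node where you want to restore the data.

- Delete the existing SSTable files in each table directory of the keyspace you want to restore, leaving the `snapshots` and `backups` subdirectories in place. This step is optional but recommended. Otherwise, newer data, which has later write timestamps, takes precedence over the restored rows.

- Copy the snapshot files for each table into that table's data directory, `<data_directory>/<keyspace_name>/<table_name>-<table_id>/`.

- Start the Cassandra service on the node.

- Cassandra picks up the restored SSTables when the service starts.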

- Incremental Backup and Restore:

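- Incremental backups are enabled with `incremental_backups: true` in `cassandra.yaml`, or at runtime with `nodetool enablebackup`. When they are on, Cassandra hard-links every newly flushed SSTable into a `backups` subdirectory of its table directory and does not delete those files itself. Keep taking snapshots at regular intervals with the snapshot process described earlier; the incremental backups cover the changes between snapshots.

- During disaster recovery, restore the most recent snapshot and then copy in the incremental backup files created after that snapshot to bring the data up to date.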
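The restore order for a single table can be sketched as follows. It is a minimal illustration, not a supported tool, and it assumes:

- Cassandra is stopped.
- The table's old SSTables have already been removed.
- A file's modification time tells you whether an incremental backup was written after the snapshot was taken (`snapshot_time`, in seconds since the epoch).

`restore_table` is a hypothetical helper. It skips the `manifest.json` and `schema.cql` files that recent Cassandra versions write into snapshots.

```python
import shutil
from pathlib import Path

# Files that snapshots contain besides SSTables; they are not copied back.
NON_SSTABLE_FILES = {"manifest.json", "schema.cql"}


def restore_table(table_dir: str, snapshot_name: str, snapshot_time: float) -> list:
    """Copy snapshot SSTables, then newer incremental backups, into table_dir.

    Hypothetical helper. Assumes Cassandra is stopped on this node, the old
    SSTables were already deleted, and file modification times show which
    incremental-backup files were flushed after the snapshot was taken.
    """
    table = Path(table_dir)
    chosen = {}  # file name -> source path

    # 1. Every SSTable component file from the snapshot.
    for path in (table / "snapshots" / snapshot_name).iterdir():
        if path.is_file() and path.name not in NON_SSTABLE_FILES:
            chosen[path.name] = path

    # 2. Incremental backups written after the snapshot (the hard links
    #    Cassandra places in the table's backups/ directory).
    backups = table / "backups"
    if backups.is_dir():
        for path in backups.iterdir():
            if path.is_file() and path.stat().st_mtime > snapshot_time:
                chosen[path.name] = path

    # 3. Copy everything into the table directory, preserving metadata.
    for name in sorted(chosen):
        shutil.copy2(chosen[name], table / name)
    return sorted(chosen)
```

Backup files flushed before the snapshot are skipped because the snapshot already contains that data.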

It’s important to note that these commands provide a basic guideline for backup and disaster recovery in Cassandra. You should adapt them to your specific environment and backup strategy. Consider also using dedicated tools or solutions to automate and enhance your backup and disaster recovery processes.

Conclusion

Effective cluster management is crucial for achieving scalability, high availability, and optimal performance in Apache Cassandra. By understanding the cluster architecture, adding and removing nodes correctly, implementing load balancing, configuring replication, monitoring the cluster health, and implementing a robust backup and recovery strategy, you can harness the full potential of Cassandra’s distributed nature. With these best practices, you can build and manage a resilient Cassandra cluster that can handle your organization’s growing data requirements and deliver consistent and reliable performance.
