The Network Also Needs to be Observable, Part 4: Telemetry Data Platform


Summary

In order to answer any question, network observability requires a broad range of telemetry data, and it takes a capable platform to make that data useful. In this fourth part of the network observability series, Avi Freedman describes the requirements for a telemetry data platform.

In the previous two blogs, I talked about the need to gather telemetry data from all of the devices and observation points in your network, and across the different types of telemetry available.

The previous blog post showed in practical terms how gathering more telemetry data directly improves your ability to answer the questions that help you plan, run, and fix the network, framing the kinds of questions you can and can’t answer with different types and sources of telemetry. If you have other great examples, please feel free to start a conversation! I’m avi at kentik.com, and @avifreedman on Twitter.

Awesome, I have data! Now what?

Network observability deals with a wide diversity of high-speed, high-cardinality, real-time data and requires the ability to ask both known and unknown questions. Doing this at network scale has been a hard problem for decades and requires a flexible, scalable, and high-performance “telemetry data platform.”

(When I speak of this sort of platform, I’m not speaking of New Relic’s well-known Telemetry Data Platform (TDP), specifically, but about telemetry platforms in general. It’s worth noting that Kentik and New Relic are partners: See our case study to learn more about how New Relic uses Kentik for network performance and digital experience monitoring, and see New Relic’s Kentik Firehose Quickstart to easily and quickly import network performance metrics from Kentik.)

Requirements for a telemetry data platform that enables network observability

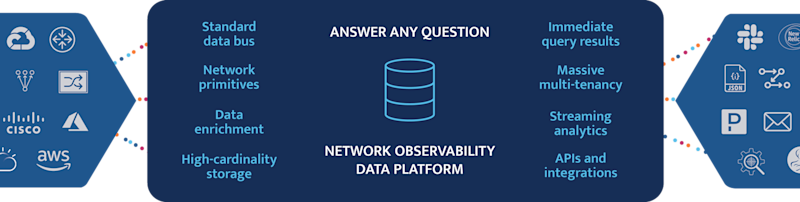

This creates a set of requirements for data architectures to support network observability:

Standard data bus for telemetry

Strictly speaking, all that’s required is that data can be routed from and to different systems. In practice, though, most modern network observability data platforms have a common data bus, often based on Kafka, to support real-time ingest, subscription/consumption, and export of telemetry data.
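To make the data-bus idea concrete, here is a minimal Python sketch of the pattern a bus such as Kafka provides: producers publish records to a named topic, and any number of independent consumers subscribe to it. The `TelemetryBus` class, the topic names, and the alerting rule are hypothetical. This is an in-memory illustration with no persistence, partitioning, or consumer offsets, and it does not use the Kafka client API.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List

# In this sketch a telemetry record is a plain dict of field names to values.
Record = Dict[str, object]
Handler = Callable[[Record], None]


class TelemetryBus:
    """In-memory stand-in for a Kafka-style bus: named topics, many subscribers."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        # Each consumer (storage, alerting, export, ...) registers independently.
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, record: Record) -> int:
        # Fan the record out to every subscriber of the topic and report how
        # many received it. .get() avoids creating empty topics as a side effect.
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(record)
        return len(handlers)


# Usage: one producer, two independent consumers of the same "flows" topic.
stored: List[Record] = []
alerts: List[Record] = []


def alert_on_large_flows(record: Record) -> None:
    # Hypothetical rule: flag any flow record larger than 1 MB.
    if int(record["bytes"]) > 1_000_000:
        alerts.append(record)


bus = TelemetryBus()
bus.subscribe("flows", stored.append)
bus.subscribe("flows", alert_on_large_flows)
delivered = bus.publish("flows", {"src_ip": "192.0.2.10", "bytes": 5_000_000})
```

The point of the pattern is decoupling: storage, alerting, and export can be added or removed without changing the producer.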

Network primitives support

Prefix, path, topology, underlay, and overlay are all concepts that are unique or have unique contexts in the network world. Without the ability to easily enrich, group, and query by prefix, network path, and under/overlay, it’s difficult for networkers to ask questions and reason about their infrastructure.
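As an illustration of why prefix-aware grouping matters, here is a minimal Python sketch that attributes flow bytes to the most specific matching prefix, using the standard-library `ipaddress` module. The function names and the `(dst_ip, bytes)` flow tuple shape are assumptions for illustration, and the addresses are documentation ranges. A production platform would use a proper longest-prefix-match structure such as a radix trie rather than this linear scan.

```python
import ipaddress
from collections import Counter
from typing import Iterable, List, Optional, Tuple

Network = ipaddress.IPv4Network


def longest_prefix_match(ip: str, prefixes: List[Network]) -> Optional[Network]:
    """Return the most specific prefix containing `ip`, or None if none match."""
    addr = ipaddress.ip_address(ip)
    matches = [p for p in prefixes if addr in p]
    return max(matches, key=lambda p: p.prefixlen, default=None)


def bytes_by_prefix(flows: Iterable[Tuple[str, int]], prefixes: List[Network]) -> Counter:
    """Group (dst_ip, bytes) flow tuples by their longest-matching prefix."""
    totals: Counter = Counter()
    for dst_ip, num_bytes in flows:
        match = longest_prefix_match(dst_ip, prefixes)
        totals[str(match) if match is not None else "unmatched"] += num_bytes
    return totals


# Usage: the /25 is more specific than the /24 that contains it.
prefixes = [
    ipaddress.IPv4Network("198.51.100.0/24"),
    ipaddress.IPv4Network("198.51.100.128/25"),
    ipaddress.IPv4Network("203.0.113.0/24"),
]
flows = [
    ("198.51.100.10", 500),
    ("198.51.100.200", 300),
    ("203.0.113.5", 200),
    ("192.0.2.1", 100),
]
totals = bytes_by_prefix(flows, prefixes)
```

Grouping by raw IP address would scatter this traffic across individual hosts; grouping by prefix lets a networker reason in the units that routing actually uses.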

Enriched data

Whether it’s simpler enrichment (like adding IP geography or origin ASN to various network metrics) or completely real-time streaming metadata (like user and application orchestration mapping data and real-time routing association with traffic data), enrichment is key to being able to ask high-level questions of your network.
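The simpler kind of enrichment described above can be sketched in a few lines of Python: join each flow record against a prefix table to attach an origin ASN and a country. The `PREFIX_METADATA` table, the `enrich` function, and the field names are hypothetical; the prefixes and ASNs come from documentation ranges, and their pairings with countries are made up. In practice the ASN would come from BGP data and the geography from a geo-IP database, while streaming metadata such as orchestration mappings would need a continuously updated lookup rather than a static table.

```python
import ipaddress
from typing import Dict, List, Optional, Tuple

# Hypothetical metadata table: prefix -> (origin ASN, country code).
# Prefixes and ASNs are documentation ranges; the pairings are illustrative only.
PREFIX_METADATA: List[Tuple[ipaddress.IPv4Network, int, str]] = [
    (ipaddress.IPv4Network("198.51.100.0/24"), 64500, "US"),
    (ipaddress.IPv4Network("203.0.113.0/24"), 64501, "DE"),
]


def enrich(flow: Dict[str, object]) -> Dict[str, object]:
    """Return a copy of `flow` with dst_asn and dst_country fields added."""
    addr = ipaddress.ip_address(str(flow["dst_ip"]))
    best: Optional[Tuple[ipaddress.IPv4Network, int, str]] = None
    for entry in PREFIX_METADATA:
        net = entry[0]
        # Prefer the most specific matching prefix.
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = entry
    enriched = dict(flow)  # leave the input record untouched
    enriched["dst_asn"] = best[1] if best is not None else None
    enriched["dst_country"] = best[2] if best is not None else None
    return enriched


# Usage
raw = {"dst_ip": "203.0.113.9", "dst_port": 443, "bytes": 1200}
enriched = enrich(raw)
```

Once every record carries `dst_asn` and `dst_country`, higher-level questions such as "how much traffic goes to a given network or region" become simple group-by queries.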

High-cardinality storage

One requirement of a modern network telemetry data platform is the ability to store at high resolution, at full cardinality. Network telemetry comes in dozens of types and hundreds of attributes, and each attribute can have millions of unique values. In modern, complex, distributed network and application infrastructures, it’s impossible to know in advance all the questions you may need to ask.

Generally, people building their own data platforms try several backends along the way. Some options we commonly see are:

- Backends focused on log telemetry, which can typically handle cardinality but are usually prohibitively expensive because they are oriented toward less structured ASCII data.

- Time-series databases, which operations teams usually are already running. These usually fail quickly: with network traffic data because of cardinality, and with routing or other network data because they lack support for network primitives.

- OLAP/analytics databases that rely on rollups or cubing of data, which can look fast, as long as you remove the ability to ask unanticipated questions.

- Streaming databases can be useful for learning patterns across the data, but also remove support for quickly asking new questions over historical data.

- Distributed columnar databases, which we see most (and use at Kentik) to provide high-resolution storage and querying.
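To illustrate the difference between keeping raw records column by column and relying on rollups, here is a toy Python sketch of a column-oriented store that answers ad hoc group-by questions at query time. The `ColumnStore` class and its `sum_by` method are hypothetical and do not describe Kentik's storage engine; real distributed columnar databases add compression, partitioning across nodes, and vectorized scans.

```python
from collections import defaultdict
from typing import Dict, List, Sequence


class ColumnStore:
    """Toy column-oriented store: each attribute is kept in its own list."""

    def __init__(self, columns: Sequence[str]) -> None:
        self.columns: Dict[str, List] = {name: [] for name in columns}

    def append(self, row: Dict[str, object]) -> None:
        # Store every raw row at full resolution; nothing is pre-aggregated.
        for name, values in self.columns.items():
            values.append(row.get(name))

    def sum_by(self, group_col: str, value_col: str, **filters: object) -> Dict[object, int]:
        """Sum value_col grouped by group_col over rows matching all filters.

        Only the referenced columns are read, and any column can serve as the
        group key or a filter at query time.
        """
        keys = self.columns[group_col]
        values = self.columns[value_col]
        filter_cols = {name: self.columns[name] for name in filters}
        totals: Dict[object, int] = defaultdict(int)
        for i, key in enumerate(keys):
            if all(filter_cols[name][i] == want for name, want in filters.items()):
                totals[key] += values[i]
        return dict(totals)


# Usage: two questions, neither of which had to be anticipated at ingest time.
store = ColumnStore(["src_asn", "dst_port", "bytes"])
for row in [
    {"src_asn": 64500, "dst_port": 443, "bytes": 100},
    {"src_asn": 64500, "dst_port": 53, "bytes": 20},
    {"src_asn": 64501, "dst_port": 443, "bytes": 50},
]:
    store.append(row)

by_port = store.sum_by("dst_port", "bytes")                    # {443: 150, 53: 20}
https_by_asn = store.sum_by("src_asn", "bytes", dst_port=443)  # {64500: 100, 64501: 50}
```

A store that had kept only a pre-computed rollup of bytes per `src_asn` could still report totals per ASN, but not the port-443-only question afterward, because the `dst_port` dimension would already have been summed away. That is the trade-off the rollup and cubing approaches make.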

Immediate query results

Users need to be able to ask complex questions and get answers in seconds, not hours, to support modern “trail of thought” diagnostic workflows — again, at high cardinality and resolution.

Massive multi-tenancy

Operational telemetry data platforms need to be able to support dozens of queries, not just from interactive users via the UI or API but also from other operational systems within the network stack and across the organization. At Kentik, we built our own data storage and querying platform because current OSS big-data systems either require rollups or don’t handle large multi-tenant queries well, often blocking at ingest and losing data.
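Supporting many concurrent consumers is partly a scheduling problem. One generic ingredient is fair scheduling across tenants, sketched below in Python as round-robin dispatch so that a burst of dashboard queries cannot starve alerting or report queries. The `FairQueryQueue` class and the tenant names are hypothetical, and this is not a description of how Kentik schedules queries; real systems also bound concurrency and per-query resource use.

```python
from collections import OrderedDict, deque
from typing import Deque, Optional, Tuple


class FairQueryQueue:
    """Round-robin query queue across tenants.

    Each call to next_query() serves the tenant at the front of the rotation,
    then moves it to the back, so a tenant that submits a burst of queries
    cannot starve the others.
    """

    def __init__(self) -> None:
        self._queues: "OrderedDict[str, Deque[str]]" = OrderedDict()

    def submit(self, tenant: str, query: str) -> None:
        # New tenants join the back of the rotation; existing ones keep their place.
        self._queues.setdefault(tenant, deque()).append(query)

    def next_query(self) -> Optional[Tuple[str, str]]:
        if not self._queues:
            return None
        tenant, queue = self._queues.popitem(last=False)  # front of the rotation
        query = queue.popleft()
        if queue:
            self._queues[tenant] = queue  # rejoin at the back if work remains
        return tenant, query


# Usage: a dashboard burst does not delay the alerting or report queries.
q = FairQueryQueue()
for i in range(3):
    q.submit("dashboards", f"dash-{i}")
q.submit("alerting", "alert-0")
q.submit("capacity-report", "report-0")

order = []
while (item := q.next_query()) is not None:
    order.append(item)
```

With a plain FIFO queue, `alert-0` would wait behind all three dashboard queries; here it runs second.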

Streaming analytics

While it’s possible to simulate streaming analytics with sufficiently fast data storage and querying (we did this early on at Kentik), running a modern machine learning pipeline and thousands of real-time queries on streaming telemetry data calls for a streaming analytics architecture. That is the most common approach, and it’s found in most modern and greenfield network telemetry data platforms.
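The core idea of streaming analytics is to compute over records as they arrive rather than after they are stored. A minimal Python sketch, assuming events arrive in timestamp order, is a tumbling-window aggregation that emits per-destination byte totals as each window closes and flags heavy hitters against a threshold. The function names, window size, and threshold are illustrative assumptions; production stream processors also handle late data, watermarks, and state partitioned across workers.

```python
from collections import defaultdict
from typing import DefaultDict, Dict, Iterable, Iterator, Optional, Tuple

Event = Tuple[int, str, int]  # (timestamp in seconds, key such as dst_ip, bytes)


def tumbling_window_totals(
    events: Iterable[Event], window_s: int
) -> Iterator[Tuple[int, Dict[str, int]]]:
    """Yield (window_start, per-key byte totals) as each window closes.

    Assumes events arrive in timestamp order; late or out-of-order events are
    not handled in this sketch.
    """
    current: Optional[int] = None
    totals: DefaultDict[str, int] = defaultdict(int)
    for ts, key, num_bytes in events:
        start = ts - ts % window_s
        if current is not None and start != current:
            yield current, dict(totals)  # window closed: emit and reset
            totals.clear()
        current = start
        totals[key] += num_bytes
    if current is not None:
        yield current, dict(totals)  # flush the final window


def heavy_hitters(
    events: Iterable[Event], window_s: int, threshold: int
) -> Iterator[Tuple[int, str, int]]:
    """Flag keys whose byte total in a window meets or exceeds threshold."""
    for start, totals in tumbling_window_totals(events, window_s):
        for key, total in totals.items():
            if total >= threshold:
                yield start, key, total


# Usage with 60-second windows.
events = [
    (0, "198.51.100.7", 400),
    (10, "198.51.100.7", 700),
    (30, "203.0.113.2", 50),
    (65, "198.51.100.7", 100),
    (70, "203.0.113.2", 2000),
]
alerts = list(heavy_hitters(events, window_s=60, threshold=1000))
```

Because the functions are generators, results for a window are available as soon as the first event of the next window arrives, without re-querying stored data.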

APIs and integrations

Modern observability and telemetry data platforms must be open in spec and easy to integrate, ideally with built-in integrations to key cross-stack systems, to allow application, infrastructure, and security engineers to collaborate with a common operational picture. This typically means full APIs for provisioning and querying and a streaming API to feed other systems with the volume and richness of normalized and enriched network telemetry in real time.
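One way to picture the streaming-API side of this requirement is a normalized record schema serialized as newline-delimited JSON (JSON Lines), which a consumer can parse one line at a time. The `FlowRecord` fields and the `to_json_lines` helper below are hypothetical, written in Python for illustration; they are not Kentik’s API or export format.

```python
import json
from dataclasses import asdict, dataclass
from typing import Iterable, Iterator, Optional


@dataclass(frozen=True)
class FlowRecord:
    # Hypothetical normalized schema; field names are illustrative only.
    timestamp: int
    src_ip: str
    dst_ip: str
    dst_port: int
    protocol: str
    num_bytes: int
    dst_asn: Optional[int] = None  # filled in by enrichment when known


def to_json_lines(records: Iterable[FlowRecord]) -> Iterator[str]:
    """Serialize records as newline-delimited JSON, one record per line.

    Yielding line by line lets a streaming endpoint forward records as they
    are produced instead of buffering a whole batch.
    """
    for record in records:
        yield json.dumps(asdict(record), sort_keys=True) + "\n"


# Usage
record = FlowRecord(1700000000, "192.0.2.10", "198.51.100.7", 443, "tcp", 1200, 64500)
line = next(to_json_lines([record]))
```

A fixed, documented schema is what lets application, infrastructure, and security tools consume the same enriched records without per-consumer parsing logic.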

Over the next few months, we’ll host panels inviting builders working in and around network observability to share tips, tricks, and the platforms they’ve used for network observability and for connecting to broader operational and business observability platforms.

Conclusion

The good news is that with the right architecture and capabilities, you can create a data platform that supports network observability and integrates across the business’s data platforms.

Going from nothing to a complete observability platform may sound like a huge lift. This is the business Kentik is in, and we’re happy to help, but for those charting their own path, we’re creating resources like this blog series that we hope will be helpful.

Telemetry data platform FAQs

What is a telemetry data platform?

A telemetry data platform is a specialized system designed to collect, store, analyze, and present telemetry data, which is machine-generated data used for monitoring and diagnosing issues in various systems. In the context of network observability, these platforms aggregate data from numerous network devices and endpoints, processing large volumes of high-cardinality, real-time data.

Telemetry data platforms provide critical insights into the health and performance of your network by identifying patterns, anomalies, and potential threats. They enable you to ask and answer both known and unanticipated questions about your network, transforming raw data into actionable intelligence.

These platforms are often characterized by their ability to handle high-resolution, high-cardinality storage, fast query responses, and comprehensive data enrichment capabilities. They also typically include features for streaming analytics and integrations with other operational systems, creating a cohesive ecosystem for network monitoring and diagnostics.

Telemetry data platforms are a fundamental component of a robust network observability strategy, offering the necessary tools to understand and optimize network operations.

What is high-cardinality?

High-cardinality refers to a data set that contains many unique values or categories. It’s a term often used in the context of databases and telemetry data, particularly when discussing the challenges of managing and analyzing large amounts of data with numerous distinct elements.

In a telemetry data platform, data cardinality comes into play when you have a vast number of unique source-destination pairs, different network paths, multiple applications, or a combination of these. For example, if you are monitoring network traffic data, each unique IP address, port number, protocol, or path would be considered a different category, creating high-cardinality data.
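The way combinations multiply is easy to see with a small Python sketch that counts distinct values per field and per combination of fields. The data is synthetic (documentation address ranges), and the `cardinality` helper is a hypothetical illustration.

```python
from itertools import product
from typing import Dict, List, Sequence


def cardinality(rows: Sequence[Dict[str, object]], fields: Sequence[str]) -> int:
    """Count the distinct combinations of `fields` that appear in `rows`."""
    return len({tuple(row[f] for f in fields) for row in rows})


# Synthetic flows: 10 sources x 5 destinations x 3 ports.
srcs = [f"192.0.2.{i}" for i in range(1, 11)]
dsts = [f"198.51.100.{i}" for i in range(1, 6)]
ports = [53, 80, 443]
rows: List[Dict[str, object]] = [
    {"src_ip": s, "dst_ip": d, "dst_port": p} for s, d, p in product(srcs, dsts, ports)
]

# Cardinalities: 10, 5, 3, and 150 (10 * 5 * 3) for the full combination.
for fields in (["src_ip"], ["dst_ip"], ["dst_port"], ["src_ip", "dst_ip", "dst_port"]):
    print(fields, cardinality(rows, fields))
```

Ten sources, five destinations, and three ports already yield 150 distinct combinations. With real traffic, where sources and destinations can number in the millions, the combined cardinality grows far faster than that of any single attribute, which is the growth that stores creating one time series per unique label combination tend to struggle with.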

Managing high-cardinality data is a complex task, but it’s essential for network observability. The ability to handle high-cardinality data allows you to maintain granular visibility into your network. It enables you to ask complex, detailed questions about network performance and behavior, down to the level of individual network elements.

However, not all systems are capable of effectively storing and querying high-cardinality data. This can limit the scope and detail of your network observability. Therefore, when considering a telemetry data platform, it’s important to ensure that it can support high-cardinality data to provide the depth of insight needed for effective network management.