Automate the insurance claim lifecycle using Agents and Knowledge Bases for Amazon Bedrock

AWS Machine Learning - AI

February 8, 2024

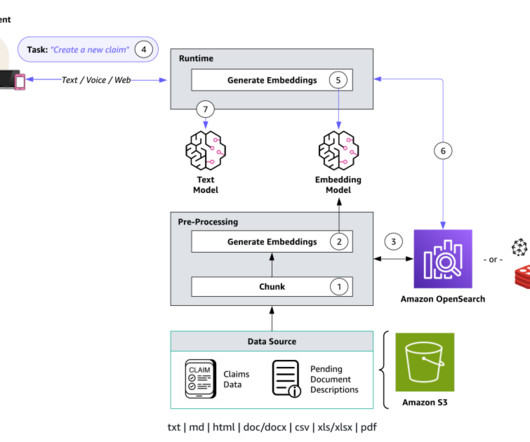

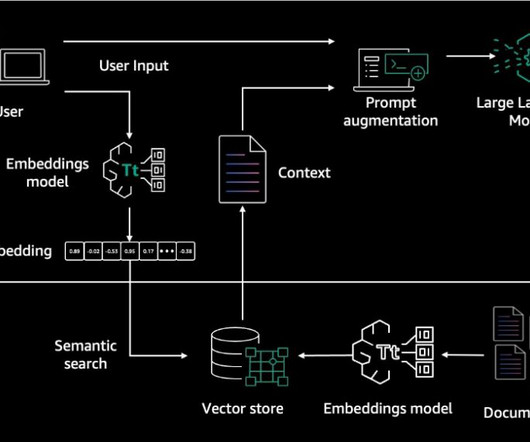

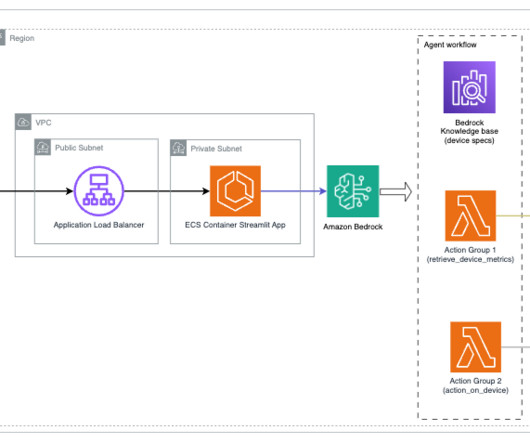

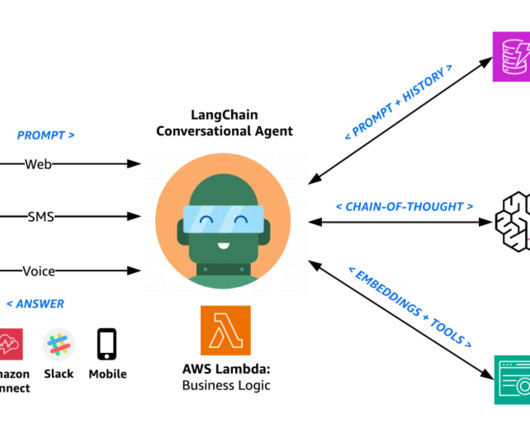

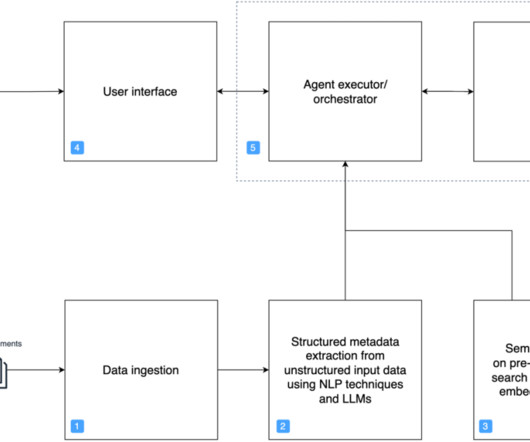

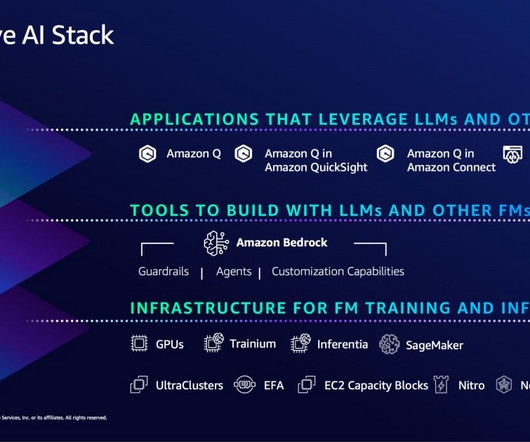

You can now use Agents for Amazon Bedrock and Knowledge Bases for Amazon Bedrock to configure specialized agents that run actions based on natural language input and your organization’s data. One capability that makes this possible is system integration: agents make API calls to integrated company systems to run specific actions.
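To make the system-integration step more concrete, here is a minimal sketch of an AWS Lambda function that could back an agent's action group. It assumes the action group is defined with an OpenAPI schema, so the agent passes `apiPath`, `httpMethod`, and a `parameters` list in the event and expects the result wrapped in a `response` object containing a `responseBody`. Verify this envelope against the current Agents for Amazon Bedrock documentation before relying on it. The API path `/claims/{claimId}/missing-documents`, the `CLAIMS_DB` dictionary, and the `get_param` helper are hypothetical stand-ins for a real claims system.

```python
import json

# Hypothetical in-memory stand-in for a company claims system.
# A real action group would call the organization's own API or database here.
CLAIMS_DB = {
    "claim-001": {"status": "Open", "missing_documents": ["DriverLicense", "VehicleRegistration"]},
    "claim-002": {"status": "Closed", "missing_documents": []},
}


def get_param(event, name):
    """Return the value of a named parameter from the agent's event, or None."""
    for param in event.get("parameters", []):
        if param.get("name") == name:
            return param.get("value")
    return None


def lambda_handler(event, context):
    """Handle an action requested by the agent (hypothetical API path)."""
    api_path = event.get("apiPath")
    claim_id = get_param(event, "claimId")

    # Route on the API path declared in the (assumed) OpenAPI schema.
    if api_path == "/claims/{claimId}/missing-documents" and claim_id in CLAIMS_DB:
        status_code = 200
        body = {
            "claimId": claim_id,
            "missingDocuments": CLAIMS_DB[claim_id]["missing_documents"],
        }
    else:
        status_code = 404
        body = {"error": f"Unknown path or claim: {api_path}, {claim_id}"}

    # Wrap the result in the response envelope the agent expects
    # for OpenAPI-schema action groups (assumed format).
    return {
        "messageVersion": "1.0",
        "response": {
            "actionGroup": event.get("actionGroup"),
            "apiPath": api_path,
            "httpMethod": event.get("httpMethod"),
            "httpStatusCode": status_code,
            "responseBody": {"application/json": {"body": json.dumps(body)}},
        },
    }


if __name__ == "__main__":
    # Hypothetical event, shaped like the one an agent sends to the function.
    sample_event = {
        "messageVersion": "1.0",
        "actionGroup": "ClaimManagement",
        "apiPath": "/claims/{claimId}/missing-documents",
        "httpMethod": "GET",
        "parameters": [{"name": "claimId", "type": "string", "value": "claim-001"}],
    }
    print(json.dumps(lambda_handler(sample_event, None), indent=2))
```

With a sample event whose `parameters` contain `claimId` set to `claim-001`, the handler returns status 200 and a JSON body listing the two missing documents; an unknown claim ID yields 404. Keeping the data lookup separate from the response envelope makes it straightforward to replace the dictionary with a call to a real claims API.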
