AI adoption accelerates as enterprise PoCs show productivity gains

CIO

APRIL 4, 2024

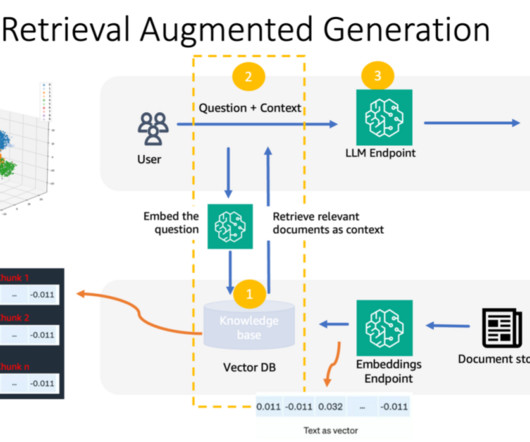

Some prospective projects require custom development using large language models (LLMs), but others simply require flipping a switch to turn on new AI capabilities in enterprise software. “We don’t want to just go off to the next shiny object,” she says. “We want to maintain discipline and go deep.”
