Build a contextual chatbot application using Knowledge Bases for Amazon Bedrock

AWS Machine Learning - AI

FEBRUARY 19, 2024

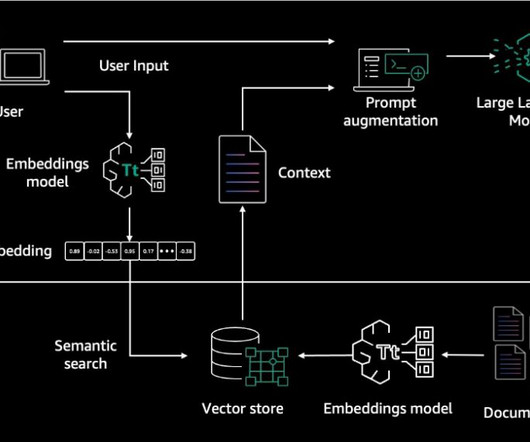

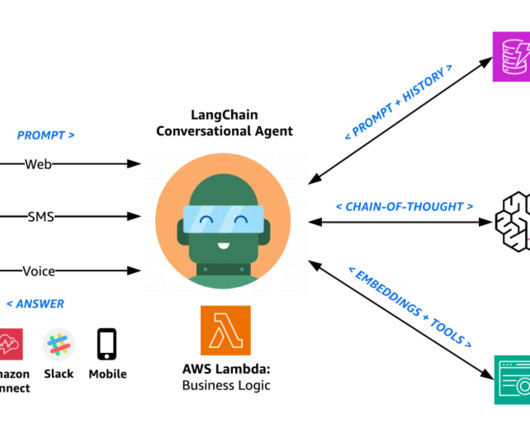

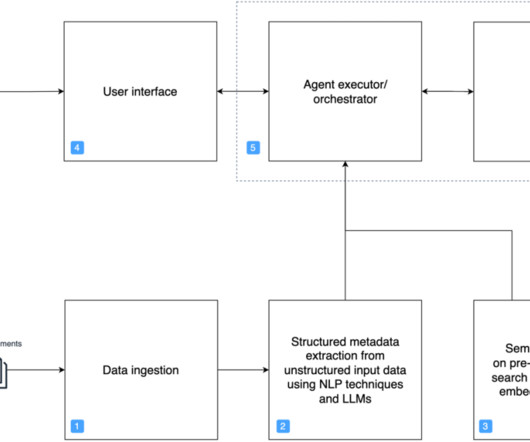

One way to enable more contextual conversations is to link the chatbot to internal knowledge bases and information systems. With access to proprietary enterprise data from these sources, a chatbot can tailor its responses to each user’s individual needs and interests.
