Use RAG for drug discovery with Knowledge Bases for Amazon Bedrock

AWS Machine Learning - AI

FEBRUARY 29, 2024

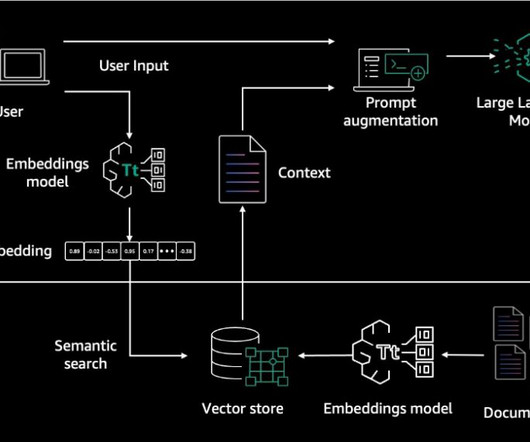

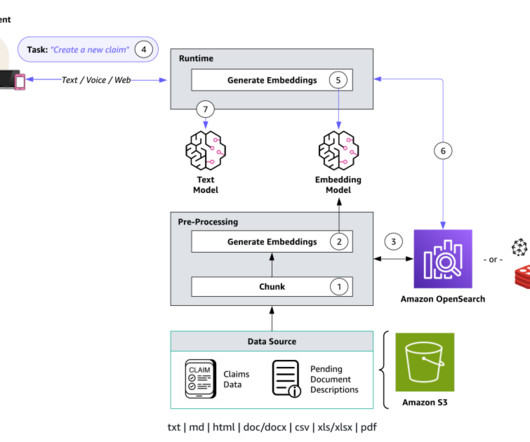

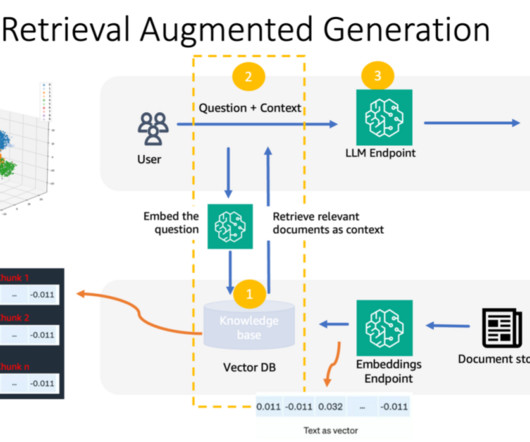

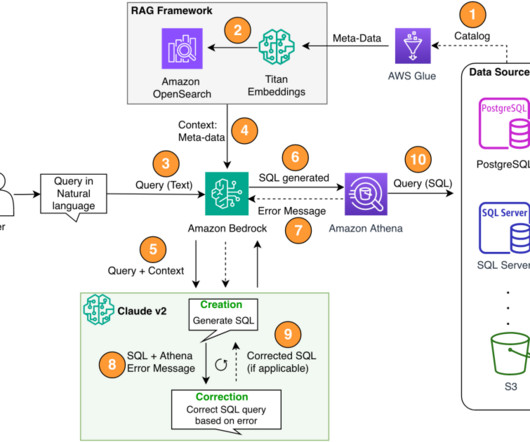

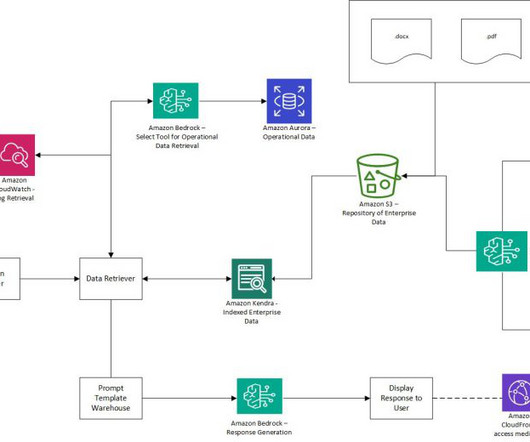

Knowledge Bases for Amazon Bedrock lets you build performant, customized Retrieval Augmented Generation (RAG) applications on top of AWS and third-party vector stores, using both AWS and third-party models. RAG is a popular technique that combines private data with large language models (LLMs): passages relevant to a query are retrieved from your data and supplied to the model as context for its answer.
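To make the retrieve-then-augment idea concrete, here is a minimal, self-contained sketch. It is not how Knowledge Bases for Amazon Bedrock is implemented. It assumes a toy bag-of-words cosine similarity in place of the embedding model and vector store, and it stops at building the augmented prompt rather than calling an LLM. The function names (`retrieve`, `build_prompt`) and the drug-discovery snippets are hypothetical, chosen only for illustration.

```python
import math
from collections import Counter

PUNCT = ".,;:!?()"


def tokenize(text: str) -> list[str]:
    # Lowercase, split on whitespace, and strip simple punctuation.
    # (A real RAG system would use an embedding model instead.)
    words = (w.strip(PUNCT).lower() for w in text.split())
    return [w for w in words if w]


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two term-frequency vectors.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    # Retrieval step: rank the private documents by similarity to the query
    # and keep the top k (a stand-in for a vector-store search).
    q = Counter(tokenize(query))
    ranked = sorted(
        documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True
    )
    return ranked[:k]


def build_prompt(query: str, passages: list[str]) -> str:
    # Augmentation step: put the retrieved passages into the prompt so the
    # LLM answers from the private data rather than only its training data.
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


# Hypothetical private corpus and query, for illustration only.
docs = [
    "Compound A inhibits kinase X in vitro.",
    "Compound B showed hepatotoxicity in rat studies.",
    "Kinase X is overexpressed in several tumor types.",
]
query = "Which compound inhibits kinase X?"

prompt = build_prompt(query, retrieve(query, docs, k=2))
print(prompt)  # This prompt would then be sent to an LLM (generation step).
```

In a managed setup, the similarity search runs against the vector store and the final prompt goes to a foundation model; the sketch keeps only the control flow so the two RAG stages are easy to see.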
