How to Implement Client-Side Load Balancing With Spring Cloud

DZone - DevOps

OCTOBER 21, 2024

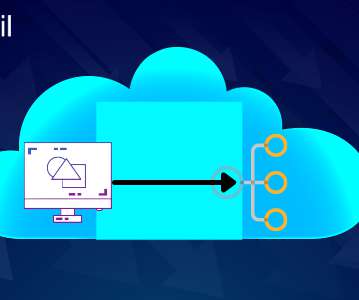

When a service runs as several instances, it is important to distribute the load between them. The component that does this is the load balancer. Spring Cloud provides the Spring Cloud LoadBalancer library for this purpose. In this article, you will learn how to use it to implement client-side load balancing in a Spring Boot project.
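To make the idea concrete before turning to Spring, here is a minimal plain-Java sketch of what a client-side load balancer does. It assumes a fixed list of hypothetical instance URLs. It is not the Spring Cloud LoadBalancer API. It only mimics the round-robin strategy that the library uses by default (`RoundRobinLoadBalancer`). In a real Spring Boot project, the list of instances would come from service discovery or configuration instead of being hard-coded.

```java
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

/**
 * Hypothetical, minimal client-side round-robin balancer.
 * Illustrates the strategy only; this is not the Spring Cloud LoadBalancer API.
 */
public class RoundRobinBalancer {
    // Hypothetical instance addresses, e.g. "http://localhost:8081"
    private final List<String> instances;
    // Shared counter so concurrent callers still rotate through the instances
    private final AtomicInteger position = new AtomicInteger(0);

    public RoundRobinBalancer(List<String> instances) {
        if (instances == null || instances.isEmpty()) {
            throw new IllegalArgumentException("at least one instance is required");
        }
        this.instances = List.copyOf(instances); // defensive, immutable copy
    }

    /** Returns the next instance, cycling through the list in order. */
    public String next() {
        // getAndIncrement is atomic; floorMod keeps the index non-negative after int overflow
        int index = Math.floorMod(position.getAndIncrement(), instances.size());
        return instances.get(index);
    }

    public static void main(String[] args) {
        RoundRobinBalancer balancer = new RoundRobinBalancer(List.of(
                "http://localhost:8081",
                "http://localhost:8082",
                "http://localhost:8083"));
        // The client itself picks the target instance for each request
        for (int i = 0; i < 5; i++) {
            System.out.println("request " + i + " -> " + balancer.next());
        }
    }
}
```

Running `main` sends requests 0 to 2 to ports 8081, 8082 and 8083 in turn, then wraps around so requests 3 and 4 go to 8081 and 8082. The point of the client-side approach is that this choice happens inside the calling application rather than in a separate proxy.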
