Deploying an LLM on RunPod

InnovationM

APRIL 25, 2024

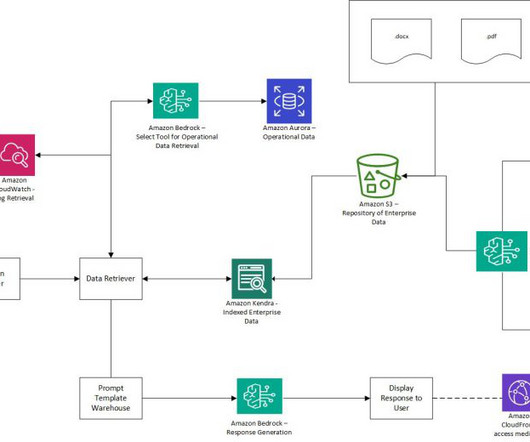

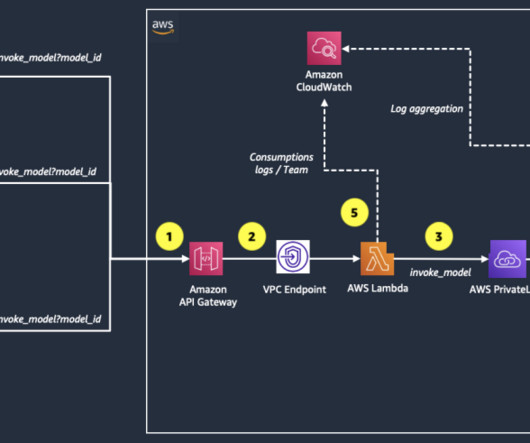

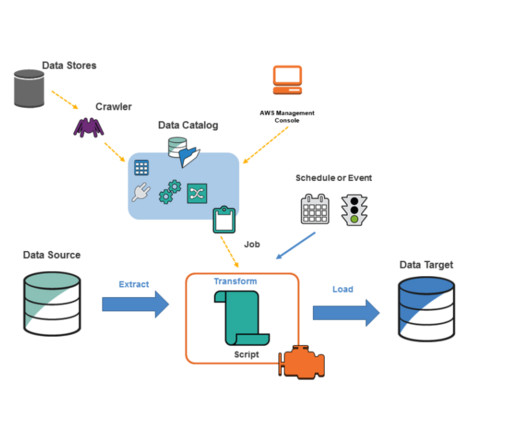

RunPod is engineered to harness the GPU and CPU resources available within Pods, and its serverless computing options combine efficiency with flexibility.

How should you approach it?

Set Up the Environment: Make sure your RunPod environment has the dependencies and resources needed to run the LLM, such as the required Python packages, GPU capacity, and enough disk space for the model weights.
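The environment check above can be turned into a small pre-flight script that you run inside the Pod before loading the model. This is a minimal sketch, not a RunPod tool. The package list (`torch`, `transformers`), the 20 GB disk threshold, and the function names `missing_packages`, `gpu_visible`, `free_disk_gb` and `preflight` are all hypothetical and chosen for illustration. Detecting the GPU by looking for `nvidia-smi` on the PATH is a rough heuristic, also an assumption. Point `weights_path` at the volume where you plan to store the weights.

```python
"""Hypothetical pre-flight check for an LLM deployment inside a RunPod Pod (a sketch)."""
import importlib.util
import shutil

# Assumed dependency list: adjust it to whatever your model-serving stack needs.
REQUIRED_PACKAGES = ["torch", "transformers"]


def missing_packages(packages):
    """Return the top-level packages that cannot be found in this Python environment."""
    return [name for name in packages if importlib.util.find_spec(name) is None]


def gpu_visible():
    """Rough heuristic: True if the NVIDIA driver tool `nvidia-smi` is on PATH."""
    return shutil.which("nvidia-smi") is not None


def free_disk_gb(path="."):
    """Free space, in GB, on the volume that contains `path`."""
    return shutil.disk_usage(path).free / 1e9


def preflight(packages=REQUIRED_PACKAGES, weights_path=".", min_free_gb=20.0):
    """Collect readiness problems; an empty list means the environment looks ready."""
    problems = []
    missing = missing_packages(packages)
    if missing:
        problems.append(f"missing packages: {', '.join(missing)}")
    if not gpu_visible():
        problems.append("no GPU driver detected (nvidia-smi not found on PATH)")
    free = free_disk_gb(weights_path)
    if free < min_free_gb:
        problems.append(f"only {free:.1f} GB free at {weights_path} (want {min_free_gb} GB)")
    return problems


if __name__ == "__main__":
    issues = preflight()
    print("environment ready" if not issues else "\n".join(issues))
```

The script reports every problem it finds instead of stopping at the first one, so a single run inside the Pod shows everything that still needs fixing before you deploy the model.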
