Knowledge Bases for Amazon Bedrock now supports hybrid search

AWS Machine Learning - AI

MARCH 1, 2024

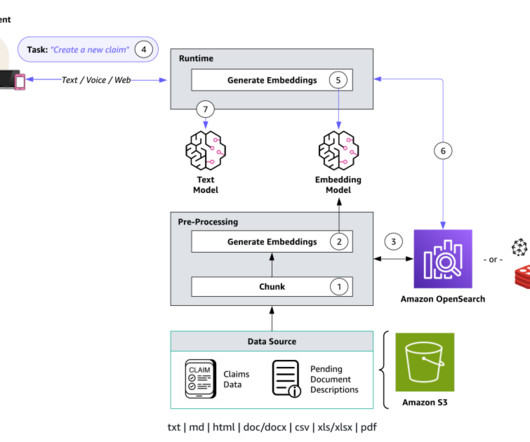

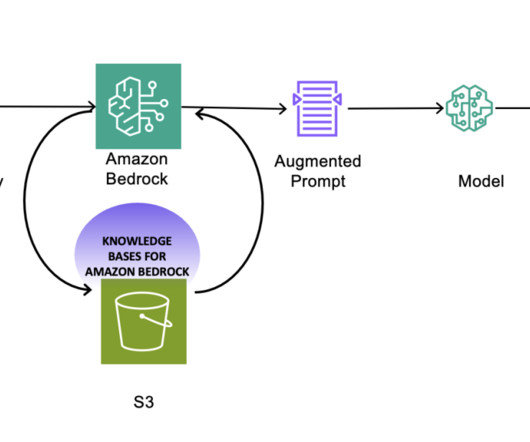

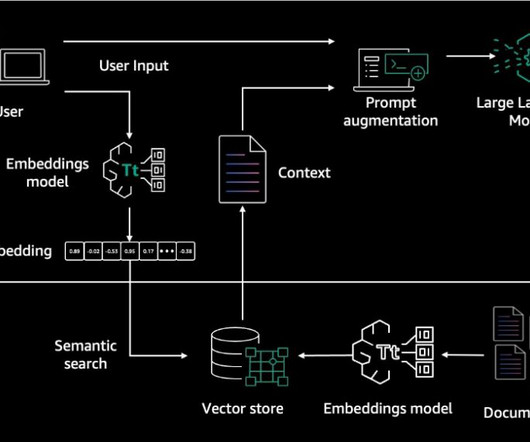

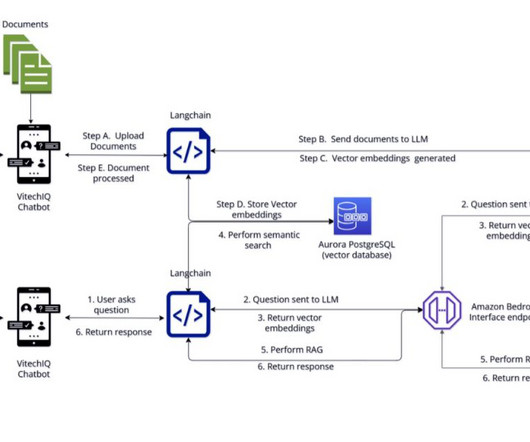

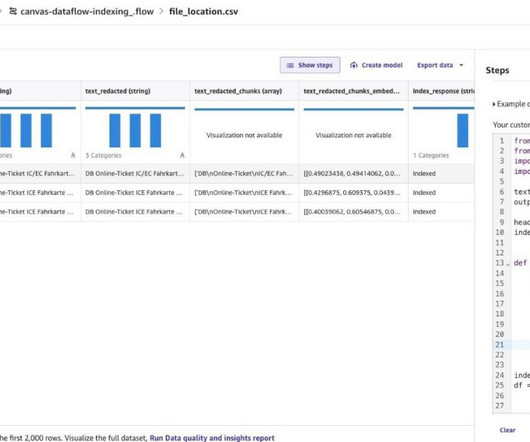

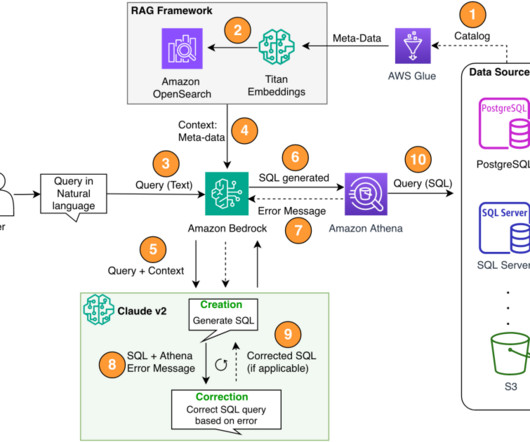

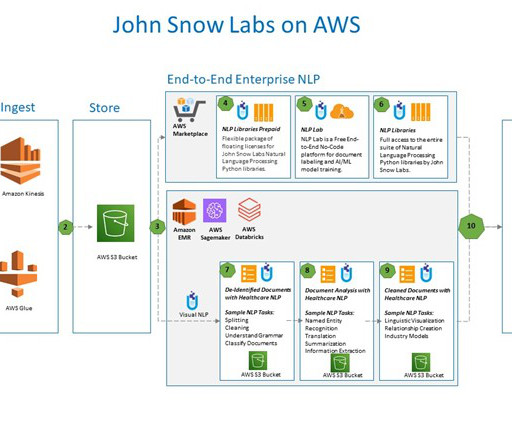

At AWS re:Invent 2023, we announced the general availability of Knowledge Bases for Amazon Bedrock. With a knowledge base, you can securely connect foundation models (FMs) in Amazon Bedrock to your company data for fully managed Retrieval Augmented Generation (RAG).
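To make the retrieval half of RAG concrete, here is a minimal sketch of querying a knowledge base with the AWS SDK for Python (boto3). It assumes boto3 is installed and AWS credentials and a Region are configured. The helper name `retrieve_passages`, the knowledge base ID, and the sample query are hypothetical. The sketch calls the `retrieve` operation on the `bedrock-agent-runtime` client and, as we understand the Retrieve API, uses the `overrideSearchType` field of `vectorSearchConfiguration` to request either hybrid (`HYBRID`) or semantic-only (`SEMANTIC`) search.

```python
from typing import Any, List


def retrieve_passages(client: Any, knowledge_base_id: str, query: str,
                      search_type: str = "HYBRID", max_results: int = 5) -> List[str]:
    """Return the text of the top chunks a knowledge base retrieves for `query`.

    `client` is expected to be a boto3 "bedrock-agent-runtime" client (assumption).
    `search_type` is sent as overrideSearchType: "HYBRID" or "SEMANTIC".
    """
    if search_type not in ("HYBRID", "SEMANTIC"):
        raise ValueError(f"unsupported search type: {search_type}")
    response = client.retrieve(
        knowledgeBaseId=knowledge_base_id,
        retrievalQuery={"text": query},
        retrievalConfiguration={
            "vectorSearchConfiguration": {
                "numberOfResults": max_results,
                "overrideSearchType": search_type,
            }
        },
    )
    # Each result also carries a relevance score and source location; keep only the text.
    return [result["content"]["text"] for result in response.get("retrievalResults", [])]


# Usage (requires boto3 and AWS credentials; "YOUR_KB_ID" is a hypothetical placeholder):
#   import boto3
#   client = boto3.client("bedrock-agent-runtime")
#   for passage in retrieve_passages(client, "YOUR_KB_ID", "What is our refund policy?"):
#       print(passage)
```

Taking the client as a parameter rather than creating it inside the function keeps the sketch easy to test with a stand-in object and lets you reuse one configured client across calls. The retrieved passages would then be added to the prompt you send to a foundation model.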
